Microsoft PL-300 Exam Practice Questions (P. 2)


Question #11

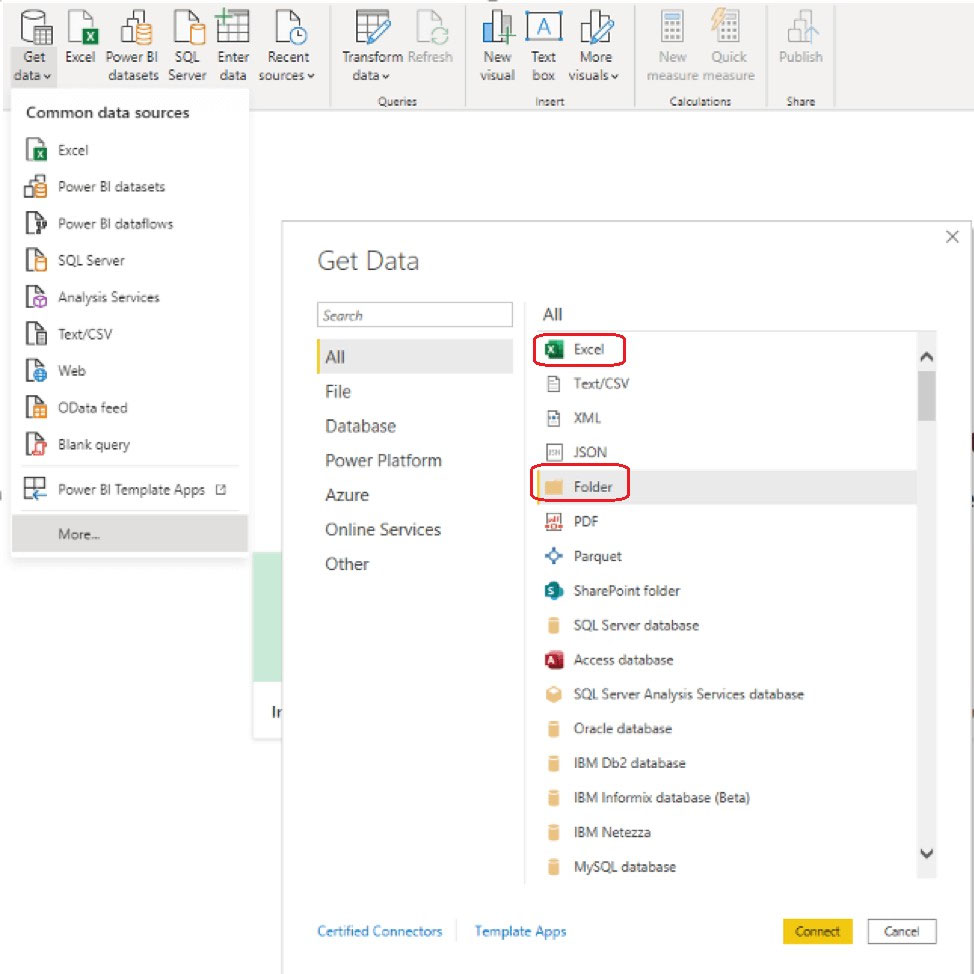

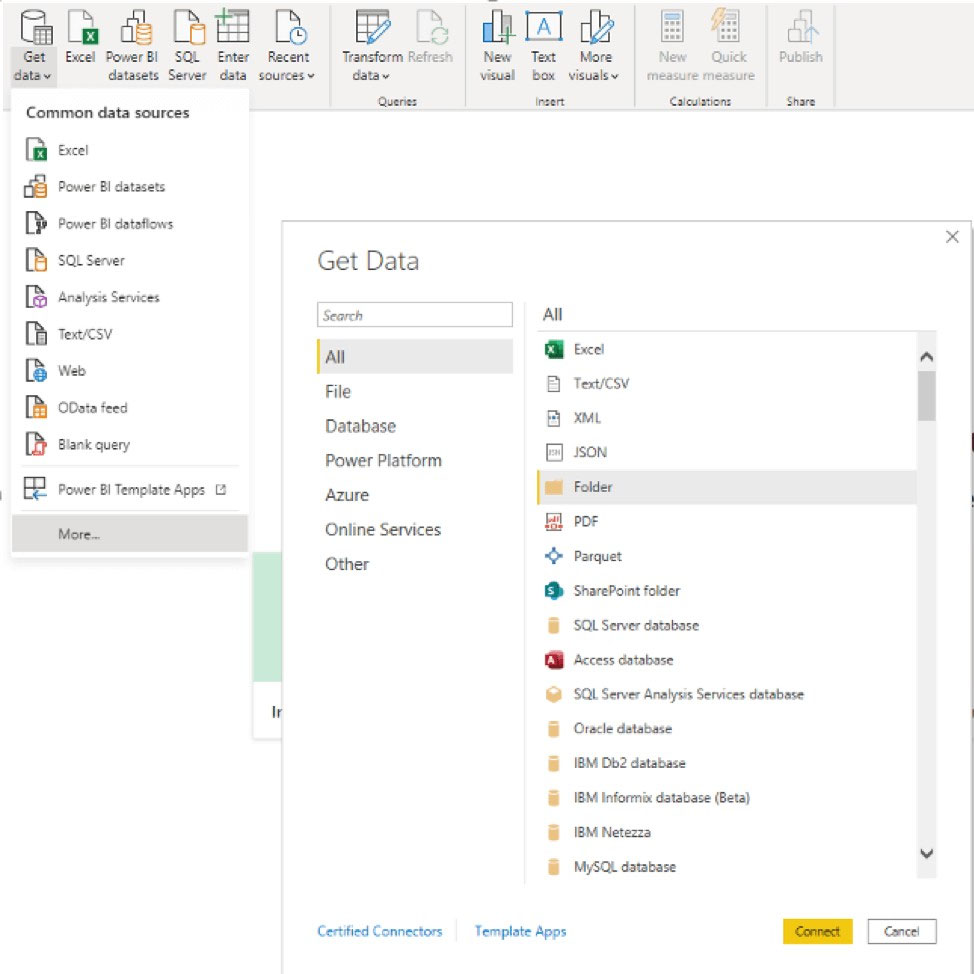

You have a Microsoft Excel file in a Microsoft OneDrive folder.

The file must be imported to a Power BI dataset.

You need to ensure that the dataset can be refreshed in powerbi.com.

Which two connectors can you use to connect to the file? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.


- A. Excel Workbook

- B. Text/CSV

- C. Folder

- D. SharePoint folder (Most Voted)

- E. Web (Most Voted)

Correct Answer:

AC

A: Connect to an Excel workbook from Power Query Desktop

To make the connection from Power Query Desktop:

1. Select the Excel option in the connector selection.

2. Browse for and select the Excel workbook you want to load. Then select Open.

3. Etc.

C: Folder connector

Capabilities supported:

- Folder path

- Combine

- Combine and load

- Combine and transform

Connect to a folder from Power Query Online

To connect to a folder from Power Query Online:

1. Select the Folder option in the connector selection.

2. Enter the path to the folder you want to load.

Reference:

https://docs.microsoft.com/en-us/power-query/connectors/excel

https://docs.microsoft.com/en-us/power-query/connectors/folder


Question #12

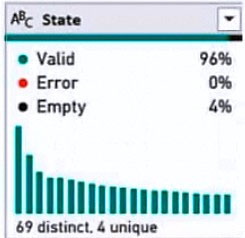

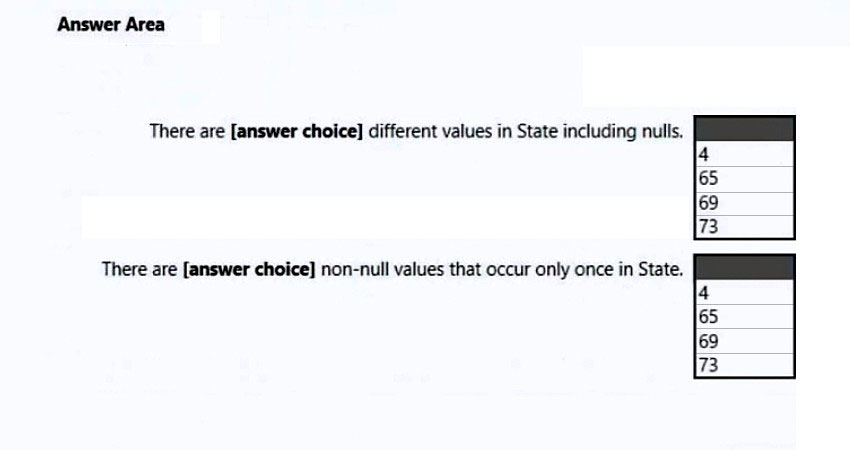

HOTSPOT -

You are profiling data by using Power Query Editor.

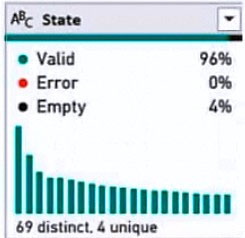

You have a table named Reports that contains a column named State. The distribution and quality data metrics for the data in State are shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

Hot Area:


Correct Answer:

Box 1: 69

The column has 69 distinct (different) values.

Note: Column distribution gives you a sense of the overall distribution of values within a column in the data preview, including the count of distinct values (the total number of different values found in the column) and unique values (the total number of values that appear only once in the column).
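The distinct-versus-unique distinction can be checked with a minimal Python sketch. The sample State values below are hypothetical (they are not the exhibit's data), and how Power Query's profiler treats nulls or errors is not modeled here.

```python
from collections import Counter


def distinct_and_unique(values):
    """Return (distinct, unique) counts as the column distribution defines them."""
    counts = Counter(values)
    # Distinct: how many different values occur at all.
    distinct = len(counts)
    # Unique: how many values occur exactly once.
    unique = sum(1 for c in counts.values() if c == 1)
    return distinct, unique


# Hypothetical sample of State values.
states = ["WA", "OR", "WA", "CA", "NV", "CA", "TX"]
print(distinct_and_unique(states))  # (5, 3)
```

Here WA and CA repeat, so they count toward distinct but not unique; OR, NV, and TX appear once and count toward both.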

Box 2: 4

Reference:

https://systemmanagement.ro/2018/10/16/power-bi-data-profiling-distinct-vs-unique/


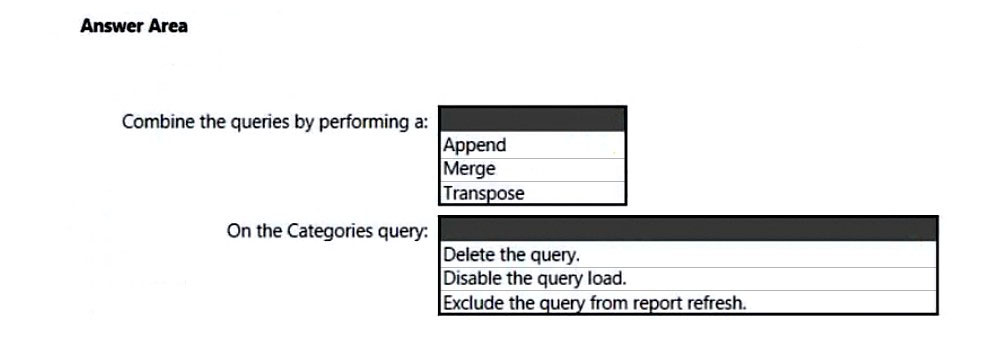

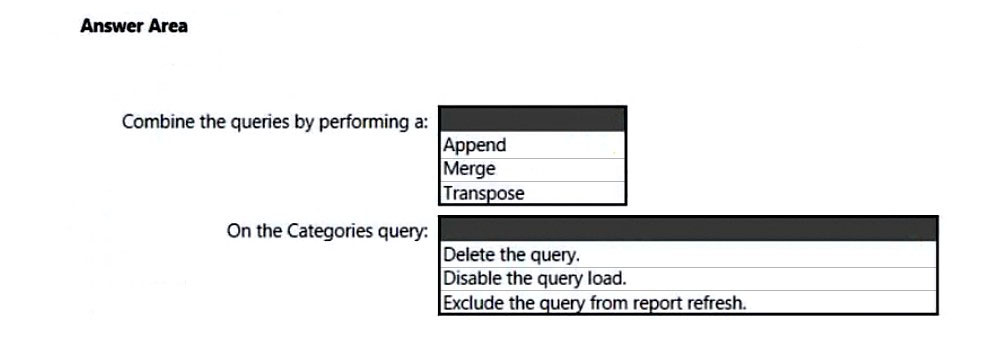

Question #13

HOTSPOT -

You have two CSV files named Products and Categories.

The Products file contains the following columns:

✑ ProductID

✑ ProductName

✑ SupplierID

✑ CategoryID

The Categories file contains the following columns:

✑ CategoryID

✑ CategoryName

✑ CategoryDescription

From Power BI Desktop, you import the files into Power Query Editor.

You need to create a Power BI dataset that will contain a single table named Product. The Product table will include the following columns:

✑ ProductID

✑ ProductName

✑ SupplierID

✑ CategoryID

✑ CategoryName

✑ CategoryDescription

How should you combine the queries, and what should you do on the Categories query? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:


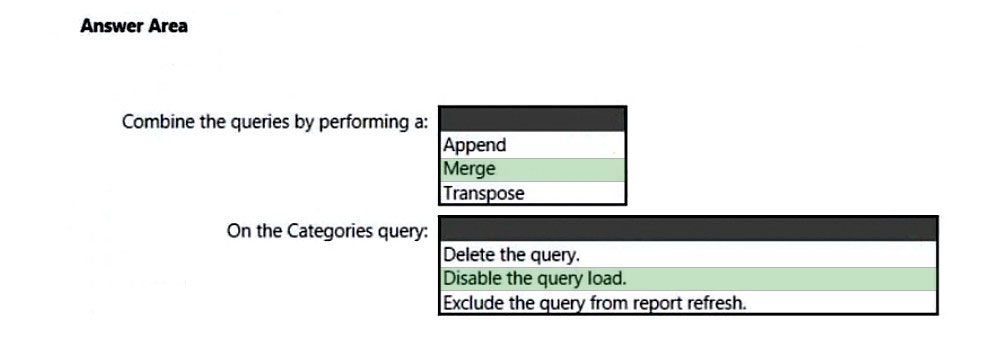

Correct Answer:

Box 1: Merge

There are two primary ways of combining queries: merging and appending.

* When you have one or more columns that you'd like to add to another query, you merge the queries.

* When you have additional rows of data that you'd like to add to an existing query, you append the query.
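The difference between merging (adding columns) and appending (adding rows) can be sketched in plain Python. The sample rows are hypothetical, the merge is written as a left outer join on CategoryID (the join kind is an assumption for illustration), and CategoryID is assumed to be unique in Categories.

```python
# Hypothetical sample rows standing in for the Products and Categories queries.
products = [
    {"ProductID": 1, "ProductName": "Chai", "SupplierID": 10, "CategoryID": 100},
    {"ProductID": 2, "ProductName": "Tofu", "SupplierID": 20, "CategoryID": 200},
]
categories = [
    {"CategoryID": 100, "CategoryName": "Beverages", "CategoryDescription": "Drinks"},
    {"CategoryID": 200, "CategoryName": "Produce", "CategoryDescription": "Fresh food"},
]


def merge_left_outer(left, right, key):
    """Merge: add the right-hand columns to each left row with a matching key.

    Assumes the key is unique in `right`; unmatched left rows get None.
    """
    lookup = {row[key]: row for row in right}
    right_columns = [c for c in (right[0] if right else {}) if c != key]
    merged = []
    for row in left:
        match = lookup.get(row[key], {})
        merged.append({**row, **{c: match.get(c) for c in right_columns}})
    return merged


def append(first, second):
    """Append: stack the rows of the second query under the rows of the first."""
    return first + second


product = merge_left_outer(products, categories, "CategoryID")
print(list(product[0]))
# ['ProductID', 'ProductName', 'SupplierID', 'CategoryID', 'CategoryName', 'CategoryDescription']
print(len(append(products, products)))  # 4
```

The merged rows carry exactly the six columns the Product table needs, while appending only adds rows and leaves the column set unchanged.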

Box 2: Disable the query load

Managing loading of queries

In many situations, it makes sense to break down your data transformations into multiple queries. One popular example is merging, where you merge two queries into one to essentially perform a join. In this type of situation, some queries are not relevant to load into Desktop because they are intermediate steps, yet they are still required for your data transformations to work correctly. You can make sure these queries are not loaded in Desktop by clearing 'Enable load' in the query's context menu in Desktop or in the Properties screen.

Reference:

https://docs.microsoft.com/en-us/power-bi/connect-data/desktop-shape-and-combine-data

https://docs.microsoft.com/en-us/power-bi/connect-data/refresh-include-in-report-refresh


Question #14

You have an Azure SQL database that contains sales transactions. The database is updated frequently.

You need to generate reports from the data to detect fraudulent transactions. The data must be visible within five minutes of an update.

How should you configure the data connection?


- A. Add a SQL statement.

- B. Set the Command timeout in minutes setting.

- C. Set Data Connectivity mode to Import.

- D. Set Data Connectivity mode to DirectQuery. (Most Voted)

Correct Answer:

D

DirectQuery: No data is imported or copied into Power BI Desktop. For relational sources, the selected tables and columns appear in the Fields list. For multidimensional sources like SAP Business Warehouse, the dimensions and measures of the selected cube appear in the Fields list. As you create or interact with a visualization, Power BI Desktop queries the underlying data source, so you're always viewing current data.
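The contrast between an imported snapshot and a live query can be sketched in Python, with an in-memory SQLite table standing in for the Azure SQL database. This is an analogy under stated assumptions, not how Power BI is implemented: the table, the function names, and the amounts are all hypothetical.

```python
import sqlite3

# Hypothetical in-memory table standing in for the Azure SQL sales database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE Sales (id INTEGER, amount REAL)")
conn.execute("INSERT INTO Sales VALUES (1, 10.0)")


def import_refresh(connection):
    """Import-style: copy the rows once; later reads see this snapshot until the next refresh."""
    return connection.execute("SELECT id, amount FROM Sales").fetchall()


def direct_query_total(connection):
    """DirectQuery-style: query the source every time a visual needs data."""
    return connection.execute("SELECT COALESCE(SUM(amount), 0) FROM Sales").fetchone()[0]


snapshot = import_refresh(conn)
conn.execute("INSERT INTO Sales VALUES (2, 99.0)")  # a new transaction arrives

print(sum(amount for _, amount in snapshot))  # 10.0 (stale until the next refresh)
print(direct_query_total(conn))               # 109.0 (current)
```

The imported snapshot misses the new transaction until it is refreshed again, while the live query sees it immediately, which is why DirectQuery fits the five-minute visibility requirement.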

Reference:

https://docs.microsoft.com/en-us/power-bi/connect-data/desktop-use-directquery


Question #15

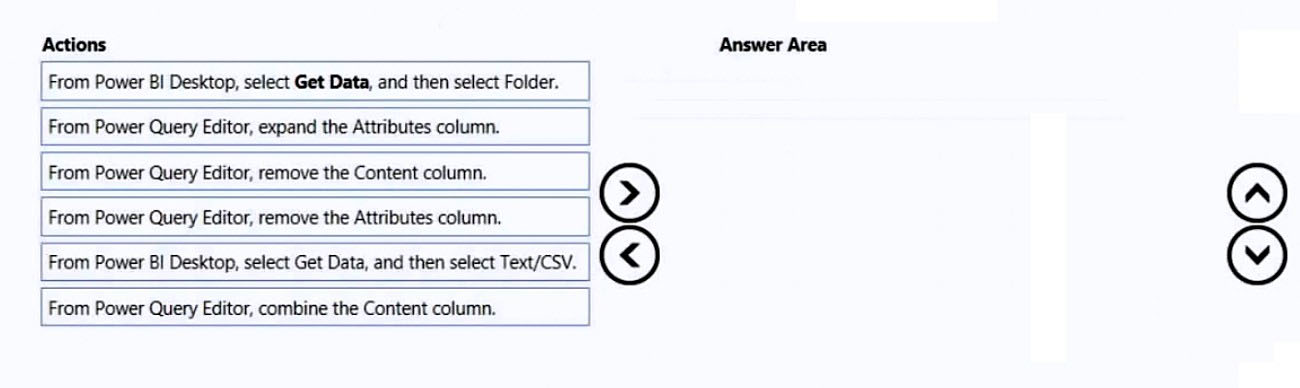

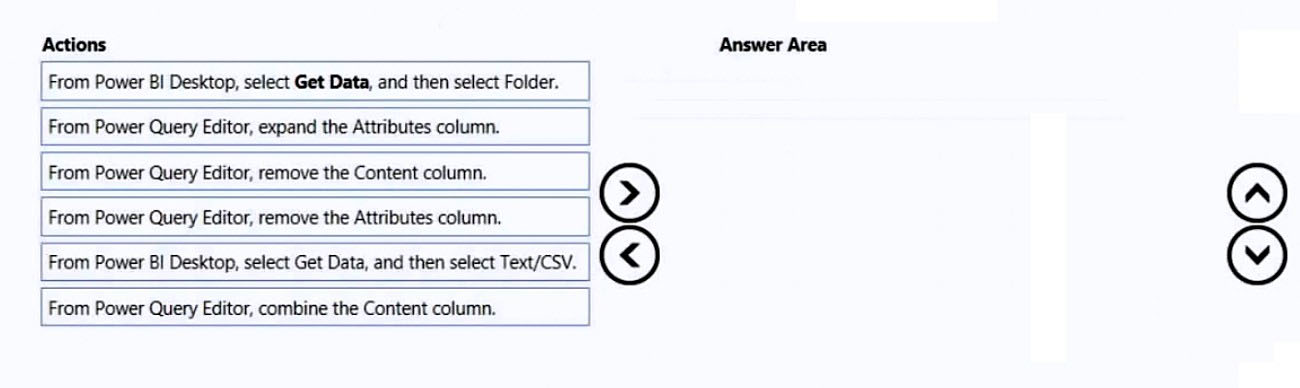

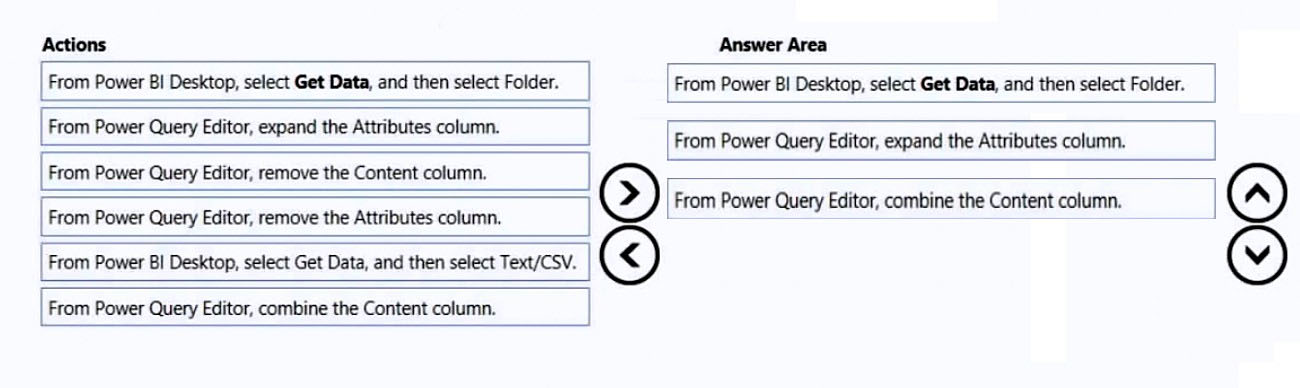

DRAG DROP -

You have a folder that contains 100 CSV files.

You need to make the file metadata available as a single dataset by using Power BI. The solution must NOT store the data of the CSV files.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

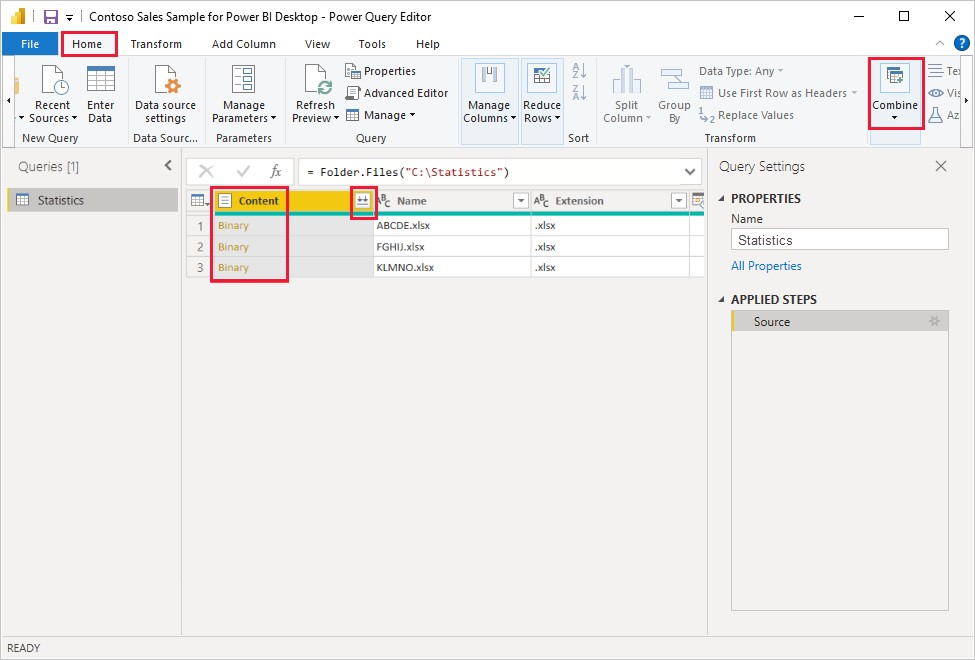

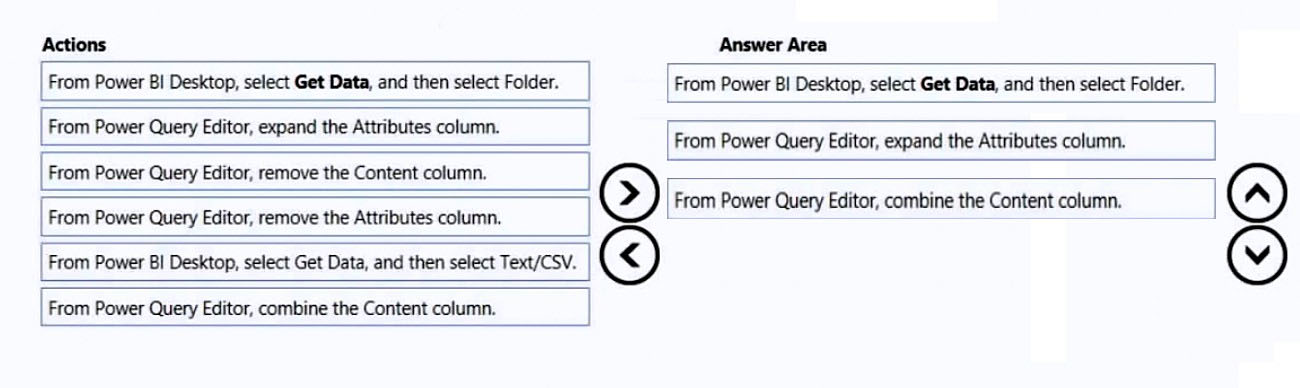

Select and Place:


Correct Answer:

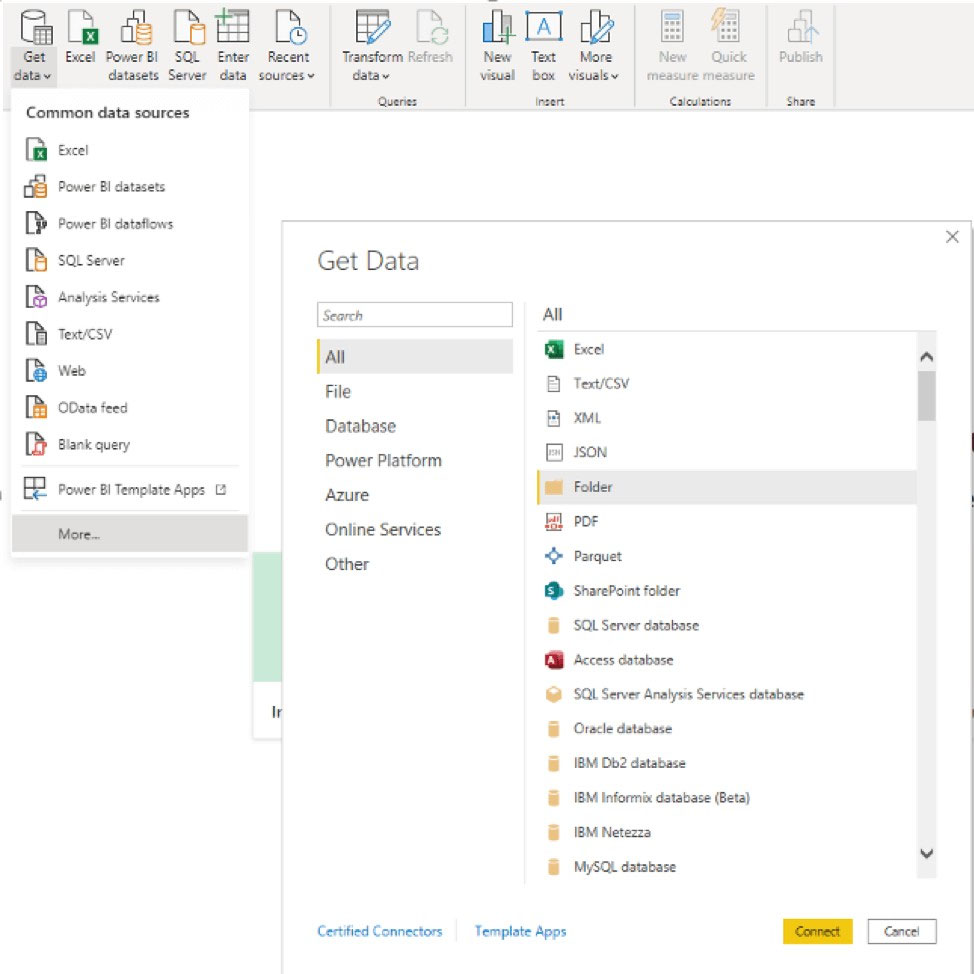

Step 1: From Power BI Desktop, select Get Data, and then select Folder.

Open Power BI Desktop, select Get Data > More…, and then choose Folder from the All options on the left.

Enter the folder path, select OK, and then select Transform data to see the folder's files in Power Query Editor.

Step 2: From Power Query Editor, expand the Attributes column.

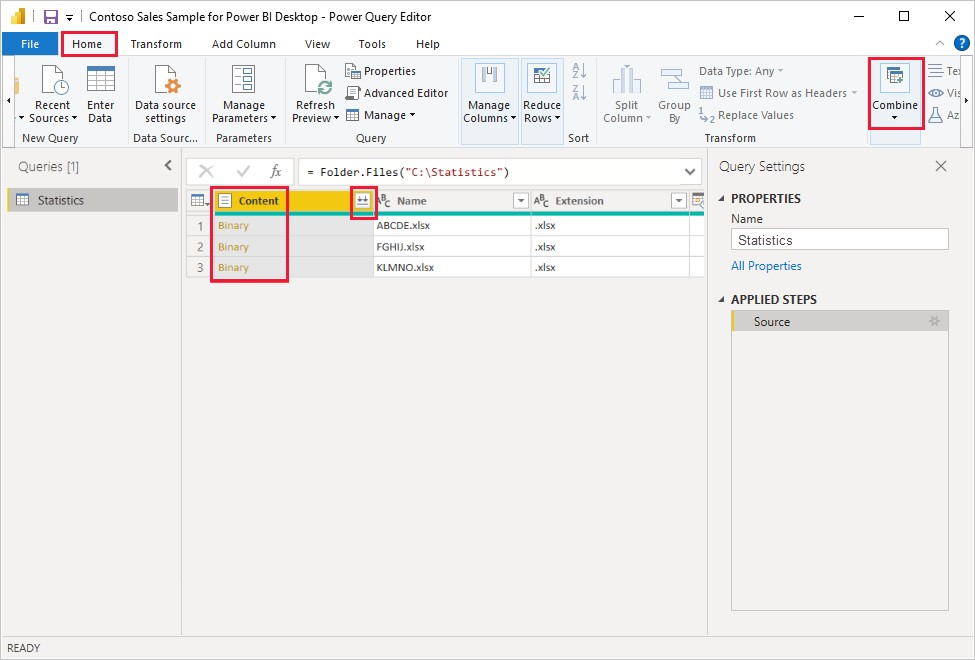
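To illustrate what file-level metadata looks like when no file content is read, here is a minimal Python sketch that returns one row per CSV file in a folder. It is an analogy, not Power Query: the function name `folder_metadata` and the demo files are hypothetical, and the column names only loosely mirror the Folder connector's Name, Extension, Date modified, Folder Path, and Attributes (Size) columns.

```python
import os
import tempfile
from datetime import datetime, timezone


def folder_metadata(path, extension=".csv"):
    """List one metadata row per matching file; file contents are never read."""
    rows = []
    with os.scandir(path) as entries:
        for entry in entries:
            if entry.is_file() and entry.name.lower().endswith(extension):
                info = entry.stat()
                rows.append({
                    "Name": entry.name,
                    "Extension": os.path.splitext(entry.name)[1],
                    "Date modified": datetime.fromtimestamp(info.st_mtime, tz=timezone.utc),
                    "Folder Path": os.path.join(os.path.abspath(path), ""),
                    # In Power Query, the file size sits inside the Attributes record.
                    "Size": info.st_size,
                })
    return sorted(rows, key=lambda r: r["Name"])


# Demo on a throwaway folder with three hypothetical CSV files and one other file.
with tempfile.TemporaryDirectory() as demo_dir:
    for i in range(3):
        with open(os.path.join(demo_dir, f"sales_{i}.csv"), "w") as f:
            f.write("id,amount\n1,10\n")
    with open(os.path.join(demo_dir, "readme.txt"), "w") as f:
        f.write("not a CSV")
    metadata = folder_metadata(demo_dir)

print([row["Name"] for row in metadata])  # ['sales_0.csv', 'sales_1.csv', 'sales_2.csv']
```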

Step 3: From Power Query Editor, combine the Content column.

Combine files behavior

To combine binary files in Power Query Editor, select Content (the first column label) and select Home > Combine Files. Or you can just select the Combine Files icon next to Content.

Reference:

https://docs.microsoft.com/en-us/power-bi/transform-model/desktop-combine-binaries


Question #16

A business intelligence (BI) developer creates a dataflow in Power BI that uses DirectQuery to access tables from an on-premises Microsoft SQL Server. The Enhanced Dataflows Compute Engine is turned on for the dataflow.

You need to use the dataflow in a report. The solution must meet the following requirements:

✑ Minimize online processing operations.

✑ Minimize calculation times and render times for visuals.

✑ Include data from the current year, up to and including the previous day.

What should you do?


- A. Create a dataflows connection that has DirectQuery mode selected.

- B. Create a dataflows connection that has DirectQuery mode selected and configure a gateway connection for the dataset.

- C. Create a dataflows connection that has Import mode selected and schedule a daily refresh. (Most Voted)

- D. Create a dataflows connection that has Import mode selected and create a Microsoft Power Automate solution to refresh the data hourly.

Correct Answer:

C

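A daily scheduled refresh is adequate: the report only needs data up to and including the previous day, and Import mode serves visuals from the loaded dataset instead of querying the source on every interaction, which keeps calculation and render times low.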

When you set up a refresh schedule, Power BI connects directly to the data sources using connection information and credentials in the dataset to query for updated data, then loads the updated data into the dataset. Any visualizations in reports and dashboards based on that dataset in the Power BI service are also updated.
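The reporting window "current year, up to and including the previous day" only moves once per day, which is why one refresh per day keeps it complete. A minimal Python sketch of that window; the function names are hypothetical and time zones are ignored.

```python
from datetime import date, timedelta


def refresh_window(today):
    """Return (start, end) for 'current year, up to and including the previous day'."""
    start = date(today.year, 1, 1)
    end = today - timedelta(days=1)
    return start, end


def in_window(day, today):
    """True if `day` falls in the window; on January 1 the window is empty."""
    start, end = refresh_window(today)
    return start <= day <= end


print(refresh_window(date(2024, 3, 1)))  # (datetime.date(2024, 1, 1), datetime.date(2024, 2, 29))
print(in_window(date(2024, 3, 1), date(2024, 3, 1)))  # False: today is excluded
```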

Reference:

https://docs.microsoft.com/en-us/power-bi/connect-data/refresh-desktop-file-local-drive


Question #17

You are creating a report in Power BI Desktop.

You load a data extract that includes a free text field named col1.

You need to analyze the frequency distribution of the string lengths in col1. The solution must not affect the size of the model.

What should you do?


- A. In the report, add a DAX calculated column that calculates the length of col1

- B. In the report, add a DAX function that calculates the average length of col1

- C. From Power Query Editor, add a column that calculates the length of col1

- D. From Power Query Editor, change the distribution for the Column profile to group by length for col1 (Most Voted)

Correct Answer:

A

The LEN DAX function returns the number of characters in a text string.

Note: DAX is a collection of Power BI functions, operators, and constants that can be used in a formula, or expression, to calculate and return one or more values.

Stated more simply, DAX helps you create new information from data already in your model.
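The analysis itself, a frequency distribution of string lengths, can be sketched in Python. Here `len()` stands in for DAX LEN (the two may count some characters differently), nulls are skipped by assumption, and the col1 values are hypothetical.

```python
from collections import Counter


def length_distribution(texts):
    """Count how many values have each string length (nulls are skipped)."""
    return Counter(len(t) for t in texts if t is not None)


# Hypothetical values standing in for col1.
col1 = ["abc", "de", "xyz", "", None]
print(sorted(length_distribution(col1).items()))  # [(0, 1), (2, 1), (3, 2)]
```

Each key is a string length and each count is how many values have that length, which is the same shape as a grouped column profile.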

Reference:

https://docs.microsoft.com/en-us/dax/len-function-dax

https://docs.microsoft.com/en-us/power-bi/transform-model/desktop-quickstart-learn-dax-basics


Question #18

You have a collection of reports for the HR department of your company. The datasets use row-level security (RLS). The company has multiple sales regions.

Each sales region has an HR manager.

You need to ensure that the HR managers can interact with the data from their region only. The HR managers must be prevented from changing the layout of the reports.

How should you provision access to the reports for the HR managers?


- A. Publish the reports in an app and grant the HR managers access permission. (Most Voted)

- B. Create a new workspace, copy the datasets and reports, and add the HR managers as members of the workspace.

- C. Publish the reports to a different workspace other than the one hosting the datasets.

- D. Add the HR managers as members of the existing workspace that hosts the reports and the datasets.

Correct Answer:

A

Reference:

https://kunaltripathy.com/2021/10/06/bring-your-power-bi-to-power-apps-portal-part-ii/


Question #19

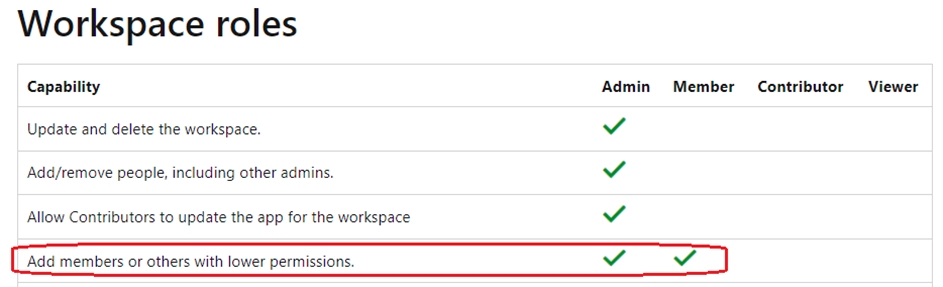

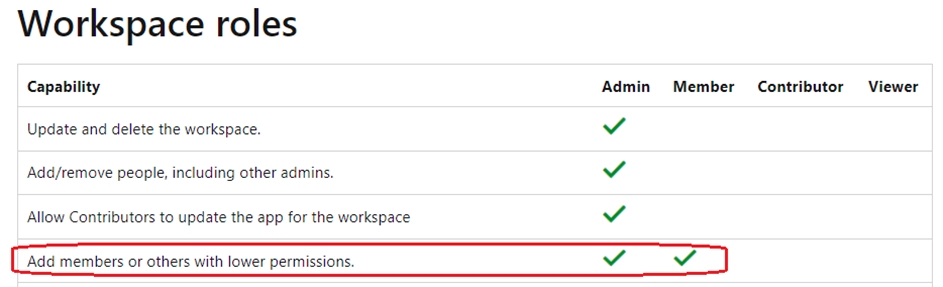

You need to provide a user with the ability to add members to a workspace. The solution must use the principle of least privilege.

Which role should you assign to the user?


- A. Viewer

- B. Admin

- C. Contributor

- D. Member (Most Voted)

Correct Answer:

D

The Member role can add members or others with lower permissions to the workspace. The Admin role can also add members, but it grants more privileges than this task requires.
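The least-privilege choice amounts to picking the lowest role that still grants the needed permission. A minimal Python sketch; only the single "add members" permission from the explanation is modeled, and the names `ROLES`, `CAN_ADD_MEMBERS`, and `least_privileged_role` are hypothetical.

```python
# Workspace roles ordered from least to most privileged.
ROLES = ["Viewer", "Contributor", "Member", "Admin"]

# Roles that can add members to the workspace (only this one permission is modeled).
CAN_ADD_MEMBERS = {"Member", "Admin"}


def least_privileged_role(roles, allowed):
    """Return the first (least privileged) role that grants the needed permission."""
    for role in roles:
        if role in allowed:
            return role
    return None


print(least_privileged_role(ROLES, CAN_ADD_MEMBERS))  # Member
```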

Reference:

https://docs.microsoft.com/en-us/power-bi/collaborate-share/service-roles-new-workspaces


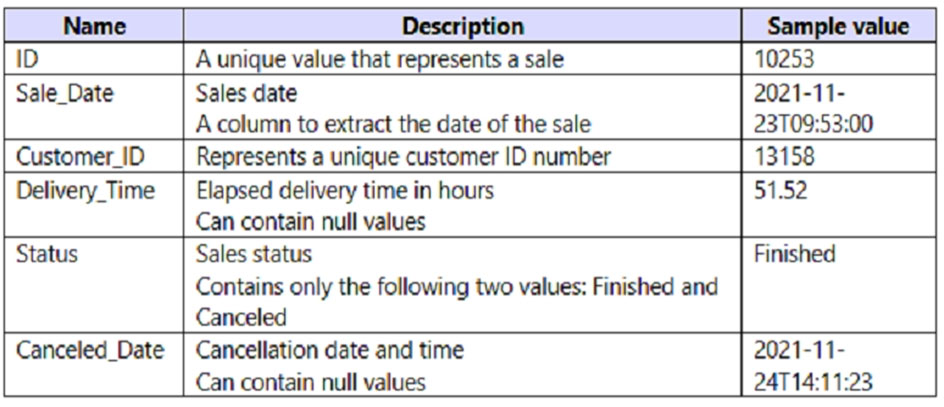

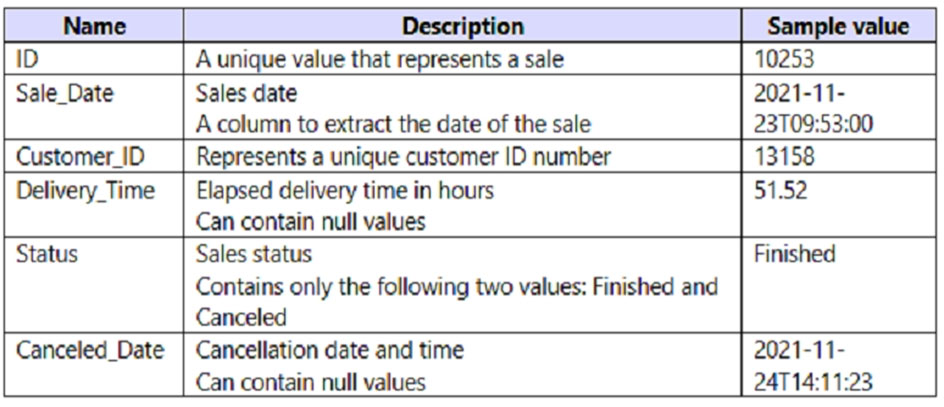

Question #20

You have a Power BI query named Sales that imports the columns shown in the following table.

Users only use the date part of the Sales_Date field. Only rows with a Status of Finished are used in analysis.

You need to reduce the load times of the query without affecting the analysis.

Which two actions achieve this goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.


- A. Remove the rows in which Sales[Status] has a value of Canceled. (Most Voted)

- B. Remove Sales[Sales_Date].

- C. Change the data type of Sales[Delivery_Time] to Integer.

- D. Split Sales[Sale_Date] into separate date and time columns.

- E. Remove Sales[Canceled Date]. (Most Voted)

Correct Answer:

AD

A: Removing rows that are not used in analysis reduces the amount of data the query loads, which improves query performance.

D: Splitting the Sales_Date column will make comparisons on the Sales date faster.
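Both transformations can be sketched in Python on hypothetical rows: keep only rows whose Status is Finished (which drops the Canceled ones), then split the datetime into separate date and time values. The `Sales_Time` column name and the `shape_sales` function are assumptions for illustration, not part of the exam's table.

```python
from datetime import datetime

# Hypothetical rows standing in for the Sales query.
sales = [
    {"Sales_Date": datetime(2023, 5, 1, 14, 30), "Status": "Finished"},
    {"Sales_Date": datetime(2023, 5, 1, 9, 5), "Status": "Canceled"},
    {"Sales_Date": datetime(2023, 5, 2, 11, 0), "Status": "Finished"},
]


def shape_sales(rows):
    """Keep Finished rows only and split the datetime into date and time columns."""
    shaped = []
    for row in rows:
        if row["Status"] != "Finished":
            continue  # rows that analysis never uses are not loaded
        shaped.append({
            "Sales_Date": row["Sales_Date"].date(),
            "Sales_Time": row["Sales_Date"].time(),
            "Status": row["Status"],
        })
    return shaped


result = shape_sales(sales)
print(len(result))  # 2
print(result[0]["Sales_Date"].isoformat(), result[0]["Sales_Time"].isoformat())  # 2023-05-01 14:30:00
```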
