Microsoft DP-200 Exam Practice Questions (P. 4)

Question #31

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains 100 GB of files. The files contain text and numerical values. 75% of the rows contain description data that has an average length of 1.1 MB.

You plan to copy the data from the storage account to an enterprise data warehouse in Azure Synapse Analytics.

You need to prepare the files to ensure that the data copies quickly.

Solution: You modify the files to ensure that each row is less than 1 MB.

Does this meet the goal?

- A. Yes

- B. No

Correct Answer: B

Instead, convert the files to compressed delimited text files.

Reference:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data
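As a rough illustration of what "compressed delimited text" means in practice, the following minimal sketch writes rows as pipe-delimited text and gzip-compresses the result. The function name `rows_to_gzip_delimited` and the choice of `|` as the delimiter are assumptions made for this example; they are not part of the exam material.

```python
import csv
import gzip
import io


def rows_to_gzip_delimited(rows, delimiter="|"):
    """Serialize rows as delimited text and return the gzip-compressed bytes.

    Hypothetical helper for illustration only: the csv module handles
    quoting of fields that happen to contain the delimiter.
    """
    buffer = io.StringIO(newline="")
    writer = csv.writer(buffer, delimiter=delimiter, lineterminator="\n")
    writer.writerows(rows)
    return gzip.compress(buffer.getvalue().encode("utf-8"))


if __name__ == "__main__":
    compressed = rows_to_gzip_delimited([[1, "first description"], [2, "second description"]])
    # Round-trip to show that the payload is ordinary delimited text.
    print(gzip.decompress(compressed).decode("utf-8"))
```

For a real 100 GB data set the same idea would be applied file by file, streaming rather than building the whole payload in memory.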

Question #32

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains 100 GB of files. The files contain text and numerical values. 75% of the rows contain description data that has an average length of 1.1 MB.

You plan to copy the data from the storage account to an Azure SQL data warehouse.

You need to prepare the files to ensure that the data copies quickly.

Solution: You modify the files to ensure that each row is more than 1 MB.

Does this meet the goal?

- A. Yes

- B. No

Correct Answer: B

Instead, modify the files to ensure that each row is less than 1 MB.

References:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data
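To check whether a file already satisfies the "each row is less than 1 MB" condition, a small sketch like the one below can flag oversized rows. The helper name `oversized_rows` and the default limit of 1,000,000 bytes are assumptions for this example; adjust the limit to whatever the loading guidance specifies.

```python
def oversized_rows(lines, limit_bytes=1_000_000, encoding="utf-8"):
    """Return the 1-based numbers of rows whose encoded size exceeds limit_bytes.

    Hypothetical helper: `lines` is any iterable of row strings, and the
    default limit is an assumed stand-in for the 1 MB row-size threshold.
    """
    return [
        number
        for number, line in enumerate(lines, start=1)
        if len(line.encode(encoding)) > limit_bytes
    ]


if __name__ == "__main__":
    sample = ["short row", "d" * 1_100_000]  # second row mimics a 1.1 MB description
    print(oversized_rows(sample))
```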

Question #33

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Storage account that contains 100 GB of files. The files contain text and numerical values. 75% of the rows contain description data that has an average length of 1.1 MB.

You plan to copy the data from the storage account to an enterprise data warehouse in Azure Synapse Analytics.

You need to prepare the files to ensure that the data copies quickly.

Solution: You copy the files to a table that has a columnstore index.

Does this meet the goal?

- A. Yes

- B. No

Correct Answer: B

Instead, convert the files to compressed delimited text files.

Reference:

https://docs.microsoft.com/en-us/azure/sql-data-warehouse/guidance-for-loading-data

Question #34

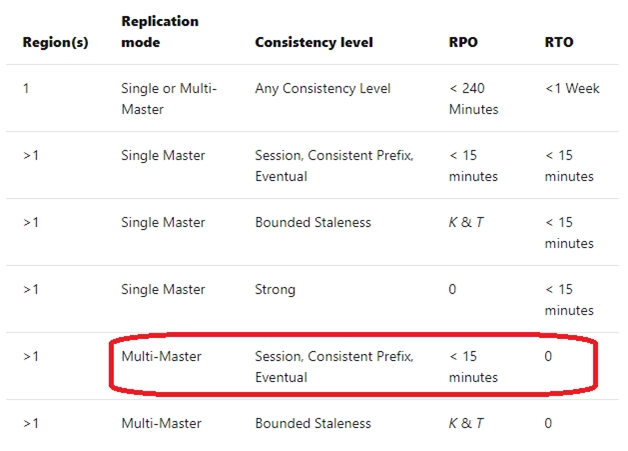

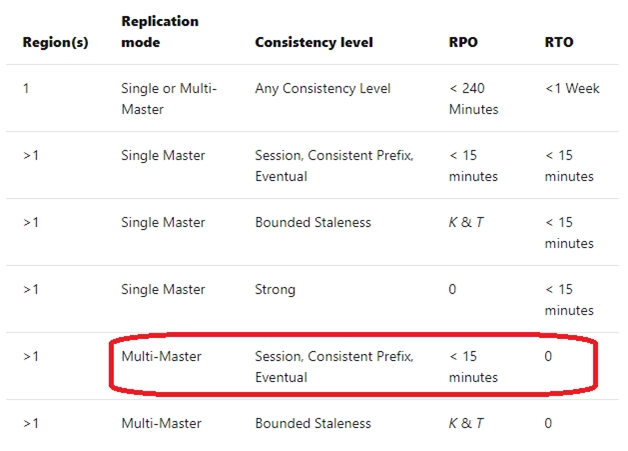

You plan to deploy an Azure Cosmos DB database that supports multi-master replication.

You need to select a consistency level for the database to meet the following requirements:

✑ Provide a recovery point objective (RPO) of less than 15 minutes.

✑ Provide a recovery time objective (RTO) of zero minutes.

What are three possible consistency levels that you can select? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- A. Strong

- B. Bounded Staleness

- C. Eventual

- D. Session

- E. Consistent Prefix

Correct Answer: CDE

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/consistency-levels-choosing
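The selection can be read as a filter over a durability table. The sketch below encodes such a table for a multi-region, multi-master account and filters it by the two requirements. The figures in `ASSUMED_MULTI_MASTER` are assumptions recalled from the documentation's durability table, not values stated in the question, and should be checked against the reference above. All names are hypothetical.

```python
from typing import NamedTuple, Optional


class Durability(NamedTuple):
    supported: bool                    # usable with multi-master at all
    rpo_below_minutes: Optional[int]   # None: no fixed bound (depends on configuration)
    rto_minutes: Optional[int]


# ASSUMED figures for a multi-region, multi-master account (verify against the docs).
ASSUMED_MULTI_MASTER = {
    "Strong": Durability(False, None, None),
    "Bounded Staleness": Durability(True, None, 0),  # RPO tied to the staleness window
    "Session": Durability(True, 15, 0),
    "Consistent Prefix": Durability(True, 15, 0),
    "Eventual": Durability(True, 15, 0),
}


def levels_meeting(rpo_below_minutes, rto_minutes):
    """Return the sorted level names whose assumed RPO and RTO satisfy both limits."""
    return sorted(
        name
        for name, d in ASSUMED_MULTI_MASTER.items()
        if d.supported
        and d.rpo_below_minutes is not None
        and d.rpo_below_minutes <= rpo_below_minutes
        and d.rto_minutes is not None
        and d.rto_minutes <= rto_minutes
    )


if __name__ == "__main__":
    print(levels_meeting(rpo_below_minutes=15, rto_minutes=0))
```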

Question #35

SIMULATION

Use the following login credentials as needed:

Azure Username: xxxxx

Azure Password: xxxxx

The following information is for technical support purposes only:

Lab Instance: 10277521

You need to ensure that you can recover any blob data from an Azure Storage account named storage10277521 up to 30 days after the data is deleted.

To complete this task, sign in to the Azure portal.

Correct Answer:

See the explanation below.

1. Open Azure Portal and open the Azure Blob storage account named storage10277521.

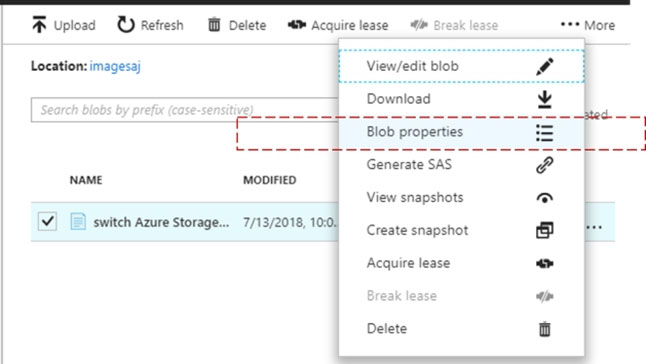

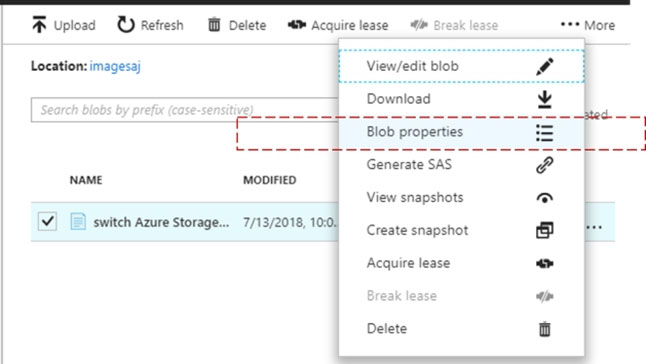

2. Right-click and select Blob properties

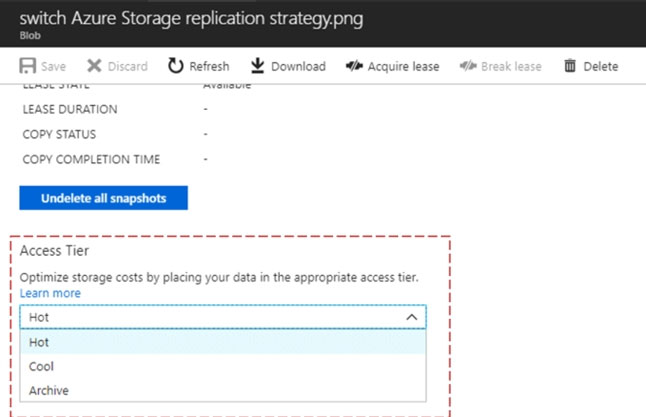

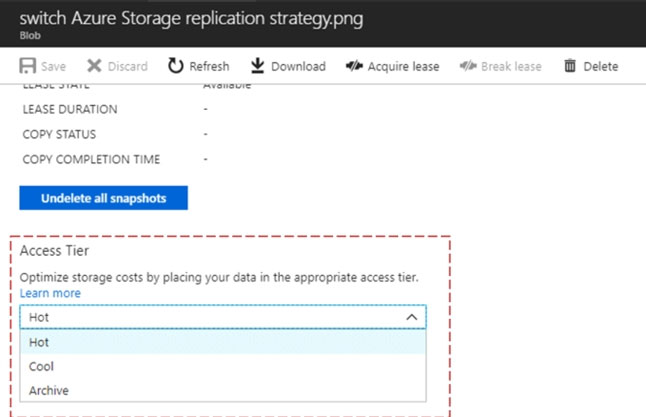

3. From the properties window, change the access tier for the blob to Cool.

Note: The cool access tier has lower storage costs and higher access costs compared to hot storage. This tier is intended for data that will remain in the cool tier for at least 30 days.

Reference:

https://dailydotnettips.com/how-to-update-access-tier-in-azure-storage-blob-level/

See the explanation below.

1. Open Azure Portal and open the Azure Blob storage account named storage10277521.

2. Right-click and select Blob properties

3. From the properties window, change the access tier for the blob to Cool.

Note: The cool access tier has lower storage costs and higher access costs compared to hot storage. This tier is intended for data that will remain in the cool tier for at least 30 days.

Reference:

https://dailydotnettips.com/how-to-update-access-tier-in-azure-storage-blob-level/
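The 30-day requirement in this task amounts to a retention window measured from the moment of deletion. The sketch below models that window with plain date arithmetic. The function name `is_recoverable` is hypothetical, and the code does not call any Azure API. It only illustrates the reasoning.

```python
from datetime import datetime, timedelta, timezone


def is_recoverable(deleted_at, now, retention_days=30):
    """Return True while `now` falls inside the retention window after deletion.

    Hypothetical model: data deleted at `deleted_at` stays recoverable
    for `retention_days` days and is gone afterwards.
    """
    return deleted_at <= now < deleted_at + timedelta(days=retention_days)


if __name__ == "__main__":
    deleted = datetime(2020, 1, 1, tzinfo=timezone.utc)
    print(is_recoverable(deleted, deleted + timedelta(days=29)))  # inside the window
    print(is_recoverable(deleted, deleted + timedelta(days=31)))  # past the window
```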

Question #36

SIMULATION

Use the following login credentials as needed:

Azure Username: xxxxx

Azure Password: xxxxx

The following information is for technical support purposes only:

Lab Instance: 10277521

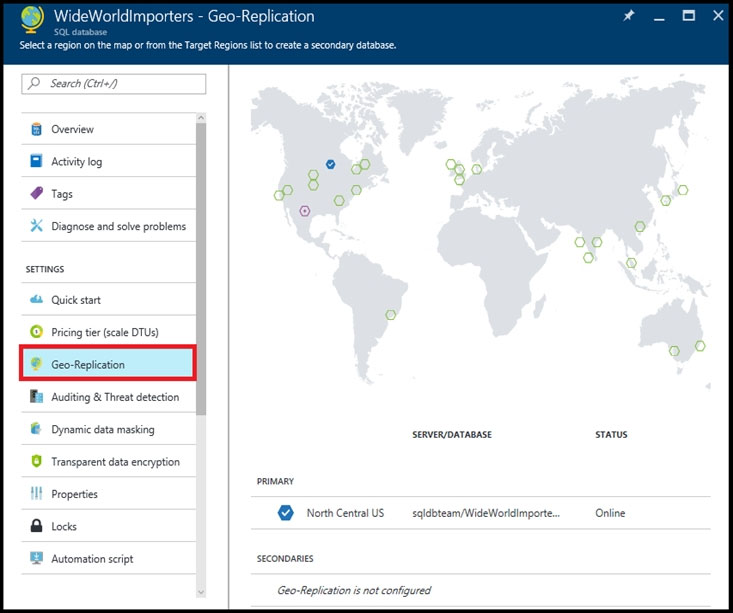

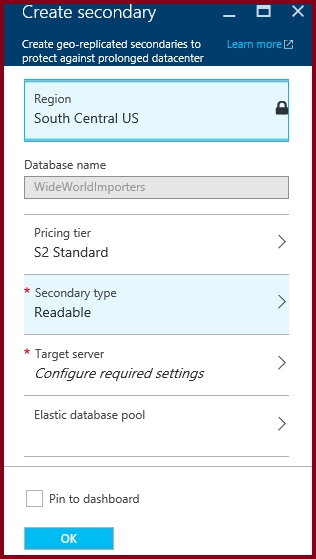

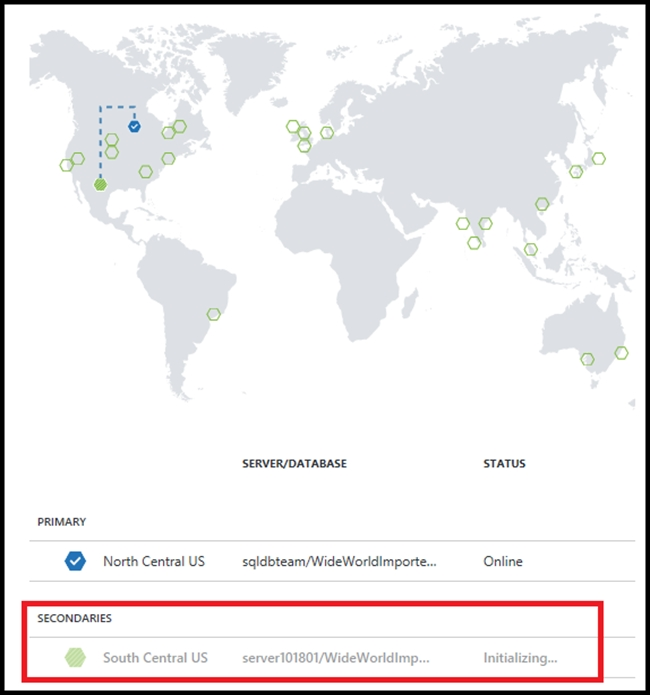

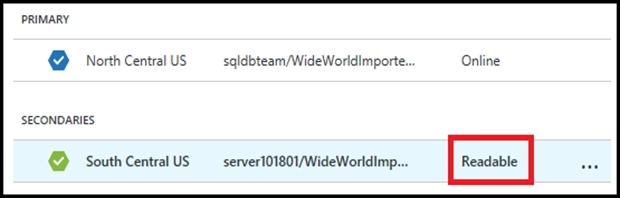

You need to replicate db1 to a new Azure SQL server named REPL10277521 in the Central Canada region.

To complete this task, sign in to the Azure portal.

NOTE: This task might take several minutes to complete. You can perform other tasks while the task completes or end this section of the exam.

Correct Answer:

See the explanation below.

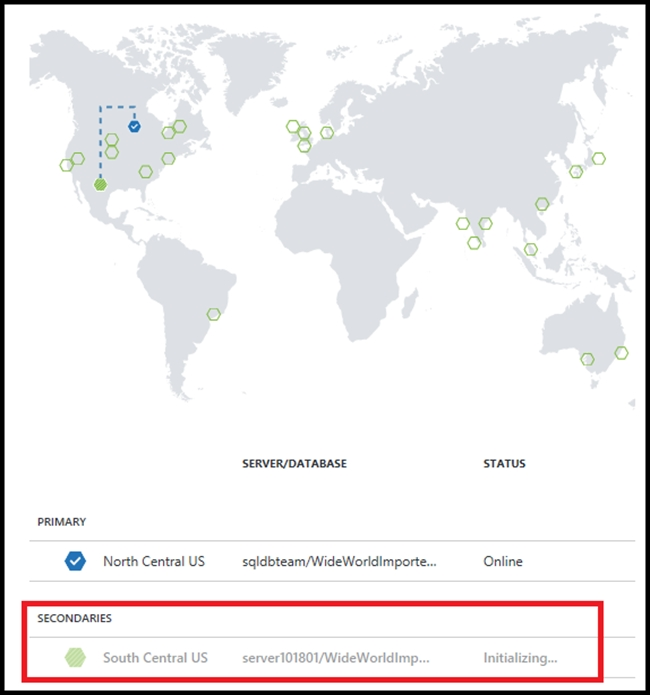

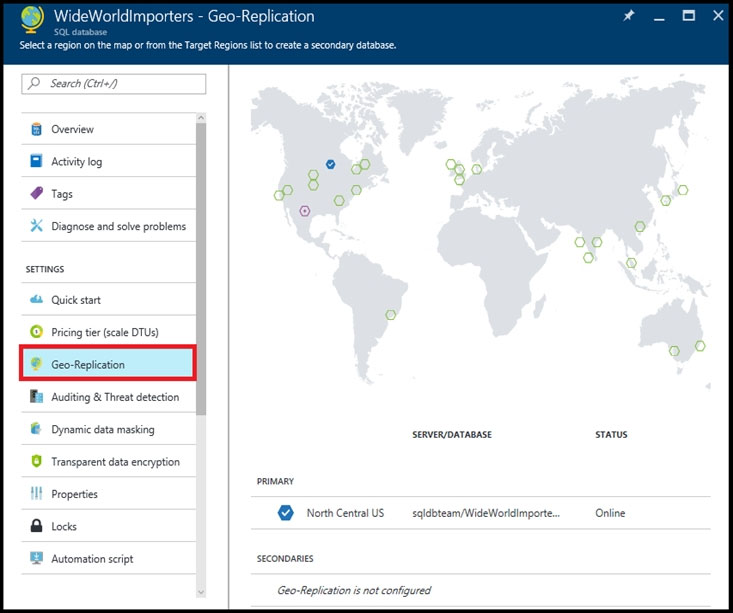

1. In the Azure portal, browse to the database that you want to set up for geo-replication.

2. On the SQL database page, select geo-replication, and then select the region to create the secondary database.

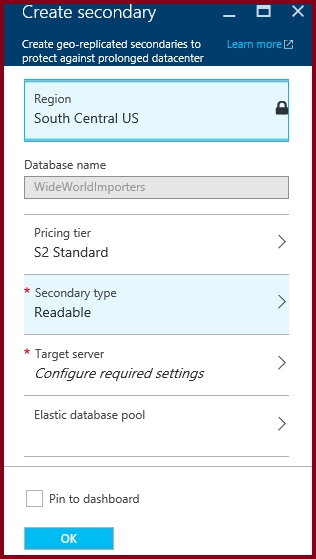

3. Select or configure the server for the secondary database.

Region: Central Canada

Target server: REPL10277521

4. Click Create to add the secondary.

5. The secondary database is created and the seeding process begins.

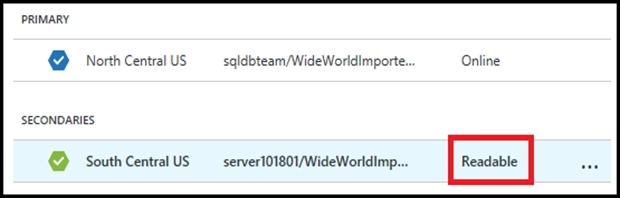

6. When the seeding process is complete, the secondary database displays its status.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-active-geo-replication-portal

Question #37

SIMULATION

Use the following login credentials as needed:

Azure Username: xxxxx

Azure Password: xxxxx

The following information is for technical support purposes only:

Lab Instance: 10277521

You need to create an Azure SQL database named db3 on an Azure SQL server named SQL10277521. Db3 must use the Sample (AdventureWorksLT) source.

To complete this task, sign in to the Azure portal.

Correct Answer:

See the explanation below.

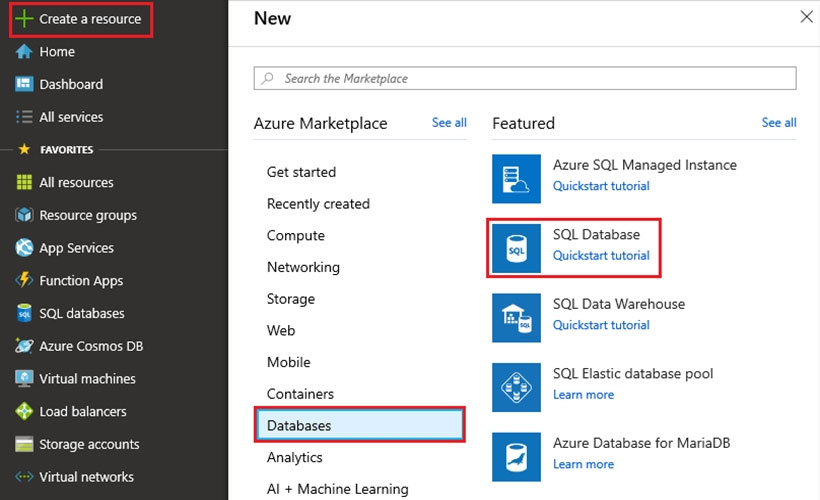

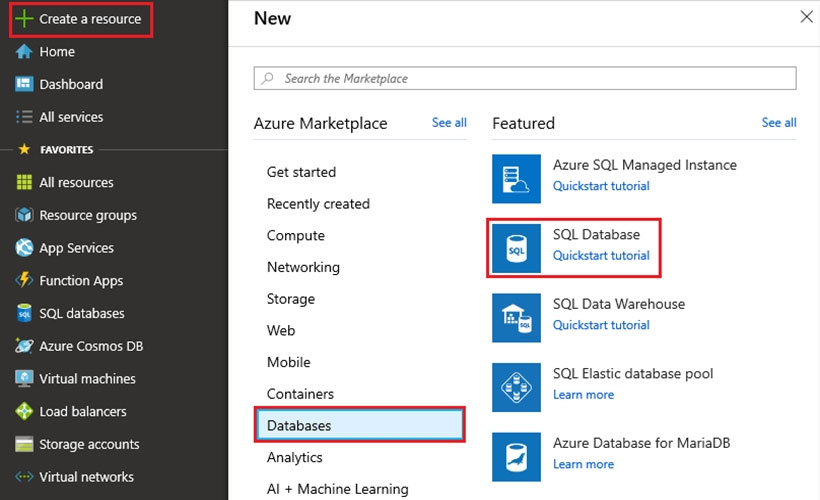

1. Click Create a resource in the upper left-hand corner of the Azure portal.

2. On the New page, select Databases in the Azure Marketplace section, and then click SQL Database in the Featured section.

3. Fill out the SQL Database form with the following information, as shown below:

Database name: db3

Select source: Sample (AdventureWorksLT)

Server: SQL10277521

4. Click Select and finish the wizard using the default options.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-design-first-database

Question #38

SIMULATION

Use the following login credentials as needed:

Azure Username: xxxxx

Azure Password: xxxxx

The following information is for technical support purposes only:

Lab Instance: 10277521

You plan to query db3 to retrieve a list of sales customers. The query will retrieve several columns that include the email address of each sales customer.

You need to modify db3 to ensure that a portion of the email addresses is hidden in the query results.

To complete this task, sign in to the Azure portal.

Correct Answer:

See the explanation below.

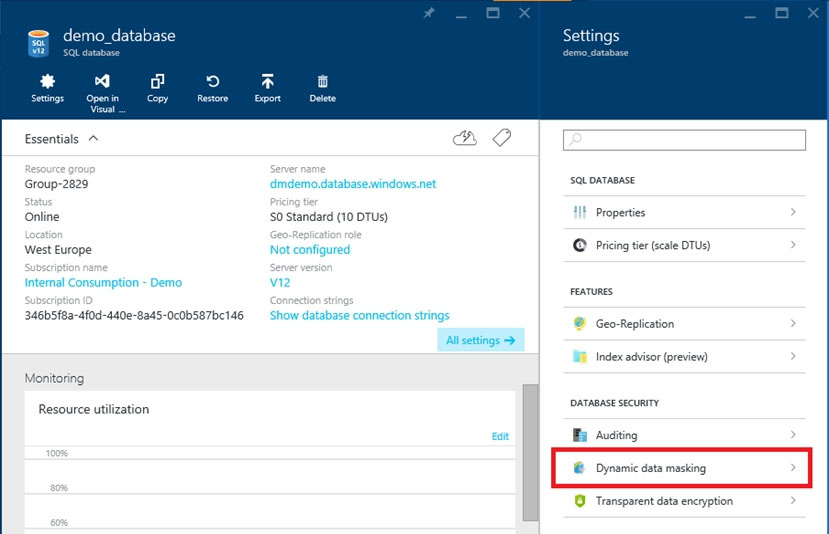

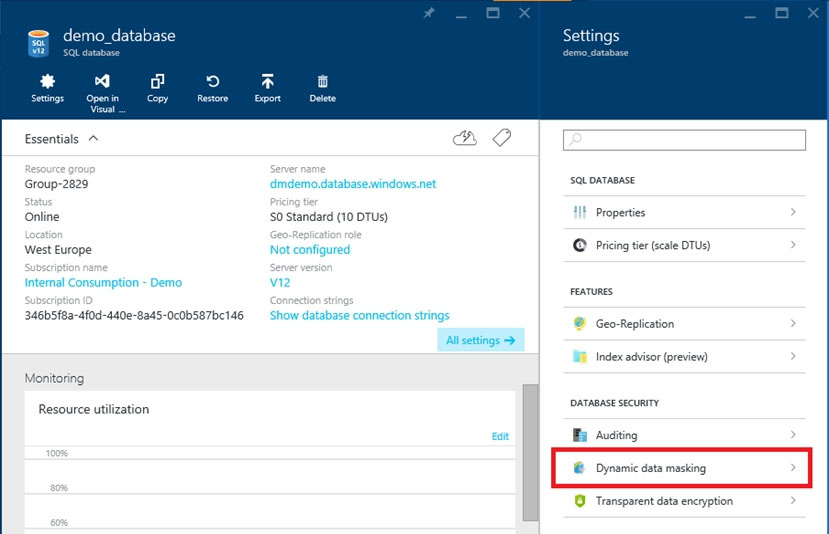

1. Launch the Azure portal.

2. Navigate to the settings page of the database db3 that includes the sensitive data you want to mask.

3. Click the Dynamic Data Masking tile that launches the Dynamic Data Masking configuration page.

Note: Alternatively, you can scroll down to the Operations section and click Dynamic Data Masking.

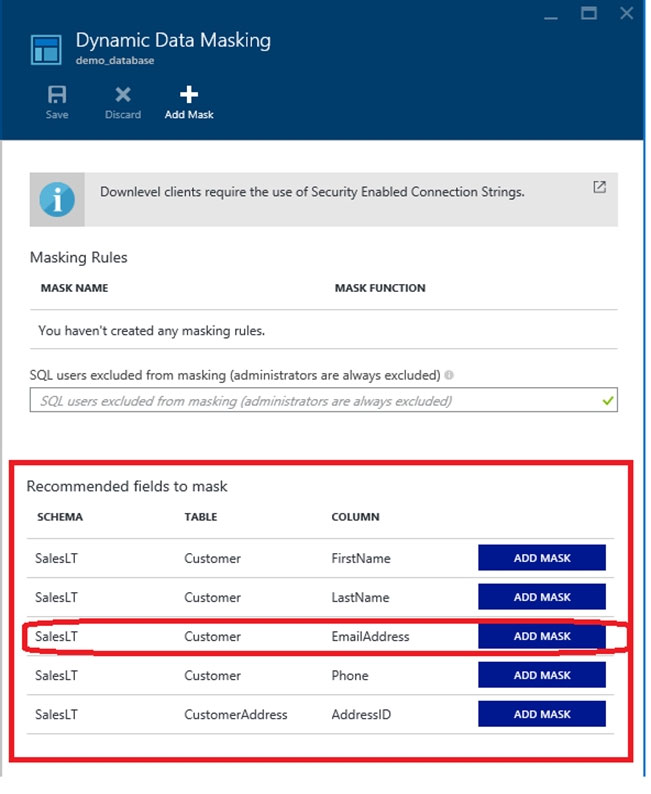

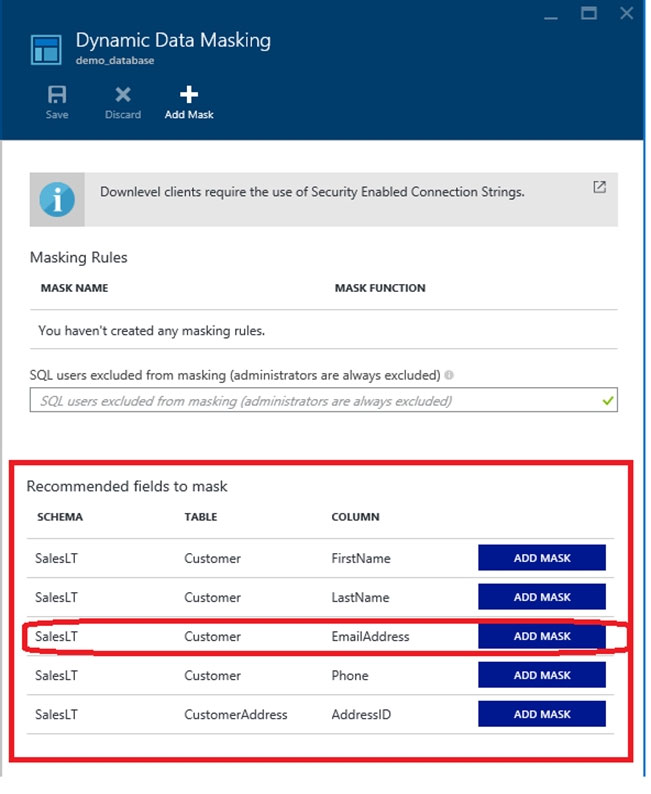

4. In the Dynamic Data Masking configuration page, you may see some database columns that the recommendations engine has flagged for masking.

5. Click ADD MASK for the EmailAddress column.

6. Click Save in the data masking rule page to update the set of masking rules in the dynamic data masking policy.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-dynamic-data-masking-get-started-portal
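To give a feel for what a partially hidden email address looks like in query results, the sketch below imitates an email-style mask that keeps the first character and replaces the rest with a fixed pattern. The function `mask_email`, the exact output pattern `aXXX@XXXX.com`, and the sample address are assumptions for illustration. The actual masking is applied by the database, not by client code.

```python
def mask_email(address):
    """Return an email-style mask: first character kept, remainder replaced.

    Hypothetical imitation of an email masking rule; empty input is
    returned unchanged.
    """
    if not address:
        return address
    return f"{address[0]}XXX@XXXX.com"


if __name__ == "__main__":
    print(mask_email("orlando0@adventure-works.com"))
```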

Question #39

SIMULATION

Use the following login credentials as needed:

Azure Username: xxxxx

Azure Password: xxxxx

The following information is for technical support purposes only:

Lab Instance: 10277521

You need to increase the size of db2 to store up to 250 GB of data.

To complete this task, sign in to the Azure portal.

Correct Answer:

See the explanation below.

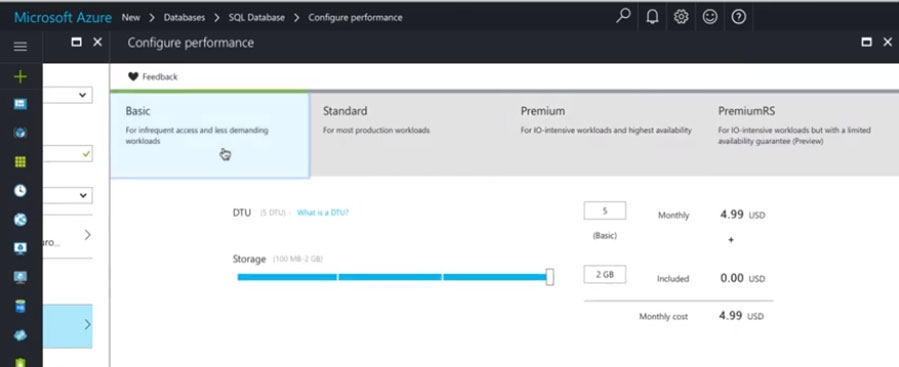

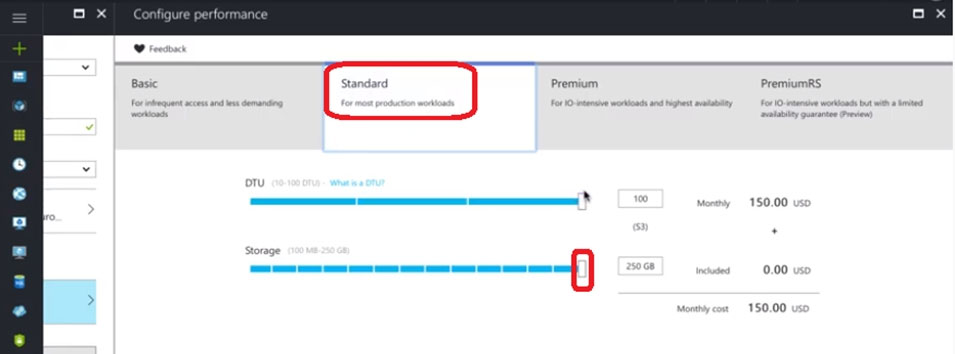

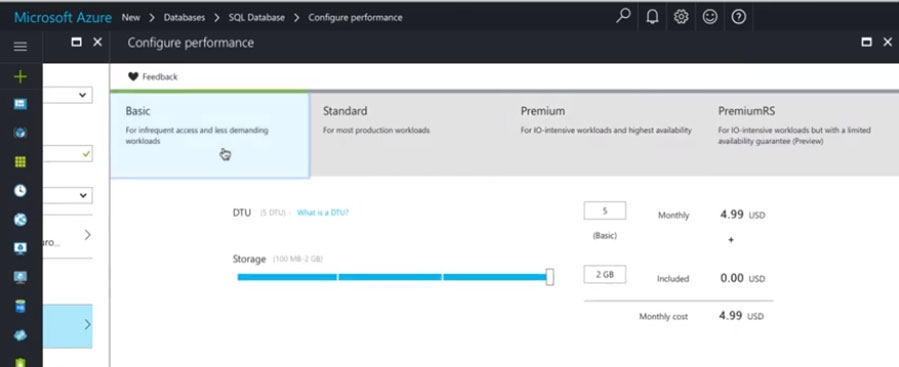

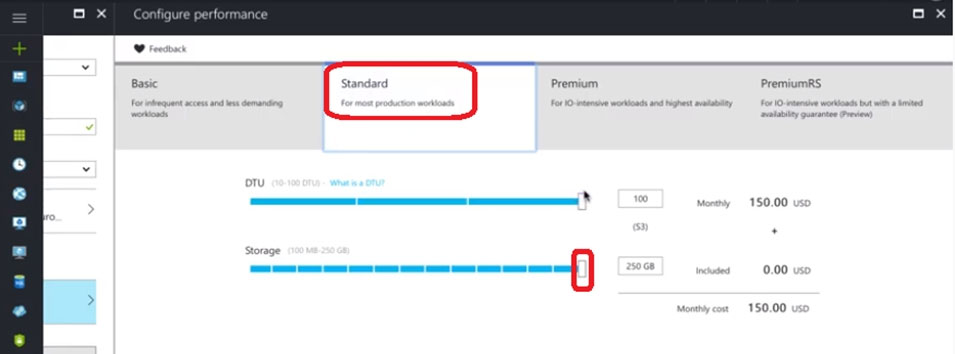

1. In the Azure portal, navigate to the SQL databases page, select the db2 database, and choose Configure performance.

2. Click Standard and adjust the storage size to 250 GB.

References:

https://docs.microsoft.com/en-us/azure/sql-database/sql-database-single-databases-manage

Question #40

HOTSPOT

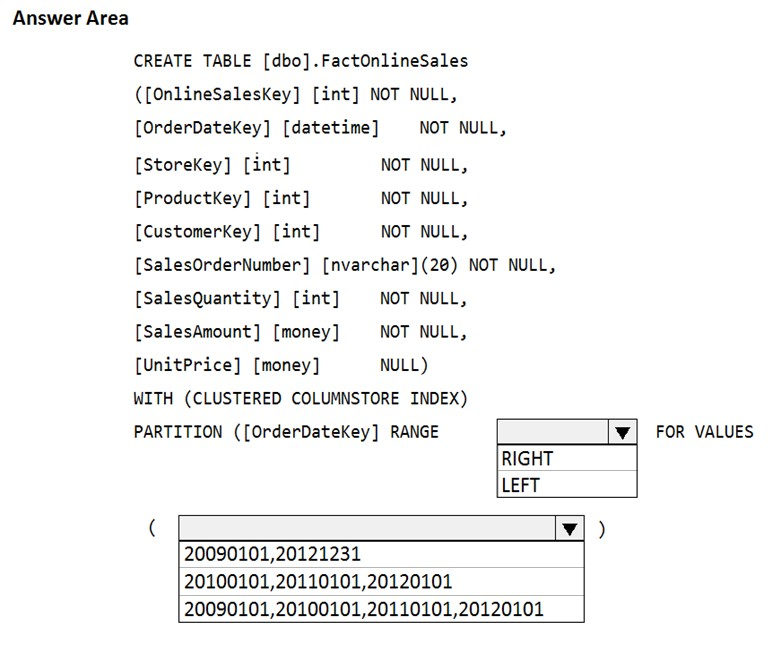

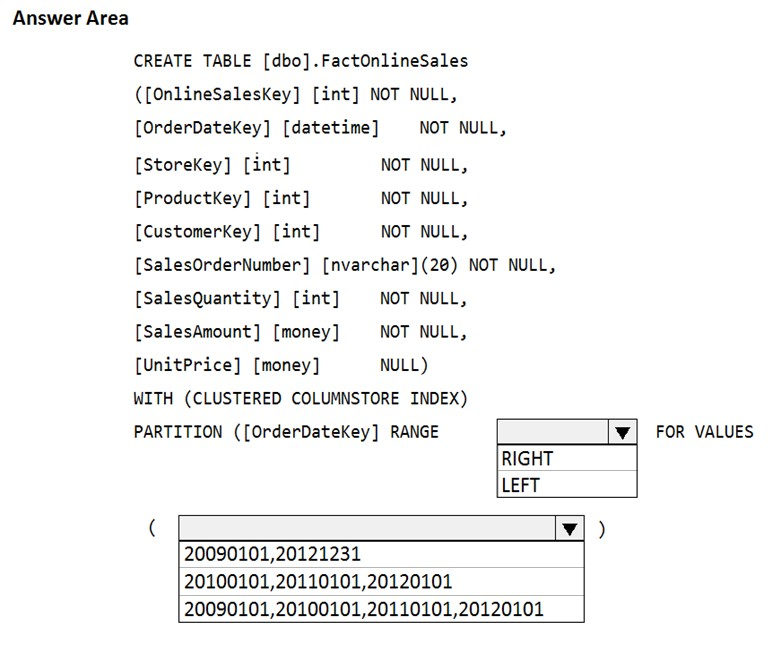

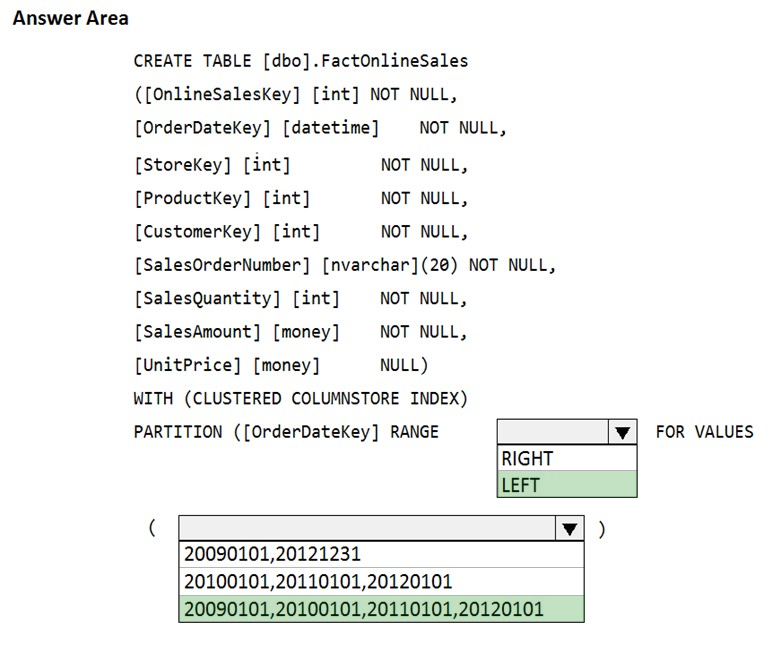

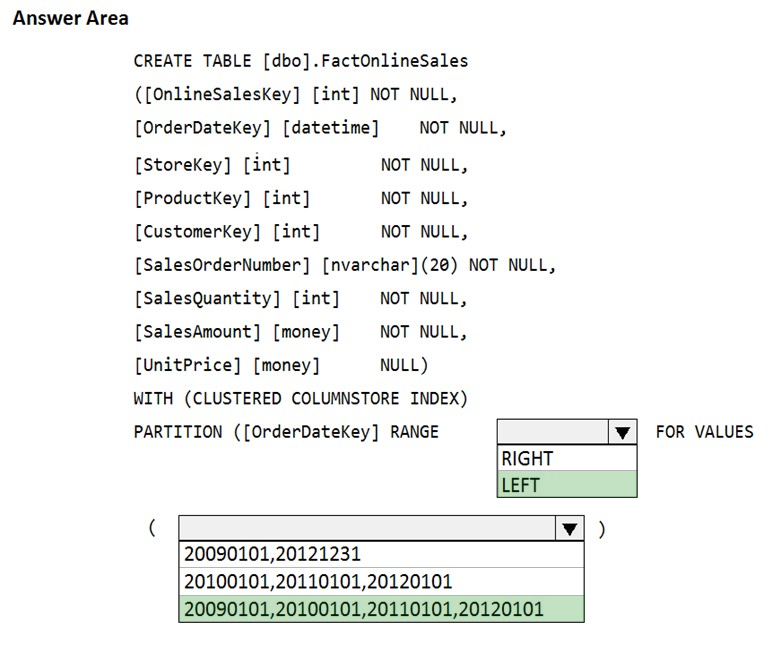

You have an enterprise data warehouse in Azure Synapse Analytics that contains a table named FactOnlineSales. The table contains data from the start of 2009 to the end of 2012.

You need to improve the performance of queries against FactOnlineSales by using table partitions. The solution must meet the following requirements:

✑ Create four partitions based on the order date.

✑ Ensure that each partition contains all the orders placed during a given calendar year.

How should you complete the T-SQL command? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

Correct Answer:

Box 1: LEFT

RANGE LEFT: Specifies that the boundary value belongs to the partition on the left (lower values). The default is LEFT.

Box 2: 20090101, 20100101, 20110101, 20120101

FOR VALUES ( boundary_value [,...n] ) specifies the boundary values for the partition. boundary_value is a constant expression.

Reference:

https://docs.microsoft.com/en-us/sql/t-sql/statements/create-table-azure-sql-data-warehouse
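The effect of RANGE LEFT versus RANGE RIGHT on a boundary value can be modelled with a binary search over the sorted boundaries. The sketch below is only a model of the documented semantics, not T-SQL, and the function name `partition_number` is hypothetical. Under this model, N boundary values yield N + 1 partitions, and with RANGE LEFT a boundary date such as 20100101 lands in the same partition as the preceding year's rows. That is worth keeping in mind when checking an answer against the calendar-year requirement.

```python
from bisect import bisect_left, bisect_right


def partition_number(value, boundaries, range_side):
    """Return the 1-based partition that `value` falls into.

    `boundaries` must be sorted ascending. With "LEFT" a boundary value
    belongs to the lower partition; with "RIGHT" it belongs to the upper one.
    """
    if range_side == "LEFT":
        return bisect_left(boundaries, value) + 1
    if range_side == "RIGHT":
        return bisect_right(boundaries, value) + 1
    raise ValueError("range_side must be 'LEFT' or 'RIGHT'")


if __name__ == "__main__":
    boundaries = [20090101, 20100101, 20110101, 20120101]
    for side in ("LEFT", "RIGHT"):
        last_day_2009 = partition_number(20091231, boundaries, side)
        first_day_2010 = partition_number(20100101, boundaries, side)
        print(side, last_day_2009, first_day_2010)
```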
