Microsoft 70-776 Exam Practice Questions (P. 4)


Question #16

You have a Microsoft Azure SQL data warehouse named DW1 that is used only from Monday to Friday.

You need to minimize Data Warehouse Unit (DWU) usage during the weekend.

What should you do?


- A. Run the Suspend-AzureRmSqlDatabase Azure PowerShell cmdlet

- B. Call the Create or Update Database REST API

- C. From the Azure CLI, run the account set command

- D. Run the ALTER DATABASE statement

Correct Answer:

A

Pause compute

To save costs, you can pause and resume compute resources on demand. For example, if you are not using the database at night and on weekends, you can pause it during those times and resume it during the day. There is no charge for compute resources while the database is paused. However, you continue to be charged for storage.

To pause a database, use the Suspend-AzureRmSqlDatabase cmdlet. In the newer Az PowerShell module, the equivalent cmdlet is Suspend-AzSqlDatabase.

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/pause-and-resume-compute-powershell


Question #17

You plan to deploy a Microsoft Azure virtual machine that will host a data warehouse. The data warehouse will contain a 10-TB database.

You need to provide the fastest read and write times for the database.

Which disk configuration should you use?


- A. spanned volumes

- B. storage pools with striped disks

- C. RAID 5 volumes

- D. storage pools with mirrored disks

- E. striped volumes

Correct Answer:

B


Question #18

You have a Microsoft Azure SQL data warehouse that has a fact table named FactOrder. FactOrder contains three columns named CustomerID, OrderID, and OrderDateKey. FactOrder is hash distributed on CustomerID. OrderID is the unique identifier for FactOrder. FactOrder contains 3 million rows.

Orders are distributed evenly among different customers from a table named dimCustomers that contains 2 million rows.

You often run queries that join FactOrder and dimCustomers by selecting and grouping by the OrderDateKey column.

You add 7 million rows to FactOrder. Most of the new records have a more recent OrderDateKey value than the previous records.

You need to reduce the execution time of queries that group on OrderDateKey and that join dimCustomers and FactOrder.

What should you do?


- A. Change the distribution for the FactOrder table to round robin

- B. Change the distribution for the FactOrder table to be based on OrderID

- C. Update the statistics for the OrderDateKey column

- D. Change the distribution for the dimCustomers table to OrderDateKey

Correct Answer:

C

Updating statistics

One best practice is to update statistics on date columns each day as new dates are added. Each time new rows are loaded into the data warehouse, new load dates or transaction dates are added. These change the data distribution and make the statistics out of date.

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/sql-data-warehouse-tables-statistics
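As a rough illustration of answer C, the statements below sketch how statistics on OrderDateKey might be created and then refreshed after the 7-million-row load. The `dbo` schema and the statistics name `stats_FactOrder_OrderDateKey` are assumptions made for this example; they do not appear in the question.

```sql
-- One-time setup (assumed): create a single-column statistics object on the
-- date column. The schema and statistics name are hypothetical.
CREATE STATISTICS stats_FactOrder_OrderDateKey
    ON dbo.FactOrder (OrderDateKey);

-- After each load that adds new OrderDateKey values, refresh that object so
-- the optimizer sees the new date range.
UPDATE STATISTICS dbo.FactOrder (stats_FactOrder_OrderDateKey);

-- Alternative: refresh every statistics object on the table in one statement.
-- This is simpler but takes longer on large tables.
UPDATE STATISTICS dbo.FactOrder;
```

Refreshing only the date-column statistics is usually the cheaper choice when the load mainly adds newer dates, as described in the scenario.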


Question #19

DRAG DROP

You have a Microsoft Azure SQL data warehouse.

You plan to reference data from Azure Blob storage. The data is stored in the GZIP compressed format. The blob storage requires authentication.

You create a master key for the data warehouse and a database schema.

You need to reference the data without importing the data to the data warehouse.

Which four statements should you execute in sequence? To answer, move the appropriate statements from the list of statements to the answer area and arrange them in the correct order.

Select and Place:


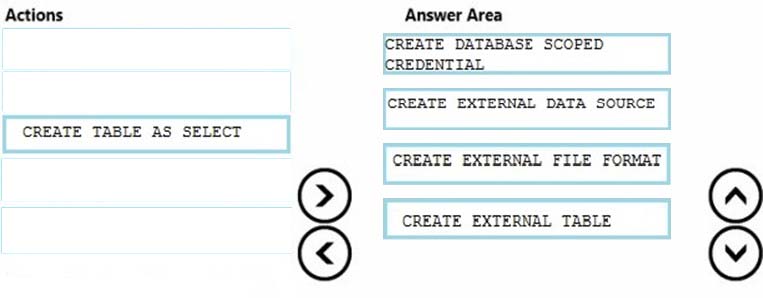

Correct Answer:

Step 1: CREATE DATABASE SCOPED CREDENTIAL

Step 2: CREATE EXTERNAL DATA SOURCE

Creates an external data source for PolyBase, or Elastic Database queries.

Example Step 1 and Step 2:

```sql
-- Create a database scoped credential with Kerberos user name and password.
CREATE DATABASE SCOPED CREDENTIAL HadoopUser1
WITH IDENTITY = '<hadoop_user_name>',
     SECRET = '<hadoop_password>';

-- Create an external data source with CREDENTIAL option.
CREATE EXTERNAL DATA SOURCE MyHadoopCluster WITH (
    TYPE = HADOOP,
    LOCATION = 'hdfs://10.10.10.10:8050',
    RESOURCE_MANAGER_LOCATION = '10.10.10.10:8050',
    CREDENTIAL = HadoopUser1
);
```

Step 3: CREATE EXTERNAL FILE FORMAT

Creates an External File Format object defining external data stored in Hadoop, Azure Blob Storage, or Azure Data Lake Store. Creating an external file format is a prerequisite for creating an External Table. By creating an External File Format, you specify the actual layout of the data referenced by an external table.

Step 4: CREATE EXTERNAL TABLE

References: https://docs.microsoft.com/en-us/sql/t-sql/statements/create-external-file-format-transact-sql
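For the scenario in the question (GZIP-compressed files in an authenticated Azure Blob storage account), the four steps might look like the sketch below. Every object name, the `ext` schema, the column list, the comma delimiter, and the placeholder values in angle brackets are assumptions for illustration only. The master key and schema are taken to exist already, as the question states.

```sql
-- Step 1: store the storage account key. For key-based Blob storage access
-- the IDENTITY value is not used for authentication, so any string works.
CREATE DATABASE SCOPED CREDENTIAL BlobStorageCredential
WITH IDENTITY = 'user',
     SECRET = '<storage_account_key>';

-- Step 2: point PolyBase at the container, using the credential above.
CREATE EXTERNAL DATA SOURCE MyBlobStorage
WITH (
    TYPE = HADOOP,
    LOCATION = 'wasbs://<container>@<storage_account>.blob.core.windows.net',
    CREDENTIAL = BlobStorageCredential
);

-- Step 3: describe the file layout, including GZIP compression.
-- Delimited text with a comma separator is an assumption here.
CREATE EXTERNAL FILE FORMAT GzipDelimitedText
WITH (
    FORMAT_TYPE = DELIMITEDTEXT,
    FORMAT_OPTIONS (FIELD_TERMINATOR = ','),
    DATA_COMPRESSION = 'org.apache.hadoop.io.compress.GzipCodec'
);

-- Step 4: expose the files as a table. The data stays in Blob storage and is
-- read at query time. The columns and folder path are hypothetical.
CREATE EXTERNAL TABLE ext.Orders (
    OrderID      INT NOT NULL,
    CustomerID   INT NOT NULL,
    OrderDateKey INT NOT NULL
)
WITH (
    LOCATION = '/orders/',
    DATA_SOURCE = MyBlobStorage,
    FILE_FORMAT = GzipDelimitedText
);
```

The order matters because each object references the one before it: the data source needs the credential, and the external table needs both the data source and the file format.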


Question #20

You need to connect to a Microsoft Azure SQL data warehouse from an Azure Machine Learning experiment.

Which data source should you use?


- A. Azure Table

- B. SQL Database

- C. Web URL via HTTP

- D. Data Feed Provider

Correct Answer:

B

Use Azure SQL Database as the Data Source.

References: https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/import-from-azure-sql-database

