Microsoft 70-776 Exam Practice Questions (P. 3)

Question #11

You are designing a data loading process for a Microsoft Azure SQL data warehouse. Data will be loaded to Azure Blob storage, and then the data will be loaded to the data warehouse.

Which tool should you use to load the data to Azure Blob storage?

- A. AdlCopy

- B. bcp

- C. FTP

- D. AzCopy

Correct Answer:

D

AzCopy is a command-line utility designed for copying data to/from Microsoft Azure Blob, File, and Table storage, using simple commands designed for optimal performance. You can copy data between a file system and a storage account, or between storage accounts.
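As a rough illustration of the upload step, the sketch below assembles the argument list for a recursive AzCopy upload to a blob container. It assumes the newer AzCopy v10 syntax (`azcopy copy <source> <destination> --recursive`). The storage account name, container name, local folder, and SAS token are hypothetical placeholders, and the helper `build_azcopy_upload` is invented for this example. Nothing is executed; the code only builds and prints the command.

```python
import shlex


def build_azcopy_upload(local_dir, account, container, sas_token):
    """Return the argv list for an AzCopy v10 recursive upload (not executed)."""
    # Blob container URL with a SAS token appended as the query string.
    destination = f"https://{account}.blob.core.windows.net/{container}?{sas_token}"
    return ["azcopy", "copy", local_dir, destination, "--recursive"]


# Hypothetical values, for illustration only.
cmd = build_azcopy_upload("./export", "mystorageacct", "staging", "<SAS-token>")
print(shlex.join(cmd))
```

Once the files are in Blob storage, the data warehouse load itself is a separate step.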

References: https://docs.microsoft.com/en-us/azure/storage/common/storage-use-azcopy#copy-blobs-in-blob-storage

Question #12

You have a Microsoft Azure SQL data warehouse to which 1,000 Data Warehouse Units (DWUs) are allocated.

You plan to load 10 million rows of data to the data warehouse.

You need to load the data in the least amount of time possible. The solution must ensure that queries against the new data execute as quickly as possible.

What should you use to optimize the data load?

- A. resource classes

- B. resource pools

- C. MAXDOP

- D. Resource Governor

Correct Answer:

A

Resource classes are pre-determined resource limits that govern query execution. SQL Data Warehouse limits the compute resources for each query according to resource class.

Resource classes help you manage the overall performance of your data warehouse workload. Using resource classes effectively helps you manage your workload by setting limits on the number of queries that run concurrently and the compute resources assigned to each query. For a large load, running the load under a larger resource class generally gives it more memory, which helps both the load speed and the quality of the resulting columnstore index.

- Smaller resource classes use fewer compute resources but enable greater overall query concurrency

- Larger resource classes provide more compute resources but restrict query concurrency
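The trade-off between resources per query and concurrency can be sketched with a small model. The resource class names are the standard dynamic ones, but the slot counts below are assumed purely for illustration and are not taken from the question or the reference; the actual allocation depends on the service level.

```python
# Assumed, illustrative figures: total concurrency slots for the warehouse
# and the slots a single query consumes in each resource class.
TOTAL_SLOTS = 40
SLOTS_PER_QUERY = {"smallrc": 1, "mediumrc": 8, "largerc": 16, "xlargerc": 32}


def max_concurrent_queries(resource_class, total_slots=TOTAL_SLOTS):
    """How many queries of one resource class fit into the slot budget."""
    return total_slots // SLOTS_PER_QUERY[resource_class]


for rc in SLOTS_PER_QUERY:
    print(rc, max_concurrent_queries(rc))
```

Under these assumed numbers, moving a load user from the smallest to the largest class gives each query far more resources while leaving room for only one such query at a time.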

References: https://docs.microsoft.com/en-us/azure/sql-data-warehouse/resource-classes-for-workload-management

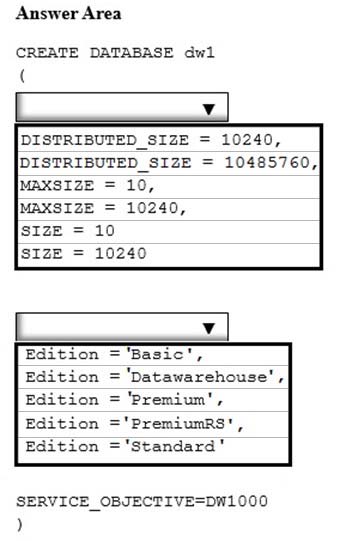

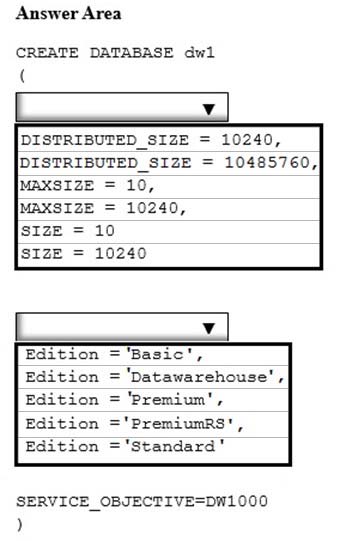

Question #13

HOTSPOT -

You need to create a Microsoft Azure SQL data warehouse named dw1 that supports up to 10 TB of data.

How should you complete the statement? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

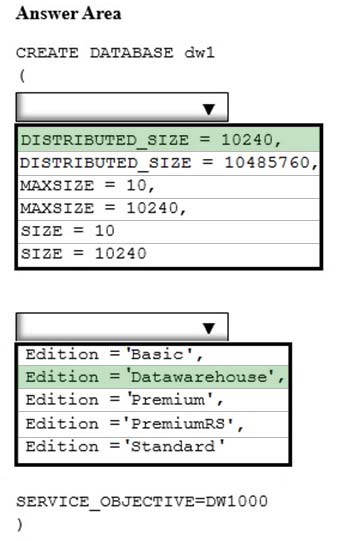

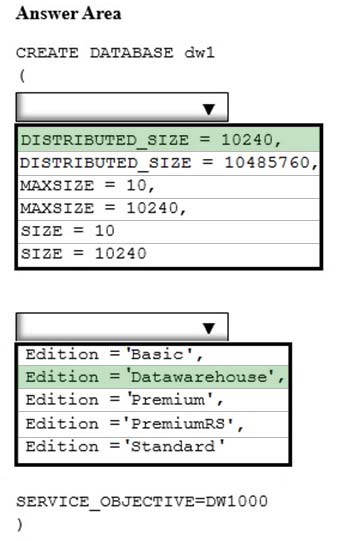

Correct Answer:

Box 1: distributed_size = 10240

10240 GB equals 10 TB.

Parameter: distributed_size [ GB ]

A positive number. The size, in integer or decimal gigabytes, for the total space allocated to distributed tables (and corresponding data) across the appliance.
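The Box 1 value follows from a binary unit conversion (1 TB = 1,024 GB). A minimal check, with `tb_to_gb` as a helper name invented for this example:

```python
GB_PER_TB = 1024  # binary convention used in the explanation above


def tb_to_gb(terabytes):
    """Convert terabytes to gigabytes using 1 TB = 1024 GB."""
    return terabytes * GB_PER_TB


print(tb_to_gb(10))
```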

Box 2: datawarehouse

The EDITION parameter specifies the service tier of the database. For SQL Data Warehouse, use 'datawarehouse'.

References: https://docs.microsoft.com/en-us/sql/t-sql/statements/create-database-azure-sql-data-warehouse

Question #14

You have a Microsoft Azure SQL data warehouse stored in geo-redundant storage.

You experience a regional outage.

You plan to recover the database to a new region.

You need to get a list of the backup files that can be restored to the new region.

Which cmdlet should you run?

- A. Get-AzureRmSqlDatabase

- B. Get-AzureRmSqlDatabaseGeoBackup

- C. Get-AzureRmSqlDatabaseReplicationLink

- D. Get-AzureRmSqlDatabaseRestorePoints

Correct Answer:

B

The Get-AzureRmSqlDatabaseGeoBackup cmdlet gets a specified geo-redundant backup of a SQL database or all available geo-redundant backups on a specified server.

A geo-redundant backup is a restorable resource using data files from a separate geographic location. You can use Geo-Restore to restore a geo-redundant backup in the event of a regional outage to recover your database to a new region.

References: https://docs.microsoft.com/en-us/powershell/module/azurerm.sql/get-azurermsqldatabasegeobackup?view=azurermps-5.4.0

Question #15

You manage an on-premises data warehouse that uses Microsoft SQL Server. The data warehouse contains 100 TB of data. The data is partitioned by month.

One TB of data is added to the data warehouse each month.

You create a Microsoft Azure SQL data warehouse and copy the on-premises data to the data warehouse.

You need to implement a process to replicate the on-premises data warehouse to the Azure SQL data warehouse. The solution must support daily incremental updates and must provide error handling.

What should you use?

- A. the export and import of BACPAC files

- B. SQL Server Integration Services (SSIS)

- C. SQL Server backup and restore

- D. SQL Server snapshot replication

Correct Answer:

B

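SQL Data Warehouse supports many loading methods, including SSIS, BCP, the SQLBulkCopy API, and Azure Data Factory (ADF). Of the options listed, SSIS is the one that fits both requirements: a package can be scheduled to run daily and load only the new rows, and its data flow components provide error outputs for handling rows that fail.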
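The two requirements in the question, daily incremental updates and error handling, can be sketched conceptually as follows. This is not SSIS code: it is a plain Python illustration of a watermark-based incremental load that redirects bad rows instead of failing the whole batch. The row layout (`id`, `load_date`) and the function name `incremental_load` are hypothetical.

```python
from datetime import date


def incremental_load(source_rows, target, last_loaded):
    """Copy rows newer than last_loaded into target.

    Returns (new_watermark, rejected_rows).
    """
    rejected = []
    watermark = last_loaded
    for row in source_rows:
        if row["load_date"] <= last_loaded:
            continue  # already replicated by an earlier run
        try:
            target.append({"id": int(row["id"]), "load_date": row["load_date"]})
        except (TypeError, ValueError) as exc:
            # Redirect the bad row with its error instead of aborting the batch.
            rejected.append((row, str(exc)))
            continue
        watermark = max(watermark, row["load_date"])
    return watermark, rejected


source = [
    {"id": "1", "load_date": date(2018, 1, 1)},  # loaded on a previous day
    {"id": "2", "load_date": date(2018, 1, 2)},  # new, valid
    {"id": "x", "load_date": date(2018, 1, 2)},  # new, but id is not numeric
]
target = []
new_watermark, rejected = incremental_load(source, target, date(2018, 1, 1))
print(new_watermark, len(target), len(rejected))
```

Storing the returned watermark between runs is what keeps each daily run limited to the rows added since the previous one.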

References: https://docs.microsoft.com/en-us/sql/integration-services/data-flow/error-handling-in-data
