Microsoft DP-300 Exam Practice Questions (P. 3)

Question #21

You plan to build a structured streaming solution in Azure Databricks. The solution will count new events in five-minute intervals and report only events that arrive during the interval.

The output will be sent to a Delta Lake table.

Which output mode should you use?

- A. complete

- B. append (Most Voted)

- C. update

Correct Answer: A

Complete mode: You can use Structured Streaming to replace the entire table with every batch.

Incorrect Answers:

B: By default, streams run in append mode, which adds new records to the table.

Reference:

https://docs.databricks.com/delta/delta-streaming.html
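
To make the difference between the output modes concrete, here is a minimal sketch in plain Python (not Spark code). It simulates five-minute tumbling-window counts over micro-batches and shows what a complete-mode sink and an append-mode sink would each receive. The names `window_start` and `run_batches` are hypothetical, and treating the latest event time as the watermark is a simplifying assumption.

```python
from collections import Counter
from datetime import datetime, timedelta

WINDOW = timedelta(minutes=5)


def window_start(ts: datetime) -> datetime:
    """Floor a timestamp to the start of its five-minute tumbling window."""
    return ts.replace(minute=ts.minute - ts.minute % 5, second=0, microsecond=0)


def run_batches(batches):
    """Yield (complete_output, append_output) after each micro-batch.

    complete_output: the whole result table, rewritten on every batch.
    append_output: only windows that have closed and were not emitted before.
    Assumption: the watermark is simply the latest event time seen so far.
    """
    totals = Counter()        # running state: window start -> event count
    emitted = set()           # windows already written in append mode
    watermark = datetime.min
    for batch in batches:
        for ts in batch:
            totals[window_start(ts)] += 1
            watermark = max(watermark, ts)
        complete = dict(totals)
        closed = {
            start: count
            for start, count in totals.items()
            if start + WINDOW <= watermark and start not in emitted
        }
        emitted.update(closed)
        yield complete, closed
```

In this sketch, the complete-mode output grows to include every window seen so far, while the append-mode output contains each window exactly once, after it can no longer change.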

Question #22

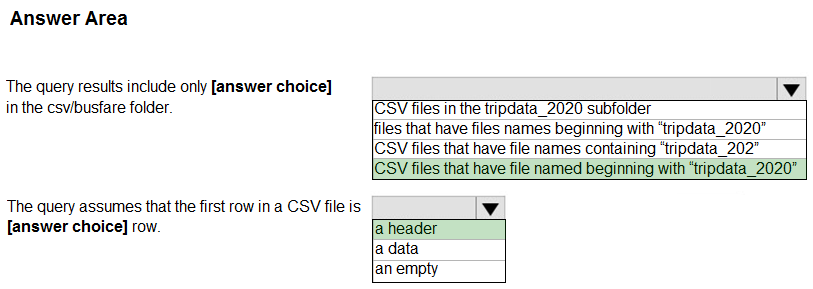

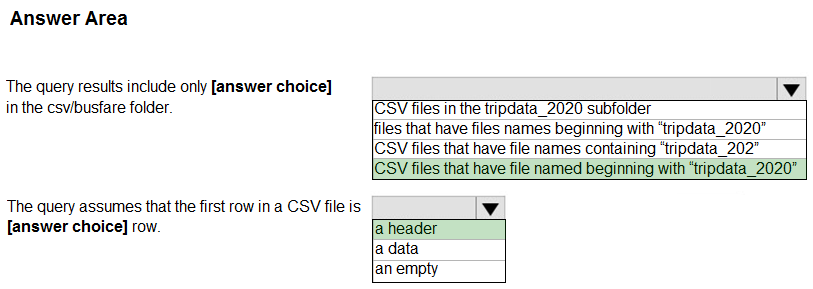

HOTSPOT -

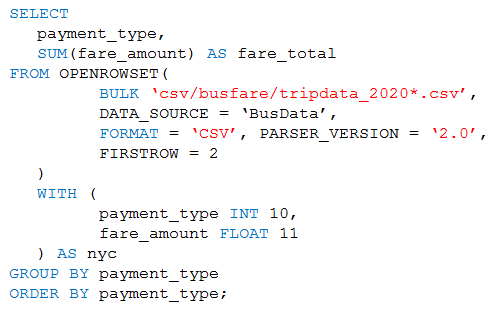

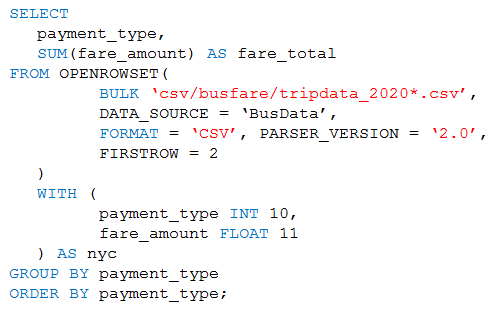

You are performing exploratory analysis of bus fare data in an Azure Data Lake Storage Gen2 account by using an Azure Synapse Analytics serverless SQL pool.

You execute the Transact-SQL query shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

Hot Area:

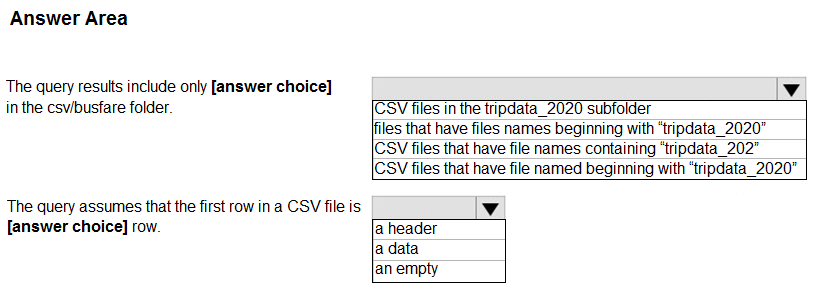

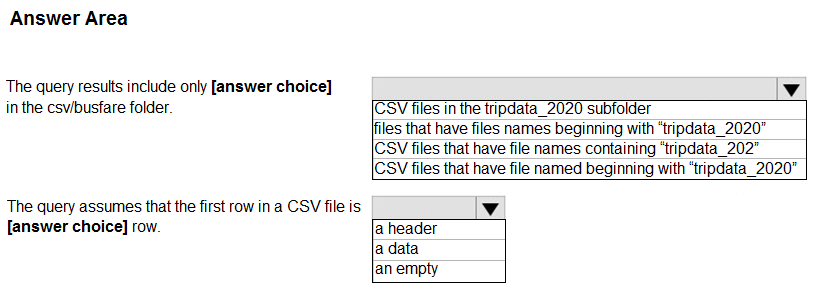

Correct Answer:

Box 1: CSV files that have file names beginning with "tripdata_2020"

Box 2: a header

FIRSTROW = 'first_row'

Specifies the number of the first row to load. The default is 1 and indicates the first row in the specified data file. The row numbers are determined by counting the row terminators. FIRSTROW is 1-based.

Example: The firstrow option is used to skip the first row in the CSV file, which represents the header in this case (firstrow = 2).

    select top 10 *
    from openrowset(
        bulk 'https://pandemicdatalake.blob.core.windows.net/public/curated/covid-19/ecdc_cases/latest/ecdc_cases.csv',
        format = 'csv',
        parser_version = '2.0',
        firstrow = 2
    ) as rows

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/develop-openrowset

https://docs.microsoft.com/en-us/azure/synapse-analytics/sql/query-single-csv-file
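
The 1-based FIRSTROW behavior described above can be illustrated with a small Python sketch. This is not how the serverless SQL pool is implemented. The function name `read_from_first_row` and the sample data are hypothetical and serve only to show that `firstrow = 2` skips a single header row.

```python
import csv
import io


def read_from_first_row(text: str, firstrow: int = 1) -> list:
    """Return parsed CSV rows starting at the 1-based row number `firstrow`."""
    if firstrow < 1:
        raise ValueError("firstrow is 1-based and must be >= 1")
    rows = list(csv.reader(io.StringIO(text)))
    # Row 1 is the first line of the file, so drop firstrow - 1 leading rows.
    return rows[firstrow - 1:]


# Hypothetical sample file: one header row followed by two data rows.
sample = "fare_id,amount\n1,2.50\n2,3.75\n"

all_rows = read_from_first_row(sample)         # default of 1 keeps the header
data_rows = read_from_first_row(sample, 2)     # firstrow=2 skips the header
```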

Question #23

You have a SQL pool in Azure Synapse that contains a table named dbo.Customers. The table contains a column named Email.

You need to prevent nonadministrative users from seeing the full email addresses in the Email column. The users must see values in a format of [email protected] instead.

What should you do?

- A. From the Azure portal, set a mask on the Email column. (Most Voted)

- B. From the Azure portal, set a sensitivity classification of Confidential for the Email column.

- C. From Microsoft SQL Server Management Studio, set an email mask on the Email column.

- D. From Microsoft SQL Server Management Studio, grant the SELECT permission to the users for all the columns in the dbo.Customers table except Email.

Correct Answer: C

The Email masking method exposes the first letter and replaces the domain with XXX.com, using a constant string prefix in the form of an email address.

Example: [email protected]

Incorrect:

Not A: The Email mask cannot be set from the Azure portal for Azure Synapse (use PowerShell or the REST API) or for SQL Managed Instance.

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/dynamic-data-masking-overview
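
As a rough illustration of what the email mask does to a value, the following Python sketch imitates its output. It is a simulation only: the real masking is applied by the database engine. The function name `mask_email` is hypothetical, and the output pattern `aXXX@XXXX.com` is an assumption taken from the example format in the SQL Server dynamic data masking documentation.

```python
def mask_email(address: str) -> str:
    """Imitate the email mask: keep the first character, hide everything else.

    In the database itself, the mask would be declared in T-SQL, for example:
    ALTER TABLE dbo.Customers
        ALTER COLUMN Email ADD MASKED WITH (FUNCTION = 'email()');
    """
    if not address:
        return address
    # Assumed output pattern: first letter, then a constant masked suffix.
    return f"{address[0]}XXX@XXXX.com"


# Hypothetical sample value.
masked = mask_email("alice@contoso.com")
```

Users without the UNMASK permission would see the masked form, while administrators continue to see the stored value.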

Question #24

You have an Azure Databricks workspace named workspace1 in the Standard pricing tier. Workspace1 contains an all-purpose cluster named cluster1.

You need to reduce the time it takes for cluster1 to start and scale up. The solution must minimize costs.

What should you do first?

- A. Upgrade workspace1 to the Premium pricing tier.

- B. Configure a global init script for workspace1.

- C. Create a pool in workspace1.

- D. Create a cluster policy in workspace1.

Correct Answer: C

You can use Databricks pools to speed up your data pipelines and scale clusters quickly.

Databricks pools are a managed cache of virtual machine instances that enables clusters to start and scale 4 times faster.

Reference:

https://databricks.com/blog/2019/11/11/databricks-pools-speed-up-data-pipelines.html

Question #25

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1.

You have files that are ingested and loaded into an Azure Data Lake Storage Gen2 container named container1.

You plan to insert data from the files into Table1 and transform the data. Each row of data in the files will produce one row in the serving layer of Table1.

You need to ensure that when the source data files are loaded to container1, the DateTime is stored as an additional column in Table1.

Solution: In an Azure Synapse Analytics pipeline, you use a Get Metadata activity that retrieves the DateTime of the files.

Does this meet the goal?

- A. Yes (Most Voted)

- B. No

Correct Answer: A

You can use the Get Metadata activity to retrieve the metadata of any data in Azure Data Factory or a Synapse pipeline. You can use the output from the Get Metadata activity in conditional expressions to perform validation, or consume the metadata in subsequent activities.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/control-flow-get-metadata-activity
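
As a hedged sketch of how such an activity might be described, the Python below builds a Get Metadata activity definition as a dictionary and the pipeline expression a later activity could use to read the file timestamp. The activity name `GetFileMetadata` and the dataset name `SourceFilesDataset` are hypothetical, and the assumption here is that the DateTime in the scenario corresponds to the `lastModified` field.

```python
import json


def build_get_metadata_activity(name: str, dataset: str) -> dict:
    """Return a pipeline activity definition that requests lastModified."""
    return {
        "name": name,
        "type": "GetMetadata",
        "typeProperties": {
            "dataset": {"referenceName": dataset, "type": "DatasetReference"},
            # Request only the file's last-modified timestamp.
            "fieldList": ["lastModified"],
        },
    }


def last_modified_expression(activity_name: str) -> str:
    """Build the expression a downstream activity can use to read the value."""
    return f"@activity('{activity_name}').output.lastModified"


# Hypothetical names, used for illustration only.
activity = build_get_metadata_activity("GetFileMetadata", "SourceFilesDataset")
expression = last_modified_expression(activity["name"])
print(json.dumps(activity, indent=2))
print(expression)
```

A subsequent copy or data flow step could then map the expression's value to the additional column in Table1.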

Question #26

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1.

You have files that are ingested and loaded into an Azure Data Lake Storage Gen2 container named container1.

You plan to insert data from the files into Table1 and transform the data. Each row of data in the files will produce one row in the serving layer of Table1.

You need to ensure that when the source data files are loaded to container1, the DateTime is stored as an additional column in Table1.

Solution: You use an Azure Synapse Analytics serverless SQL pool to create an external table that has an additional DateTime column.

Does this meet the goal?

- A. Yes

- B. No (Most Voted)

Correct Answer: B

This is not about an external table.

Instead, in an Azure Synapse Analytics pipeline, you use a Get Metadata activity that retrieves the DateTime of the files.

Note: You can use the Get Metadata activity to retrieve the metadata of any data in Azure Data Factory or a Synapse pipeline. You can use the output from the Get Metadata activity in conditional expressions to perform validation, or consume the metadata in subsequent activities.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/control-flow-get-metadata-activity

Question #27

Note: This question is part of a series of questions that present the same scenario. Each question in the series contains a unique solution that might meet the stated goals. Some question sets might have more than one correct solution, while others might not have a correct solution.

After you answer a question in this section, you will NOT be able to return to it. As a result, these questions will not appear in the review screen.

You have an Azure Synapse Analytics dedicated SQL pool that contains a table named Table1.

You have files that are ingested and loaded into an Azure Data Lake Storage Gen2 container named container1.

You plan to insert data from the files into Table1 and transform the data. Each row of data in the files will produce one row in the serving layer of Table1.

You need to ensure that when the source data files are loaded to container1, the DateTime is stored as an additional column in Table1.

Solution: You use a dedicated SQL pool to create an external table that has an additional DateTime column.

Does this meet the goal?

- A. Yes

- B. No

Correct Answer: B

Instead, in an Azure Synapse Analytics pipeline, you use a Get Metadata activity that retrieves the DateTime of the files.

Note: You can use the Get Metadata activity to retrieve the metadata of any data in Azure Data Factory or a Synapse pipeline. You can use the output from the Get Metadata activity in conditional expressions to perform validation, or consume the metadata in subsequent activities.

Reference:

https://docs.microsoft.com/en-us/azure/data-factory/control-flow-get-metadata-activity

Question #28

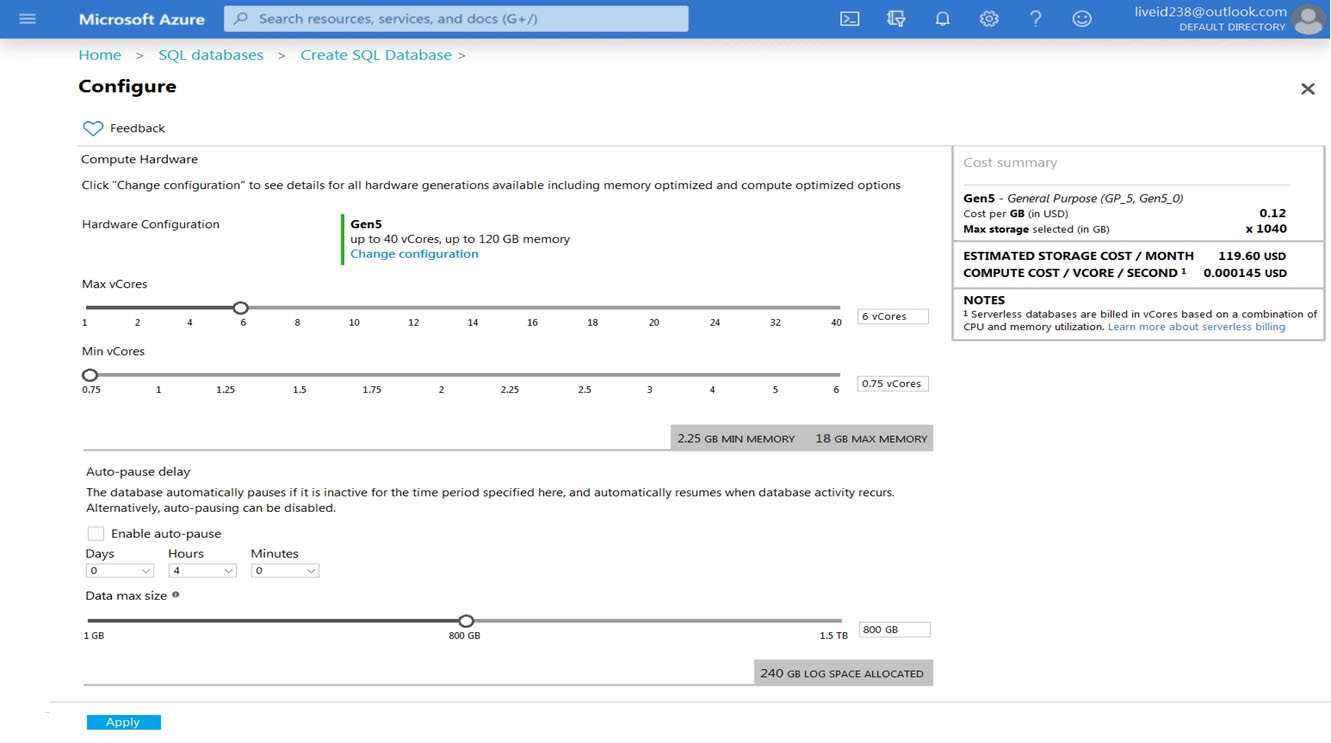

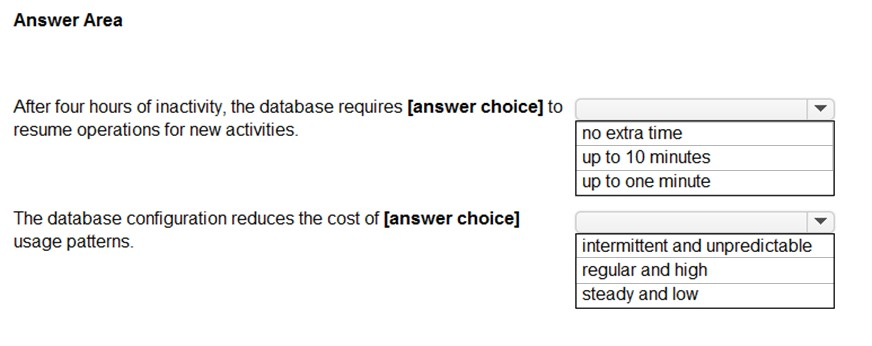

HOTSPOT -

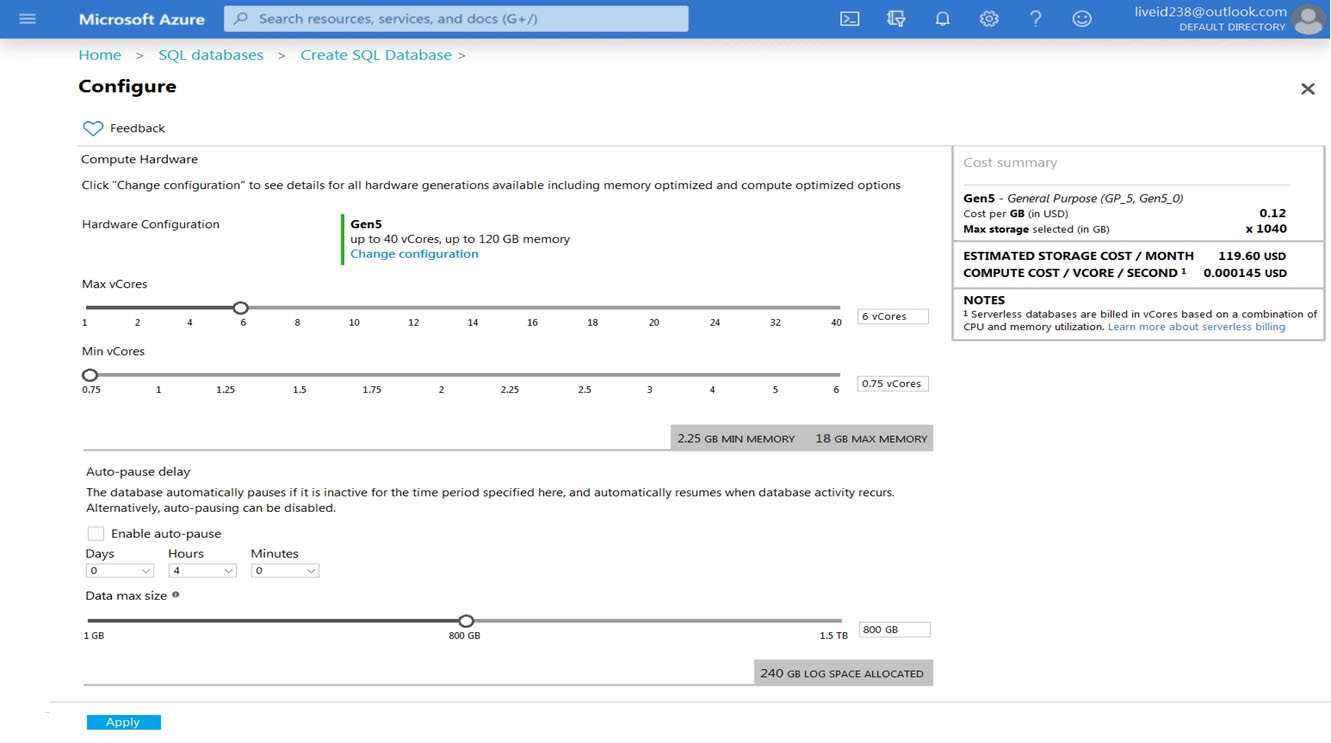

You are provisioning an Azure SQL database in the Azure portal as shown in the following exhibit.

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the graphic.

NOTE: Each correct selection is worth one point.

Hot Area:

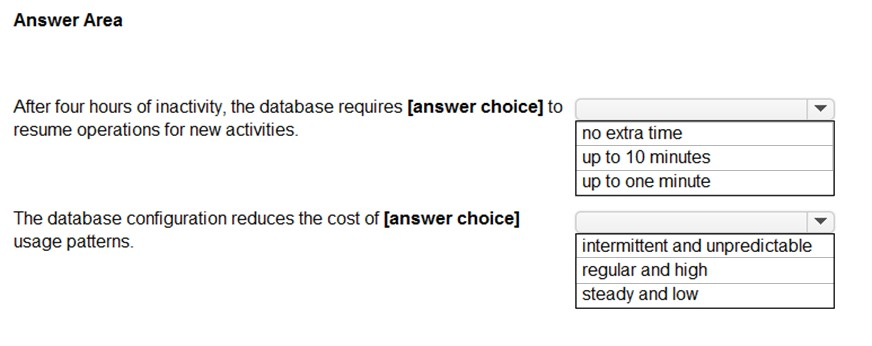

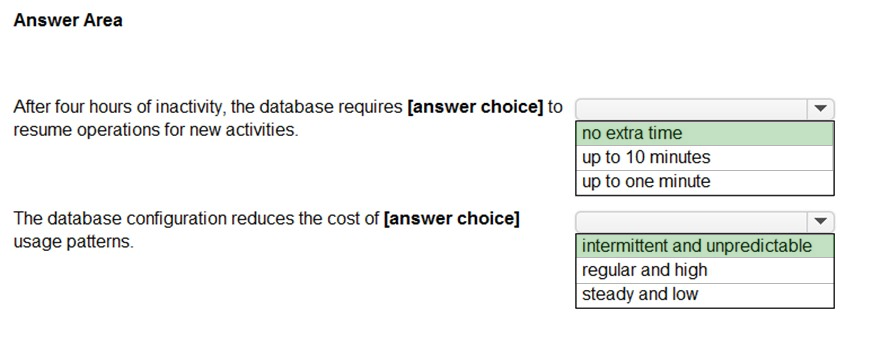

Correct Answer:

Box 1: no extra time

Auto Pause is not checked in the exhibit.

Note: If Auto Pause is checked, the correct answer is: up to one minute.

Box 2: intermittent and unpredictable

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/serverless-tier-overview

Question #29

You plan to deploy an app that includes an Azure SQL database and an Azure web app. The app has the following requirements:

✑ The web app must be hosted on an Azure virtual network.

✑ The Azure SQL database must be assigned a private IP address.

✑ The Azure SQL database must allow connections only from a specific virtual network.

You need to recommend a solution that meets the requirements.

What should you include in the recommendation?

- A. Azure Private Link (Most Voted)

- B. a network security group (NSG)

- C. a database-level firewall

- D. a server-level firewall

Correct Answer: A

Private Link allows you to connect to various PaaS services in Azure via a private endpoint.

A private endpoint is a private IP address within a specific VNet and subnet.

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/private-endpoint-overview

Question #30

You are planning a solution that will use Azure SQL Database. Usage of the solution will peak from October 1 to January 1 each year.

During peak usage, the database will require the following:

✑ 24 cores

✑ 500 GB of storage

✑ 124 GB of memory

✑ More than 50,000 IOPS

During periods of off-peak usage, the service tier of Azure SQL Database will be set to Standard.

Which service tier should you use during peak usage?

- A. Business Critical (Most Voted)

- B. Premium

- C. Hyperscale

Correct Answer: A

Reference:

https://docs.microsoft.com/en-us/azure/azure-sql/database/resource-limits-vcore-single-databases#business-critical---provisioned-compute---gen4
