Microsoft 70-475 Exam Practice Questions (P. 4)

Question #16

You are designing a solution that will use Apache HBase on Microsoft Azure HDInsight.

You need to design the row keys for the database to ensure that client traffic is directed over all of the nodes in the cluster.

What are two possible techniques that you can use? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- A. padding

- B. trimming

- C. hashing

- D. salting

Correct Answer:

CD

There are two strategies that you can use to avoid hotspotting:

* Hashing keys

To spread write and insert activity across the cluster, you can randomize sequentially generated keys by hashing the keys or by inverting the byte order. Note that these strategies come with trade-offs. Hashing keys, for example, makes table scans for key subranges inefficient, since the subrange is spread across the cluster.

* Salting keys

Instead of hashing the key, you can salt the key by prepending a few bytes of the hash of the key to the actual key.

Note: Pre-split, salted Apache HBase tables are a proven solution for distributing workload uniformly across RegionServers and preventing hot spots during bulk writes. In this design, a row key consists of a salt followed by the logical key. One way to generate the salt is to take the hash code of the logical row key (date, etc.) modulo n, where n is the number of regions.
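The two strategies above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not HBase client code: the function names, the region count of 8, the one-byte salt, and the use of MD5 as the hash are all hypothetical choices made for the example.

```python
import hashlib

NUM_REGIONS = 8  # assumed number of pre-split regions (hypothetical value)


def stable_hash(logical_key: str) -> int:
    """Deterministic integer hash of the logical key (MD5 is an arbitrary choice here)."""
    digest = hashlib.md5(logical_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


def hashed_row_key(logical_key: str) -> bytes:
    """Hashing: replace the key with its hash, so sequential keys land far apart."""
    return hashlib.md5(logical_key.encode("utf-8")).digest()


def salted_row_key(logical_key: str, num_regions: int = NUM_REGIONS) -> bytes:
    """Salting: prepend one byte, hash(key) mod num_regions, to the logical key."""
    salt = stable_hash(logical_key) % num_regions
    return bytes([salt]) + logical_key.encode("utf-8")


if __name__ == "__main__":
    # Sequential, date-like keys would all hit one region if used unmodified.
    for day in range(1, 6):
        key = f"2015-06-{day:02d}"
        print(key, salted_row_key(key))
```

Because the salted key still ends with the logical key, the original value can be recovered by dropping the first byte, whereas the hashed key cannot be reversed. In both cases a range scan over logical keys has to touch every region.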

Reference:

https://blog.cloudera.com/blog/2015/06/how-to-scan-salted-apache-hbase-tables-with-region-specific-key-ranges-in-mapreduce/

http://maprdocs.mapr.com/51/MapR-DB/designing_row_keys_for_mapr_db_binary_tables.html

Question #17

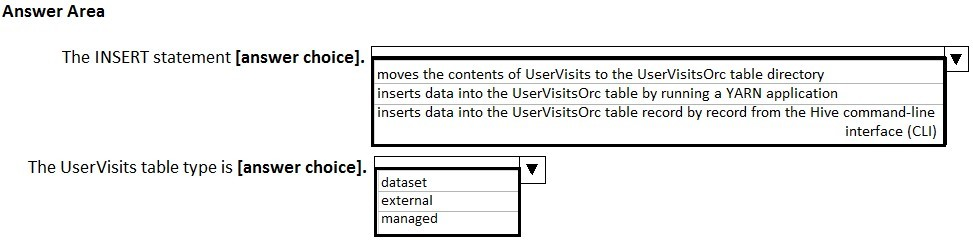

HOTSPOT -

You have the following script.

CREATE TABLE UserVisits (username string, url string, time date)
STORED AS TEXTFILE LOCATION "wasb:///Logs";

CREATE TABLE UserVisitsOrc (username string, url string, time date)
STORED AS ORC;

INSERT INTO TABLE UserVisitsOrc SELECT * FROM UserVisits

Use the drop-down menus to select the answer choice that completes each statement based on the information presented in the script.

NOTE: Each correct selection is worth one point.

Hot Area:

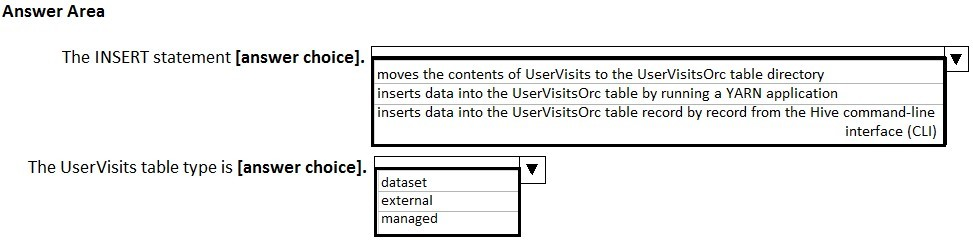

Correct Answer:

A table created without the EXTERNAL clause is called a managed table because Hive manages its data.

Reference: https://cwiki.apache.org/confluence/display/Hive/LanguageManual+DDL

Question #18

A company named Fabrikam, Inc. has a Microsoft Azure web app. Billions of users visit the app daily.

The web app logs all user activity by using text files in Azure Blob storage. Each day, approximately 200 GB of text files are created.

Fabrikam uses the log files from an Apache Hadoop cluster on Azure HDInsight.

You need to recommend a solution to optimize the storage of the log files for later Hive use.

What is the best property to recommend adding to the Hive table definition to achieve the goal? More than one answer choice may achieve the goal. Select the BEST answer.

- A. STORED AS RCFILE

- B. STORED AS GZIP

- C. STORED AS ORC

- D. STORED AS TEXTFILE

Correct Answer:

C

The Optimized Row Columnar (ORC) file format provides a highly efficient way to store Hive data. It was designed to overcome limitations of the other Hive file formats. Using ORC files improves performance when Hive is reading, writing, and processing data.

Compared with RCFile format, for example, ORC file format has many advantages such as:

✑ a single file as the output of each task, which reduces the NameNode's load

✑ Hive type support including datetime, decimal, and the complex types (struct, list, map, and union)

✑ light-weight indexes stored within the file

✑ skip row groups that don't pass predicate filtering

✑ seek to a given row

✑ block-mode compression based on data type

✑ run-length encoding for integer columns

✑ dictionary encoding for string columns

✑ concurrent reads of the same file using separate RecordReaders

✑ ability to split files without scanning for markers

✑ bound the amount of memory needed for reading or writing

✑ metadata stored using Protocol Buffers, which allows addition and removal of fields
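Two of the items in the list above, run-length encoding for integer columns and dictionary encoding for string columns, can be illustrated with a minimal Python sketch. This shows only the general idea of each encoding; it is not ORC's actual on-disk format, and the function names are made up for the example.

```python
from itertools import groupby


def run_length_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(value, len(list(group))) for value, group in groupby(values)]


def dictionary_encode(strings):
    """Replace each string with an index into a list of distinct values."""
    dictionary = []   # distinct strings in first-seen order
    index_of = {}     # string -> position in `dictionary`
    codes = []        # one small integer per input row
    for s in strings:
        if s not in index_of:
            index_of[s] = len(dictionary)
            dictionary.append(s)
        codes.append(index_of[s])
    return dictionary, codes


if __name__ == "__main__":
    print(run_length_encode([7, 7, 7, 8, 8, 7]))
    print(dictionary_encode(["/home", "/cart", "/home", "/home"]))
```

Both encodings pay off on columns with many repeated values, which is typical of log data such as URLs or status codes.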

Reference: https://cwiki.apache.org/confluence/display/Hive/LanguageManual+ORC#LanguageManualORC-ORCFileFormat

Question #19

You have structured data that resides in Microsoft Azure Blob storage.

You need to perform a rapid interactive analysis of the data and to generate visualizations of the data.

What is the best type of Azure HDInsight cluster to use to achieve the goal? More than one answer choice may achieve the goal. Choose the BEST answer.

- A. Apache Storm

- B. Apache HBase

- C. Apache Hadoop

- D. Apache Spark

Correct Answer:

D

A Spark cluster provides in-memory processing, interactive queries, and micro-batch stream processing.

Reference: https://docs.microsoft.com/en-us/azure/hdinsight/hdinsight-hadoop-provision-linux-clusters

Question #20

You are designing a solution based on the lambda architecture.

You need to recommend which technology to use for the serving layer.

What should you recommend?

- A. Apache Storm

- B. Kafka

- C. Microsoft Azure DocumentDB

- D. Apache Hadoop

Correct Answer:

C

The serving layer must answer a single query by drawing on two or more databases, processing platforms, and data stores. Apache Druid is one example of a cluster-based tool that can combine the batch and speed layers into a single answerable request. Of the options listed, Azure DocumentDB is the only low-latency queryable database; Apache Storm and Kafka handle stream processing and ingestion, and Apache Hadoop handles batch processing.
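As a rough sketch of what "a single answerable request" means, the Python below merges a precomputed batch view with a speed-layer view at query time. The page-view counting scenario, the in-memory dictionaries standing in for the two stores, and the function name are all assumptions made for illustration; a real serving layer would query a database instead.

```python
def serve_page_views(url, batch_view, realtime_view):
    """Answer one query by combining the batch view with the speed-layer view.

    batch_view:    counts computed by the batch layer up to its last run
    realtime_view: counts accumulated by the speed layer since that run
    """
    return batch_view.get(url, 0) + realtime_view.get(url, 0)


if __name__ == "__main__":
    batch_view = {"/home": 100}               # hypothetical batch-layer output
    realtime_view = {"/home": 3, "/new": 1}   # hypothetical speed-layer output
    print(serve_page_views("/home", batch_view, realtime_view))
```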

Reference: https://en.wikipedia.org/wiki/Lambda_architecture
