Microsoft 70-331 Exam Practice Questions (P. 5)

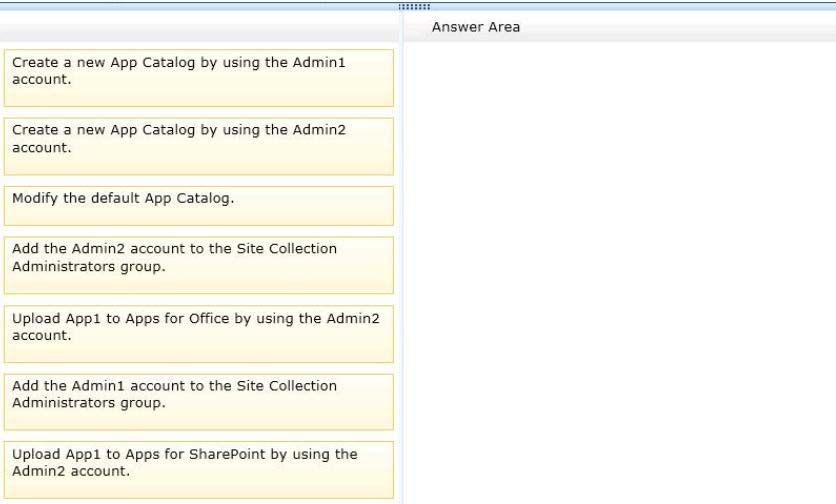

Question #21

DRAG DROP -

You are managing a SharePoint farm. A user account named Admin1 is a member of the Farm Administrators group.

A domain user account named Admin2 will manage a third-party SharePoint app, named App1, and the App Catalog in which it will reside.

You need to make App1 available to users.

Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Select and Place:

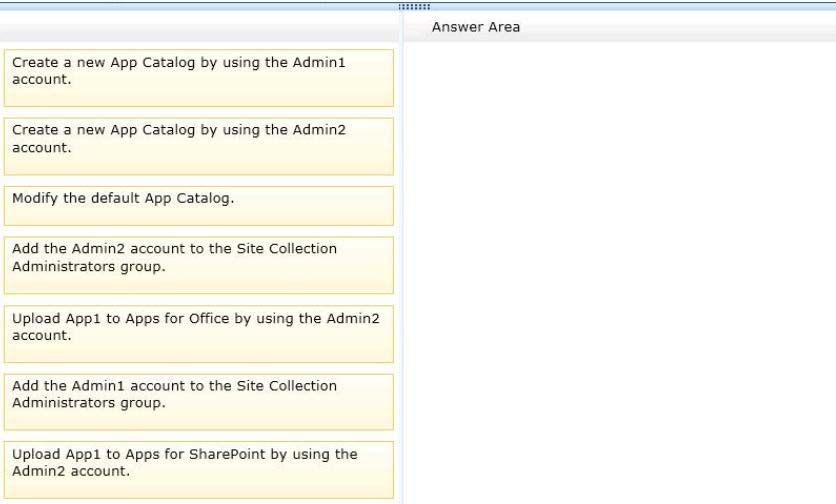

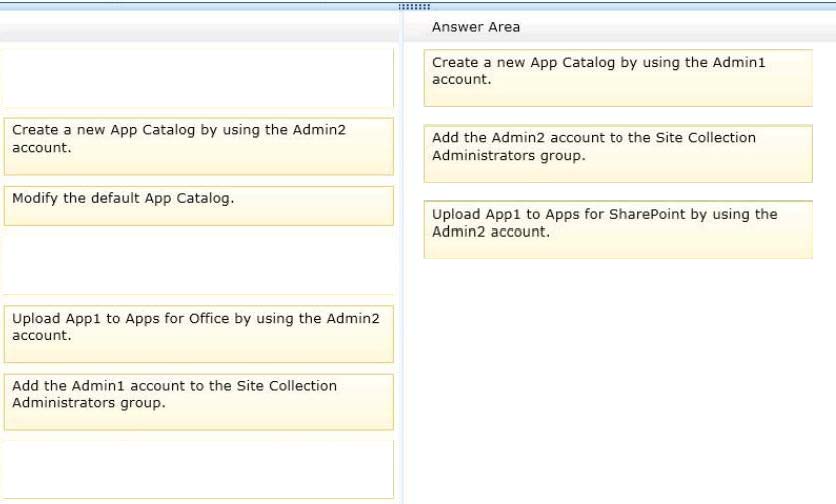

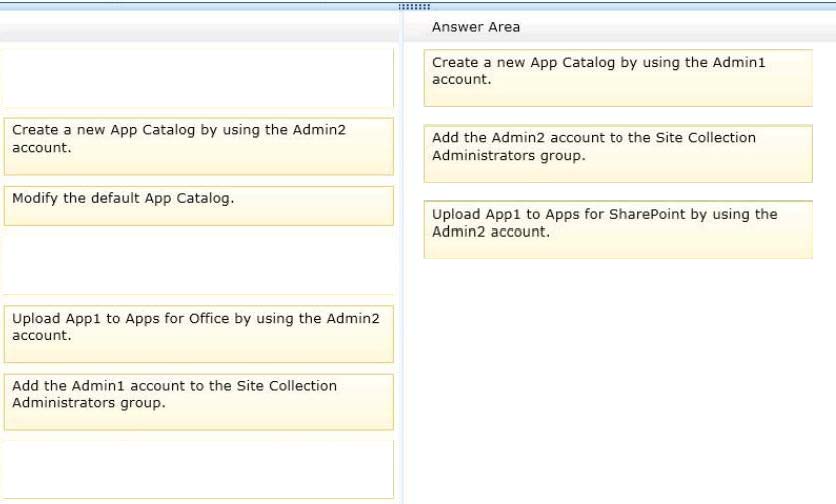

Correct Answer:

Note:

* SharePoint 2013 introduces a Cloud App Model that enables you to create apps. Apps for SharePoint are self-contained pieces of functionality that extend the capabilities of a SharePoint website.

* Incorrect: The apps for Office platform lets you create engaging new consumer and enterprise experiences that run within supported Office 2013 applications.

Question #22

DRAG DROP -

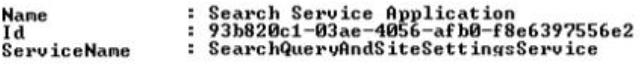

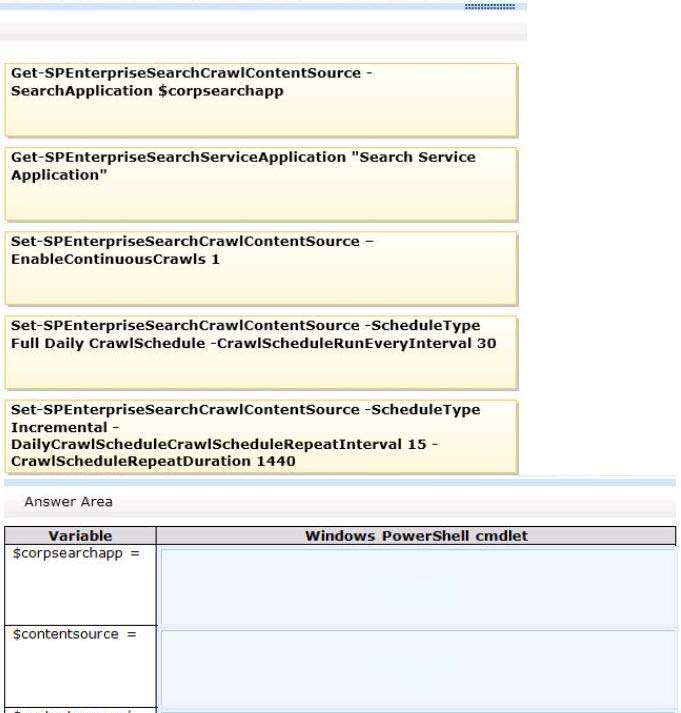

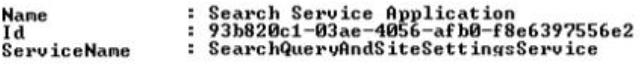

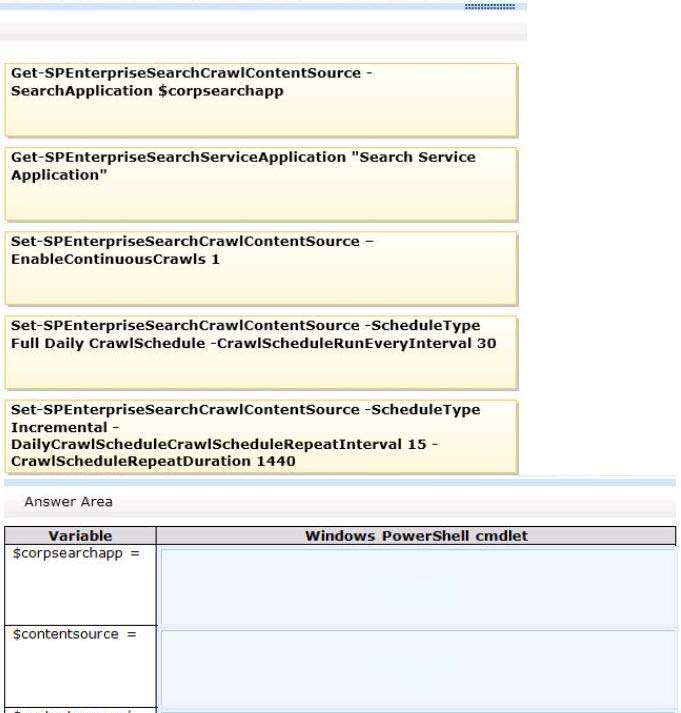

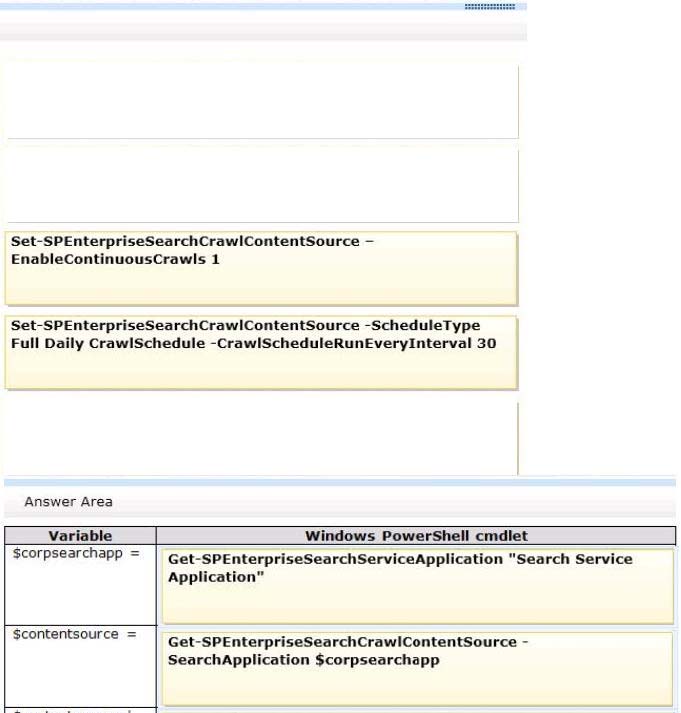

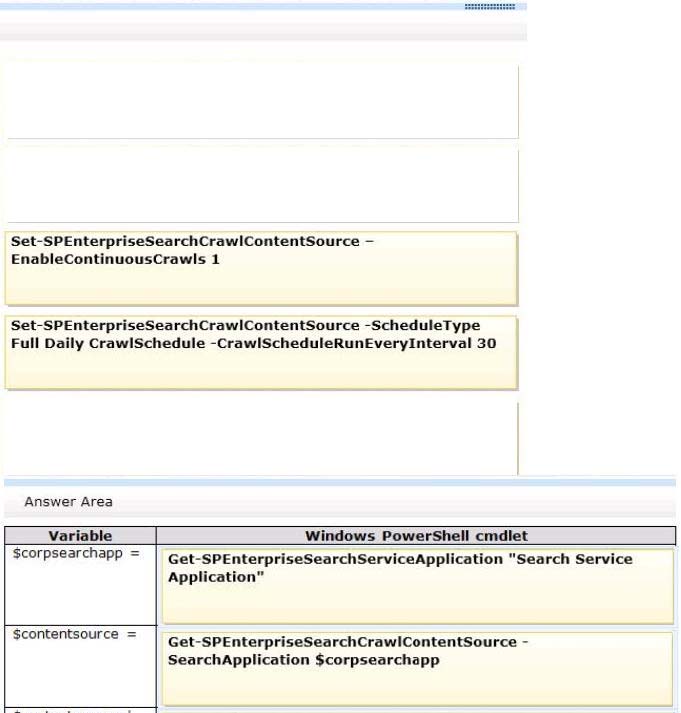

A SharePoint environment includes an enterprise search application. You are configuring the search application crawl schedule for a specific farm.

You plan to configure the crawl schedule at set intervals of 15 minutes on a continuous basis. The relevant information for the farm is shown in the following graphic.

You need to ensure that search results are fresh and up-to-date for all SharePoint sites in the environment.

Which Windows PowerShell cmdlets should you run? (To answer, drag the appropriate cmdlets to the correct variable or variables in the answer area. Each cmdlet may be used once, more than once, or not at all. You may need to drag the split bar between panes or scroll to view content.)

Select and Place:

Correct Answer:

* Get-SPEnterpriseSearchServiceApplication

Returns the search service application for a farm.

------------------EXAMPLE------------------

$ssa = Get-SPEnterpriseSearchServiceApplication -Identity MySSA

This example obtains a reference to a search service application named MySSA.

Example2:

-----------------EXAMPLE------------------

$searchapp = Get-SPEnterpriseSearchServiceApplication "SearchApp1"

$contentsource = Get-SPEnterpriseSearchCrawlContentSource -SearchApplication $searchapp -Identity "Local SharePoint Sites"

$contentsource.StartFullCrawl()

This example retrieves the default content source for the search service application, SearchApp1, and starts a full crawl on the content source.

* Set-SPEnterpriseSearchCrawlContentSource

Sets the properties of a crawl content source for a Search service application.

This cmdlet contains more than one parameter set. You may only use parameters from one parameter set, and you may not combine parameters from different parameter sets. For more information about how to use parameter sets, see Cmdlet Parameter Sets. The Set-SPEnterpriseSearchCrawlContentSource cmdlet updates the rules of a crawl content source when the search functionality is initially configured and after any new content source is added. This cmdlet is called once to set the incremental crawl schedule for a content source, and it is called again to set a full crawl schedule.

* Incorrect: Get-SPEnterpriseSearchCrawlContentSource

Returns a crawl content source.

The Get-SPEnterpriseSearchCrawlContentSource cmdlet reads the content source when the rules of the content source are created, updated, or deleted, or reads a CrawlContentSource object when the search functionality is initially configured and after any new content source is added.

Reference: Set-SPEnterpriseSearchCrawlContentSource
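The two-call pattern described above for Set-SPEnterpriseSearchCrawlContentSource (one call for the incremental schedule, one for the full schedule) might look like the sketch below. It is an illustration, not the exam's answer. It assumes the farm has a single Search service application and that the content source keeps the default name "Local SharePoint Sites". The 15-minute interval comes from the question; the daily schedule, the 1440-minute repeat duration, and the 7-day full crawl interval are illustrative choices.

```powershell
# Run in the SharePoint 2013 Management Shell on a farm server.
# Assumption: the farm has exactly one Search service application.
$ssa = Get-SPEnterpriseSearchServiceApplication

# Assumption: the content source uses the default name.
$cs = Get-SPEnterpriseSearchCrawlContentSource -SearchApplication $ssa -Identity "Local SharePoint Sites"

# First call: incremental crawl repeated every 15 minutes for the whole day (1440 minutes).
$cs | Set-SPEnterpriseSearchCrawlContentSource -ScheduleType Incremental -DailyCrawlSchedule -CrawlScheduleRepeatInterval 15 -CrawlScheduleRepeatDuration 1440

# Second call: full crawl schedule (illustrative value: every 7 days).
$cs | Set-SPEnterpriseSearchCrawlContentSource -ScheduleType Full -DailyCrawlSchedule -CrawlScheduleRunEveryInterval 7
```

Each call uses parameters from the daily schedule parameter set only, in line with the rule that parameters from different parameter sets cannot be combined.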

---------------------------------------------------------------------------------------------------------------------------------------------- gerfield01 (DE, 15.07.15):

The given answer is wrong; it must be set to the following values:

$corpsearchapp = Get-SPEnterpriseSearchServiceApplication "Search Service Application"

$contentsource = Get-SPEnterpriseSearchCrawlContentSource -SearchApplication $corpsearchapp ""

$contentsource | Set-SPEnterpriseSearchCrawlContentSource -EnableContinuousCrawls 1

If you enable continuous crawling, you do not configure incremental or full crawls.

When you select the option to crawl continuously, the content source is crawled every 15 minutes.

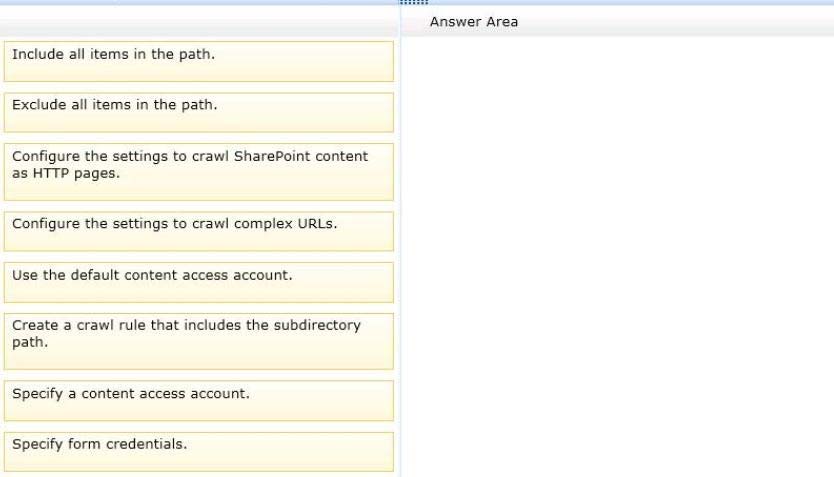

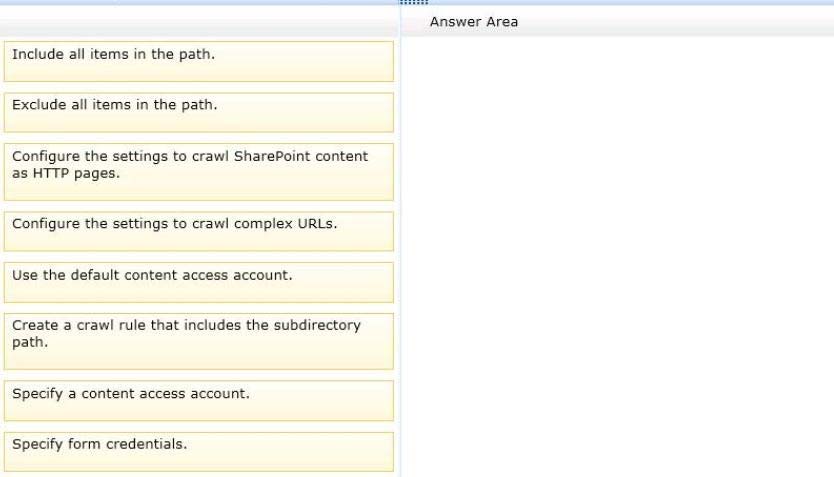

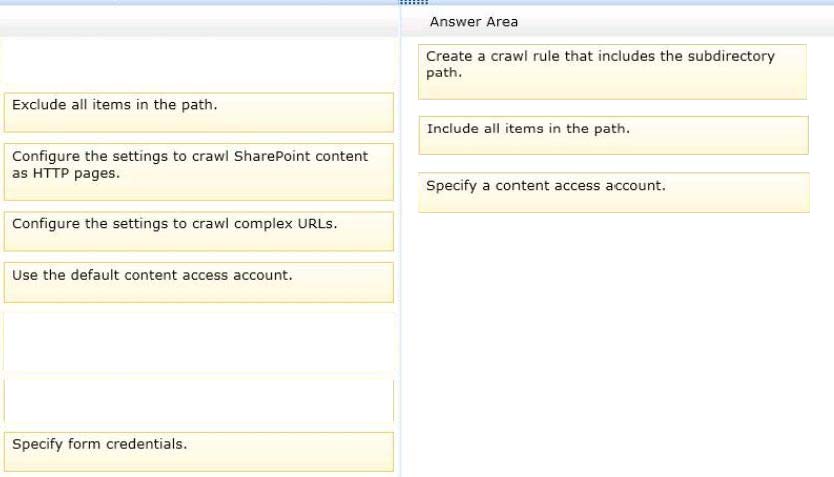

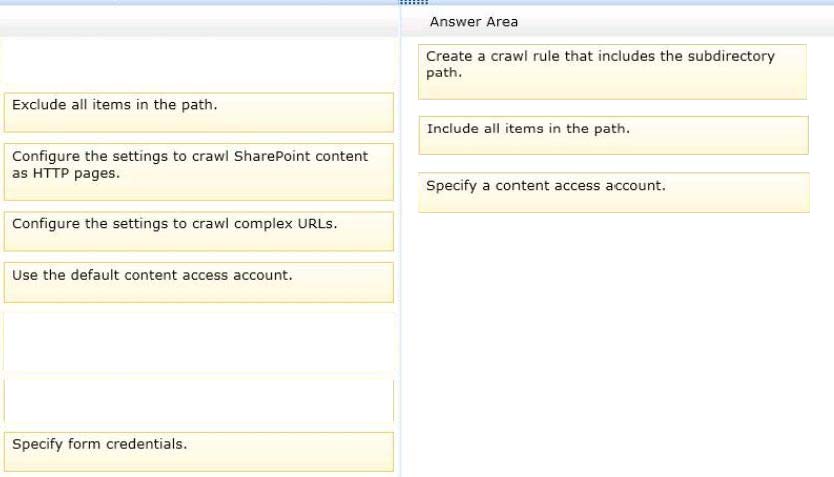

Question #23

DRAG DROP -

You are managing a SharePoint search topology.

An external identity management system handles all user authentication.

SharePoint is not indexing some subdirectories of a public SharePoint site.

You need to ensure that SharePoint indexes the specific subdirectories.

Which three actions should you perform in sequence? (To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.)

Select and Place:

Correct Answer:

The default content access account is the account used to crawl content. You can override it by specifying a different account in a crawl rule.

Learn how to specify a content access account, create crawl rules to include or exclude directories, and prioritize the order of crawl rules.

You can add a crawl rule to include or exclude specific paths when you crawl content. When you include a path, you can optionally provide alternative account credentials to crawl it. In addition to creating or editing crawl rules, you can test, delete, or reorder existing crawl rules.

Before end-users can use search functionality in SharePoint 2013, you must crawl or federate the content that you want to make available for users to search.

Effective search depends on well-planned content sources, connectors, file types, crawl rules, authentication, and federation.

Plan crawl rules to optimize crawls

Crawl rules apply to all content sources in the Search service application. You can apply crawl rules to a particular URL or set of URLs to do the following things:

- Avoid crawling irrelevant content by excluding one or more URLs. This also helps reduce the use of server resources and network traffic.

- Crawl links on the URL without crawling the URL itself. This option is useful for sites that have links to relevant content when the page that contains the links does not contain relevant information.

- Enable complex URLs to be crawled. This option directs the system to crawl URLs that contain a query parameter specified with a question mark. Depending upon the site, these URLs might not include relevant content. Because complex URLs can often redirect to irrelevant sites, it is a good idea to enable this option only on sites where you know that the content available from complex URLs is relevant.

- Enable content on SharePoint sites to be crawled as HTTP pages. This option enables the system to crawl SharePoint sites that are behind a firewall or in scenarios in which the site being crawled restricts access to the Web service that is used by the crawler.

- Specify whether to use the default content access account, a different content access account, or a client certificate for crawling the specified URL.

Reference: https://technet.microsoft.com/en-us/library/jj219577.aspx
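The crawl rule options described above can also be set from PowerShell. The following is a minimal sketch, assuming a single Search service application in the farm; the path is a hypothetical placeholder, and enabling complex URLs and HTTP crawling is shown only to illustrate the options, not because this scenario necessarily requires them.

```powershell
# Run in the SharePoint 2013 Management Shell on a farm server.
# Assumption: the farm has exactly one Search service application.
$ssa = Get-SPEnterpriseSearchServiceApplication

# Hypothetical path; substitute the subdirectory that is not being indexed.
$path = "http://intranet.contoso.example/sites/public/docs/*"

# Create an inclusion rule for the path. The two Boolean switches map to the
# "complex URLs" and "crawl as HTTP pages" options described above.
New-SPEnterpriseSearchCrawlRule -SearchApplication $ssa -Path $path -Type InclusionRule -FollowComplexUrls $true -CrawlAsHttp $true

# List the existing crawl rules to review them.
Get-SPEnterpriseSearchCrawlRule -SearchApplication $ssa
```

A new rule takes effect on the next crawl of the affected content, so a crawl has to run before the subdirectories show up in search results.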

Question #24

A company is planning to upgrade from SharePoint 2010 to SharePoint 2013.

You need to find out the web traffic capacity of the SharePoint farm by using a Microsoft Visual Studio Team System project file.

Which tool should you use?

- A. Network Monitor

- B. SharePoint Health Analyzer

- C. SharePoint Diagnostic Studio

- D. Load Testing Kit (LTK)

Correct Answer:

D

Question #25

You install SharePoint Server in a three-tiered server farm that meets the minimum requirements for SharePoint 2013 and surrounding technologies. The content databases will reside on Fibre Channel drives in a storage area network (SAN). The backup solution will utilize SAN snapshots.

You are estimating storage requirements. You plan to migrate 6 TB of current content from file shares to SharePoint.

You need to choose the content database size that will optimize performance, minimize administrative overhead, and minimize the number of content databases.

Which content database size should you choose?

- A. 100 GB

- B. 200 GB

- C. 3 TB

- D. 6 TB

Correct Answer:

C

Microsoft recommends limiting content databases to 200 GB. However, the main reason for this limit is ease of management when backing up and restoring content databases with SQL Server backups. In this question, backups are handled by SAN snapshots, so that is not an issue. A 200 GB database size would also mean 30 content databases and therefore at least 30 site collections. This does not meet the requirements of minimizing administrative overhead or minimizing the number of content databases. Two 3 TB databases do meet those requirements. Databases over 4 TB are not recommended, which excludes answer D.
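The database counts behind this reasoning can be checked with a few lines of PowerShell. This is only a sketch of the arithmetic: it assumes decimal units (1 TB = 1000 GB), which is what reproduces the figure of 30 databases quoted above, and the option labels are taken from the answer choices.

```powershell
# Assumption: decimal units (1 TB = 1000 GB), matching the "30 databases" figure in the explanation.
$totalGB = 6000

# Candidate content database sizes in GB, keyed by answer option.
$candidates = [ordered]@{
    'A (100 GB)' = 100
    'B (200 GB)' = 200
    'C (3 TB)'   = 3000
    'D (6 TB)'   = 6000
}

foreach ($option in $candidates.Keys) {
    # Round up: a partly filled database still counts as one database.
    $count = [math]::Ceiling($totalGB / $candidates[$option])
    "{0}: {1} content database(s)" -f $option, $count
}
```

Under these assumptions the counts are 60, 30, 2, and 1 for options A through D. Option D gives the fewest databases but exceeds the 4 TB guideline, which leaves two 3 TB databases as the smallest count that stays within it.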
