Databricks Certified Data Engineer Professional Exam Practice Questions (P. 5)

Question #41

The DevOps team has configured a production workload as a collection of notebooks scheduled to run daily using the Jobs UI. A new data engineering hire is onboarding to the team and has requested access to one of these notebooks to review the production logic.

What are the maximum notebook permissions that can be granted to the user without allowing accidental changes to production code or data?

Question #42

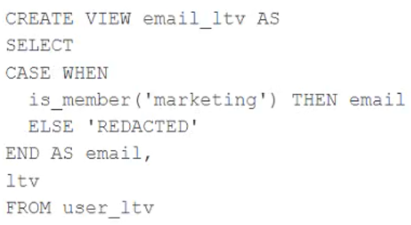

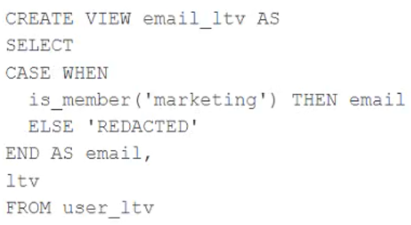

A table named user_ltv is being used to create a view that will be used by data analysts on various teams. Users in the workspace are configured into groups, which are used for setting up data access using ACLs.

The user_ltv table has the following schema:

email STRING, age INT, ltv INT

The following view definition is executed:

An analyst who is not a member of the marketing group executes the following query:

SELECT * FROM email_ltv

Which statement describes the results returned by this query?

- A. Three columns will be returned, but one column will be named "REDACTED" and contain only null values.

- B. Only the email and ltv columns will be returned; the email column will contain all null values.

- C. The email and ltv columns will be returned with the values in user_ltv.

- D. The email, age, and ltv columns will be returned with the values in user_ltv.

- E. Only the email and ltv columns will be returned; the email column will contain the string "REDACTED" in each row. (Most Voted)

Correct Answer:

E
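
The view definition appears only as an image, so the exact SQL is not reproduced here. Answer E is consistent with a dynamic view that selects email and ltv (omitting age) and masks email with a group-membership check such as `CASE WHEN is_member('marketing') THEN email ELSE 'REDACTED' END AS email`. The plain-Python sketch below mimics that assumed per-row logic; the function `email_ltv_view` and the sample rows are hypothetical.

```python
from typing import Dict, List

# Hypothetical rows matching the user_ltv schema: email STRING, age INT, ltv INT
USER_LTV: List[Dict[str, object]] = [
    {"email": "a@example.com", "age": 34, "ltv": 120},
    {"email": "b@example.com", "age": 51, "ltv": 310},
]


def email_ltv_view(rows, user_groups):
    """Mimic an assumed dynamic view: keep email and ltv, drop age,
    and mask email unless the caller belongs to the marketing group."""
    in_marketing = "marketing" in user_groups  # stands in for is_member('marketing')
    return [
        {
            "email": row["email"] if in_marketing else "REDACTED",
            "ltv": row["ltv"],
        }
        for row in rows
    ]


# An analyst who is not in the marketing group
result = email_ltv_view(USER_LTV, user_groups={"analysts"})
print(result)  # [{'email': 'REDACTED', 'ltv': 120}, {'email': 'REDACTED', 'ltv': 310}]
```

The age column never appears because the view does not select it, while ltv passes through unchanged for every caller.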

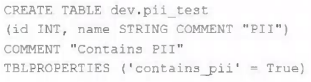

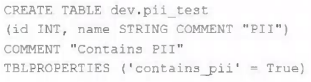

Question #43

The data governance team has instituted a requirement that all tables containing Personally Identifiable Information (PII) must be clearly annotated. This includes adding column comments, table comments, and setting the custom table property "contains_pii" = true.

The following SQL DDL statement is executed to create a new table:

Which command allows manual confirmation that these three requirements have been met?

- A. DESCRIBE EXTENDED dev.pii_test (Most Voted)

- B. DESCRIBE DETAIL dev.pii_test

- C. SHOW TBLPROPERTIES dev.pii_test

- D. DESCRIBE HISTORY dev.pii_test

- E. SHOW TABLES dev

Correct Answer:

A

Question #44

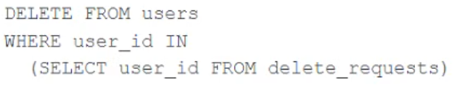

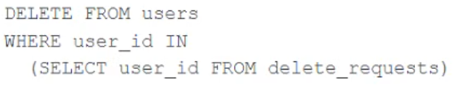

The data governance team is reviewing code used for deleting records for compliance with GDPR. They note the following logic is used to delete records from the Delta Lake table named users.

Assuming that user_id is a unique identifying key and that delete_requests contains all users that have requested deletion, which statement describes whether successfully executing the above logic guarantees that the records to be deleted are no longer accessible and why?

- A. Yes; Delta Lake ACID guarantees provide assurance that the DELETE command succeeded fully and permanently purged these records.

- B. No; the Delta cache may return records from previous versions of the table until the cluster is restarted.

- C. Yes; the Delta cache immediately updates to reflect the latest data files recorded to disk.

- D. No; the Delta Lake DELETE command only provides ACID guarantees when combined with the MERGE INTO command.

- E. No; files containing deleted records may still be accessible with time travel until a VACUUM command is used to remove invalidated data files. (Most Voted)

Correct Answer:

E
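
A Delta Lake DELETE commits a new table version whose transaction log no longer references the files holding the deleted rows, but those files stay in storage and remain readable through a `VERSION AS OF` time-travel query until VACUUM removes unreferenced files older than the retention threshold (7 days by default). The toy model below is a plain-Python sketch of that behavior, not Delta Lake's implementation; the class `ToyDeltaTable` and its methods are hypothetical, and `vacuum()` behaves like a zero-retention VACUUM, which Delta Lake permits only after disabling its retention-duration safety check.

```python
from typing import Dict, List, Set


class ToyDeltaTable:
    """Hypothetical toy model of a Delta table: each version lists the data
    files it references, and files stay in storage until vacuum() runs."""

    def __init__(self, rows: List[dict]) -> None:
        self.storage: Dict[str, List[dict]] = {"part-0": list(rows)}
        self.versions: List[Set[str]] = [{"part-0"}]
        self._next_file = 1  # counter for new data file names

    def read(self, version: int = -1) -> List[dict]:
        # Time travel: read the files referenced by the requested version.
        files = self.versions[version]
        return [row for name in sorted(files) for row in self.storage[name]]

    def delete(self, user_ids: Set[int]) -> None:
        # Rewrite surviving rows into a new file and commit a new version.
        # The old file is only dereferenced; it is not removed from storage.
        survivors = [r for r in self.read() if r["user_id"] not in user_ids]
        name = f"part-{self._next_file}"
        self._next_file += 1
        self.storage[name] = survivors
        self.versions.append({name})

    def vacuum(self) -> None:
        # Like a zero-retention VACUUM: physically delete every file
        # that the latest version does not reference.
        live = self.versions[-1]
        for name in list(self.storage):
            if name not in live:
                del self.storage[name]


users = ToyDeltaTable([{"user_id": 1}, {"user_id": 2}])
users.delete({1})
print(users.read())           # [{'user_id': 2}]
print(users.read(version=0))  # [{'user_id': 1}, {'user_id': 2}]  (deleted row still readable)
users.vacuum()
# users.read(version=0) now raises KeyError because part-0 was removed.
```

Only after the vacuum step is the deleted record physically gone, which is the distinction option E draws.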

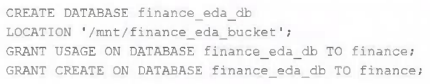

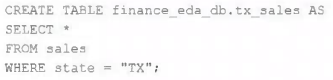

Question #45

An external object storage container has been mounted to the location /mnt/finance_eda_bucket.

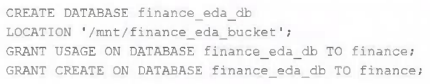

The following logic was executed to create a database for the finance team:

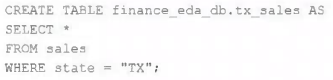

After the database was successfully created and permissions configured, a member of the finance team runs the following code:

If all users on the finance team are members of the finance group, which statement describes how the tx_sales table will be created?

- A. A logical table will persist the query plan to the Hive Metastore in the Databricks control plane.

- B. An external table will be created in the storage container mounted to /mnt/finance_eda_bucket.

- C. A logical table will persist the physical plan to the Hive Metastore in the Databricks control plane.

- D. A managed table will be created in the storage container mounted to /mnt/finance_eda_bucket. (Most Voted)

- E. A managed table will be created in the DBFS root storage container.

Correct Answer:

D

Question #46

Although the Databricks Utilities Secrets module provides tools to store sensitive credentials and avoid accidentally displaying them in plain text, users should still be careful about which credentials are stored there and which users have access to these secrets.

Which statement describes a limitation of Databricks Secrets?

- A. Because the SHA256 hash is used to obfuscate stored secrets, reversing this hash will display the value in plain text.

- B. Account administrators can see all secrets in plain text by logging on to the Databricks Accounts console.

- C. Secrets are stored in an administrators-only table within the Hive Metastore; database administrators have permission to query this table by default.

- D. Iterating through a stored secret and printing each character will display secret contents in plain text. (Most Voted)

- E. The Databricks REST API can be used to list secrets in plain text if the personal access token has proper credentials.

Correct Answer:

D
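
Databricks masks secret values in notebook output by matching the literal secret string and replacing it with `[REDACTED]`; output that never contains the full value as one contiguous string is not caught. In a notebook the leak would be a loop such as `for ch in dbutils.secrets.get(scope="my-scope", key="my-key"): print(ch)`, where the scope and key names are hypothetical. The sketch below imitates that string-matching behavior in plain Python; `redact` and the sample secret are hypothetical stand-ins, not the Databricks implementation.

```python
SECRET = "s3cr3t-token"  # hypothetical secret value


def redact(output: str, secret: str = SECRET) -> str:
    """Imitate notebook output redaction: replace exact matches of the secret."""
    return output.replace(secret, "[REDACTED]")


# Printing the whole value: the exact string appears, so it is masked.
print(redact(f"token={SECRET}"))  # token=[REDACTED]

# Printing one character per line: no single line contains the full secret,
# so nothing is masked and the value can be reassembled in plain text.
leaked_lines = [redact(ch) for ch in SECRET]
print("".join(leaked_lines))      # s3cr3t-token
```

Because the masking only inspects output text, the access controls on the secret scope remain the real protection.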

Question #47

Which statement is true regarding the retention of job run history?

- A. It is retained until you export or delete job run logs

- B. It is retained for 30 days, during which time you can deliver job run logs to DBFS or S3

- C. It is retained for 60 days, during which you can export notebook run results to HTML (Most Voted)

- D. It is retained for 60 days, after which logs are archived

- E. It is retained for 90 days or until the run-id is re-used through custom run configuration

Correct Answer:

C

Question #48

A data engineer, User A, has promoted a new pipeline to production by using the REST API to programmatically create several jobs. A DevOps engineer, User B, has configured an external orchestration tool to trigger job runs through the REST API. Both users authorized the REST API calls using their personal access tokens.

Which statement describes the contents of the workspace audit logs concerning these events?

- A. Because the REST API was used for job creation and triggering runs, a Service Principal will be automatically used to identify these events.

- B. Because User B last configured the jobs, their identity will be associated with both the job creation events and the job run events.

- C. Because these events are managed separately, User A will have their identity associated with the job creation events and User B will have their identity associated with the job run events.

- D. Because the REST API was used for job creation and triggering runs, user identity will not be captured in the audit logs.

- E. Because User A created the jobs, their identity will be associated with both the job creation events and the job run events. (Most Voted)

Correct Answer:

C

Question #49

A user new to Databricks is trying to troubleshoot long execution times for some pipeline logic they are working on. Presently, the user is executing code cell-by-cell, using display() calls to confirm code is producing the logically correct results as new transformations are added to an operation. To get a measure of average time to execute, the user is running each cell multiple times interactively.

Which of the following adjustments will get a more accurate measure of how code is likely to perform in production?

- A. Scala is the only language that can be accurately tested using interactive notebooks; because the best performance is achieved by using Scala code compiled to JARs, all PySpark and Spark SQL logic should be refactored.

- B. The only way to meaningfully troubleshoot code execution times in development notebooks is to use production-sized data and production-sized clusters with Run All execution.

- C. Production code development should only be done using an IDE; executing code against a local build of open source Spark and Delta Lake will provide the most accurate benchmarks for how code will perform in production.

- D. Calling display() forces a job to trigger, while many transformations will only add to the logical query plan; because of caching, repeated execution of the same logic does not provide meaningful results. (Most Voted)

- E. The Jobs UI should be leveraged to occasionally run the notebook as a job and track execution time during incremental code development because Photon can only be enabled on clusters launched for scheduled jobs.
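
Option D rests on two behaviors: transformations are lazy and only execute when an action such as display() triggers a job, and repeated runs of the same logic can be served from caches, so later timings understate a cold run. The plain-Python sketch below is an analogy, not Spark code: a generator stands in for a lazy query plan and `functools.lru_cache` stands in for caching. The names `slow_transform`, `run_job`, and `timed` are hypothetical.

```python
import functools
import time

calls = 0  # counts how many times the expensive step actually runs


def slow_transform(x: int) -> int:
    global calls
    calls += 1
    time.sleep(0.01)  # stand-in for expensive work
    return x * 2


# Lazy evaluation: defining the pipeline runs nothing yet.
pipeline = (slow_transform(x) for x in range(5))
print(calls)  # 0

# Consuming it (the analogue of an action such as display()) triggers the work.
values = list(pipeline)
print(calls)  # 5


@functools.lru_cache(maxsize=None)
def run_job(n: int) -> int:
    return sum(slow_transform(x) for x in range(n))


def timed(fn, *args) -> float:
    start = time.perf_counter()
    fn(*args)
    return time.perf_counter() - start


cold = timed(run_job, 20)  # does the work: 20 calls to slow_transform
warm = timed(run_job, 20)  # served from the cache: no new calls
print(calls)               # 25
print(cold > warm)         # True
```

Averaging the cold and warm timings here would badly understate the cost of a first, uncached run.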

Question #50

A production cluster has 3 executor nodes and uses the same virtual machine type for the driver and executor.

When evaluating the Ganglia Metrics for this cluster, which indicator would signal a bottleneck caused by code executing on the driver?

- A. The five-minute load average remains consistent/flat

- B. Bytes Received never exceeds 80 million bytes per second

- C. Total Disk Space remains constant

- D. Network I/O never spikes (Most Voted)

- E. Overall cluster CPU utilization is around 25%
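
Option E follows from simple averaging: 3 executors plus a driver of the same VM type make 4 identical nodes, so if only the driver is busy, cluster-wide CPU utilization sits near 1/4 = 25%. The check below assumes an idealized case (driver at 100%, executors at 0%) and assumes the cluster figure is an equal-weight average across nodes; `cluster_cpu_utilization` is a hypothetical helper.

```python
def cluster_cpu_utilization(node_utilizations):
    """Equal-weight average CPU utilization across nodes, assuming
    identical VM types so each node contributes the same capacity."""
    return sum(node_utilizations) / len(node_utilizations)


driver = [1.0]         # driver fully busy running single-node code
executors = [0.0] * 3  # executors idle (idealized)
print(cluster_cpu_utilization(driver + executors))  # 0.25
```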
