Databricks Certified Data Engineer Professional Exam Practice Questions (P. 2)

- Full Access (327 questions)

- One Year of Premium Access

- Access to one million comments

- Seamless ChatGPT Integration

- Ability to download PDF files

- Anki Flashcard files for revision

- No Captcha & No AdSense

- Advanced Exam Configuration

Question #11

The data engineering team has configured a job to process customer requests to be forgotten (have their data deleted). All user data that needs to be deleted is stored in Delta Lake tables using default table settings.

The team has decided to process all deletions from the previous week as a batch job at 1am each Sunday. The total duration of this job is less than one hour. Every Monday at 3am, a batch job executes a series of VACUUM commands on all Delta Lake tables throughout the organization.

The compliance officer has recently learned about Delta Lake's time travel functionality. They are concerned that this might allow continued access to deleted data.

Assuming all delete logic is correctly implemented, which statement correctly addresses this concern?

The team has decided to process all deletions from the previous week as a batch job at 1am each Sunday. The total duration of this job is less than one hour. Every Monday at 3am, a batch job executes a series of VACUUM commands on all Delta Lake tables throughout the organization.

The compliance officer has recently learned about Delta Lake's time travel functionality. They are concerned that this might allow continued access to deleted data.

Assuming all delete logic is correctly implemented, which statement correctly addresses this concern?

- ABecause the VACUUM command permanently deletes all files containing deleted records, deleted records may be accessible with time travel for around 24 hours.

- BBecause the default data retention threshold is 24 hours, data files containing deleted records will be retained until the VACUUM job is run the following day.

- CBecause Delta Lake time travel provides full access to the entire history of a table, deleted records can always be recreated by users with full admin privileges.

- DBecause Delta Lake's delete statements have ACID guarantees, deleted records will be permanently purged from all storage systems as soon as a delete job completes.

- EBecause the default data retention threshold is 7 days, data files containing deleted records will be retained until the VACUUM job is run 8 days later.Most Voted

Correct Answer:

E

E

GPT-4o - Answer

GPT-4o - Answer

When considering the concerns about Delta Lake's time travel functionality and accessibility to deleted data, keep in mind that the default retention period is indeed 7 days. However, in this specific scenario described in the question, the deletion operation is performed just 24 hours before the VACUUM command, which makes records inaccessible post-vacuuming except for that brief period. The deleted records might be accessible via time travel only between the time of deletion and the following VACUUM execution. Thus, it highlights a window of about 24 hours during which this data remains accessible, despite longer default settings.

send

light_mode

delete

Question #12

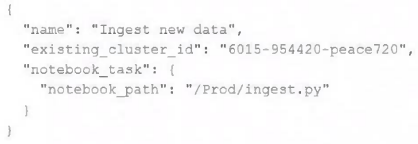

A junior data engineer has configured a workload that posts the following JSON to the Databricks REST API endpoint 2.0/jobs/create.

Assuming that all configurations and referenced resources are available, which statement describes the result of executing this workload three times?

Assuming that all configurations and referenced resources are available, which statement describes the result of executing this workload three times?

- AThree new jobs named "Ingest new data" will be defined in the workspace, and they will each run once daily.

- BThe logic defined in the referenced notebook will be executed three times on new clusters with the configurations of the provided cluster ID.

- CThree new jobs named "Ingest new data" will be defined in the workspace, but no jobs will be executed.Most Voted

- DOne new job named "Ingest new data" will be defined in the workspace, but it will not be executed.

- EThe logic defined in the referenced notebook will be executed three times on the referenced existing all purpose cluster.

Correct Answer:

C

C

send

light_mode

delete

Question #13

An upstream system is emitting change data capture (CDC) logs that are being written to a cloud object storage directory. Each record in the log indicates the change type (insert, update, or delete) and the values for each field after the change. The source table has a primary key identified by the field pk_id.

For auditing purposes, the data governance team wishes to maintain a full record of all values that have ever been valid in the source system. For analytical purposes, only the most recent value for each record needs to be recorded. The Databricks job to ingest these records occurs once per hour, but each individual record may have changed multiple times over the course of an hour.

Which solution meets these requirements?

For auditing purposes, the data governance team wishes to maintain a full record of all values that have ever been valid in the source system. For analytical purposes, only the most recent value for each record needs to be recorded. The Databricks job to ingest these records occurs once per hour, but each individual record may have changed multiple times over the course of an hour.

Which solution meets these requirements?

- ACreate a separate history table for each pk_id resolve the current state of the table by running a union all filtering the history tables for the most recent state.

- BUse MERGE INTO to insert, update, or delete the most recent entry for each pk_id into a bronze table, then propagate all changes throughout the system.

- CIterate through an ordered set of changes to the table, applying each in turn; rely on Delta Lake's versioning ability to create an audit log.

- DUse Delta Lake's change data feed to automatically process CDC data from an external system, propagating all changes to all dependent tables in the Lakehouse.

- EIngest all log information into a bronze table; use MERGE INTO to insert, update, or delete the most recent entry for each pk_id into a silver table to recreate the current table state.Most Voted

Correct Answer:

E

E

GPT-4o - Answer

GPT-4o - Answer

The chosen approach using a bronze table for initial ingestion and a silver table for maintaining the current state of the records through MERGE INTO operations is effective for both auditing and analytical purposes. The bronze table captures and preserves every change as it arrives, serving the audit requirement. Subsequently, the silver table, which is updated with only the latest values for each primary key, serves the need for analytics by providing the most current state of the data. This dual-table strategy harnesses Databricks' capabilities to manage complex data workflows efficiently in a Lakehouse environment.

send

light_mode

delete

Question #14

An hourly batch job is configured to ingest data files from a cloud object storage container where each batch represent all records produced by the source system in a given hour. The batch job to process these records into the Lakehouse is sufficiently delayed to ensure no late-arriving data is missed. The user_id field represents a unique key for the data, which has the following schema: user_id BIGINT, username STRING, user_utc STRING, user_region STRING, last_login BIGINT, auto_pay BOOLEAN, last_updated BIGINT

New records are all ingested into a table named account_history which maintains a full record of all data in the same schema as the source. The next table in the system is named account_current and is implemented as a Type 1 table representing the most recent value for each unique user_id.

Assuming there are millions of user accounts and tens of thousands of records processed hourly, which implementation can be used to efficiently update the described account_current table as part of each hourly batch job?

New records are all ingested into a table named account_history which maintains a full record of all data in the same schema as the source. The next table in the system is named account_current and is implemented as a Type 1 table representing the most recent value for each unique user_id.

Assuming there are millions of user accounts and tens of thousands of records processed hourly, which implementation can be used to efficiently update the described account_current table as part of each hourly batch job?

- AUse Auto Loader to subscribe to new files in the account_history directory; configure a Structured Streaming trigger once job to batch update newly detected files into the account_current table.

- BOverwrite the account_current table with each batch using the results of a query against the account_history table grouping by user_id and filtering for the max value of last_updated.

- CFilter records in account_history using the last_updated field and the most recent hour processed, as well as the max last_iogin by user_id write a merge statement to update or insert the most recent value for each user_id.Most Voted

- DUse Delta Lake version history to get the difference between the latest version of account_history and one version prior, then write these records to account_current.

- EFilter records in account_history using the last_updated field and the most recent hour processed, making sure to deduplicate on username; write a merge statement to update or insert the most recent value for each username.

Correct Answer:

C

C

GPT-4o - Answer

GPT-4o - Answer

Regarding the efficient updating of the 'account_current' table using hourly batch jobs, the method that utilizes a merge statement after filtering records from 'account_history' by the most recent 'last_updated' field and hour processed, then merging this data to check for the most current value per user_id, is most suitable. This approach ensures that only the most recent data per user influences the 'account_current' table without overwriting historical data mismatchingly, and avoids scanning inefficiencies and the pitfalls of misidentification found in other methods. This merging technique particularly leverages the unique identifier effectively.

send

light_mode

delete

Question #15

A table in the Lakehouse named customer_churn_params is used in churn prediction by the machine learning team. The table contains information about customers derived from a number of upstream sources. Currently, the data engineering team populates this table nightly by overwriting the table with the current valid values derived from upstream data sources.

The churn prediction model used by the ML team is fairly stable in production. The team is only interested in making predictions on records that have changed in the past 24 hours.

Which approach would simplify the identification of these changed records?

The churn prediction model used by the ML team is fairly stable in production. The team is only interested in making predictions on records that have changed in the past 24 hours.

Which approach would simplify the identification of these changed records?

- AApply the churn model to all rows in the customer_churn_params table, but implement logic to perform an upsert into the predictions table that ignores rows where predictions have not changed.

- BConvert the batch job to a Structured Streaming job using the complete output mode; configure a Structured Streaming job to read from the customer_churn_params table and incrementally predict against the churn model.

- CCalculate the difference between the previous model predictions and the current customer_churn_params on a key identifying unique customers before making new predictions; only make predictions on those customers not in the previous predictions.

- DModify the overwrite logic to include a field populated by calling spark.sql.functions.current_timestamp() as data are being written; use this field to identify records written on a particular date.

- EReplace the current overwrite logic with a merge statement to modify only those records that have changed; write logic to make predictions on the changed records identified by the change data feed.Most Voted

Correct Answer:

E

E

GPT-4o - Answer

GPT-4o - Answer

Absolutely spot on with option E. This option leverages the merge statement that effectively minimizes unnecessary data manipulation by updating only the rows that have changed and inserting new ones as required. Including a change data feed (CDC) not only tightens the workflow but also specifically pinpoints exactly which records need to be re-evaluated by the prediction model. This precise targeting drastically reduces computational waste and enhances processing efficiency, making it a smart choice for maintaining the machine learning pipeline in a production environment.

send

light_mode

delete

Question #16

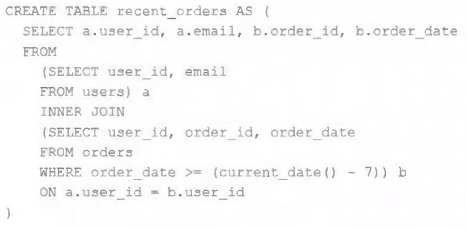

A table is registered with the following code:

Both users and orders are Delta Lake tables. Which statement describes the results of querying recent_orders?

Both users and orders are Delta Lake tables. Which statement describes the results of querying recent_orders?

- AAll logic will execute at query time and return the result of joining the valid versions of the source tables at the time the query finishes.

- BAll logic will execute when the table is defined and store the result of joining tables to the DBFS; this stored data will be returned when the table is queried.Most Voted

- CResults will be computed and cached when the table is defined; these cached results will incrementally update as new records are inserted into source tables.

- DAll logic will execute at query time and return the result of joining the valid versions of the source tables at the time the query began.

- EThe versions of each source table will be stored in the table transaction log; query results will be saved to DBFS with each query.

Correct Answer:

B

B

send

light_mode

delete

Question #17

A production workload incrementally applies updates from an external Change Data Capture feed to a Delta Lake table as an always-on Structured Stream job. When data was initially migrated for this table, OPTIMIZE was executed and most data files were resized to 1 GB. Auto Optimize and Auto Compaction were both turned on for the streaming production job. Recent review of data files shows that most data files are under 64 MB, although each partition in the table contains at least 1 GB of data and the total table size is over 10 TB.

Which of the following likely explains these smaller file sizes?

Which of the following likely explains these smaller file sizes?

- ADatabricks has autotuned to a smaller target file size to reduce duration of MERGE operationsMost Voted

- BZ-order indices calculated on the table are preventing file compaction

- CBloom filter indices calculated on the table are preventing file compaction

- DDatabricks has autotuned to a smaller target file size based on the overall size of data in the table

- EDatabricks has autotuned to a smaller target file size based on the amount of data in each partition

Correct Answer:

A

A

GPT-4o - Answer

GPT-4o - Answer

Databricks employs Auto Optimize to achieve more efficient merge operations by dynamically adjusting the target file size based on specific workload patterns. In scenarios involving frequent data updates or merge operations, smaller file sizes can significantly speed up these processes by reducing the amount of data that needs to be scanned and shuffled. Hence, observing files substantially smaller than the initial 1 GB after enabling Auto Optimize and Auto Compaction is both expected and optimal for performance in high-update environments.

send

light_mode

delete

Question #18

Which statement regarding stream-static joins and static Delta tables is correct?

- AEach microbatch of a stream-static join will use the most recent version of the static Delta table as of each microbatch.Most Voted

- BEach microbatch of a stream-static join will use the most recent version of the static Delta table as of the job's initialization.

- CThe checkpoint directory will be used to track state information for the unique keys present in the join.

- DStream-static joins cannot use static Delta tables because of consistency issues.

- EThe checkpoint directory will be used to track updates to the static Delta table.

Correct Answer:

A

A

GPT-4o - Answer

GPT-4o - Answer

Stream-static joins in Databricks involve each microbatch of the streaming data using the latest valid version of the static Delta table available at the time of processing that particular microbatch. This design ensures that the most current snapshot of the static data is used for real-time analytics, aligns with the architectures aiming at low-latency data operations, and effectively supports scenarios where updates to static data are frequent and critical to the join's output accuracy.

send

light_mode

delete

Question #19

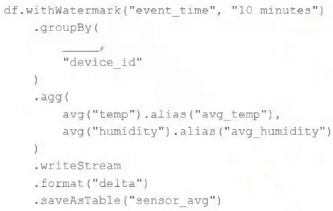

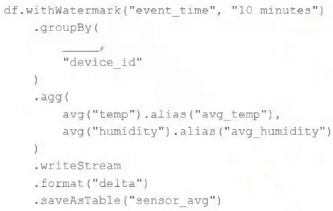

A junior data engineer has been asked to develop a streaming data pipeline with a grouped aggregation using DataFrame df. The pipeline needs to calculate the average humidity and average temperature for each non-overlapping five-minute interval. Events are recorded once per minute per device.

Streaming DataFrame df has the following schema:

"device_id INT, event_time TIMESTAMP, temp FLOAT, humidity FLOAT"

Code block:

Choose the response that correctly fills in the blank within the code block to complete this task.

Streaming DataFrame df has the following schema:

"device_id INT, event_time TIMESTAMP, temp FLOAT, humidity FLOAT"

Code block:

Choose the response that correctly fills in the blank within the code block to complete this task.

- Ato_interval("event_time", "5 minutes").alias("time")

- Bwindow("event_time", "5 minutes").alias("time")Most Voted

- C"event_time"

- Dwindow("event_time", "10 minutes").alias("time")

- Elag("event_time", "10 minutes").alias("time")

Correct Answer:

B

B

send

light_mode

delete

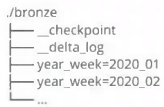

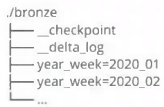

Question #20

A data architect has designed a system in which two Structured Streaming jobs will concurrently write to a single bronze Delta table. Each job is subscribing to a different topic from an Apache Kafka source, but they will write data with the same schema. To keep the directory structure simple, a data engineer has decided to nest a checkpoint directory to be shared by both streams.

The proposed directory structure is displayed below:

Which statement describes whether this checkpoint directory structure is valid for the given scenario and why?

The proposed directory structure is displayed below:

Which statement describes whether this checkpoint directory structure is valid for the given scenario and why?

- ANo; Delta Lake manages streaming checkpoints in the transaction log.

- BYes; both of the streams can share a single checkpoint directory.

- CNo; only one stream can write to a Delta Lake table.

- DYes; Delta Lake supports infinite concurrent writers.

- ENo; each of the streams needs to have its own checkpoint directory.Most Voted

Correct Answer:

E

E

send

light_mode

delete

All Pages