Databricks Certified Associate Developer for Apache Spark Exam Practice Questions (P. 3)

Question #21

Which of the following code blocks returns a DataFrame containing only the rows from DataFrame storesDF where the value in column sqft is less than or equal to 25,000 OR the value in column customerSatisfaction is greater than or equal to 30?

- A. storesDF.filter(col("sqft") <= 25000 | col("customerSatisfaction") >= 30)

- B. storesDF.filter(col("sqft") <= 25000 or col("customerSatisfaction") >= 30)

- C. storesDF.filter(sqft <= 25000 or customerSatisfaction >= 30)

- D. storesDF.filter(col(sqft) <= 25000 | col(customerSatisfaction) >= 30)

- E. storesDF.filter((col("sqft") <= 25000) | (col("customerSatisfaction") >= 30)) (Most Voted)

Correct Answer:

E
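
Why the parentheses in option E matter can be checked without Spark. In Python, `|` binds more tightly than `<=` and `>=`, so the unparenthesized form in option A parses as a chained comparison rather than an OR of two conditions. The sketch below uses only the standard-library `ast` module to inspect how each expression parses; it does not run Spark, and `col` is just a name inside the parsed text.

```python
import ast

# Parse both filter conditions as expressions without evaluating them.
unparenthesized = ast.parse(
    'col("sqft") <= 25000 | col("customerSatisfaction") >= 30', mode="eval"
).body
parenthesized = ast.parse(
    '(col("sqft") <= 25000) | (col("customerSatisfaction") >= 30)', mode="eval"
).body

# Option A: the top-level node is a chained comparison whose middle operand
# is the sub-expression 25000 | col("customerSatisfaction").
print(type(unparenthesized).__name__)                 # Compare
print(type(unparenthesized.comparators[0]).__name__)  # BinOp

# Option E: the top-level node is the | operator joining two comparisons.
print(type(parenthesized).__name__)       # BinOp
print(type(parenthesized.left).__name__)  # Compare
```

Options B and C use `or`, which Python applies to the truth value of its operands instead of building a combined column expression.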

Question #22

Which of the following code blocks returns a new DataFrame from DataFrame storesDF where column storeId is of the type string?

- A. storesDF.withColumn("storeId", cast(col("storeId"), StringType()))

- B. storesDF.withColumn("storeId", col("storeId").cast(StringType())) (Most Voted)

- C. storesDF.withColumn("storeId", cast(storeId).as(StringType)

- D. storesDF.withColumn("storeId", col(storeId).cast(StringType)

- E. storesDF.withColumn("storeId", cast("storeId").as(StringType()))

Correct Answer:

B

Question #23

Which of the following code blocks returns a new DataFrame with a new column employeesPerSqft that is the quotient of column numberOfEmployees and column sqft, both of which are from DataFrame storesDF? Note that column employeesPerSqft is not in the original DataFrame storesDF.

- A. storesDF.withColumn("employeesPerSqft", col("numberOfEmployees") / col("sqft")) (Most Voted)

- B. storesDF.withColumn("employeesPerSqft", "numberOfEmployees" / "sqft")

- C. storesDF.select("employeesPerSqft", "numberOfEmployees" / "sqft")

- D. storesDF.select("employeesPerSqft", col("numberOfEmployees") / col("sqft"))

- E. storesDF.withColumn(col("employeesPerSqft"), col("numberOfEmployees") / col("sqft"))

Correct Answer:

A
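
Options B and C divide two plain Python strings. A quick check in plain Python (no Spark involved) suggests that this expression fails before withColumn or select is ever called, because `str` does not support the `/` operator. Wrapping each name in col(...), as option A does, is what produces Column objects on which division is defined.

```python
# Plain Python: dividing one string by another raises TypeError immediately,
# so the surrounding method call would never receive a value.
try:
    quotient = "numberOfEmployees" / "sqft"
except TypeError as exc:
    error_message = str(exc)

print(error_message)
```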

Question #24

The code block shown below should return a new DataFrame from DataFrame storesDF where column modality is the constant string "PHYSICAL". Assume DataFrame storesDF is the only defined language variable. Choose the response that correctly fills in the numbered blanks within the code block to complete this task.

Code block:

storesDF._1_(_2_, _3_(_4_))

- A.
  1. withColumn
  2. "modality"
  3. col
  4. "PHYSICAL"

- B.
  1. withColumn
  2. "modality"
  3. lit
  4. PHYSICAL

- C. (Most Voted)
  1. withColumn
  2. "modality"
  3. lit
  4. "PHYSICAL"

- D.
  1. withColumn
  2. "modality"
  3. StringType
  4. "PHYSICAL"

- E.
  1. newColumn
  2. modality
  3. StringType
  4. PHYSICAL

Correct Answer:

C

Question #25

Which of the following code blocks returns a DataFrame where column storeCategory from DataFrame storesDF is split at the underscore character into column storeValueCategory and column storeSizeCategory?

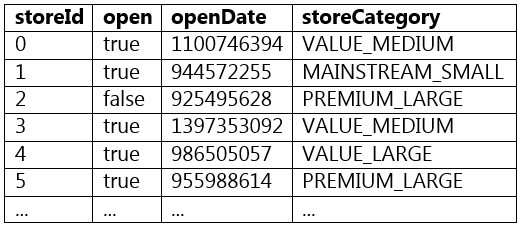

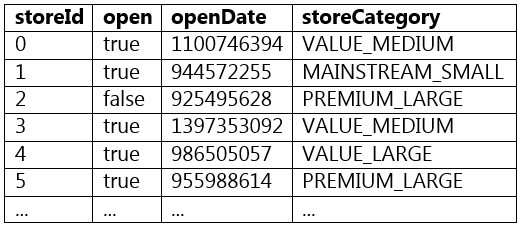

A sample of DataFrame storesDF is displayed below:

A sample of DataFrame storesDF is displayed below:

- A(storesDF.withColumn("storeValueCategory", split(col("storeCategory"), "_")[1])

.withColumn("storeSizeCategory", split(col("storeCategory"), "_")[2])) - B(storesDF.withColumn("storeValueCategory", col("storeCategory").split("_")[0])

.withColumn("storeSizeCategory", col("storeCategory").split("_")[1])) - C(storesDF.withColumn("storeValueCategory", split(col("storeCategory"), "_")[0])

.withColumn("storeSizeCategory", split(col("storeCategory"), "_")[1]))Most Voted - D(storesDF.withColumn("storeValueCategory", split("storeCategory", "_")[0])

.withColumn("storeSizeCategory", split("storeCategory", "_")[1])) - E(storesDF.withColumn("storeValueCategory", col("storeCategory").split("_")[1])

.withColumn("storeSizeCategory", col("storeCategory").split("_")[2]))

Correct Answer:

C
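
The zero-based indexing in option C mirrors ordinary Python lists. As a plain-Python analogy (not Spark code, and with a made-up value, since the sample table is only available as an image), splitting at the underscore yields a two-element list whose pieces sit at positions 0 and 1:

```python
# Hypothetical storeCategory value; the real sample data is not reproduced here.
store_category = "VALUE_LARGE"

parts = store_category.split("_")

# The first piece is at index 0 and the second at index 1.
store_value_category = parts[0]
store_size_category = parts[1]

print(parts)                 # ['VALUE', 'LARGE']
print(store_value_category)  # VALUE
print(store_size_category)   # LARGE
```

Under this analogy, the indices 1 and 2 used in options A and E would skip the first piece.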

Question #26

Which of the following code blocks returns a new DataFrame where column productCategories only has one word per row, resulting in a DataFrame with many more rows than DataFrame storesDF?

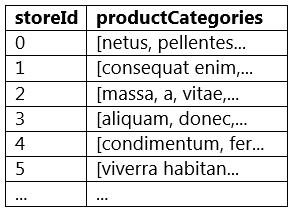

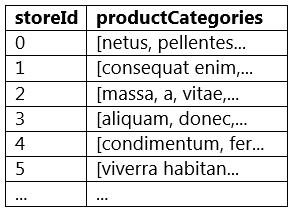

A sample of storesDF is displayed below:

A sample of storesDF is displayed below:

- AstoresDF.withColumn("productCategories", explode(col("productCategories")))Most Voted

- BstoresDF.withColumn("productCategories", split(col("productCategories")))

- CstoresDF.withColumn("productCategories", col("productCategories").explode())

- DstoresDF.withColumn("productCategories", col("productCategories").split())

- EstoresDF.withColumn("productCategories", explode("productCategories"))

Correct Answer:

A
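
Conceptually, explode emits one output row per element of an array column, which is why the result has more rows than storesDF. The following is a plain-Python analogy rather than Spark code; the rows are hypothetical, and it assumes productCategories holds a list of words per store, as the question implies.

```python
# Hypothetical rows standing in for storesDF.
rows = [
    {"storeId": 0, "productCategories": ["netus", "pellentesque", "vel"]},
    {"storeId": 1, "productCategories": ["consequat", "enim"]},
]

# One output row per (store, category) pair; the other columns are repeated.
exploded = [
    {"storeId": row["storeId"], "productCategories": category}
    for row in rows
    for category in row["productCategories"]
]

print(len(rows), len(exploded))  # 2 5
```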

Question #27

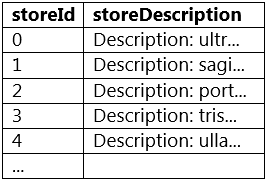

Which of the following code blocks returns a new DataFrame with column storeDescription where the pattern "Description: " has been removed from the beginning of column storeDescription in DataFrame storesDF?

A sample of DataFrame storesDF is below:

A sample of DataFrame storesDF is below:

- AstoresDF.withColumn("storeDescription", regexp_replace(col("storeDescription"), "^Description: "))

- BstoresDF.withColumn("storeDescription", col("storeDescription").regexp_replace("^Description: ", ""))

- CstoresDF.withColumn("storeDescription", regexp_extract(col("storeDescription"), "^Description: ", ""))

- DstoresDF.withColumn("storeDescription", regexp_replace("storeDescription", "^Description: ", ""))

- EstoresDF.withColumn("storeDescription", regexp_replace(col("storeDescription"), "^Description: ", ""))Most Voted

Correct Answer:

E
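
The pattern in option E relies on the `^` anchor so that only a leading "Description: " is removed. The sketch below checks that behavior with Python's standard `re` module as a stand-in; Spark's regexp_replace uses Java regular expressions, but a simple anchored literal like this one should behave the same way. The sample strings are hypothetical.

```python
import re

pattern = r"^Description: "

# Hypothetical values for column storeDescription.
leading = "Description: Lorem ipsum dolor"
embedded = "Lorem ipsum Description: dolor"

# The anchored pattern strips the prefix only when it starts the string.
cleaned_leading = re.sub(pattern, "", leading)
cleaned_embedded = re.sub(pattern, "", embedded)

print(cleaned_leading)   # Lorem ipsum dolor
print(cleaned_embedded)  # Lorem ipsum Description: dolor
```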

Question #28

Which of the following code blocks returns a new DataFrame where column division from DataFrame storesDF has been replaced and renamed to column state and column managerName from DataFrame storesDF has been replaced and renamed to column managerFullName?

- A. (storesDF.withColumnRenamed(["division", "state"], ["managerName", "managerFullName"])

- B. (storesDF.withColumn("state", col("division"))
  .withColumn("managerFullName", col("managerName")))

- C. (storesDF.withColumn("state", "division")
  .withColumn("managerFullName", "managerName"))

- D. (storesDF.withColumnRenamed("state", "division")
  .withColumnRenamed("managerFullName", "managerName"))

- E. (storesDF.withColumnRenamed("division", "state")
  .withColumnRenamed("managerName", "managerFullName")) (Most Voted)

Correct Answer:

E

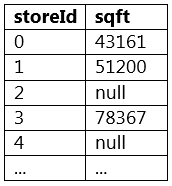

Question #29

The code block shown contains an error. The code block is intended to return a new DataFrame where column sqft from DataFrame storesDF has had its missing values replaced with the value 30,000. Identify the error.

A sample of DataFrame storesDF is displayed below:

Code block:

storesDF.na.fill(30000, col("sqft"))

A sample of DataFrame storesDF is displayed below:

Code block:

storesDF.na.fill(30000, col("sqft"))

- AThe argument to the subset parameter of fill() should be a string column name or a list of string column names rather than a Column object.Most Voted

- BThe na.fill() operation does not work and should be replaced by the dropna() operation.

- Che argument to the subset parameter of fill() should be a the numerical position of the column rather than a Column object.

- DThe na.fill() operation does not work and should be replaced by the nafill() operation.

- EThe na.fill() operation does not work and should be replaced by the fillna() operation.

Correct Answer:

A

Question #30

Which of the following operations fails to return a DataFrame with no duplicate rows?

- A. DataFrame.dropDuplicates()

- B. DataFrame.distinct()

- C. DataFrame.drop_duplicates()

- D. DataFrame.drop_duplicates(subset = None)

- E. DataFrame.drop_duplicates(subset = "all") (Most Voted)
