Snowflake SnowPro Advanced Data Engineer Exam Practice Questions (P. 5)

Question #21

Which output is provided by both the SYSTEM$CLUSTERING_DEPTH function and the SYSTEM$CLUSTERING_INFORMATION function?

- A. average_depth (Most Voted)

- B. notes

- C. average_overlaps

- D. total_partition_count

Correct Answer:

A

GPT-4o - Answer

The correct answer is A, average_depth. SYSTEM$CLUSTERING_DEPTH returns a single number, the average depth of the table for the specified columns. SYSTEM$CLUSTERING_INFORMATION returns a JSON string that includes an average_depth field alongside fields such as notes, average_overlaps, and total_partition_count. Average depth measures how many micro-partitions overlap for the same range of clustering-key values; a lower value indicates a better-clustered table and more effective partition pruning.
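To make the overlap between the two functions concrete, here is a minimal Python sketch. The table name, column list, and sample values are hypothetical and only illustrate the shape of each result: a bare number from SYSTEM$CLUSTERING_DEPTH and a JSON string from SYSTEM$CLUSTERING_INFORMATION. It does not connect to Snowflake.

```python
import json

# Hypothetical table and clustering columns, for illustration only.
TABLE = "sales.public.orders"
COLUMNS = "(order_date)"

# SQL a client would send; both functions take the table name and,
# optionally, a parenthesized column list, passed as strings.
depth_sql = f"SELECT SYSTEM$CLUSTERING_DEPTH('{TABLE}', '{COLUMNS}')"
info_sql = f"SELECT SYSTEM$CLUSTERING_INFORMATION('{TABLE}', '{COLUMNS}')"

# Made-up sample results (not real measurements).
sample_depth_result = 2.4  # the function returns one number
sample_info_result = """
{
  "cluster_by_keys": "LINEAR(order_date)",
  "total_partition_count": 1156,
  "total_constant_partition_count": 0,
  "average_overlaps": 117.5,
  "average_depth": 2.4,
  "partition_depth_histogram": {"00002": 700, "00003": 456}
}
"""


def average_depths(depth_result, info_result):
    """Return the average depth as reported by each function."""
    info = json.loads(info_result)
    return float(depth_result), float(info["average_depth"])


if __name__ == "__main__":
    print(depth_sql)
    print(info_sql)
    print(average_depths(sample_depth_result, sample_info_result))
```

The other answer options (notes, average_overlaps, total_partition_count) appear only as fields of the JSON returned by SYSTEM$CLUSTERING_INFORMATION, which is why average depth is the only output the two functions share.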

Question #22

A Data Engineer needs to ingest invoice data in PDF format into Snowflake so that the data can be queried and used in a forecasting solution.

What is the recommended way to ingest this data?

- A. Use Snowpipe to ingest the files that land in an external stage into a Snowflake table.

- B. Use a COPY INTO command to ingest the PDF files in an external stage into a Snowflake table with a VARIANT column.

- C. Create an external table on the PDF files that are stored in a stage and parse the data into structured data.

- D. Create a Java User-Defined Function (UDF) that leverages Java-based PDF parser libraries to parse PDF data into structured data. (Most Voted)

Correct Answer:

D

Question #23

A table is loaded using Snowpipe and truncated afterwards. Later, a Data Engineer finds that the table needs to be reloaded, but the metadata of the pipe will not allow the same files to be loaded again.

How can this issue be solved using the LEAST amount of operational overhead?

- A. Wait until the metadata expires and then reload the file using Snowpipe.

- B. Modify the file by adding a blank row to the bottom and re-stage the file.

- C. Set the FORCE=TRUE option in the Snowpipe COPY INTO command. (Most Voted)

- D. Recreate the pipe by using the CREATE OR REPLACE PIPE command.

Correct Answer:

C

Question #24

A stream called TRANSACTIONS_STM is created on top of a TRANSACTIONS table in a continuous pipeline running in Snowflake. After a couple of months, the TRANSACTIONS table is renamed TRANSACTIONS_RAW to comply with new naming standards.

What will happen to the TRANSACTIONS_STM object?

- A. TRANSACTIONS_STM will keep working as expected. (Most Voted)

- B. TRANSACTIONS_STM will be stale and will need to be re-created.

- C. TRANSACTIONS_STM will be automatically renamed TRANSACTIONS_RAW_STM.

- D. Reading from the TRANSACTIONS_STM stream will succeed for some time after the expected STALE_TIME.

Correct Answer:

B

Question #25

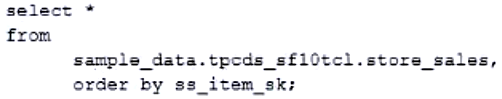

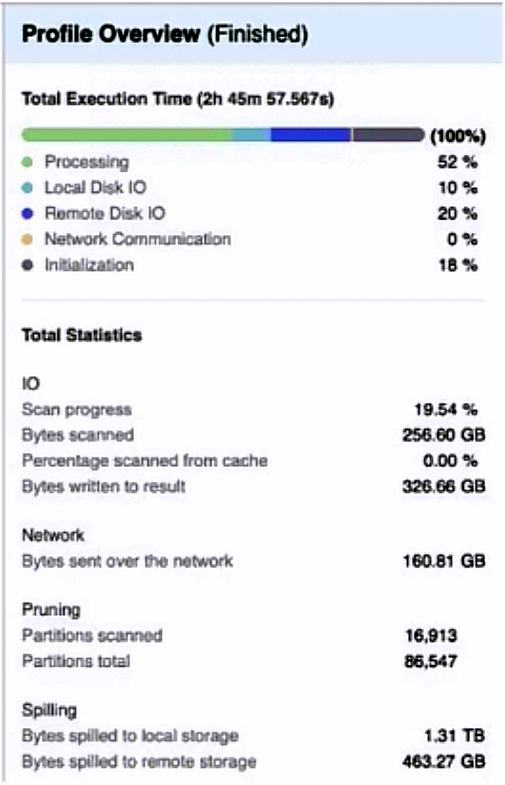

A Data Engineer is evaluating the performance of a query in a development environment.

Based on the Query Profile, what are some performance tuning options the Engineer can use? (Choose two.)

- A. Add a LIMIT to the ORDER BY if possible. (Most Voted)

- B. Use a multi-cluster virtual warehouse with the scaling policy set to standard.

- C. Move the query to a larger virtual warehouse. (Most Voted)

- D. Create indexes to ensure sorted access to data.

- E. Increase the MAX_CLUSTER_COUNT.

Correct Answer:

AC
