Snowflake SnowPro Advanced Data Engineer Exam Practice Questions (P. 2)

Question #6

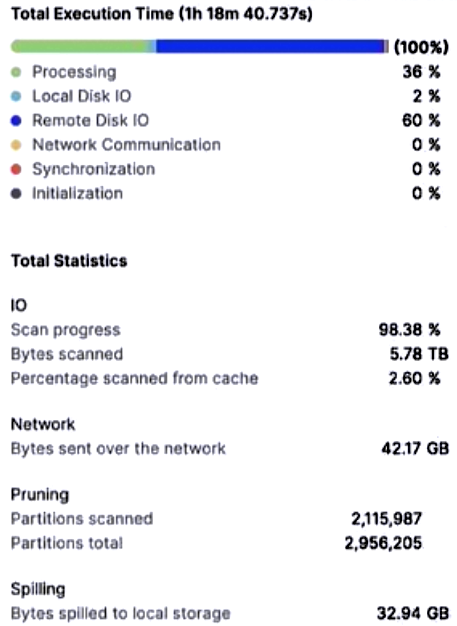

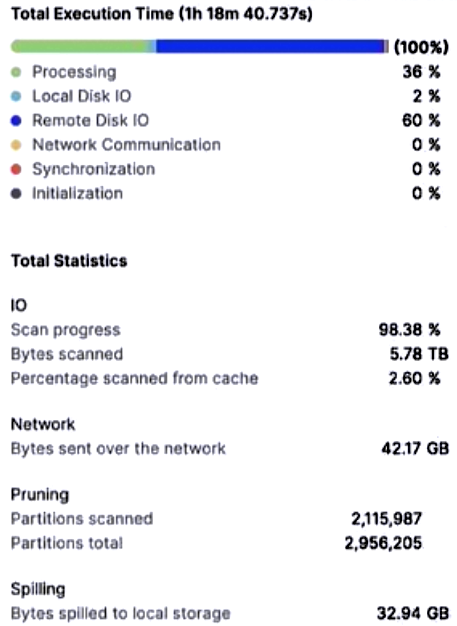

A large table with 200 columns contains two years of historical data. When queried, the table is filtered on a single day. Below is the Query Profile:

Using a size 2XL virtual warehouse, this query took over an hour to complete.

What will improve the query performance the MOST?

- A. Increase the size of the virtual warehouse.

- B. Increase the number of clusters in the virtual warehouse.

- C. Implement the search optimization service on the table.

- D. Add a date column as a cluster key on the table. (Most Voted)

Correct Answer:

D

Question #7

A Data Engineer is working on a Snowflake deployment in AWS eu-west-1 (Ireland). The Engineer is planning to load data from staged files into target tables using the COPY INTO command.

Which sources are valid? (Choose three.)

- A. Internal stage on GCP us-central1 (Iowa)

- B. Internal stage on AWS eu-central-1 (Frankfurt)

- C. External stage on GCP us-central1 (Iowa) (Most Voted)

- D. External stage in an Amazon S3 bucket on AWS eu-west-1 (Ireland) (Most Voted)

- E. External stage in an Amazon S3 bucket on AWS eu-central-1 (Frankfurt) (Most Voted)

- F. SSD attached to an Amazon EC2 instance on AWS eu-west-1 (Ireland)

Correct Answer:

BDE

GPT-4o - Answer

With COPY INTO, an external stage can point to cloud storage in any region and on any supported cloud provider, so the Amazon S3 stages in options D and E are valid regardless of region, and by the same reasoning so is the GCP external stage in option C. Internal stages, by contrast, are always created in the same cloud and region as the Snowflake account, so an internal stage in AWS eu-central-1 (option B) cannot exist for an account in eu-west-1. This reasoning supports the most-voted choice (CDE) rather than the listed answer (BDE).

Question #8

A Data Engineer wants to create a new development database (DEV) as a clone of the permanent production database (PROD). There is a requirement to disable Fail-safe for all tables.

Which command will meet these requirements?

- A. `CREATE DATABASE DEV CLONE PROD FAIL_SAFE = FALSE;`

- B. `CREATE DATABASE DEV CLONE PROD;`

- C. `CREATE TRANSIENT DATABASE DEV CLONE PROD;` (Most Voted)

- D. `CREATE DATABASE DEV CLONE PROD DATA_RETENTION_TIME_IN_DAYS = 0;`

Correct Answer:

C

GPT-4o - Answer

Cloning PROD into a transient database meets the requirement because transient objects have no Fail-safe period. Transient databases also reduce storage cost for development data that changes frequently, since no Fail-safe copies are retained. `FAIL_SAFE = FALSE` (option A) is not a valid parameter, and setting the data retention time to 0 (option D) affects Time Travel only, not Fail-safe.
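As a hedged illustration, the statement from option C could be rendered and submitted from Snowpark Python roughly as follows. The helper name `transient_clone_statement` is hypothetical, and `session` is assumed to be an already-connected Snowpark session that is not created here. Only the SQL text itself comes from the question.

```python
import re

# Simple unquoted identifiers only, so names can be interpolated safely.
_IDENTIFIER = re.compile(r"^[A-Za-z_][A-Za-z0-9_$]*$")


def transient_clone_statement(target: str, source: str) -> str:
    """Render CREATE TRANSIENT DATABASE <target> CLONE <source>.

    Hypothetical helper for illustration, not part of any Snowflake library.
    """
    for name in (target, source):
        if not _IDENTIFIER.match(name):
            raise ValueError(f"not a simple identifier: {name!r}")
    return f"CREATE TRANSIENT DATABASE {target} CLONE {source}"


if __name__ == "__main__":
    statement = transient_clone_statement("DEV", "PROD")
    print(statement)
    # With a live Snowpark session (assumed to exist, not created here):
    # session.sql(statement).collect()
```

Restricting the inputs to simple identifiers is a design choice for the sketch; quoted identifiers would need separate handling.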

Question #9

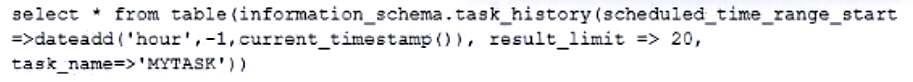

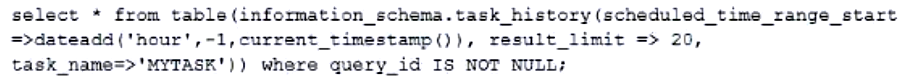

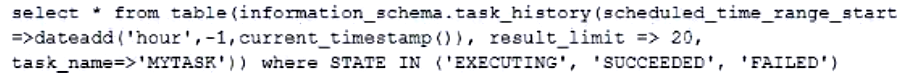

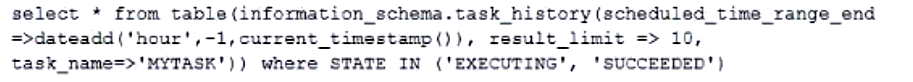

Which query will show a list of the 20 most recent executions of a specified task, MYTASK, that have been scheduled within the last hour that have ended or are still running?

- A

- B (Most Voted)

- C

- D

Correct Answer:

C

GPT-4o - Answer

Option C returns only executions of MYTASK that were scheduled within the last hour and have either ended or are still running. It does so by filtering on the STATE and SCHEDULED_TIME columns and by ordering the results so that only the 20 most recent executions are returned. The WHERE condition excludes runs scheduled for the future and rows without a scheduled time, which limits the result to recent activity.
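The answer options are images, so their exact query text is not reproduced here. As a sketch under that caveat, one way to express a query of this kind uses the `INFORMATION_SCHEMA.TASK_HISTORY` table function with its `SCHEDULED_TIME_RANGE_START`, `RESULT_LIMIT`, and `TASK_NAME` arguments. The helper name `recent_task_runs_query` is hypothetical, and this is not necessarily the form used in any of the options.

```python
def recent_task_runs_query(task_name: str, limit: int = 20, hours: int = 1) -> str:
    """Build a TASK_HISTORY query for the most recent runs of one task.

    Hypothetical helper for illustration; it returns SQL text only.
    """
    if limit < 1 or hours < 1:
        raise ValueError("limit and hours must be positive")
    # Escape single quotes so the task name is a valid SQL string literal.
    escaped = task_name.replace("'", "''")
    return (
        "SELECT *\n"
        "FROM TABLE(INFORMATION_SCHEMA.TASK_HISTORY(\n"
        f"    SCHEDULED_TIME_RANGE_START => DATEADD('hour', -{hours}, CURRENT_TIMESTAMP()),\n"
        f"    RESULT_LIMIT => {limit},\n"
        f"    TASK_NAME => '{escaped}'))"
    )


if __name__ == "__main__":
    print(recent_task_runs_query("MYTASK"))
    # With a live Snowpark session (assumed, not created here):
    # session.sql(recent_task_runs_query("MYTASK")).collect()
```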

Question #10

Which methods can be used to create a DataFrame object in Snowpark? (Choose three.)

- A. `session.jdbc_connection()`

- B. `session.read.json()` (Most Voted)

- C. `session.table()` (Most Voted)

- D. `DataFrame.write()`

- E. `session.builder()`

- F. `session.sql()` (Most Voted)

Correct Answer:

BCF

GPT-4o - Answer

Three of the listed methods create a DataFrame in Snowpark. `session.read.json()` reads JSON files from a stage, which is useful for ingesting semi-structured data. `session.table()` returns a DataFrame for an existing table. `session.sql()` runs a SQL query and returns its result as a DataFrame. Together they cover staged files, existing tables, and arbitrary queries.
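A minimal sketch of the three calls side by side, assuming `session` is an already-connected Snowpark Python session. The function name `build_dataframes` and its parameters are hypothetical. The session is accepted as a plain argument so the block does not need a live connection to be read or tested.

```python
from typing import Any, Tuple


def build_dataframes(
    session: Any, json_stage_path: str, table_name: str, query: str
) -> Tuple[Any, Any, Any]:
    """Create one DataFrame with each of the three methods from the answer.

    `session` is assumed to be a connected snowflake.snowpark.Session.
    """
    # session.read is a DataFrameReader; .json() targets staged JSON files.
    json_df = session.read.json(json_stage_path)
    # session.table() wraps an existing table or view.
    table_df = session.table(table_name)
    # session.sql() wraps the result of an arbitrary SQL query.
    sql_df = session.sql(query)
    return json_df, table_df, sql_df
```

A call might look like `build_dataframes(session, "@my_stage/events.json", "MY_TABLE", "SELECT 1")`, where the stage and table names are placeholders. Snowpark DataFrames are evaluated lazily, so nothing runs until an action such as `collect()` or `show()` is called on a result.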
