Oracle 1z0-449 Exam Practice Questions (P. 5)

Question #21

Your customer is using the IKM SQL to HDFS File (Sqoop) module to move data from Oracle to HDFS. However, the customer is experiencing performance issues.

What change should you make to the default configuration to improve performance?

- A. Change the ODI configuration to high performance mode.

- B. Increase the number of Sqoop mappers.

- C. Add additional tables.

- D. Change the HDFS server I/O settings to duplex mode.

Correct Answer:

B

Controlling the amount of parallelism that Sqoop will use to transfer data is the main way to control the load on your database. Using more mappers will lead to a higher number of concurrent data transfer tasks, which can result in faster job completion. However, it will also increase the load on the database as Sqoop will execute more concurrent queries.
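As a rough illustration of answer B, the sketch below assembles a Sqoop import command line with an explicit mapper count. `--num-mappers` and `--split-by` are standard Sqoop import arguments, and Sqoop uses 4 mappers when none is specified. The JDBC URL, table name, split column, and target directory are placeholders, and the helper `build_sqoop_import` is hypothetical. In ODI the mapper count would presumably be set through the knowledge module's options rather than a hand-written command.

```python
import shlex


def build_sqoop_import(jdbc_url, table, target_dir, split_by, num_mappers=4):
    """Return the argument list for a Sqoop import (hypothetical helper).

    num_mappers controls how many parallel map tasks, and therefore how many
    concurrent database queries, Sqoop uses for the transfer.
    """
    if num_mappers < 1:
        raise ValueError("num_mappers must be at least 1")
    return [
        "sqoop", "import",
        "--connect", jdbc_url,
        "--table", table,
        "--target-dir", target_dir,
        "--split-by", split_by,          # column used to partition the work
        "--num-mappers", str(num_mappers),
    ]


# Placeholder connection details, for illustration only.
cmd = build_sqoop_import(
    "jdbc:oracle:thin:@//dbhost.example.com:1521/ORCL",
    "SALES",
    "/data/sales",
    "SALE_ID",
    num_mappers=8,
)
print(" ".join(shlex.quote(arg) for arg in cmd))
```

Raising the mapper count only helps while the database can absorb the extra concurrent queries, so it is worth increasing in steps and watching the load on the source.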

References:

https://community.hortonworks.com/articles/70258/sqoop-performance-tuning.html

Question #22

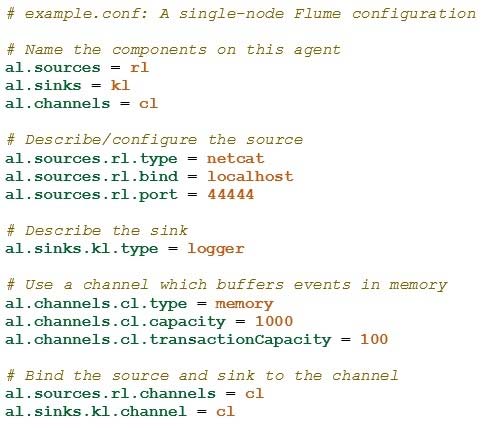

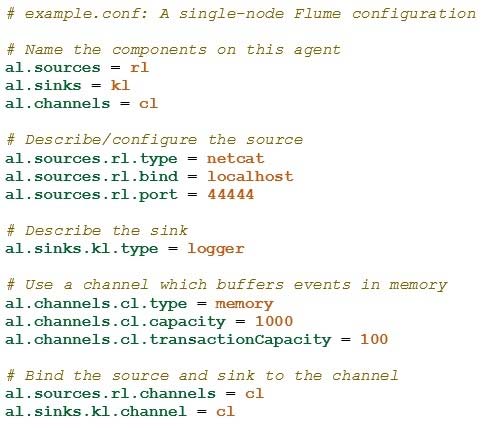

What is the result when a Flume event occurs for the following single-node configuration?

- A. The event is written to memory.

- B. The event is logged to the screen.

- C. The event output is not defined in this section.

- D. The event is sent out on port 44444.

- E. The event is written to the netcat process.

Correct Answer:

B

This configuration defines a single agent named a1. a1 has a source that listens for data on port 44444, a channel that buffers event data in memory, and a sink that logs event data to the console.
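The configuration itself appears only as an image on this page. The sketch below assumes it matches the single-node example in the Flume User Guide, which fits the description above, and uses a small hypothetical parser (`parse_properties`, `describe_agent`) to trace the source, channel, and sink types of agent `a1`.

```python
# Assumption: this mirrors the single-node example from the Flume User Guide;
# the configuration in the original question is only available as an image.
FLUME_CONF = """
a1.sources = r1
a1.sinks = k1
a1.channels = c1

a1.sources.r1.type = netcat
a1.sources.r1.bind = localhost
a1.sources.r1.port = 44444

a1.sinks.k1.type = logger

a1.channels.c1.type = memory
a1.channels.c1.capacity = 1000
a1.channels.c1.transactionCapacity = 100

a1.sources.r1.channels = c1
a1.sinks.k1.channel = c1
"""


def parse_properties(text):
    """Parse simple key = value lines, skipping blanks and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def describe_agent(props, agent):
    """Summarize the first source, channel, and sink declared for an agent."""
    source = props[f"{agent}.sources"].split()[0]
    channel = props[f"{agent}.channels"].split()[0]
    sink = props[f"{agent}.sinks"].split()[0]
    return {
        "source_type": props[f"{agent}.sources.{source}.type"],
        "source_port": int(props[f"{agent}.sources.{source}.port"]),
        "channel_type": props[f"{agent}.channels.{channel}.type"],
        "sink_type": props[f"{agent}.sinks.{sink}.type"],
    }


summary = describe_agent(parse_properties(FLUME_CONF), "a1")
print(summary)
```

Under that assumption the sink type is `logger`, which is why the event ends up on the console. Port 44444 belongs to the netcat source, and memory is only the intermediate channel.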

Note:

A sink stores the data into centralized stores like HBase and HDFS. It consumes the data (events) from the channels and delivers it to the destination. The destination of the sink might be another agent or the central stores.

A source is the component of an Agent which receives data from the data generators and transfers it to one or more channels in the form of Flume events.

Incorrect Answers:

D: Port 44444 is part of the source, not the sink.

References:

https://flume.apache.org/FlumeUserGuide.html

Question #23

What kind of workload is MapReduce designed to handle?

- A. batch processing

- B. interactive

- C. computational

- D. real time

- E. commodity

Correct Answer:

A

Hadoop was designed for batch processing: it takes a large dataset as input all at once, processes it, and writes a large output. The very concept of MapReduce is geared toward batch rather than real-time workloads. As data grows, Hadoop lets you scale the cluster horizontally by adding commodity nodes to keep up with the workload. MapReduce works the same way: it takes a large amount of data and processes it as a batch, so it does not return results immediately. How long a job takes depends on the system configuration (NameNode, TaskTracker, JobTracker, and so on).
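To make the batch model concrete, here is a minimal single-process sketch of the map, shuffle, and reduce phases in plain Python. It does not use Hadoop's API. The function names and the word-count task are chosen purely for illustration.

```python
from collections import defaultdict


def map_phase(records):
    """Emit a (word, 1) pair for every word in every input record."""
    for line in records:
        for word in line.lower().split():
            yield word, 1


def shuffle_phase(pairs):
    """Group all emitted values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Collapse each key's values into a single count."""
    return {key: sum(values) for key, values in groups.items()}


def run_batch_job(records):
    """Process the whole dataset in one pass and return the complete result."""
    return reduce_phase(shuffle_phase(map_phase(records)))


dataset = ["big data big clusters", "batch data"]
counts = run_batch_job(dataset)
print(counts)
```

The result is only available once the entire input has passed through all three phases, which is the sense in which MapReduce is batch-oriented rather than interactive or real-time.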

References:

https://www.quora.com/What-is-batch-processing-in-hadoop

Question #24

Your customer uses LDAP for centralized user/group management.

How will you integrate permissions management for the customer's Big Data Appliance into the existing architecture?

- A. Make Oracle Identity Management for Big Data the single source of truth and point LDAP to its keystore for user lookup.

- B. Enable Oracle Identity Management for Big Data and point its keystore to the LDAP directory for user lookup.

- C. Make Kerberos the single source of truth and have LDAP use the Key Distribution Center for user lookup.

- D. Enable Kerberos and have the Key Distribution Center use the LDAP directory for user lookup.

Correct Answer:

D

Kerberos integrates with LDAP servers, allowing the principals and encryption keys to be stored in the common repository.

The complication with Kerberos authentication is that your organization needs to have a Kerberos KDC (Key Distribution Center) server set up already, which then links to your corporate LDAP or Active Directory service to check user credentials when they request a Kerberos ticket.

References:

https://www.rittmanmead.com/blog/2015/04/setting-up-security-and-access-control-on-a-big-data-appliance/

Question #25

Your customer collects diagnostic data from its storage systems that are deployed at customer sites. The customer needs to capture and process this data by country in batches.

Why should the customer choose Hadoop to process this data?

- A. Hadoop processes data on large clusters (10-50 max) on commodity hardware.

- B. Hadoop is a batch data processing architecture.

- C. Hadoop supports centralized computing of large data sets on large clusters.

- D. Node failures can be dealt with by configuring failover with clusterware.

- E. Hadoop processes data serially.

Correct Answer:

B

Hadoop was designed for batch processing: it takes a large dataset as input all at once, processes it, and writes a large output. The very concept of MapReduce is geared toward batch rather than real-time workloads. As data grows, Hadoop lets you scale the cluster horizontally by adding commodity nodes to keep up with the workload. MapReduce works the same way: it takes a large amount of data and processes it as a batch, so it does not return results immediately. How long a job takes depends on the system configuration (NameNode, TaskTracker, JobTracker, and so on).

Incorrect Answers:

A: Hadoop clusters are not limited to 10-50 nodes. Yahoo! had by far the largest number of nodes in its Hadoop clusters, at over 42,000 nodes as of July 2011.

C: Hadoop supports distributed, not centralized, computing of large data sets on large clusters.

E: Hadoop processes data in parallel.

References:

https://www.quora.com/What-is-batch-processing-in-hadoop
