Oracle 1z0-449 Exam Practice Questions (P. 3)

Question #11

You want to set up access control lists on your NameNode in your Big Data Appliance. However, when you try to do so, you get an error stating "the NameNode disallows creation of ACLs."

What is the cause of the error?

- A. During the Big Data Appliance setup, Cloudera's ACLSecurity product was not installed.

- B. Access control lists are set up on the DataNode and HadoopNode, not the NameNode.

- C. During the Big Data Appliance setup, the Oracle Audit Vault product was not installed.

- D. dfs.namenode.acls.enabled must be set to true in the NameNode configuration.

Correct Answer:

D

To use ACLs, you must first enable them on the NameNode by adding the following configuration property to hdfs-site.xml and restarting the NameNode.

<property>

<name>dfs.namenode.acls.enabled</name>

<value>true</value>

</property>
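As a quick way to confirm the setting, the following minimal Python sketch parses an hdfs-site.xml document and reports whether dfs.namenode.acls.enabled is set to true. The inlined XML and the helper name `acls_enabled` are illustrative assumptions, not part of any Hadoop tooling, and treating a missing property as disabled is also an assumption made to mirror the error in the question.

```python
import xml.etree.ElementTree as ET

# Hypothetical hdfs-site.xml content, inlined so the sketch is self-contained.
HDFS_SITE = """<configuration>
  <property>
    <name>dfs.namenode.acls.enabled</name>
    <value>true</value>
  </property>
</configuration>"""


def acls_enabled(hdfs_site_xml: str) -> bool:
    """Return True if dfs.namenode.acls.enabled is present and set to true."""
    root = ET.fromstring(hdfs_site_xml)
    for prop in root.iter("property"):
        name = (prop.findtext("name", "") or "").strip()
        if name == "dfs.namenode.acls.enabled":
            value = (prop.findtext("value", "") or "").strip()
            return value.lower() == "true"
    # Assumption: a missing property is treated as "ACLs disabled".
    return False


print(acls_enabled(HDFS_SITE))
```

The check only reads the file; the NameNode still has to be restarted before a changed value takes effect.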

References:

https://hortonworks.com/blog/hdfs-acls-fine-grained-permissions-hdfs-files-hadoop/

Question #12

Your customer has an older starter rack Big Data Appliance (BDA) that was purchased in 2013. The customer would like to know what the options are for growing the storage footprint of its server.

Which two options are valid for expanding the customer's BDA footprint? (Choose two.)

- A. Elastically expand the footprint by adding additional high capacity nodes.

- B. Elastically expand the footprint by adding additional Big Data Oracle Database Servers.

- C. Elastically expand the footprint by adding additional Big Data Storage Servers.

- D. Racks manufactured before 2014 are no longer eligible for expansion.

- E. Upgrade to a full 18-node Big Data Appliance.

Correct Answer:

DE

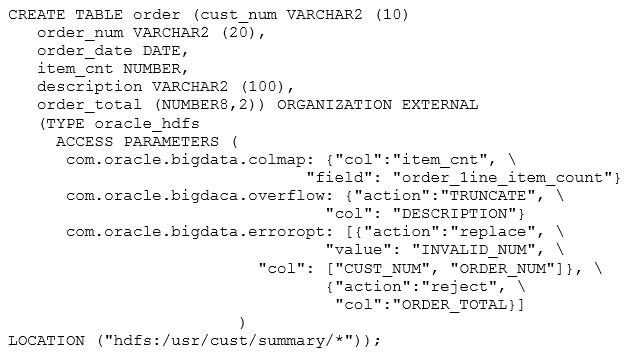

Question #13

What are three correct results of executing the preceding query? (Choose three.)

- A. Values longer than 100 characters for the DESCRIPTION column are truncated.

- B. ORDER_LINE_ITEM_COUNT in the HDFS file matches ITEM_CNT in the external table.

- C. ITEM_CNT in the HDFS file matches ORDER_LINE_ITEM_COUNT in the external table.

- D. Errors in the data for CUST_NUM or ORDER_NUM set the value to INVALID_NUM.

- E. Errors in the data for CUST_NUM or ORDER_NUM set the value to 0000000000.

- F. Values longer than 100 characters for any column are truncated.

Correct Answer:

ACD

com.oracle.bigdata.overflow: Truncates string data. Values longer than 100 characters for the DESCRIPTION column are truncated.

com.oracle.bigdata.erroropt: Replaces bad data. Errors in the data for CUST_NUM or ORDER_NUM set the value to INVALID_NUM.

References:

https://docs.oracle.com/cd/E55905_01/doc.40/e55814/bigsql.htm#BIGUG76679

Question #14

What does the following line do in Apache Pig?

products = LOAD '/user/oracle/products' AS (prod_id, item);

- A. The products table is loaded by using data pump with prod_id and item.

- B. The LOAD table is populated with prod_id and item.

- C. The contents of /user/oracle/products are loaded as tuples and aliased to products.

- D. The contents of /user/oracle/products are dumped to the screen.

Correct Answer:

C

The LOAD function loads data from the file system.

Syntax: LOAD 'data' [USING function] [AS schema];

Terms: 'data'

The name of the file or directory, in single quotes.
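To make the "loaded as tuples and aliased" idea concrete, here is a rough Python analogy of what the LOAD statement does when no USING clause is given. It assumes Pig's default PigStorage loader, which splits each line on tabs. The sample lines, the `Product` type, and the `load_products` helper are hypothetical stand-ins for the real file and relation, and Pig itself would treat the untyped fields as bytearrays, not strings.

```python
from typing import Iterable, List, NamedTuple, Optional


class Product(NamedTuple):
    """One tuple of the relation, with the field names from the AS clause."""
    prod_id: Optional[str]
    item: Optional[str]


def load_products(lines: Iterable[str], delimiter: str = "\t") -> List[Product]:
    """Split each input line into fields and name them (prod_id, item)."""
    relation = []
    for line in lines:
        fields: List[Optional[str]] = list(line.rstrip("\n").split(delimiter))
        # Pad short rows with None and drop extra fields so every tuple has two fields.
        fields = (fields + [None, None])[:2]
        relation.append(Product(*fields))
    return relation


# Hypothetical tab-delimited content standing in for /user/oracle/products.
SAMPLE_LINES = ["101\twidget\n", "102\tgadget\n"]

# "products" plays the role of the Pig alias: a name bound to a bag of tuples.
products = load_products(SAMPLE_LINES)
for row in products:
    print(row.prod_id, row.item)
```

Nothing is printed or stored by LOAD itself in Pig; the loop above only stands in for a later DUMP so the tuples are visible.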

References:

https://pig.apache.org/docs/r0.11.1/basic.html#load

Question #15

What is the output of the following six commands when they are executed by using the Oracle XML Extensions for Hive in the Oracle XQuery for Hadoop Connector?

1. $ echo "xxx" > src.txt

2. $ hive --auxpath $OXH_HOME/hive/lib -i $OXH_HOME/hive/init.sql

3. hive> CREATE TABLE src (dummy STRING);

4. hive> LOAD DATA LOCAL INPATH 'src.txt' OVERWRITE INTO TABLE src;

5. hive> SELECT * FROM src;

OK

xxx

6. hive> SELECT xml_query ("x/y", "<x><y>123</y><z>456</z></x>") FROM src;

- A. xyz

- B. 123

- C. 456

- D. xxx

- E. x/y

Correct Answer:

B

Using the Hive Extensions

To enable the Oracle XQuery for Hadoop extensions, use the --auxpath and -i arguments when starting Hive:

$ hive --auxpath $OXH_HOME/hive/lib -i $OXH_HOME/hive/init.sql

The first time you use the extensions, verify that they are accessible. The following procedure creates a table named SRC, loads one row into it, and calls the xml_query function.

To verify that the extensions are accessible:

1. Log in to an Oracle Big Data Appliance server where you plan to work.

2. Create a text file named src.txt that contains one line:

$ echo "XXX" > src.txt

3. Start the Hive command-line interface (CLI):

$ hive --auxpath $OXH_HOME/hive/lib -i $OXH_HOME/hive/init.sql

The init.sql file contains the CREATE TEMPORARY FUNCTION statements that declare the XML functions.

4. Create a simple table:

hive> CREATE TABLE src(dummy STRING);

The SRC table is needed only to fulfill a SELECT syntax requirement. It is like the DUAL table in Oracle Database, which is referenced in SELECT statements to test SQL functions.

5. Load data from src.txt into the table:

hive> LOAD DATA LOCAL INPATH 'src.txt' OVERWRITE INTO TABLE src;

6. Query the table using Hive SELECT statements:

hive> SELECT * FROM src;

OK

xxx

7. Call an Oracle XQuery for Hadoop function for Hive. This example calls the xml_query function to parse an XML string:

hive> SELECT xml_query("x/y", "<x><y>123</y><z>456</z></x>") FROM src;

.

.

.

["123"]

If the extensions are accessible, then the query returns ["123"], as shown in the example.
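The reason the result is 123 and not 456 or xxx can be illustrated outside Hive. The Python sketch below evaluates the same path against the same XML string with the standard library. It is only an analogy: `xml_query_sketch` is a hypothetical helper, ElementTree supports a small XPath subset and not XQuery, and wrapping the root in a stand-in document element is an assumption used to mimic resolving the path from above the root element.

```python
import json
import xml.etree.ElementTree as ET


def xml_query_sketch(path: str, xml_text: str) -> str:
    """Return the text of every node matched by path, as a JSON array of strings."""
    root = ET.fromstring(xml_text)
    # ElementTree resolves paths relative to the root element, so wrap the
    # root in a stand-in document node to make "x/y" match <x> then <y>.
    document = ET.Element("document")
    document.append(root)
    texts = [node.text or "" for node in document.findall(path)]
    return json.dumps(texts)


result = xml_query_sketch("x/y", "<x><y>123</y><z>456</z></x>")
print(result)  # ["123"]
```

The path selects only the y child of x, so the z value never appears, and the content of the src table row is not consulted at all; the table only supplies a row for the SELECT to run against.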

References:

https://docs.oracle.com/cd/E53356_01/doc.30/e53067/oxh_hive.htm#BDCUG693
