Microsoft 70-764 Exam Practice Questions (P. 5)

Question #41

HOTSPOT -

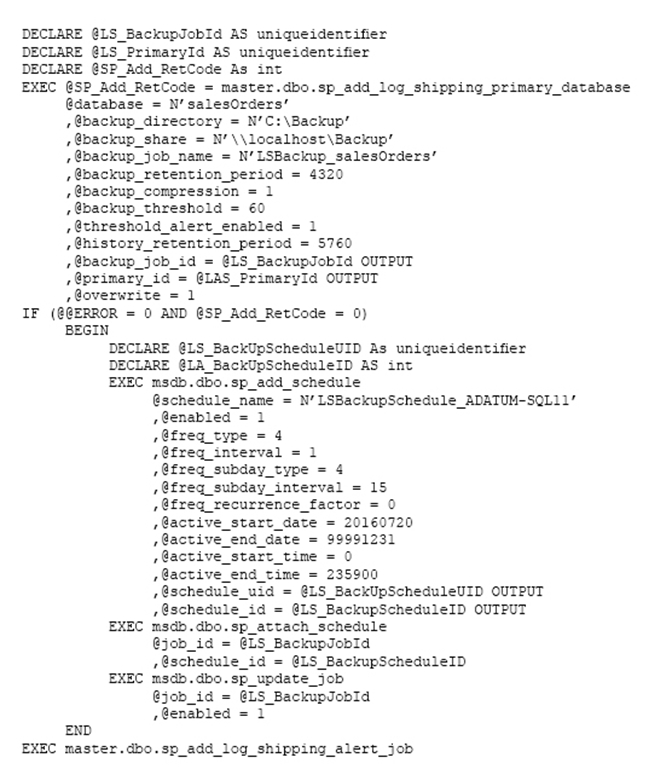

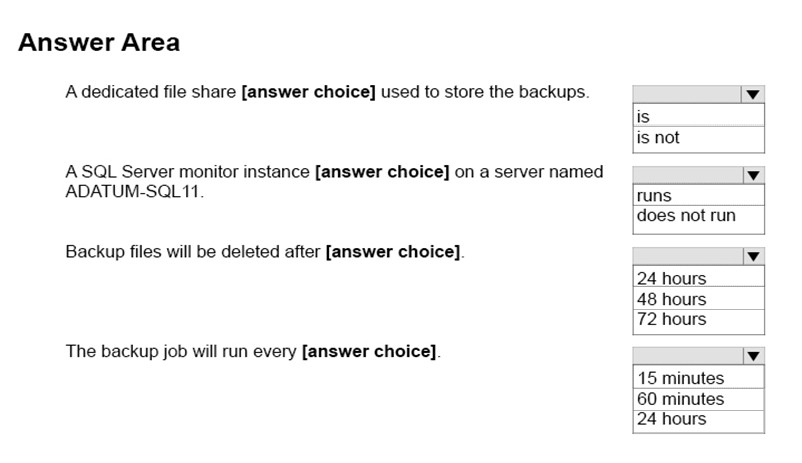

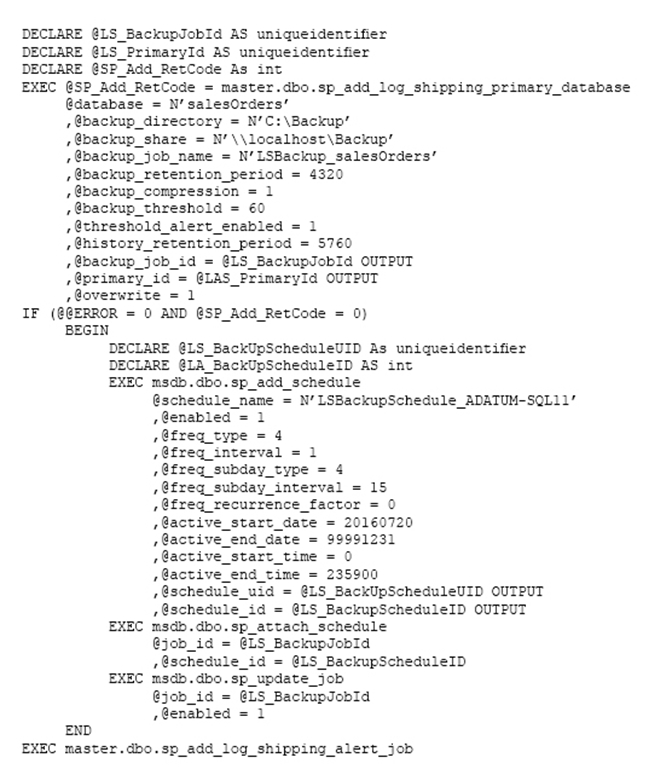

You are configuring log shipping for a Microsoft SQL Server database named salesOrders.

You run the following Transact-SQL script:

You need to determine the changes that the script makes to the environment.

How does the script affect the environment? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

Hot Area:

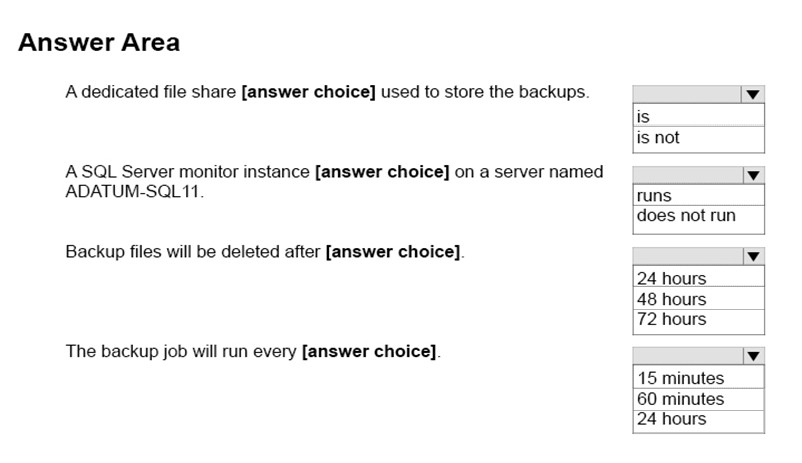

Correct Answer:

Box 1: is

The dedicated backup file share is \\localhost\Backup.

Box 2: does not run

The only thing with a name related to ADATM-SQL11 is the schedule name.

Box 3: 72 hours

4320 minutes equals 72 hours.

Note: [ @backup_retention_period = ] backup_retention_period

Is the length of time, in minutes, to retain the log backup file in the backup directory on the primary server. backup_retention_period is int, with no default, and cannot be NULL.

Box 4: 15 minutes

[ @freq_subday_type = ] freq_subday_type

Specifies the units for freq_subday_interval. freq_subday_type is int, with a default of 0, and can be one of these values: 1 (at the specified time), 2 (seconds), 4 (minutes), or 8 (hours).

Here it is 4, which means minutes.

[ @freq_subday_interval = ] freq_subday_interval

The number of freq_subday_type periods to occur between each execution of a job. freq_subday_interval is int, with a default of 0.

Note: Interval should be longer than 10 seconds. freq_subday_interval is ignored in those cases where freq_subday_type is equal to 1.
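As a rough illustration of how these parameters fit together, the sketch below registers a primary database and a 15-minute backup schedule. It is not the exam's script, which appears only as an image. The share, retention period, and subday values come from the explanation above; the local backup directory, job name, schedule name, thresholds, and start date are assumptions made for the example.

```sql
-- Hypothetical sketch: names and paths marked "assumed" are not from the exam script.
DECLARE @backup_job_id uniqueidentifier,
        @primary_id    uniqueidentifier,
        @schedule_id   int;

EXEC master.dbo.sp_add_log_shipping_primary_database
    @database                 = N'salesOrders',
    @backup_directory         = N'D:\Backup',             -- assumed local path
    @backup_share             = N'\\localhost\Backup',    -- share named in Box 1
    @backup_job_name          = N'LSBackup_salesOrders',  -- assumed job name
    @backup_retention_period  = 4320,                     -- minutes: 4320 / 60 = 72 hours
    @backup_threshold         = 60,                       -- assumed
    @threshold_alert_enabled  = 1,                        -- assumed
    @history_retention_period = 5760,                     -- assumed
    @backup_job_id            = @backup_job_id OUTPUT,
    @primary_id               = @primary_id OUTPUT,
    @overwrite                = 1;

EXEC msdb.dbo.sp_add_schedule
    @schedule_name          = N'LSBackupSchedule_Example', -- assumed schedule name
    @enabled                = 1,
    @freq_type              = 4,         -- daily
    @freq_interval          = 1,         -- every day
    @freq_subday_type       = 4,         -- unit is minutes
    @freq_subday_interval   = 15,        -- run every 15 minutes
    @freq_recurrence_factor = 0,
    @active_start_date      = 20240101,  -- assumed
    @active_end_date        = 99991231,
    @active_start_time      = 0,
    @active_end_time        = 235900,
    @schedule_id            = @schedule_id OUTPUT;

-- Bind the schedule to the backup job created by the first procedure.
EXEC msdb.dbo.sp_attach_schedule
    @job_id      = @backup_job_id,
    @schedule_id = @schedule_id;
```

Note that the schedule name is only a label: giving it a server-like name does not make the job run on that server.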

Question #42

HOTSPOT -

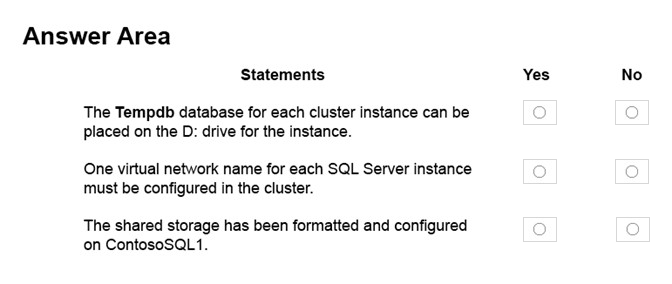

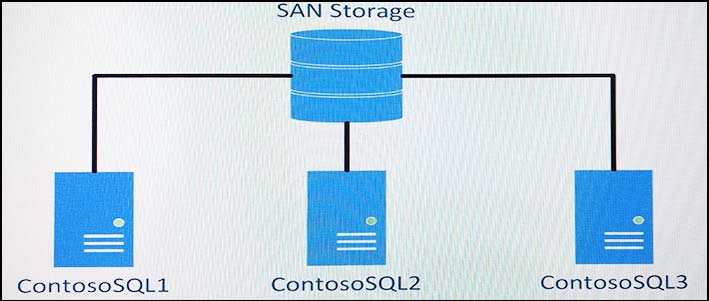

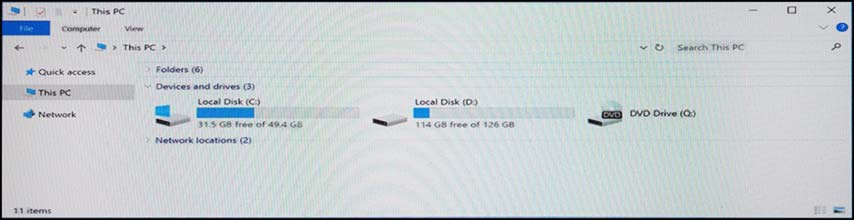

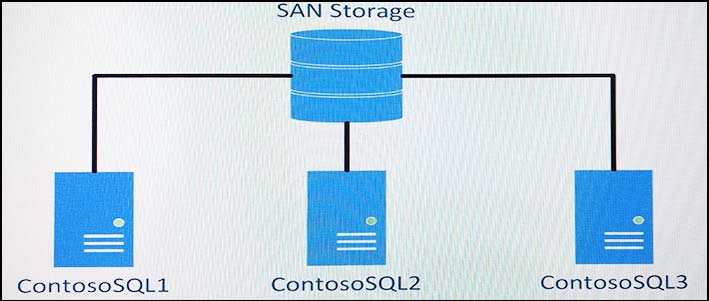

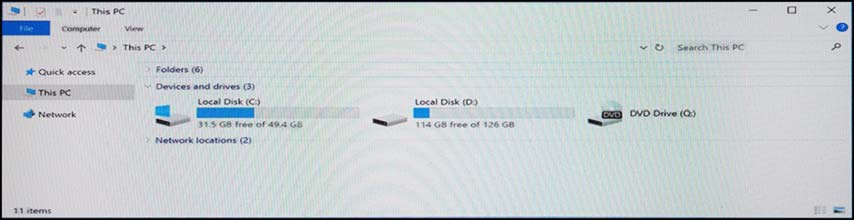

You are planning the deployment of two new Always On Failover Cluster Instances (FCIs) of Microsoft SQL Server to a single Windows Server Cluster with three nodes. The planned configuration for the cluster is shown in the Server Layout exhibit. (Click the Exhibit button.)

The SAN team has configured storage for the cluster and sent the configuration to you in the email shown in the SAN Team Email exhibit. (Click the Exhibit button.)

Each node of the cluster has identical local storage available as shown in the Local Storage exhibit. (Click the Exhibit button.)

All local storage is on SSD.

You need to plan specific configurations for the new cluster.

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

Hot Area:

Question #43

HOTSPOT -

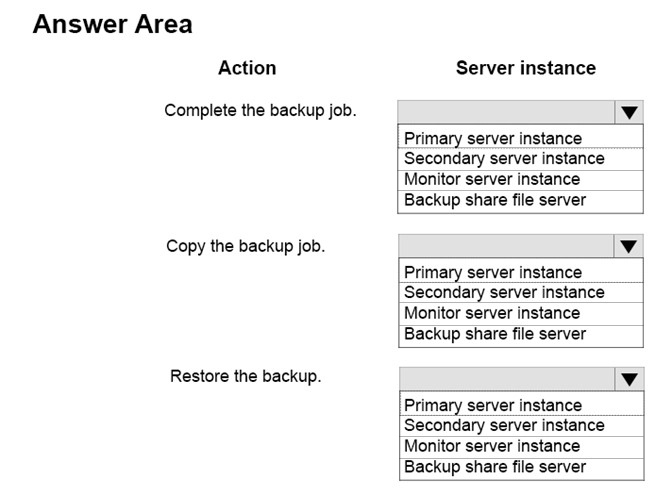

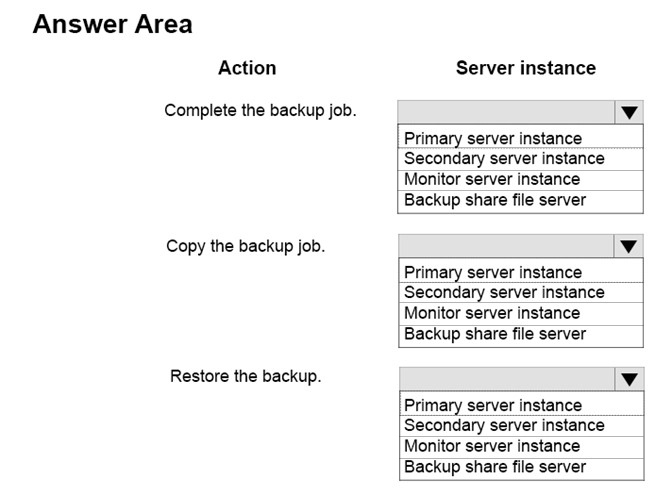

You are planning to deploy log shipping for Microsoft SQL Server and store all backups on a dedicated fileshare.

You need to configure the servers to perform each log shipping step.

Which server instance should you configure to perform each action? To answer, select the appropriate server instances in the dialog box in the answer area.

Hot Area:

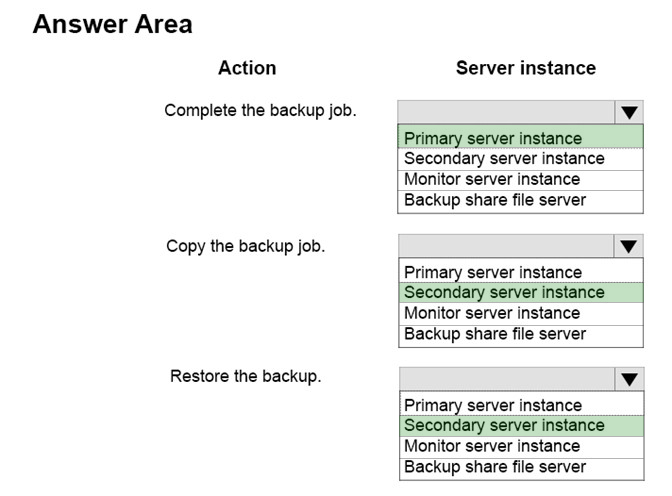

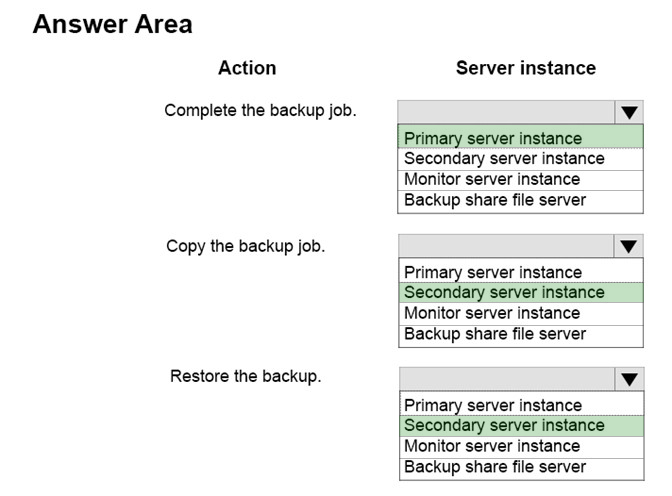

Correct Answer:

Note: Before you configure log shipping, you must create a share to make the transaction log backups available to the secondary server.

SQL Server Log shipping allows you to automatically send transaction log backups from a primary database on a primary server instance to one or more secondary databases on separate secondary server instances. The transaction log backups are applied to each of the secondary databases individually. An optional third server instance, known as the monitor server, records the history and status of backup and restore operations and, optionally, raises alerts if these operations fail to occur as scheduled.

Box 1: Primary server instance

The primary server instance runs the backup job to back up the transaction log on the primary database.

Backup job: A SQL Server Agent job that performs the backup operation, logs history to the local server and the monitor server, and deletes old backup files and history information. When log shipping is enabled, the job category "Log Shipping Backup" is created on the primary server instance.

Box 2: Secondary server instance

Each of the three secondary server instances runs its own copy job to copy the primary log-backup file to its own local destination folder.

Copy job: A SQL Server Agent job that copies the backup files from the primary server to a configurable destination on the secondary server and logs history on the secondary server and the monitor server. When log shipping is enabled on a database, the job category "Log Shipping Copy" is created on each secondary server in a log shipping configuration.

Box 3: Secondary server instance

Each secondary server instance runs its own restore job to restore the log backup from the local destination folder onto the local secondary database.

Restore job: A SQL Server Agent job that restores the copied backup files to the secondary databases. It logs history on the local server and the monitor server, and deletes old files and old history information. When log shipping is enabled on a database, the job category "Log Shipping Restore" is created on the secondary server instance.

References: https://docs.microsoft.com/en-us/sql/database-engine/log-shipping/about-log-shipping-sql-server
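One way to check which log shipping jobs actually live on a given instance is to query the SQL Server Agent catalog in msdb. The query below is a minimal sketch: run it on each instance in turn. It filters on a category name prefix, on the assumption that the log shipping categories start with "Log Shipping" as described above.

```sql
-- List SQL Server Agent jobs in any log shipping category on the current instance.
SELECT j.name    AS job_name,
       c.name    AS category_name,
       j.enabled AS is_enabled
FROM msdb.dbo.sysjobs AS j
JOIN msdb.dbo.syscategories AS c
    ON c.category_id = j.category_id
WHERE c.name LIKE N'Log Shipping%'
ORDER BY j.name;
```

On the primary you would expect to see only the backup job, and on each secondary a copy job and a restore job.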

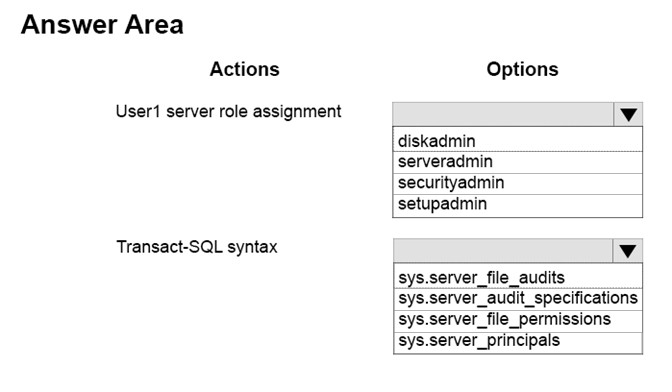

Question #44

HOTSPOT -

You manage a Microsoft SQL Server instance. You have a user named User1.

You need to grant the minimum permissions necessary to allow User1 to review audit logs.

For each action, which option should you use? To answer, select the appropriate options in the answer area.

Hot Area:

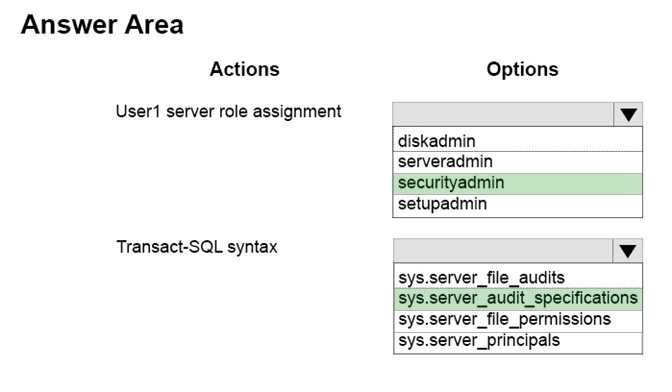

Correct Answer:

Box 1: securityadmin

Accessing log files for online instances of SQL Server requires membership in the securityadmin fixed server role.

Box 2: sys.server_audit_specifications

sys.server_audit_specifications contains information about the server audit specifications in a SQL Server audit on a server instance.
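The two selections could be applied roughly as follows. This is a sketch only: it assumes User1 exists as a server-level login, which the question does not state explicitly.

```sql
-- Assumption: [User1] is a server login; add it to the fixed server role from Box 1.
ALTER SERVER ROLE securityadmin ADD MEMBER [User1];

-- Review the server audit specifications named in Box 2.
SELECT name,
       is_state_enabled,
       audit_guid,
       create_date
FROM sys.server_audit_specifications;
```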

Question #45

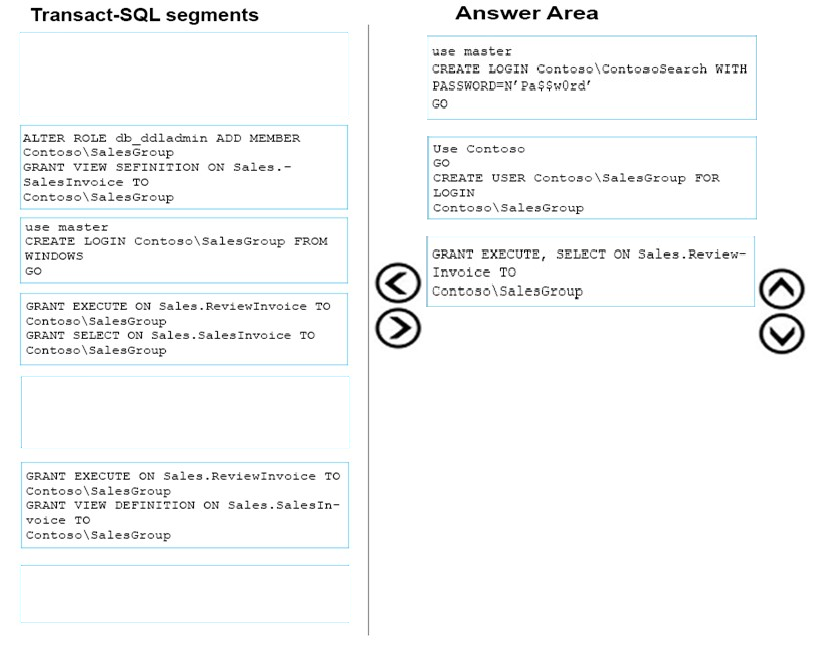

DRAG DROP -

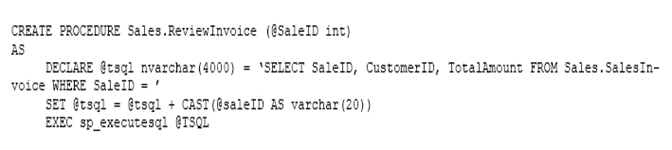

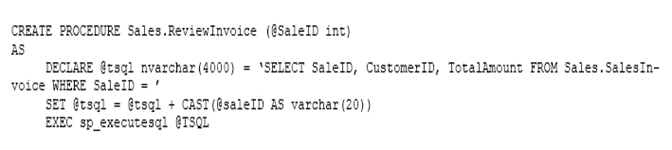

You administer a Microsoft SQL Server database named Contoso. You create a stored procedure named Sales.ReviewInvoice by running the following Transact-SQL statement:

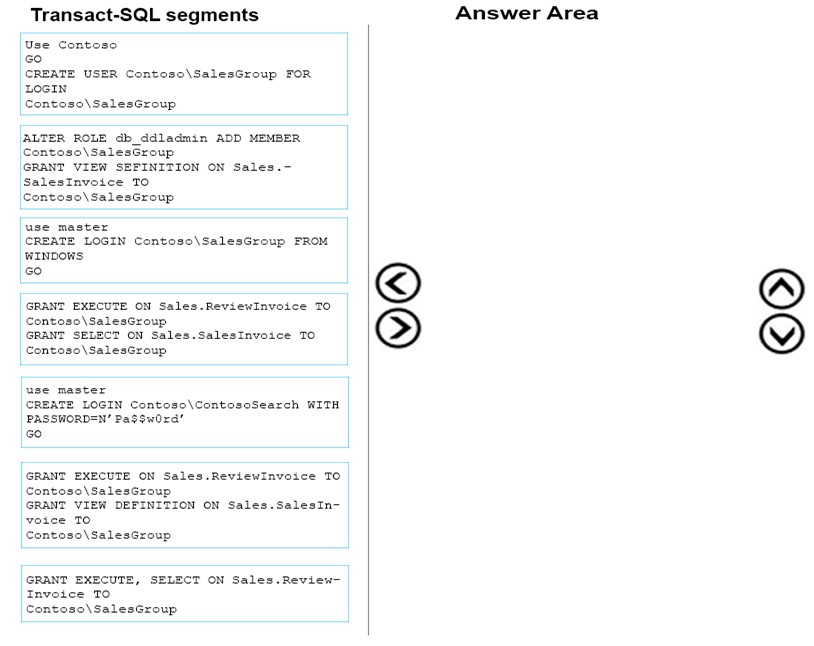

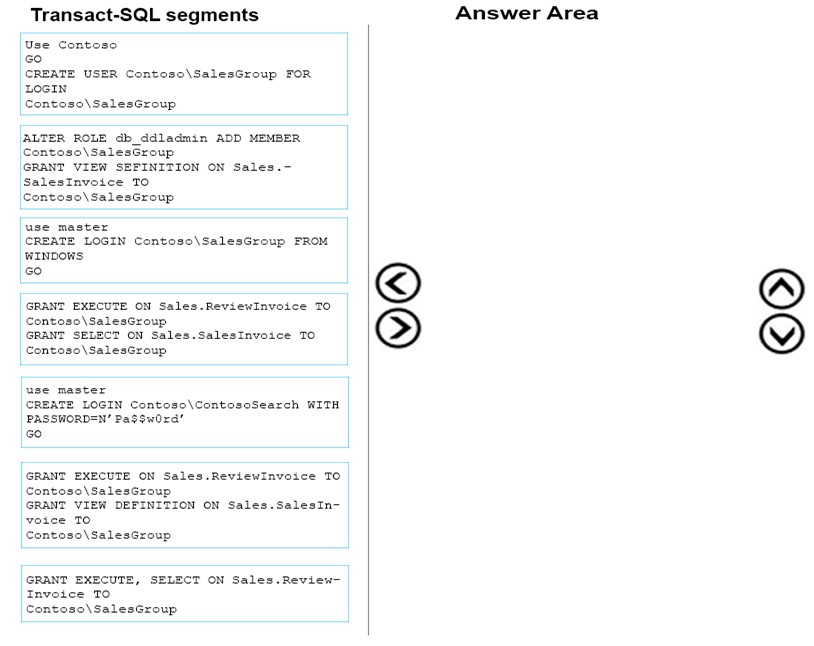

You need to create a Windows-authenticated login named ContosoSearch and ensure that ContosoSearch can run the Sales.ReviewInvoices stored procedure.

Which three Transact-SQL segments should you use to develop the solution? To answer, move the appropriate Transact-SQL segments from the list of Transact-SQL segments to the answer area and arrange them in the correct order.

Select and Place:

Question #46

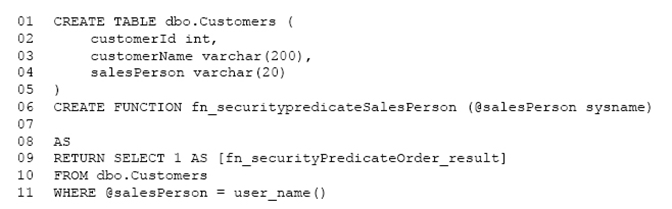

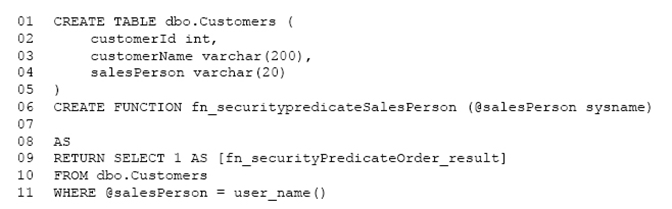

You have a database that stores information for a shipping company. You create a table named Customers by running the following Transact-SQL statement.

(Line numbers are included for reference only.)

You need to ensure that salespeople can view data only for the customers that are assigned to them.

Which Transact-SQL segment should you insert at line 07?

- A. RETURNS varchar(20) WITH Schemabinding

- B. RETURNS dbo.Customers ORDER BY @salesPerson

- C. RETURNS table ORDER BY @salesPerson

- D. RETURNS table WITH Schemabinding

Correct Answer:

D

The return value of a function can be either a scalar (single) value or a table. A row-level security predicate must be an inline table-valued function, so the function here returns table.

SELECT 1 returns a 1 for each qualifying row. Here it is used to test whether any row matches the criteria: if a row matches the WHERE clause, the function returns 1; otherwise it returns nothing.

Specify the WITH SCHEMABINDING clause when you are creating the function. This ensures that the objects referenced in the function definition cannot be modified unless the function is also modified.

References: https://docs.microsoft.com/en-us/sql/t-sql/statements/create-function-transact-sql
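A predicate function of this shape might look like the sketch below. The exam's table definition is shown only as an image, so the function name, the policy name, and the SalesPersonUserName column are hypothetical, and matching on USER_NAME() is an assumption about how salespeople are identified.

```sql
-- Hypothetical inline table-valued predicate function (answer D: RETURNS table WITH SCHEMABINDING).
CREATE FUNCTION dbo.fn_limitCustomers (@salesPerson AS sysname)
RETURNS TABLE
WITH SCHEMABINDING
AS
    -- Returns one row only when the row's salesperson is the current database user.
    RETURN SELECT 1 AS fn_result
           WHERE @salesPerson = USER_NAME();
GO

-- Bind the function to the table as a filter predicate (column name is assumed).
CREATE SECURITY POLICY dbo.CustomerFilter
    ADD FILTER PREDICATE dbo.fn_limitCustomers(SalesPersonUserName)
    ON dbo.Customers
    WITH (STATE = ON);
GO
```

With the policy on, a SELECT against dbo.Customers silently returns only the rows for which the function yields a row.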

Question #47

You manage a Microsoft SQL Server environment. You plan to encrypt data when you create backups.

You need to configure the encryption options for backups.

What should you configure?

- A. a certificate

- B. an MD5 hash

- C. a DES key

- D. an AES 256-bit key

Correct Answer:

D

To encrypt during backup, you must specify an encryption algorithm and an encryptor to secure the encryption key. The following are the supported encryption options:

Encryption algorithm: The supported encryption algorithms are AES 128, AES 192, AES 256, and Triple DES.

Encryptor: A certificate or asymmetric key.

References: https://docs.microsoft.com/en-us/sql/relational-databases/backup-restore/backup-encryption
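The algorithm and the encryptor are both named in the BACKUP statement. The following is a minimal sketch: the database name, backup path, certificate name, and password placeholder are all hypothetical.

```sql
USE master;
GO

-- A database master key in master protects the certificate's private key.
CREATE MASTER KEY ENCRYPTION BY PASSWORD = N'<UseAStrongPasswordHere>';
GO

-- Encryptor: a server certificate (name is assumed for this example).
CREATE CERTIFICATE BackupEncryptCert
    WITH SUBJECT = N'Backup encryption certificate';
GO

-- Algorithm: AES 256, one of the supported options listed above.
BACKUP DATABASE [SalesDB]
    TO DISK = N'D:\Backup\SalesDB.bak'
    WITH COMPRESSION,
         ENCRYPTION (ALGORITHM = AES_256, SERVER CERTIFICATE = BackupEncryptCert);
GO
```

The certificate and its private key should be backed up separately, since the backup cannot be restored without them.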

Question #48

You have a database named DB1 that stores more than 700 gigabytes (GB) of data and serves millions of requests per hour.

Queries on DB1 are taking longer than normal to complete.

You run the following Transact-SQL statement:

SELECT * FROM sys.database_query_store_options

You determine that the Query Store is in Read-Only mode.

You need to maximize the time that the Query Store is in Read-Write mode.

Which Transact-SQL statement should you run?

- A. ALTER DATABASE DB1 SET QUERY_STORE (QUERY_CAPTURE_MODE = ALL)

- B. ALTER DATABASE DB1 SET QUERY_STORE (MAX_STORAGE_SIZE_MB = 50)

- C. ALTER DATABASE DB1 SET QUERY_STORE (CLEANUP_POLICY = (STALE_QUERY_THRESHOLD_DAYS = 14));

- D. ALTER DATABASE DB1 SET QUERY_STORE (QUERY_CAPTURE_MODE = NONE)

Correct Answer:

C

Stale Query Threshold (Days): Time-based cleanup policy that controls the retention period of persisted runtime statistics and inactive queries.

By default, Query Store is configured to keep the data for 30 days which may be unnecessarily long for your scenario.

Avoid keeping historical data that you do not plan to use. This will reduce changes to read-only status. The size of Query Store data as well as the time to detect and mitigate the issue will be more predictable. Use Management Studio or the following script to configure time-based cleanup policy:

ALTER DATABASE [QueryStoreDB]

SET QUERY_STORE (CLEANUP_POLICY = (STALE_QUERY_THRESHOLD_DAYS = 14));

References: https://docs.microsoft.com/en-us/sql/relational-databases/performance/best-practice-with-the-query-store
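To see why Query Store went read-only and how close it is to its size limit, the options view can be queried for specific columns instead of SELECT *. The sketch below is meant to be run in the context of DB1. Switching back to read-write afterwards is an extra step that the question does not ask for.

```sql
-- Inspect the current Query Store state, storage use, and cleanup threshold.
SELECT actual_state_desc,
       desired_state_desc,
       readonly_reason,
       current_storage_size_mb,
       max_storage_size_mb,
       stale_query_threshold_days
FROM sys.database_query_store_options;

-- Shorten retention (answer C), then request read-write mode again.
ALTER DATABASE DB1
SET QUERY_STORE (CLEANUP_POLICY = (STALE_QUERY_THRESHOLD_DAYS = 14));

ALTER DATABASE DB1
SET QUERY_STORE (OPERATION_MODE = READ_WRITE);
```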

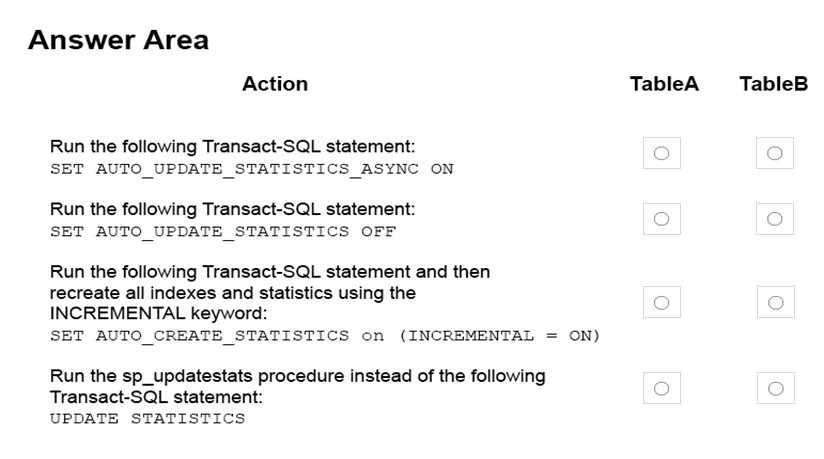

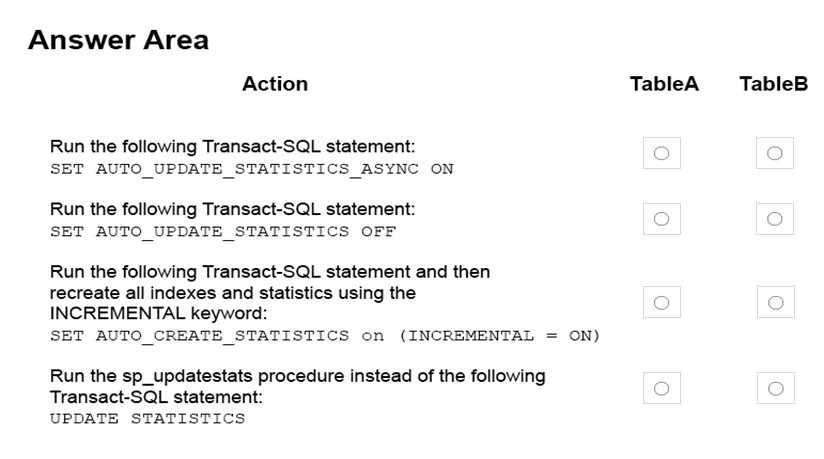

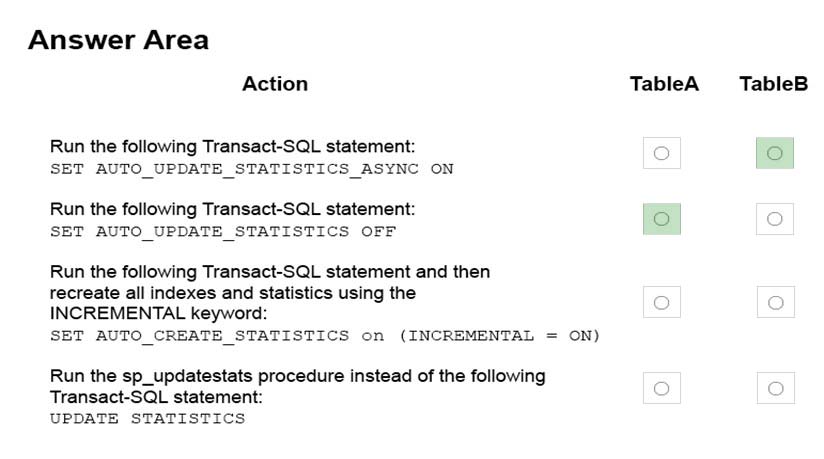

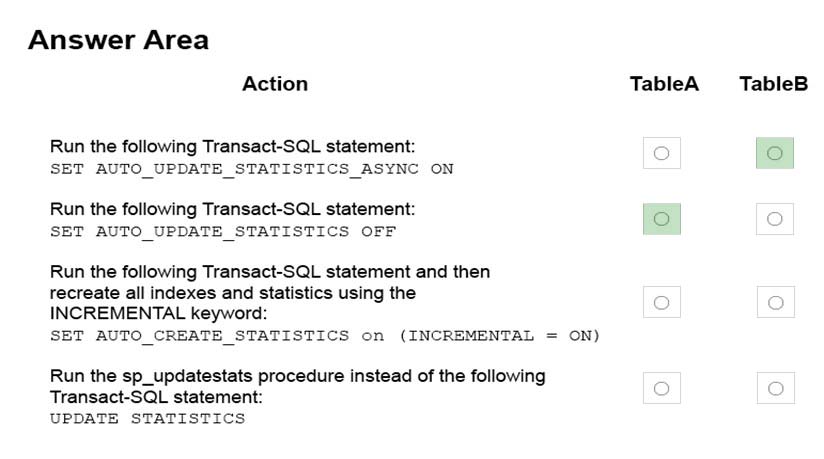

Question #49

HOTSPOT -

You deploy a Microsoft SQL Server instance to support a global sales application. The instance includes the following tables: TableA and TableB.

TableA is a partitioned table that uses an incrementing integer number for partitioning. The table has millions of rows in each partition. Most changes to the data in TableA affect recently added data. The UPDATE STATISTICS for TableA takes longer to complete than the allotted maintenance window.

Thousands of operations are performed against TableB each minute. You observe a large number of Auto Update Statistics events for TableB.

You need to address the performance issues with each table.

In the table below, identify the action that will resolve the issues for each table.

NOTE: Make only one selection in each column.

Hot Area:

Correct Answer:

Table A: Auto_update statistics off

Table A does not change much. There is no need to update the statistics on this table.

Table B: SET AUTO_UPDATE_STATISTICS_ASYNC ON

You can set the database to update statistics asynchronously:

ALTER DATABASE YourDBName

SET AUTO_UPDATE_STATISTICS_ASYNC ON

If you enable this option then the Query Optimizer will run the query first and update the outdated statistics afterwards. When you set this option to OFF, the Query Optimizer will update the outdated statistics before compiling the query. This option can be useful in OLTP environments.

References: https://www.mssqltips.com/sqlservertip/2766/sql-server-auto-update-and-auto-create-statistics-options/
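The current statistics settings can be confirmed from sys.databases after the change. This is a small sketch that reuses the placeholder database name YourDBName from the statement above. The asynchronous option only takes effect while AUTO_UPDATE_STATISTICS is also ON, so both flags are shown.

```sql
-- Check the synchronous and asynchronous auto-update statistics flags for one database.
SELECT name,
       is_auto_update_stats_on,
       is_auto_update_stats_async_on
FROM sys.databases
WHERE name = N'YourDBName';
```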

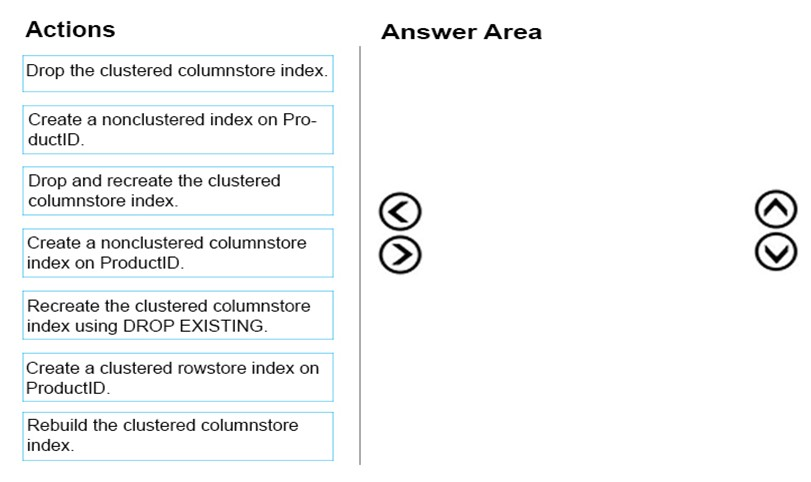

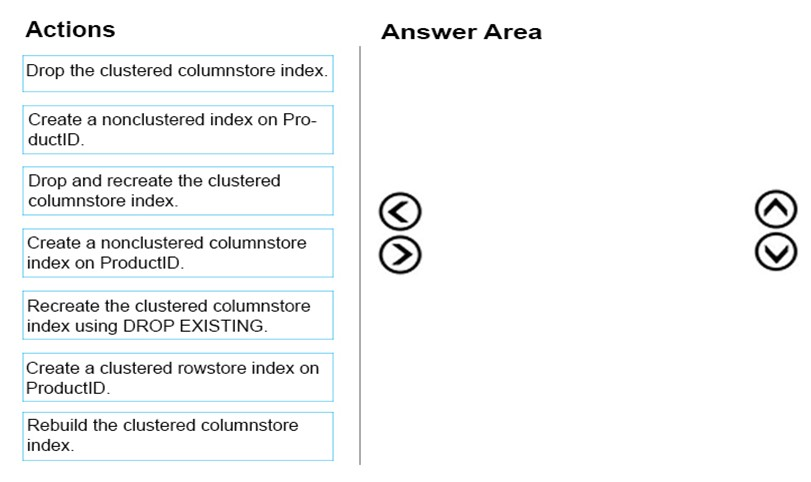

Question #50

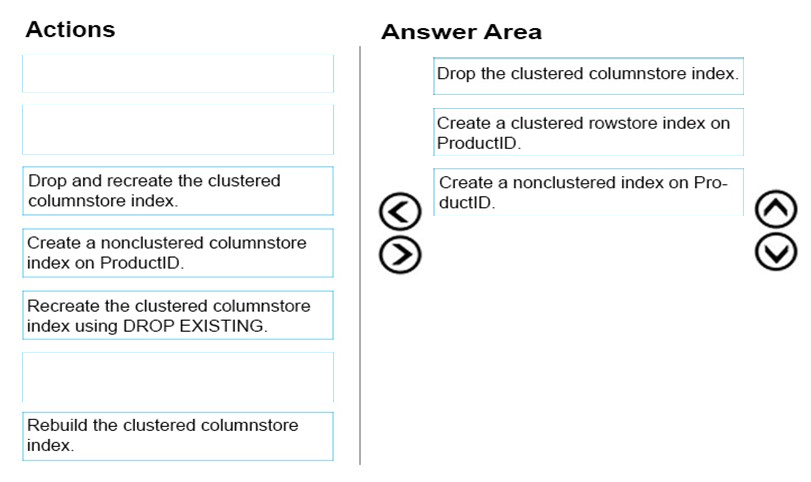

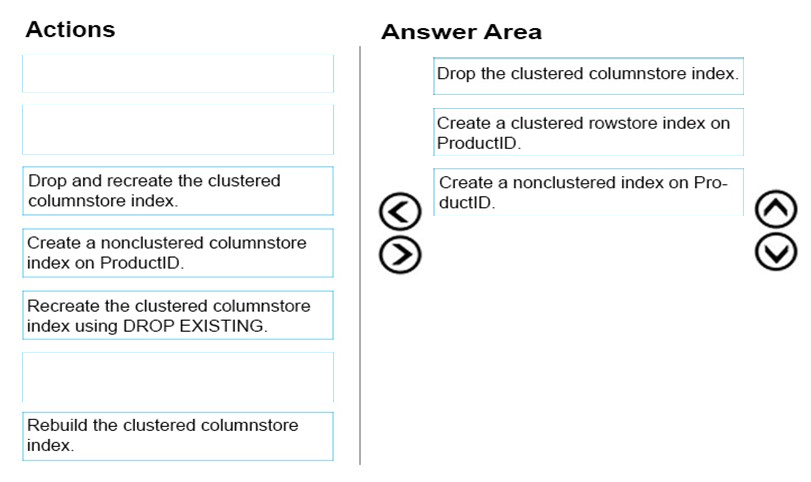

DRAG DROP -

You administer a database that is used for reporting purposes. The database has a large fact table that contains three hundred million rows. The table includes a clustered columnstore index and a nonclustered index on the ProductID column. New rows are inserted into the table every day.

Performance of queries that filter on the ProductID column has degraded significantly.

You need to improve the performance of the queries.

Which three actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

Correct Answer:

Step 1: Drop the clustered columnstore index

Step 2: Create a clustered rowstore index on ProductID.

Rowstore indexes perform best on queries that seek into the data, searching for a particular value, or for queries on a small range of values. Use rowstore indexes with transactional workloads since they tend to require mostly table seeks instead of table scans.

Step 3: Create a nonclustered index on ProductID

Incorrect Answers:

Not: Create a nonclustered columnstore index on ProductID

Use a nonclustered columnstore index to perform analysis in real-time on an OLTP workload.

References: https://docs.microsoft.com/en-us/sql/relational-databases/indexes/columnstore-indexes-overview
