Google Professional Machine Learning Engineer Exam Practice Questions (P. 3)

- Full Access (339 questions)

- One Year of Premium Access

- Access to one million comments

- Seamless ChatGPT Integration

- Ability to download PDF files

- Anki Flashcard files for revision

- No Captcha & No AdSense

- Advanced Exam Configuration

Question #21

You have deployed multiple versions of an image classification model on AI Platform. You want to monitor the performance of the model versions over time. How should you perform this comparison?

- ACompare the loss performance for each model on a held-out dataset.

- BCompare the loss performance for each model on the validation data.

- CCompare the receiver operating characteristic (ROC) curve for each model using the What-If Tool.

- DCompare the mean average precision across the models using the Continuous Evaluation feature.Most Voted

Correct Answer:

B

B

GPT-4o - Answer

GPT-4o - Answer

When monitoring the performance of various model versions in a machine learning workflow, utilizing validation data offers a structured approach to performance evaluation. This method allows for consistent comparison across different model versions by using a designated subset of data that hasn't been involved in the training process. This consistency is crucial as it directly impacts decision-making regarding model improvements or selections over time. Validation data serves this purpose effectively, ensuring that the performance metrics you're comparing are relevant and provide a reliable basis for assessing model evolution.

send

light_mode

delete

Question #22

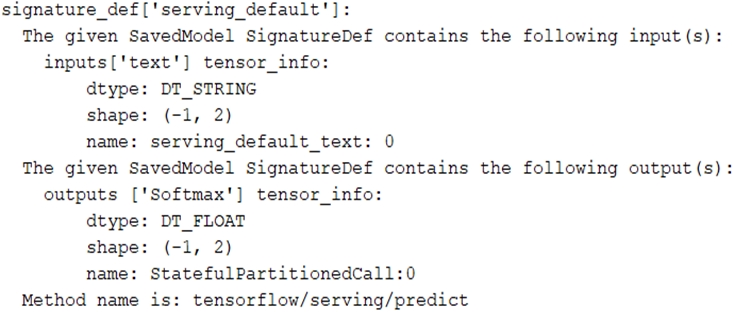

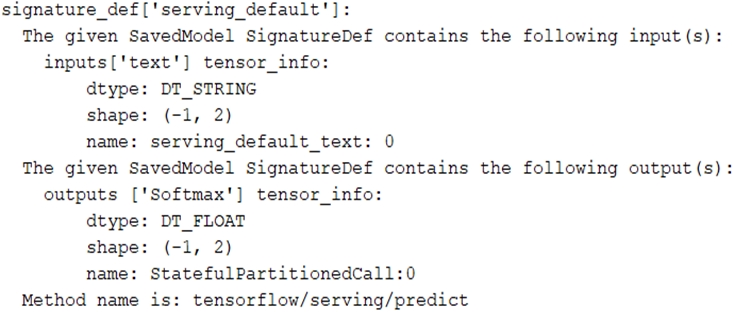

You trained a text classification model. You have the following SignatureDefs:

You started a TensorFlow-serving component server and tried to send an HTTP request to get a prediction using: headers = {"content-type": "application/json"} json_response = requests.post('http: //localhost:8501/v1/models/text_model:predict', data=data, headers=headers)

What is the correct way to write the predict request?

You started a TensorFlow-serving component server and tried to send an HTTP request to get a prediction using: headers = {"content-type": "application/json"} json_response = requests.post('http: //localhost:8501/v1/models/text_model:predict', data=data, headers=headers)

What is the correct way to write the predict request?

- Adata = json.dumps({ג€signature_nameג€: ג€seving_defaultג€, ג€instancesג€ [['ab', 'bc', 'cd']]})

- Bdata = json.dumps({ג€signature_nameג€: ג€serving_defaultג€, ג€instancesג€ [['a', 'b', 'c', 'd', 'e', 'f']]})

- Cdata = json.dumps({ג€signature_nameג€: ג€serving_defaultג€, ג€instancesג€ [['a', 'b', 'c'], ['d', 'e', 'f']]})

- Ddata = json.dumps({ג€signature_nameג€: ג€serving_defaultג€, ג€instancesג€ [['a', 'b'], ['c', 'd'], ['e', 'f']]})Most Voted

Correct Answer:

C

C

send

light_mode

delete

Question #23

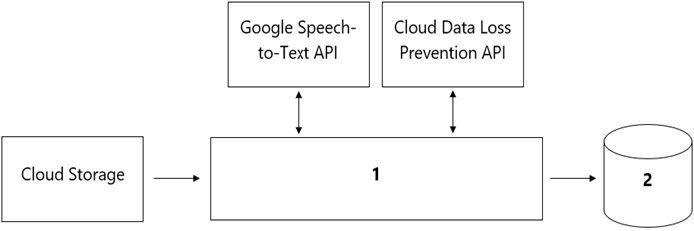

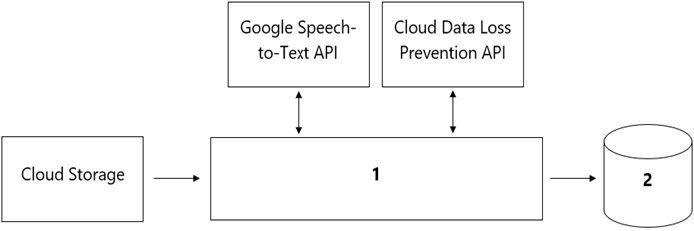

Your organization's call center has asked you to develop a model that analyzes customer sentiments in each call. The call center receives over one million calls daily, and data is stored in Cloud Storage. The data collected must not leave the region in which the call originated, and no Personally Identifiable Information (PII) can be stored or analyzed. The data science team has a third-party tool for visualization and access which requires a SQL ANSI-2011 compliant interface. You need to select components for data processing and for analytics. How should the data pipeline be designed?

- A1= Dataflow, 2= BigQueryMost Voted

- B1 = Pub/Sub, 2= Datastore

- C1 = Dataflow, 2 = Cloud SQL

- D1 = Cloud Function, 2= Cloud SQL

Correct Answer:

B

B

send

light_mode

delete

Question #24

You are an ML engineer at a global shoe store. You manage the ML models for the company's website. You are asked to build a model that will recommend new products to the user based on their purchase behavior and similarity with other users. What should you do?

- ABuild a classification model

- BBuild a knowledge-based filtering model

- CBuild a collaborative-based filtering modelMost Voted

- DBuild a regression model using the features as predictors

Correct Answer:

C

Reference:

https://cloud.google.com/solutions/recommendations-using-machine-learning-on-compute-engine

C

Reference:

https://cloud.google.com/solutions/recommendations-using-machine-learning-on-compute-engine

send

light_mode

delete

Question #25

You work for a social media company. You need to detect whether posted images contain cars. Each training example is a member of exactly one class. You have trained an object detection neural network and deployed the model version to AI Platform Prediction for evaluation. Before deployment, you created an evaluation job and attached it to the AI Platform Prediction model version. You notice that the precision is lower than your business requirements allow. How should you adjust the model's final layer softmax threshold to increase precision?

- AIncrease the recall.

- BDecrease the recall.Most Voted

- CIncrease the number of false positives.

- DDecrease the number of false negatives.

Correct Answer:

D

D

GPT-4o - Answer

GPT-4o - Answer

To boost precision in your model, tweaking the threshold to decrease recall is key. This adjustment generally means accepting fewer true positives (unless compensated by a reduction in false positives), which naturally reduces the recall but enhances the precision. This method helps refine predictions focusing more on quality (precision) rather than quantity (recall), aligning better with business needs demanding higher precision in detecting cars in images. Remember, balancing precision and recall often requires careful threshold management based on specific performance criteria.

send

light_mode

delete

Question #26

You are responsible for building a unified analytics environment across a variety of on-premises data marts. Your company is experiencing data quality and security challenges when integrating data across the servers, caused by the use of a wide range of disconnected tools and temporary solutions. You need a fully managed, cloud-native data integration service that will lower the total cost of work and reduce repetitive work. Some members on your team prefer a codeless interface for building Extract, Transform, Load (ETL) process. Which service should you use?

- ADataflow

- BDataprep

- CApache Flink

- DCloud Data FusionMost Voted

Correct Answer:

D

D

GPT-4o - Answer

GPT-4o - Answer

Using Google Cloud Data Fusion is ideal for your scenario since it offers a codeless interface for constructing ETL processes. This serves both your requirement for a seamless integration solution across diverse data environments and accommodates team preferences for a codeless setup. Additionally, its capability to handle high volumes of data pipelines efficiently and its fully managed, cloud-native nature supports scaling and security, effectively addressing your challenges. Note: Both Data Fusion and Dataprep could potentially meet your needs, but Data Fusion aligns better with the overall requirements of minimizing repetitive tasks and integrating multiple data sources.

send

light_mode

delete

Question #27

You are an ML engineer at a regulated insurance company. You are asked to develop an insurance approval model that accepts or rejects insurance applications from potential customers. What factors should you consider before building the model?

- ARedaction, reproducibility, and explainability

- BTraceability, reproducibility, and explainabilityMost Voted

- CFederated learning, reproducibility, and explainability

- DDifferential privacy, federated learning, and explainability

Correct Answer:

A

A

GPT-4o - Answer

GPT-4o - Answer

Certainly, in the context of developing an insurance approval model, the key factors to include are traceability, reproducibility, and explainability. Traceability ensures we know where data originates, which is crucial for accountability. Reproducibility means others can recreate the model results, maintaining transparency and trust. Explainability is essential, as both customers and regulators require clear reasoning behind decisions. While other factors such as redaction and advanced privacy techniques are relevant, these three core principles form the foundation for a trustworthy model in the regulated insurance sector.

send

light_mode

delete

Question #28

You are training a Resnet model on AI Platform using TPUs to visually categorize types of defects in automobile engines. You capture the training profile using the

Cloud TPU profiler plugin and observe that it is highly input-bound. You want to reduce the bottleneck and speed up your model training process. Which modifications should you make to the tf.data dataset? (Choose two.)

Cloud TPU profiler plugin and observe that it is highly input-bound. You want to reduce the bottleneck and speed up your model training process. Which modifications should you make to the tf.data dataset? (Choose two.)

- AUse the interleave option for reading data.Most Voted

- BReduce the value of the repeat parameter.

- CIncrease the buffer size for the shuttle option.

- DSet the prefetch option equal to the training batch size.Most Voted

- EDecrease the batch size argument in your transformation.

Correct Answer:

AE

AE

send

light_mode

delete

Question #29

You have trained a model on a dataset that required computationally expensive preprocessing operations. You need to execute the same preprocessing at prediction time. You deployed the model on AI Platform for high-throughput online prediction. Which architecture should you use?

- AValidate the accuracy of the model that you trained on preprocessed data. Create a new model that uses the raw data and is available in real time. Deploy the new model onto AI Platform for online prediction.

- BSend incoming prediction requests to a Pub/Sub topic. Transform the incoming data using a Dataflow job. Submit a prediction request to AI Platform using the transformed data. Write the predictions to an outbound Pub/Sub queue.Most Voted

- CStream incoming prediction request data into Cloud Spanner. Create a view to abstract your preprocessing logic. Query the view every second for new records. Submit a prediction request to AI Platform using the transformed data. Write the predictions to an outbound Pub/Sub queue.

- DSend incoming prediction requests to a Pub/Sub topic. Set up a Cloud Function that is triggered when messages are published to the Pub/Sub topic. Implement your preprocessing logic in the Cloud Function. Submit a prediction request to AI Platform using the transformed data. Write the predictions to an outbound Pub/Sub queue.

Correct Answer:

D

Reference:

https://cloud.google.com/pubsub/docs/publisher

D

Reference:

https://cloud.google.com/pubsub/docs/publisher

send

light_mode

delete

Question #30

Your team trained and tested a DNN regression model with good results. Six months after deployment, the model is performing poorly due to a change in the distribution of the input data. How should you address the input differences in production?

- ACreate alerts to monitor for skew, and retrain the model.Most Voted

- BPerform feature selection on the model, and retrain the model with fewer features.

- CRetrain the model, and select an L2 regularization parameter with a hyperparameter tuning service.

- DPerform feature selection on the model, and retrain the model on a monthly basis with fewer features.

Correct Answer:

C

C

send

light_mode

delete

All Pages