Databricks Certified Data Engineer Associate Exam Practice Questions (P. 4)

Question #16

Which of the following commands can be used to write data into a Delta table while avoiding the writing of duplicate records?

- A. DROP

- B. IGNORE

- C. MERGE (Most Voted)

- D. APPEND

- E. INSERT

Correct Answer:

C

GPT-4o - Answer

The MERGE command in Delta Lake performs upserts: it updates existing records and inserts new ones based on a match condition. Because each incoming row is compared against what is already in the table, a row that matches an existing record can be updated or skipped rather than inserted a second time, so the write does not introduce duplicates on the matched key. That makes MERGE the right fit for the task described.
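The upsert behavior described above can be illustrated with a small pure-Python sketch. This is only a conceptual model, not the Delta Lake API: the function `merge_upsert`, the key column `id`, and the sample rows are hypothetical names chosen for illustration, and the sketch assumes the key is unique in the target.

```python
from typing import Dict, Iterable, List


def merge_upsert(target: List[Dict], source: Iterable[Dict], key: str) -> List[Dict]:
    """Conceptual model of MERGE: update on match, insert otherwise."""
    # Index the target rows by the merge key (assumes the key is unique in target).
    by_key = {row[key]: dict(row) for row in target}
    for row in source:
        if row[key] in by_key:
            # Analogous to WHEN MATCHED THEN UPDATE: overwrite the existing columns.
            by_key[row[key]].update(row)
        else:
            # Analogous to WHEN NOT MATCHED THEN INSERT: add the new row.
            by_key[row[key]] = dict(row)
    return list(by_key.values())


# Hypothetical sample data.
table = [{"id": 1, "city": "Oslo"}, {"id": 2, "city": "Rome"}]
batch = [{"id": 2, "city": "Roma"}, {"id": 3, "city": "Lima"}]

table = merge_upsert(table, batch, key="id")
# Applying the same batch a second time leaves the table unchanged.
table_again = merge_upsert(table, batch, key="id")
```

A plain append of `batch` would have produced two rows with `id` 2, which is the duplicate that the match condition avoids.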

Question #17

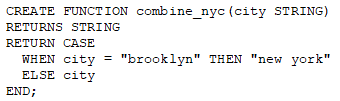

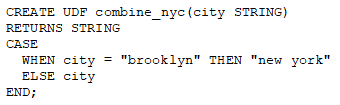

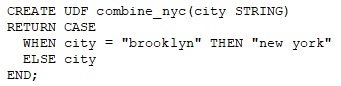

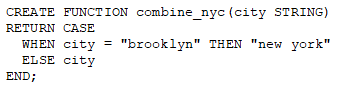

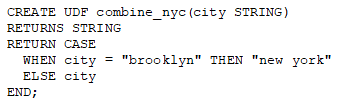

A data engineer needs to apply custom logic to string column city in table stores for a specific use case. In order to apply this custom logic at scale, the data engineer wants to create a SQL user-defined function (UDF).

Which of the following code blocks creates this SQL UDF?

Question #18

A data analyst has a series of queries in a SQL program. The data analyst wants this program to run every day. They only want the final query in the program to run on Sundays. They ask for help from the data engineering team to complete this task.

Which of the following approaches could be used by the data engineering team to complete this task?

- A. They could submit a feature request with Databricks to add this functionality.

- B. They could wrap the queries using PySpark and use Python’s control flow system to determine when to run the final query. (Most Voted)

- C. They could only run the entire program on Sundays.

- D. They could automatically restrict access to the source table in the final query so that it is only accessible on Sundays.

- E. They could redesign the data model to separate the data used in the final query into a new table.

Correct Answer:

B
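
Option B might look roughly like the sketch below. The function `run_program`, its parameters, and the placeholder query strings are hypothetical; the runner is passed in as a callable so the sketch works without a Spark session, and in a Databricks notebook it could plausibly be `spark.sql`.

```python
from datetime import date
from typing import Callable, List, Optional


def run_program(
    daily_queries: List[str],
    final_query: str,
    run_sql: Callable[[str], object],
    today: Optional[date] = None,
) -> None:
    """Run every daily query, then the final query only on Sundays."""
    today = today or date.today()
    for query in daily_queries:
        run_sql(query)
    # date.weekday() returns 0 for Monday through 6 for Sunday.
    if today.weekday() == 6:
        run_sql(final_query)


# Hypothetical usage: record the queries instead of executing them.
executed_sunday: List[str] = []
run_program(["SELECT 1", "SELECT 2"], "SELECT 3", executed_sunday.append,
            today=date(2024, 1, 7))  # 2024-01-07 is a Sunday

executed_monday: List[str] = []
run_program(["SELECT 1", "SELECT 2"], "SELECT 3", executed_monday.append,
            today=date(2024, 1, 8))  # 2024-01-08 is a Monday
```

The job itself is still scheduled daily; only the final query is guarded by the weekday check.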

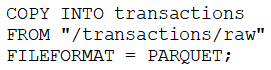

Question #19

A data engineer runs a statement every day to copy the previous day’s sales into the table transactions. Each day’s sales are in their own file in the location "/transactions/raw".

Today, the data engineer runs the following command to complete this task:

After running the command today, the data engineer notices that the number of records in table transactions has not changed.

Which of the following describes why the statement might not have copied any new records into the table?

- A. The format of the files to be copied was not included with the FORMAT_OPTIONS keyword.

- B. The names of the files to be copied were not included with the FILES keyword.

- C. The previous day’s file has already been copied into the table. (Most Voted)

- D. The PARQUET file format does not support COPY INTO.

- E. The COPY INTO statement requires the table to be refreshed to view the copied rows.

Correct Answer:

C
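
The reasoning behind option C is that COPY INTO is idempotent: files in the source location that were already loaded are skipped on later runs. The toy model below sketches that bookkeeping in plain Python. It is not how Databricks implements the command; the class `CopyIntoSketch`, the file name, and the row values are all hypothetical.

```python
from typing import Dict, List, Set


class CopyIntoSketch:
    """Toy model of an idempotent loader: each source file is ingested at most once."""

    def __init__(self) -> None:
        self.rows: List[str] = []
        self.loaded_files: Set[str] = set()

    def copy_into(self, files: Dict[str, List[str]]) -> int:
        """Load rows from files not seen before; return how many rows were added."""
        added = 0
        for name in sorted(files):
            if name in self.loaded_files:
                # Already ingested on an earlier run, so skip it.
                continue
            self.rows.extend(files[name])
            self.loaded_files.add(name)
            added += len(files[name])
        return added


# Hypothetical contents of the raw location: one file for the previous day.
raw = {"2024-01-06.parquet": ["sale-1", "sale-2"]}

transactions = CopyIntoSketch()
first_run = transactions.copy_into(raw)   # the file is new, so its rows are loaded
second_run = transactions.copy_into(raw)  # same file again, so nothing is added
```

If the previous day's file had already been picked up by an earlier run, today's run behaves like `second_run` and the row count stays the same.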

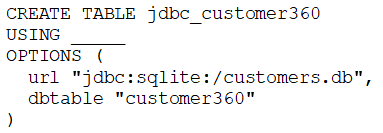

Question #20

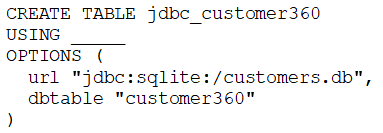

A data engineer needs to create a table in Databricks using data from their organization’s existing SQLite database.

They run the following command:

Which of the following lines of code fills in the above blank to successfully complete the task?

- A. org.apache.spark.sql.jdbc (Most Voted)

- B. autoloader

- C. DELTA

- D. sqlite

- E. org.apache.spark.sql.sqlite

Correct Answer:

A
