Databricks Certified Data Engineer Associate Exam Practice Questions (P. 3)

Question #11

A data engineer has left the organization. The data team needs to transfer ownership of the data engineer’s Delta tables to a new data engineer. The new data engineer is the lead engineer on the data team.

Assuming the original data engineer no longer has access, which of the following individuals must be the one to transfer ownership of the Delta tables in Data Explorer?

- A. Databricks account representative

- B. This transfer is not possible

- C. Workspace administrator (Most Voted)

- D. New lead data engineer

- E. Original data engineer

Correct Answer:

C

GPT-4o - Answer

The correct answer is C, the workspace administrator, rather than D, the new lead data engineer. Because the original owner is no longer available, ownership of the Delta tables has to be reassigned by someone with administrative privileges, and workspace administrators hold the authority to change the owner of resources such as Delta tables in Data Explorer. Being the team's lead engineer does not by itself grant that permission. Consult your workspace's specific permissions and configuration to confirm the exact procedure.
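As a minimal sketch, assuming a Databricks notebook where `spark` is the active session and the notebook is run by a workspace administrator, the same transfer could be scripted with the SQL statement `ALTER TABLE ... OWNER TO`. The helper name `transfer_ownership`, the table names, and the principal below are hypothetical.

```python
def transfer_ownership(spark, tables, new_owner):
    """Issue ALTER TABLE ... OWNER TO for each table (hypothetical helper).

    Assumes `spark` is an active SparkSession whose user has admin
    privileges; `new_owner` is a user or group name.
    """
    issued = []
    for table in tables:
        # Backticks quote principals such as e-mail addresses.
        statement = f"ALTER TABLE {table} OWNER TO `{new_owner}`"
        spark.sql(statement)
        issued.append(statement)
    return issued
```

For example, `transfer_ownership(spark, ["sales", "customers"], "new.lead@example.com")` would issue one statement per table; the table names and address are placeholders.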

Question #12

A data analyst has created a Delta table sales that is used by the entire data analysis team. They want help from the data engineering team to implement a series of tests to ensure the data is clean. However, the data engineering team uses Python for its tests rather than SQL.

Which of the following commands could the data engineering team use to access sales in PySpark?

- A. SELECT * FROM sales

- B. There is no way to share data between PySpark and SQL.

- C. spark.sql("sales")

- D. spark.delta.table("sales")

- E. spark.table("sales")

Correct Answer:

E

GPT-4o - Answer

The correct answer is E: the data engineering team can access the sales Delta table from PySpark with spark.table("sales"). This method returns any table registered in the Spark session catalog as a DataFrame, so the team can run Python-based tests against it without specifying the data format, which makes it a straightforward way to move between SQL and PySpark. The other options fall short: SELECT * FROM sales is SQL rather than PySpark, spark.sql("sales") fails because spark.sql expects a complete query string, and spark.delta.table is not a method of the Spark session.
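A minimal sketch of how the team might load `sales` and run one Python-based cleanliness check, assuming `spark` is an active `SparkSession` and that `sales` has a key column that should never be null. The helper names and the `order_id` column are hypothetical; `spark.sql("SELECT * FROM sales")` would return the same DataFrame as `spark.table("sales")`.

```python
def load_sales(spark):
    """Return the catalog-registered `sales` table as a DataFrame."""
    return spark.table("sales")


def null_count(df, column):
    """Count rows in `df` where `column` is null."""
    return df.filter(df[column].isNull()).count()


def assert_no_nulls(spark, column="order_id"):
    """Fail if the (hypothetical) key column of `sales` contains nulls."""
    missing = null_count(load_sales(spark), column)
    if missing:
        raise AssertionError(f"{missing} rows in sales have null {column}")
```

Because each check takes the session as an argument, the same functions can run in a notebook or in a test harness.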

Question #13

Which of the following commands will return the location of database customer360?

- A. DESCRIBE LOCATION customer360;

- B. DROP DATABASE customer360;

- C. DESCRIBE DATABASE customer360; (Most Voted)

- D. ALTER DATABASE customer360 SET DBPROPERTIES ('location' = '/user');

- E. USE DATABASE customer360;

Correct Answer:

C

GPT-4o - Answer

The command DESCRIBE DATABASE customer360 returns the location of customer360 in Databricks and other Hive-compatible environments. It outputs the database's metadata, including its name, comment, and location. The other options do not report the location: DESCRIBE LOCATION is not a valid command, DROP DATABASE deletes the database, the ALTER DATABASE statement sets a property, and USE DATABASE only changes the current database.
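To read the location programmatically, here is a minimal sketch assuming an active `spark` session: it runs `DESCRIBE DATABASE` and returns the value from the row whose first field is `Location`. The result's column names can differ between Spark versions, so the sketch reads fields by position; the helper name `database_location` is hypothetical.

```python
def database_location(spark, database):
    """Return the Location reported by DESCRIBE DATABASE, or None if absent."""
    rows = spark.sql(f"DESCRIBE DATABASE {database}").collect()
    for row in rows:
        # Each row is an (item name, item value) pair; read it by position.
        if row[0] == "Location":
            return row[1]
    return None
```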

Question #14

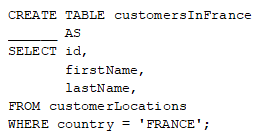

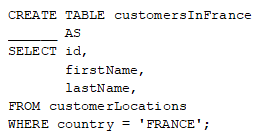

A data engineer wants to create a new table containing the names of customers that live in France.

They have written the following command:

A senior data engineer mentions that it is organization policy to include a table property indicating that the new table includes personally identifiable information (PII).

Which of the following lines of code fills in the above blank to successfully complete the task?

They have written the following command:

A senior data engineer mentions that it is organization policy to include a table property indicating that the new table includes personally identifiable information (PII).

Which of the following lines of code fills in the above blank to successfully complete the task?

- A. There is no way to indicate whether a table contains PII.

- B. "COMMENT PII"

- C. TBLPROPERTIES PII

- D. COMMENT "Contains PII" (Most Voted)

- E. PII

Correct Answer:

D
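The original command appears only in the image, so the following is a hedged sketch rather than a reconstruction: a hypothetical helper that builds a `CREATE TABLE ... COMMENT ... AS SELECT` statement, showing where the `COMMENT` clause sits (after the table name, before `AS`). The table, column, and helper names are placeholders. By contrast, `TBLPROPERTIES` expects key-value pairs such as `('contains_pii' = 'true')` (a hypothetical key), which is why option C as written is not valid syntax.

```python
def ctas_with_comment(table, comment, select_sql):
    """Build CREATE TABLE ... COMMENT ... AS SELECT (hypothetical helper).

    The comment is wrapped in double quotes, as in answer D, so it must
    not contain a double quote itself.
    """
    if '"' in comment:
        raise ValueError("comment must not contain double quotes")
    return f'CREATE TABLE {table}\nCOMMENT "{comment}"\nAS {select_sql}'
```

Passing the resulting string to `spark.sql(...)` would create the table, and `DESCRIBE TABLE EXTENDED` on it shows the comment.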

Question #15

Which of the following benefits is provided by the array functions from Spark SQL?

- A. An ability to work with data in a variety of types at once

- B. An ability to work with data within certain partitions and windows

- C. An ability to work with time-related data in specified intervals

- D. An ability to work with complex, nested data ingested from JSON files (Most Voted)

- E. An ability to work with an array of tables for procedural automation

Correct Answer:

D

GPT-4o - Answer

Array functions in Spark SQL are particularly valuable for handling complex, nested data, such as the arrays frequently found in JSON files. Functions such as array_contains, flatten, and slice make it easier to query, manipulate, and extract specific elements from nested arrays, so data engineers can analyze layered or hierarchical data directly in SQL.
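A minimal sketch, assuming an active `spark` session and a hypothetical table `orders_json` loaded from JSON whose `items` column is an array of structs with a `sku` field. The query applies the array functions `size` and `array_contains` to the nested column and uses the related `explode` generator to turn each array element into its own row. The table, column, SKU value, and helper names are all assumptions.

```python
def nested_items_query(table="orders_json"):
    """Build a Spark SQL query that uses array functions on `items`."""
    return (
        "SELECT order_id, "
        "size(items) AS item_count, "
        # items.sku extracts an array of sku values from the array of structs.
        "array_contains(items.sku, 'A1') AS has_sku, "
        # explode emits one output row per element of the array.
        "explode(items) AS item "
        f"FROM {table}"
    )


def explode_items(spark, table="orders_json"):
    """Run the query and return the resulting DataFrame."""
    return spark.sql(nested_items_query(table))
```

For an order with three items, the result contains three rows that repeat `order_id`, `item_count`, and `has_sku`; orders with an empty or null `items` array produce no rows, which `explode_outer` would keep instead.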
