Amazon AWS Certified Solutions Architect - Professional Exam Practice Questions (P. 2)

- Full Access (1019 questions)

- One Year of Premium Access

- Access to one million comments

- Seamless ChatGPT Integration

- Ability to download PDF files

- Anki Flashcard files for revision

- No Captcha & No AdSense

- Advanced Exam Configuration

Question #11

Your company has an on-premises multi-tier PHP web application, which recently experienced downtime due to a large burst in web traffic due to a company announcement Over the coming days, you are expecting similar announcements to drive similar unpredictable bursts, and are looking to find ways to quickly improve your infrastructures ability to handle unexpected increases in traffic.

The application currently consists of 2 tiers a web tier which consists of a load balancer and several Linux Apache web servers as well as a database tier which hosts a Linux server hosting a MySQL database.

Which scenario below will provide full site functionality, while helping to improve the ability of your application in the short timeframe required?

The application currently consists of 2 tiers a web tier which consists of a load balancer and several Linux Apache web servers as well as a database tier which hosts a Linux server hosting a MySQL database.

Which scenario below will provide full site functionality, while helping to improve the ability of your application in the short timeframe required?

- AFailover environment: Create an S3 bucket and configure it for website hosting. Migrate your DNS to Route53 using zone file import, and leverage Route53 DNS failover to failover to the S3 hosted website.

- BHybrid environment: Create an AMI, which can be used to launch web servers in EC2. Create an Auto Scaling group, which uses the AMI to scale the web tier based on incoming traffic. Leverage Elastic Load Balancing to balance traffic between on-premises web servers and those hosted in AWS.

- COffload traffic from on-premises environment: Setup a CIoudFront distribution, and configure CloudFront to cache objects from a custom origin. Choose to customize your object cache behavior, and select a TTL that objects should exist in cache.Most Voted

- DMigrate to AWS: Use VM Import/Export to quickly convert an on-premises web server to an AMI. Create an Auto Scaling group, which uses the imported AMI to scale the web tier based on incoming traffic. Create an RDS read replica and setup replication between the RDS instance and on-premises MySQL server to migrate the database.

Correct Answer:

C

You can have CloudFront sit in front of your on-prem web environment, via a custom origin (the origin doesn't have to be in AWS). This would protect against unexpected bursts in traffic by letting CloudFront handle the traffic that it can out of cache, thus hopefully removing some of the load from your on-prem web servers.

C

You can have CloudFront sit in front of your on-prem web environment, via a custom origin (the origin doesn't have to be in AWS). This would protect against unexpected bursts in traffic by letting CloudFront handle the traffic that it can out of cache, thus hopefully removing some of the load from your on-prem web servers.

send

light_mode

delete

Question #12

You are implementing AWS Direct Connect. You intend to use AWS public service end points such as Amazon S3, across the AWS Direct Connect link. You want other Internet traffic to use your existing link to an Internet Service Provider.

What is the correct way to configure AWS Direct connect for access to services such as Amazon S3?

What is the correct way to configure AWS Direct connect for access to services such as Amazon S3?

- AConfigure a public Interface on your AWS Direct Connect link. Configure a static route via your AWS Direct Connect link that points to Amazon S3 Advertise a default route to AWS using BGP.

- BCreate a private interface on your AWS Direct Connect link. Configure a static route via your AWS Direct connect link that points to Amazon S3 Configure specific routes to your network in your VPC.

- CCreate a public interface on your AWS Direct Connect link. Redistribute BGP routes into your existing routing infrastructure; advertise specific routes for your network to AWS.Most Voted

- DCreate a private interface on your AWS Direct connect link. Redistribute BGP routes into your existing routing infrastructure and advertise a default route to AWS.

Correct Answer:

C

Reference:

https://aws.amazon.com/directconnect/faqs/

C

Reference:

https://aws.amazon.com/directconnect/faqs/

send

light_mode

delete

Question #13

Your application is using an ELB in front of an Auto Scaling group of web/application servers deployed across two AZs and a Multi-AZ RDS Instance for data persistence.

The database CPU is often above 80% usage and 90% of I/O operations on the database are reads. To improve performance you recently added a single-node

Memcached ElastiCache Cluster to cache frequent DB query results. In the next weeks the overall workload is expected to grow by 30%.

Do you need to change anything in the architecture to maintain the high availability or the application with the anticipated additional load? Why?

The database CPU is often above 80% usage and 90% of I/O operations on the database are reads. To improve performance you recently added a single-node

Memcached ElastiCache Cluster to cache frequent DB query results. In the next weeks the overall workload is expected to grow by 30%.

Do you need to change anything in the architecture to maintain the high availability or the application with the anticipated additional load? Why?

- AYes, you should deploy two Memcached ElastiCache Clusters in different AZs because the RDS instance will not be able to handle the load if the cache node fails.Most Voted

- BNo, if the cache node fails you can always get the same data from the DB without having any availability impact.

- CNo, if the cache node fails the automated ElastiCache node recovery feature will prevent any availability impact.

- DYes, you should deploy the Memcached ElastiCache Cluster with two nodes in the same AZ as the RDS DB master instance to handle the load if one cache node fails.

Correct Answer:

A

ElastiCache for Memcached -

The primary goal of caching is typically to offload reads from your database or other primary data source. In most apps, you have hot spots of data that are regularly queried, but only updated periodically. Think of the front page of a blog or news site, or the top 100 leaderboard in an online game. In this type of case, your app can receive dozens, hundreds, or even thousands of requests for the same data before it's updated again. Having your caching layer handle these queries has several advantages. First, it's considerably cheaper to add an in-memory cache than to scale up to a larger database cluster. Second, an in-memory cache is also easier to scale out, because it's easier to distribute an in-memory cache horizontally than a relational database.

Last, a caching layer provides a request buffer in the event of a sudden spike in usage. If your app or game ends up on the front page of Reddit or the App Store, it's not unheard of to see a spike that is 10 to 100 times your normal application load. Even if you autoscale your application instances, a 10x request spike will likely make your database very unhappy.

Let's focus on ElastiCache for Memcached first, because it is the best fit for a cachingfocused solution. We'll revisit Redis later in the paper, and weigh its advantages and disadvantages.

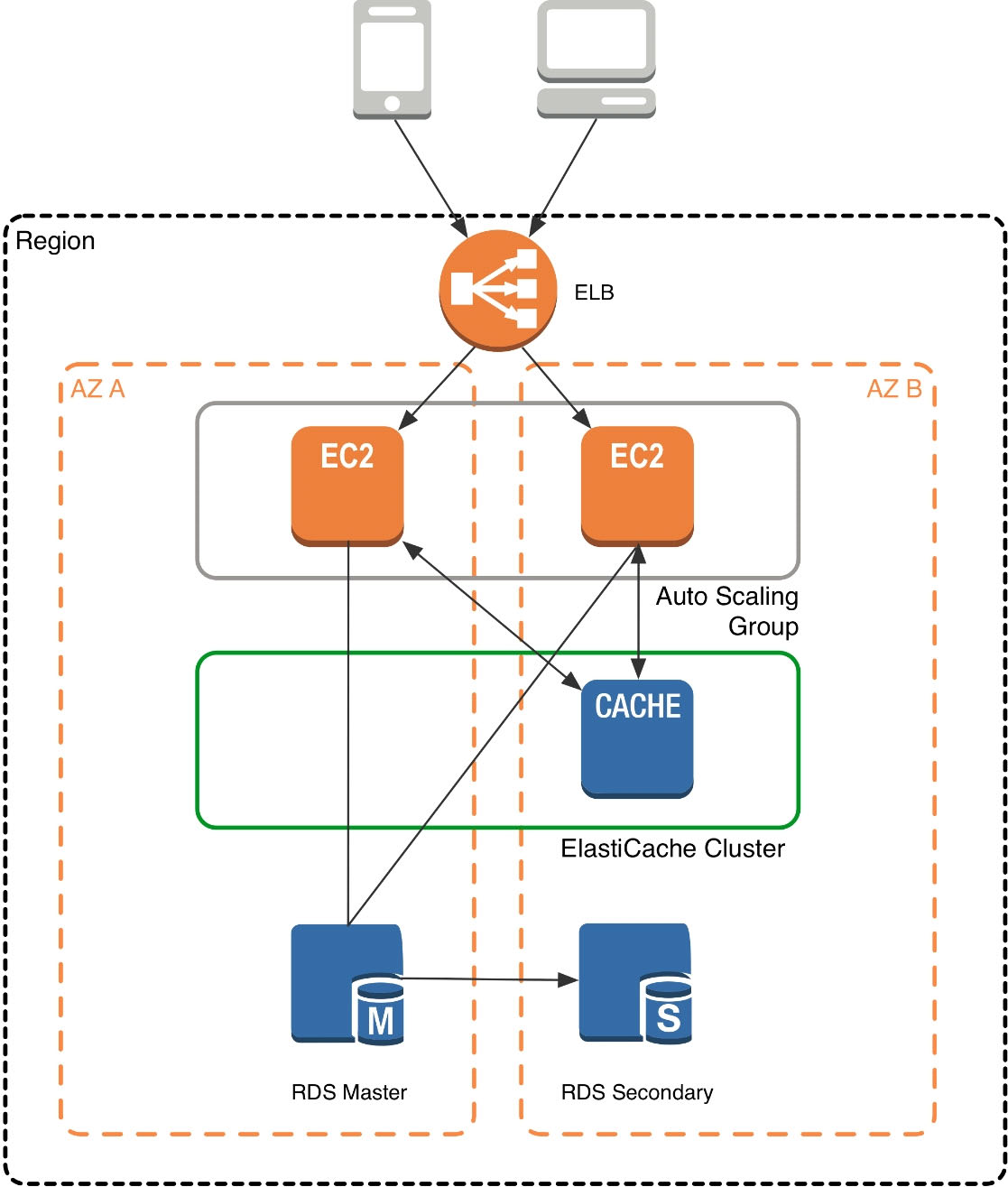

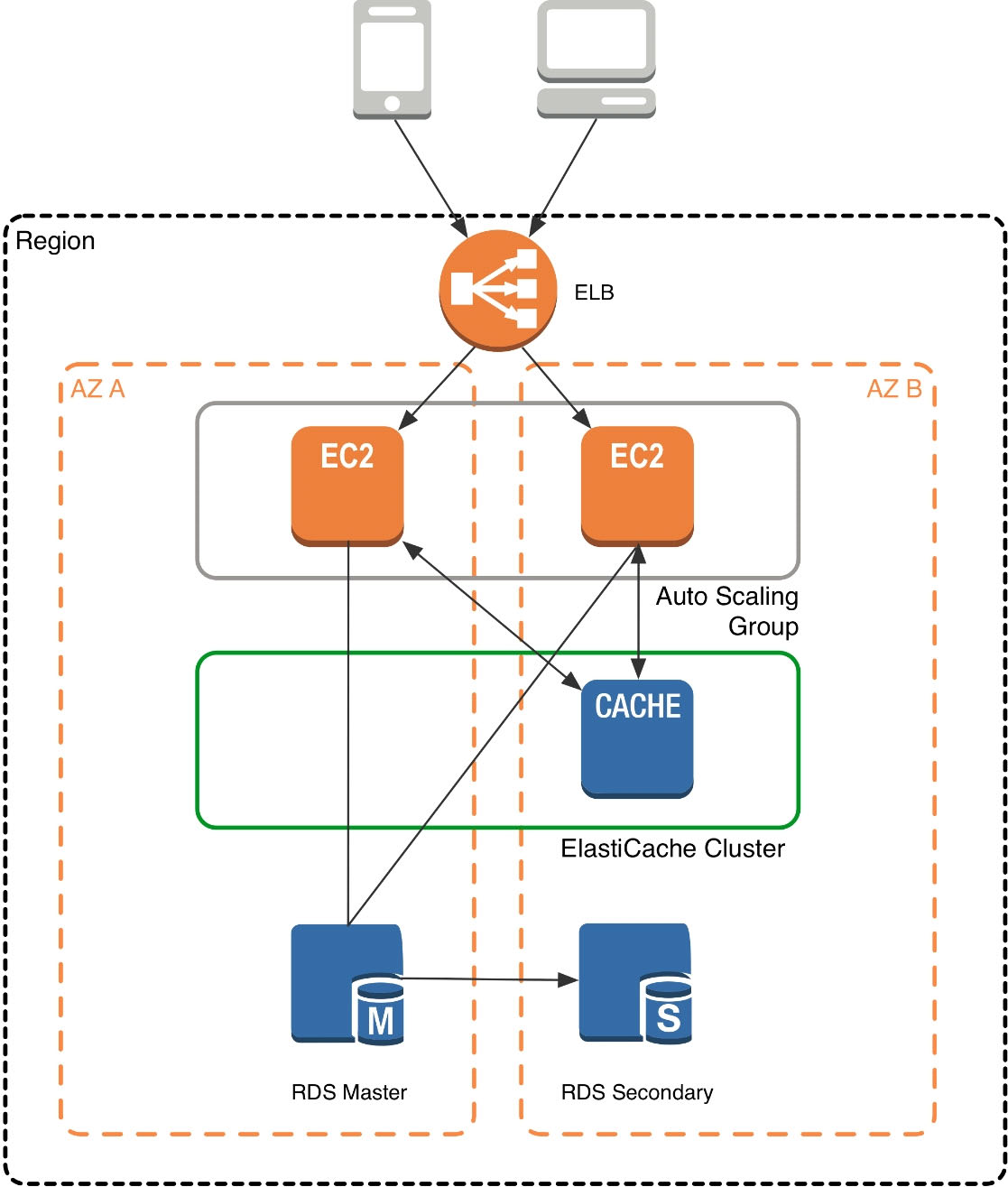

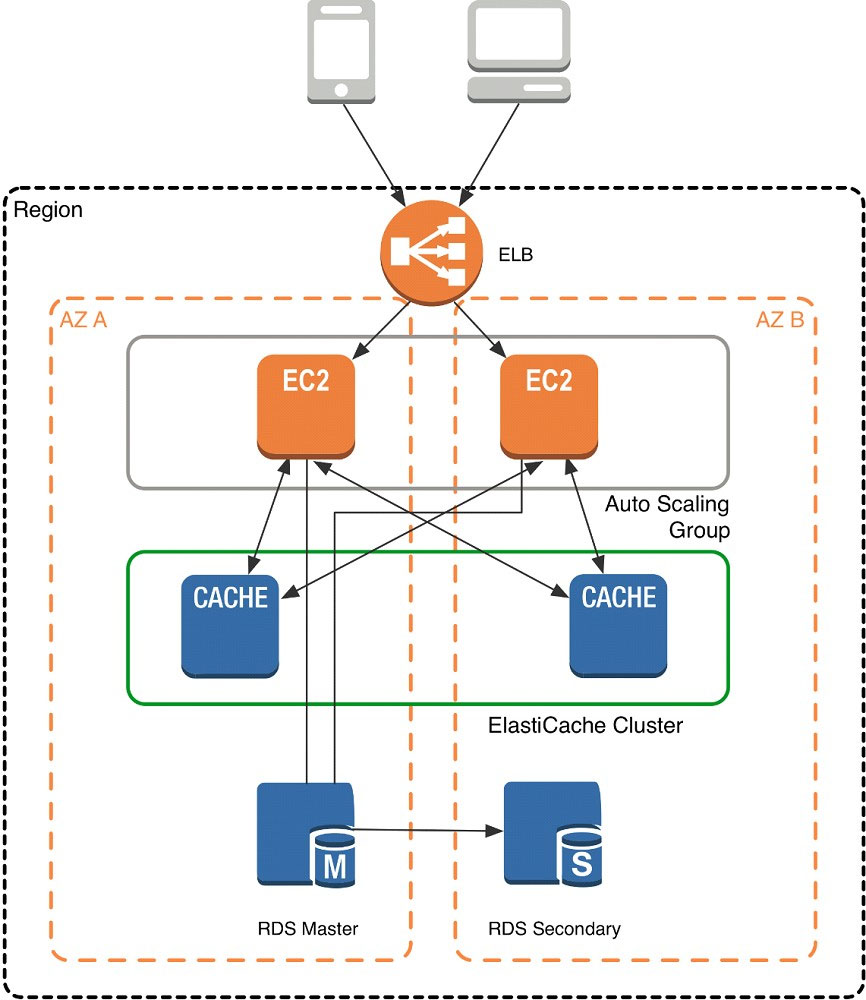

Architecture with ElastiCache for Memcached -

When you deploy an ElastiCache Memcached cluster, it sits in your application as a separate tier alongside your database. As mentioned previously, Amazon

ElastiCache does not directly communicate with your database tier, or indeed have any particular knowledge of your database. A simplified deployment for a web application looks something like this:

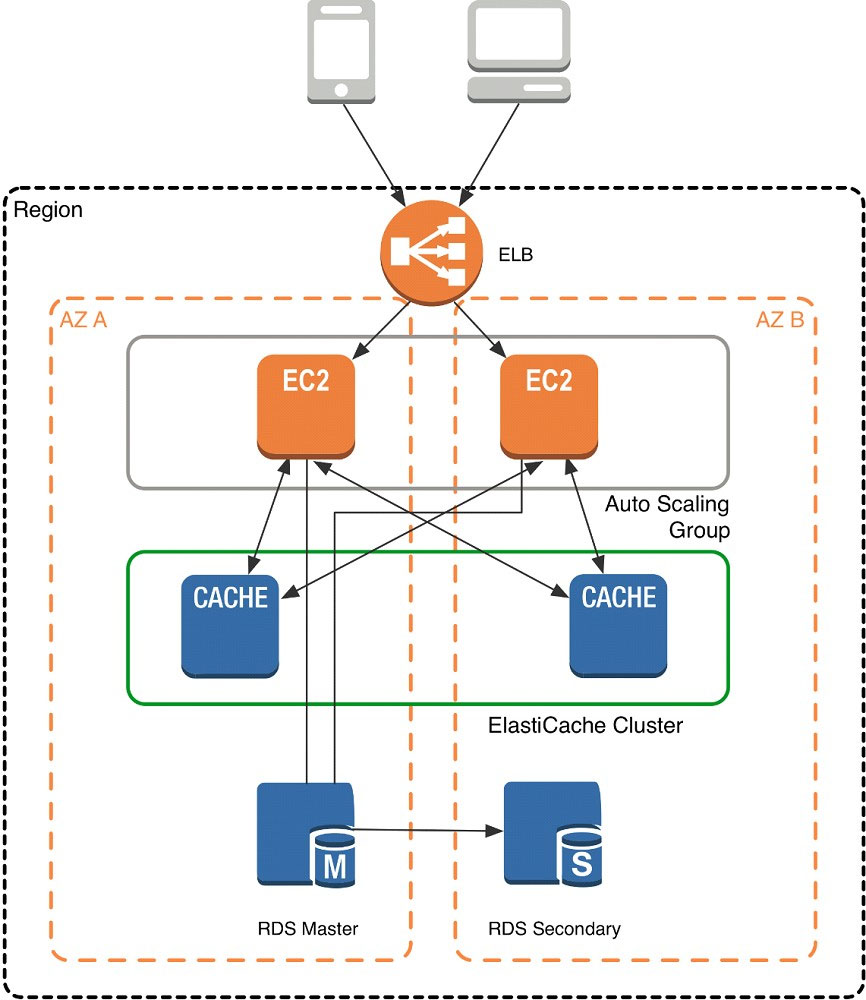

In this architecture diagram, the Amazon EC2 application instances are in an Auto Scaling group, located behind a load balancer using Elastic Load Balancing, which distributes requests among the instances. As requests come into a given EC2 instance, that EC2 instance is responsible for communicating with

ElastiCache and the database tier. For development purposes, you can begin with a single ElastiCache node to test your application, and then scale to additional cluster nodes by modifying the ElastiCache cluster. As you add additional cache nodes, the EC2 application instances are able to distribute cache keys across multiple ElastiCache nodes. The most common practice is to use client-side sharding to distribute keys across cache nodes, which we will discuss later in this paper.

When you launch an ElastiCache cluster, you can choose the Availability Zone(s) that the cluster lives in. For best performance, you should configure your cluster to use the same Availability Zones as your application servers. To launch an ElastiCache cluster in a specific Availability Zone, make sure to specify the Preferred

Zone(s) option during cache cluster creation. The Availability Zones that you specify will be where ElastiCache will launch your cache nodes. We recommend that you select Spread Nodes Across Zones, which tells ElastiCache to distribute cache nodes across these zones as evenly as possible. This distribution will mitigate the impact of an Availability Zone disruption on your ElastiCache nodes. The trade-off is that some of the requests from your application to ElastiCache will go to a node in a different Availability Zone, meaning latency will be slightly higher. For more details, refer to Creating a Cache Cluster in the Amazon ElastiCache User

Guide.

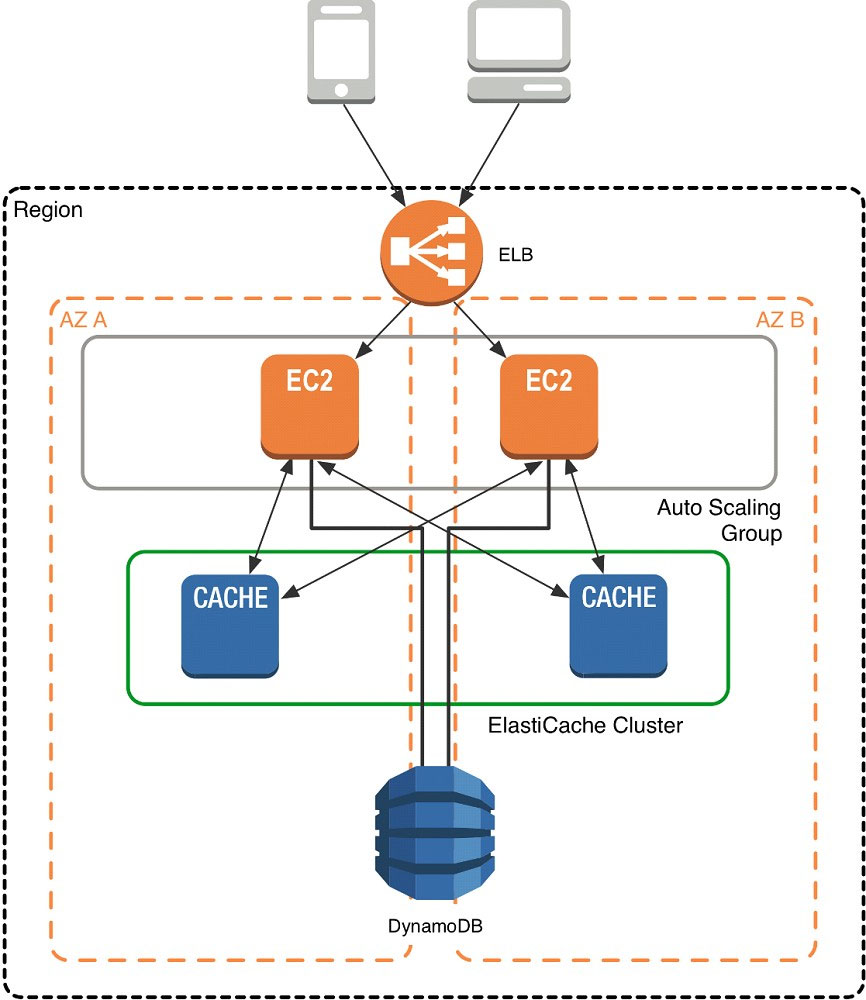

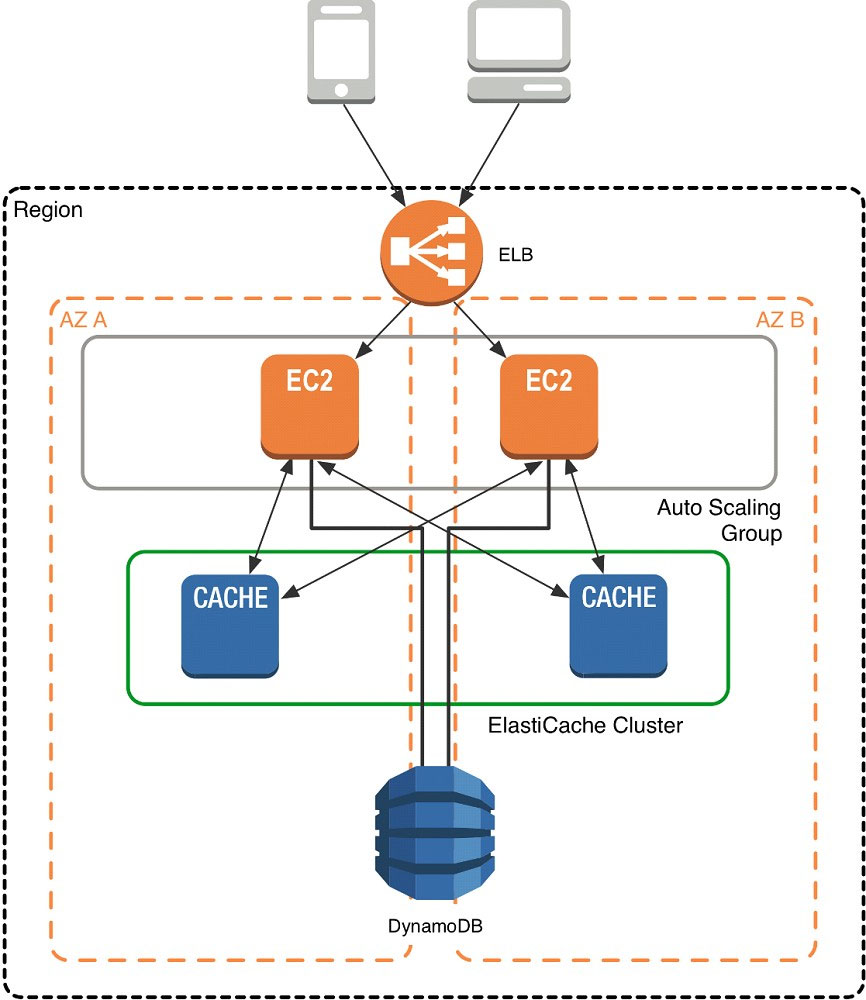

As mentioned at the outset, ElastiCache can be coupled with a wide variety of databases. Here is an example architecture that uses Amazon DynamoDB instead of Amazon RDS and MySQL:

This combination of DynamoDB and ElastiCache is very popular with mobile and game companies, because DynamoDB allows for higher write throughput at lower cost than traditional relational databases. In addition, DynamoDB uses a key-value access pattern similar to ElastiCache, which also simplifies the programming model. Instead of using relational SQL for the primary database but then key-value patterns for the cache, both the primary database and cache can be programmed similarly. In this architecture pattern, DynamoDB remains the source of truth for data, but application reads are offloaded to ElastiCache for a speed boost.

A

ElastiCache for Memcached -

The primary goal of caching is typically to offload reads from your database or other primary data source. In most apps, you have hot spots of data that are regularly queried, but only updated periodically. Think of the front page of a blog or news site, or the top 100 leaderboard in an online game. In this type of case, your app can receive dozens, hundreds, or even thousands of requests for the same data before it's updated again. Having your caching layer handle these queries has several advantages. First, it's considerably cheaper to add an in-memory cache than to scale up to a larger database cluster. Second, an in-memory cache is also easier to scale out, because it's easier to distribute an in-memory cache horizontally than a relational database.

Last, a caching layer provides a request buffer in the event of a sudden spike in usage. If your app or game ends up on the front page of Reddit or the App Store, it's not unheard of to see a spike that is 10 to 100 times your normal application load. Even if you autoscale your application instances, a 10x request spike will likely make your database very unhappy.

Let's focus on ElastiCache for Memcached first, because it is the best fit for a cachingfocused solution. We'll revisit Redis later in the paper, and weigh its advantages and disadvantages.

Architecture with ElastiCache for Memcached -

When you deploy an ElastiCache Memcached cluster, it sits in your application as a separate tier alongside your database. As mentioned previously, Amazon

ElastiCache does not directly communicate with your database tier, or indeed have any particular knowledge of your database. A simplified deployment for a web application looks something like this:

In this architecture diagram, the Amazon EC2 application instances are in an Auto Scaling group, located behind a load balancer using Elastic Load Balancing, which distributes requests among the instances. As requests come into a given EC2 instance, that EC2 instance is responsible for communicating with

ElastiCache and the database tier. For development purposes, you can begin with a single ElastiCache node to test your application, and then scale to additional cluster nodes by modifying the ElastiCache cluster. As you add additional cache nodes, the EC2 application instances are able to distribute cache keys across multiple ElastiCache nodes. The most common practice is to use client-side sharding to distribute keys across cache nodes, which we will discuss later in this paper.

When you launch an ElastiCache cluster, you can choose the Availability Zone(s) that the cluster lives in. For best performance, you should configure your cluster to use the same Availability Zones as your application servers. To launch an ElastiCache cluster in a specific Availability Zone, make sure to specify the Preferred

Zone(s) option during cache cluster creation. The Availability Zones that you specify will be where ElastiCache will launch your cache nodes. We recommend that you select Spread Nodes Across Zones, which tells ElastiCache to distribute cache nodes across these zones as evenly as possible. This distribution will mitigate the impact of an Availability Zone disruption on your ElastiCache nodes. The trade-off is that some of the requests from your application to ElastiCache will go to a node in a different Availability Zone, meaning latency will be slightly higher. For more details, refer to Creating a Cache Cluster in the Amazon ElastiCache User

Guide.

As mentioned at the outset, ElastiCache can be coupled with a wide variety of databases. Here is an example architecture that uses Amazon DynamoDB instead of Amazon RDS and MySQL:

This combination of DynamoDB and ElastiCache is very popular with mobile and game companies, because DynamoDB allows for higher write throughput at lower cost than traditional relational databases. In addition, DynamoDB uses a key-value access pattern similar to ElastiCache, which also simplifies the programming model. Instead of using relational SQL for the primary database but then key-value patterns for the cache, both the primary database and cache can be programmed similarly. In this architecture pattern, DynamoDB remains the source of truth for data, but application reads are offloaded to ElastiCache for a speed boost.

send

light_mode

delete

Question #14

An ERP application is deployed across multiple AZs in a single region. In the event of failure, the Recovery Time Objective (RTO) must be less than 3 hours, and the Recovery Point Objective (RPO) must be 15 minutes. The customer realizes that data corruption occurred roughly 1.5 hours ago.

What DR strategy could be used to achieve this RTO and RPO in the event of this kind of failure?

What DR strategy could be used to achieve this RTO and RPO in the event of this kind of failure?

- ATake hourly DB backups to S3, with transaction logs stored in S3 every 5 minutes.Most Voted

- BUse synchronous database master-slave replication between two availability zones.

- CTake hourly DB backups to EC2 Instance store volumes with transaction logs stored In S3 every 5 minutes.

- DTake 15 minute DB backups stored In Glacier with transaction logs stored in S3 every 5 minutes.

Correct Answer:

A

A

GPT-4o - Answer

GPT-4o - Answer

To mitigate the incident described while meeting the specified RTO and RPO, strategy A is indeed effective. It combines hourly database backups with transaction logs captured every five minutes. This approach ensures data can be restored back to a state as close as 5 minutes prior to any detected corruption, effectively limiting data loss far within the 15-minute RPO target. Additionally, the use of Amazon S3 for storing these backups and logs not only provides durability but also allows for rapid recovery suitable for the 3-hour RTO stipulation, taking advantage of S3's reliability and accessibility.

send

light_mode

delete

Question #15

You are designing the network infrastructure for an application server in Amazon VPC. Users will access all application instances from the Internet, as well as from an on-premises network. The on-premises network is connected to your VPC over an AWS Direct Connect link.

How would you design routing to meet the above requirements?

How would you design routing to meet the above requirements?

- AConfigure a single routing table with a default route via the Internet gateway. Propagate a default route via BGP on the AWS Direct Connect customer router. Associate the routing table with all VPC subnets.

- BConfigure a single routing table with a default route via the Internet gateway. Propagate specific routes for the on-premises networks via BGP on the AWS Direct Connect customer router. Associate the routing table with all VPC subnets.

- CConfigure a single routing table with two default routes: on to the Internet via an Internet gateway, the other to the on-premises network via the VPN gateway. Use this routing table across all subnets in the VPC.

- DConfigure two routing tables: on that has a default router via the Internet gateway, and other that has a default route via the VPN gateway. Associate both routing tables with each VPC subnet.Most Voted

Correct Answer:

B

B

GPT-4o - Answer

GPT-4o - Answer

The correct approach for designing routing in this scenario involves using a single routing table with a default route directed to the Internet gateway for general internet access. In addition, it's crucial to propagate specific routes for the on-premises networks using BGP through the AWS Direct Connect. This setup will efficiently route the relevant traffic over the Direct Connect link specifically for on-premises resources, while internet-bound traffic uses the internet gateway. Other configurations like having two default routes or multiple routing tables for the same subnet either go against AWS best practices or technically aren't feasible.

send

light_mode

delete

Question #16

You control access to S3 buckets and objects with:

- AIdentity and Access Management (IAM) Policies.

- BAccess Control Lists (ACLs).

- CBucket Policies.

- DAll of the aboveMost Voted

Correct Answer:

D

D

GPT-4o - Answer

GPT-4o - Answer

All the options listed, i.e., IAM Policies, ACLs, and Bucket Policies, are instrumental in governing access to S3 buckets and their contents. IAM policies can be applied to users or groups to manage permissions across all AWS resources, including S3. ACLs offer a finer granularity by allowing permissions to be set on individual objects within a bucket. Bucket Policies are applied directly to buckets, giving you centralized control over access. Thus, the comprehensive approach to controlling access in S3 incorporates all these elements, making option D the correct and holistic choice for S3 security management.

send

light_mode

delete

Question #17

The AWS IT infrastructure that AWS provides, complies with the following IT security standards, including:

- ASOC 1/SSAE 16/ISAE 3402 (formerly SAS 70 Type II), SOC 2 and SOC 3

- BFISMA, DIACAP, and FedRAMP

- CPCI DSS Level 1, ISO 27001, ITAR and FIPS 140-2

- DHIPAA, Cloud Security Alliance (CSA) and Motion Picture Association of America (MPAA)

- EAll of the aboveMost Voted

Correct Answer:

E

E

GPT-4o - Answer

GPT-4o - Answer

AWS's IT infrastructure is indeed compliant with a broad range of security standards, which makes it a strong platform for supporting various compliance requirements across different industries. These standards include SOC 1/SSAE 16/ISAE 3402 (formerly SAS 70 Type II), SOC 2, SOC 3, FISMA, DIACAP, FedRAMP, PCI DSS Level 1, ISO 27001, ITAR, FIPS 140-2, HIPAA, the Cloud Security Alliance (CSA), and the Motion Picture Association of America (MPAA). Each of these compliance certifications supports different aspects of cloud security and regulatory compliance, establishing AWS as a versatile choice for organizations concerned with information security and compliance.

send

light_mode

delete

Question #18

Auto Scaling requests are signed with a _________ signature calculated from the request and the user's private key.

- ASSL

- BAES-256

- CHMAC-SHA1Most Voted

- DX.509

Correct Answer:

C

C

GPT-4o - Answer

GPT-4o - Answer

For signing AWS Auto Scaling requests, HMAC-SHA1 is utilized due to its capability to generate a unique signature from a combination of the request details and the user's private key. This method ensures a secure verification process by AWS using the corresponding public key, confirming the request's authenticity and integrity. While other technologies like SSL, AES-256, and X.509 focus on different aspects of encryption and certification, they are not used for signing purposes in this specific context.

send

light_mode

delete

Question #19

The following policy can be attached to an IAM group. It lets an IAM user in that group access a "home directory" in AWS S3 that matches their user name using the console.

{

"Version": "2012-10-17",

"Statement": [

{

"Action": ["s3:*"],

"Effect": "Allow",

"Resource": ["arn:aws:s3:::bucket-name"],

"Condition":{"StringLike":{"s3:prefix":["home/${aws:username}/*"]}}

},

{

"Action":["s3:*"],

"Effect":"Allow",

"Resource": ["arn:aws:s3:::bucket-name/home/${aws:username}/*"]

}

]

}

{

"Version": "2012-10-17",

"Statement": [

{

"Action": ["s3:*"],

"Effect": "Allow",

"Resource": ["arn:aws:s3:::bucket-name"],

"Condition":{"StringLike":{"s3:prefix":["home/${aws:username}/*"]}}

},

{

"Action":["s3:*"],

"Effect":"Allow",

"Resource": ["arn:aws:s3:::bucket-name/home/${aws:username}/*"]

}

]

}

- ATrue

- BFalseMost Voted

Correct Answer:

B

B

GPT-4o - Answer

GPT-4o - Answer

The policy as stated does not grant sufficient permissions for navigating in the Amazon S3 console, which is crucial for IAM user access. Users need the "ListAllMyBuckets" action to list all the buckets and "GetBucketLocation" to access the bucket location upon navigating to the Amazon S3 console initially. Through these actions, users can avoid the 'access denied' error which would otherwise appear in the console. Without permissions for these actions, despite being able to directly access home directories by typing the correct URL, functionality through the console will be impaired. Thus, the correct answer is `False`, as users cannot navigate effectively in the console.

send

light_mode

delete

Question #20

What does elasticity mean to AWS?

- AThe ability to scale computing resources up easily, with minimal friction and down with latency.

- BThe ability to scale computing resources up and down easily, with minimal friction.Most Voted

- CThe ability to provision cloud computing resources in expectation of future demand.

- DThe ability to recover from business continuity events with minimal friction.

Correct Answer:

B

B

GPT-4o - Answer

GPT-4o - Answer

Elasticity in AWS is crucial for adapting to workload changes efficiently, allowing dynamic scaling of infrastructure resources. This capability ensures minimal friction whether scaling resources up to handle increased demand or scaling them down when less capacity is required, optimizing resource use and potentially reducing costs. The correct understanding of 'scale up and down' refers to both vertical (changing the capacity of an instance) and horizontal adjustments (modifying the number of instances), although the primary focus in cloud environments typically emphasizes horizontal scaling.

send

light_mode

delete

All Pages