Amazon AWS Certified Machine Learning - Specialty Exam Practice Questions (P. 3)

- Full Access (369 questions)

- One Year of Premium Access

- Access to one million comments

- Seamless ChatGPT Integration

- Ability to download PDF files

- Anki Flashcard files for revision

- No Captcha & No AdSense

- Advanced Exam Configuration

Question #21

A Machine Learning Specialist is configuring Amazon SageMaker so multiple Data Scientists can access notebooks, train models, and deploy endpoints. To ensure the best operational performance, the Specialist needs to be able to track how often the Scientists are deploying models, GPU and CPU utilization on the deployed SageMaker endpoints, and all errors that are generated when an endpoint is invoked.

Which services are integrated with Amazon SageMaker to track this information? (Choose two.)

Which services are integrated with Amazon SageMaker to track this information? (Choose two.)

- AAWS CloudTrailMost Voted

- BAWS Health

- CAWS Trusted Advisor

- DAmazon CloudWatchMost Voted

- EAWS Config

Correct Answer:

AD

Reference:

https://aws.amazon.com/sagemaker/faqs/

AD

Reference:

https://aws.amazon.com/sagemaker/faqs/

send

light_mode

delete

Question #22

A retail chain has been ingesting purchasing records from its network of 20,000 stores to Amazon S3 using Amazon Kinesis Data Firehose. To support training an improved machine learning model, training records will require new but simple transformations, and some attributes will be combined. The model needs to be retrained daily.

Given the large number of stores and the legacy data ingestion, which change will require the LEAST amount of development effort?

Given the large number of stores and the legacy data ingestion, which change will require the LEAST amount of development effort?

- ARequire that the stores to switch to capturing their data locally on AWS Storage Gateway for loading into Amazon S3, then use AWS Glue to do the transformation.

- BDeploy an Amazon EMR cluster running Apache Spark with the transformation logic, and have the cluster run each day on the accumulating records in Amazon S3, outputting new/transformed records to Amazon S3.

- CSpin up a fleet of Amazon EC2 instances with the transformation logic, have them transform the data records accumulating on Amazon S3, and output the transformed records to Amazon S3.

- DInsert an Amazon Kinesis Data Analytics stream downstream of the Kinesis Data Firehose stream that transforms raw record attributes into simple transformed values using SQL.Most Voted

Correct Answer:

D

D

GPT-4o - Answer

GPT-4o - Answer

Inserting an Amazon Kinesis Data Analytics stream downstream of the existing Kinesis Data Firehose setup to handle simple transformations involves minimal development effort. This approach utilizes the ongoing ingestion process, leveraging Kinesis Data Analytics' capability to perform real-time processing using SQL on streaming data, without the need for a significant alteration to existing infrastructure or processes for daily updates. This setup not only maintains data flow efficiency but also directly integrates with Amazon S3 for output.

send

light_mode

delete

Question #23

A Machine Learning Specialist is building a convolutional neural network (CNN) that will classify 10 types of animals. The Specialist has built a series of layers in a neural network that will take an input image of an animal, pass it through a series of convolutional and pooling layers, and then finally pass it through a dense and fully connected layer with 10 nodes. The Specialist would like to get an output from the neural network that is a probability distribution of how likely it is that the input image belongs to each of the 10 classes.

Which function will produce the desired output?

Which function will produce the desired output?

- ADropout

- BSmooth L1 loss

- CSoftmaxMost Voted

- DRectified linear units (ReLU)

Correct Answer:

C

C

GPT-4o - Answer

GPT-4o - Answer

The softmax function is the correct choice because it converts the outputs of the final dense layer into probabilities that sum to one, which are interpretable as the likelihood of the input belonging to each of the 10 classes. This function is especially suitable for multi-class classification problems such as this, where each output node corresponds to a class. Other options like dropout, smooth L1 loss, and ReLU serve different purposes such as regularization and activation but do not provide output in the form of a probability distribution suitable for classification.

send

light_mode

delete

Question #24

A Machine Learning Specialist trained a regression model, but the first iteration needs optimizing. The Specialist needs to understand whether the model is more frequently overestimating or underestimating the target.

What option can the Specialist use to determine whether it is overestimating or underestimating the target value?

What option can the Specialist use to determine whether it is overestimating or underestimating the target value?

- ARoot Mean Square Error (RMSE)

- BResidual plotsMost Voted

- CArea under the curve

- DConfusion matrix

Correct Answer:

B

B

GPT-4o - Answer

GPT-4o - Answer

Residual plots are the optimal choice when you need to understand if a regression model is predominantly overestimating or underestimating its target values. These plots illustrate the residuals on the vertical axis (errors between predicted and actual values) and predicted values or other variables on the horizontal axis. By visually examining these plots, any bias towards positive or negative residuals clearly indicates whether the model tends to overestimate or underestimate. Unlike RMSE, which only provides the magnitude of errors without their direction, residual plots offer detailed, directional insight, critical for refining model accuracy.

send

light_mode

delete

Question #25

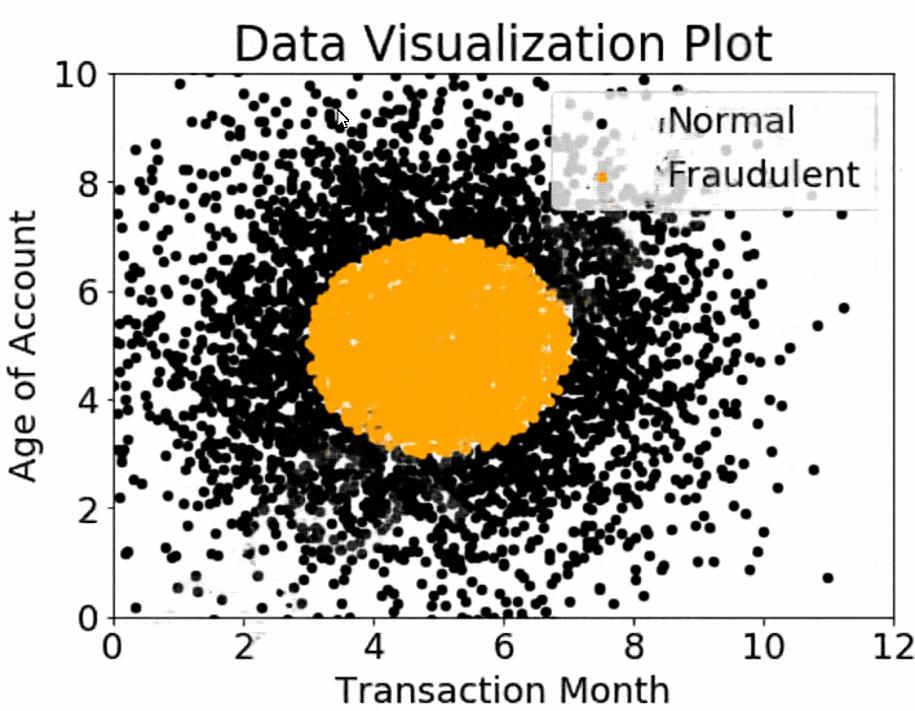

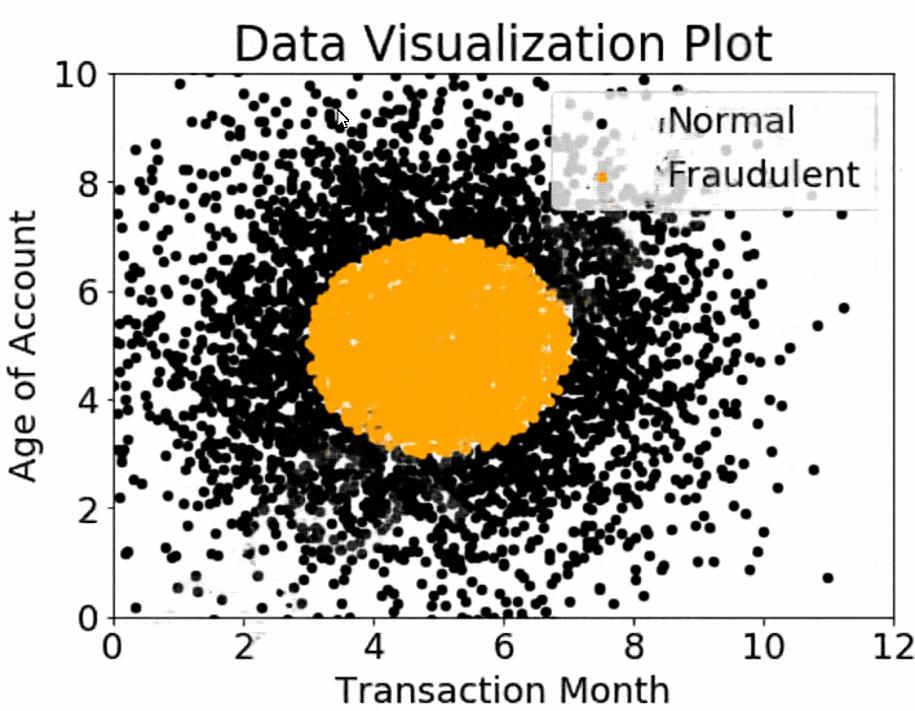

A company wants to classify user behavior as either fraudulent or normal. Based on internal research, a Machine Learning Specialist would like to build a binary classifier based on two features: age of account and transaction month. The class distribution for these features is illustrated in the figure provided.

Based on this information, which model would have the HIGHEST recall with respect to the fraudulent class?

Based on this information, which model would have the HIGHEST recall with respect to the fraudulent class?

- ADecision treeMost Voted

- BLinear support vector machine (SVM)

- CNaive Bayesian classifier

- DSingle Perceptron with sigmoidal activation function

Correct Answer:

C

C

send

light_mode

delete

Question #26

A Machine Learning Specialist kicks off a hyperparameter tuning job for a tree-based ensemble model using Amazon SageMaker with Area Under the ROC Curve

(AUC) as the objective metric. This workflow will eventually be deployed in a pipeline that retrains and tunes hyperparameters each night to model click-through on data that goes stale every 24 hours.

With the goal of decreasing the amount of time it takes to train these models, and ultimately to decrease costs, the Specialist wants to reconfigure the input hyperparameter range(s).

Which visualization will accomplish this?

(AUC) as the objective metric. This workflow will eventually be deployed in a pipeline that retrains and tunes hyperparameters each night to model click-through on data that goes stale every 24 hours.

With the goal of decreasing the amount of time it takes to train these models, and ultimately to decrease costs, the Specialist wants to reconfigure the input hyperparameter range(s).

Which visualization will accomplish this?

- AA histogram showing whether the most important input feature is Gaussian.

- BA scatter plot with points colored by target variable that uses t-Distributed Stochastic Neighbor Embedding (t-SNE) to visualize the large number of input variables in an easier-to-read dimension.

- CA scatter plot showing the performance of the objective metric over each training iteration.

- DA scatter plot showing the correlation between maximum tree depth and the objective metric.Most Voted

Correct Answer:

B

B

GPT-4o - Answer

GPT-4o - Answer

For the given scenario, where a Machine Learning Specialist is looking to reconfigure hyperparameter ranges to optimize training times, consider the role of visualization types in achieving this. The t-SNE visualization in option B, although primarily a tool for reducing dimensionality and not directly for tuning hyperparameters, facilitates understanding data structure, which indirectly influences hyperparameter setting by revealing patterns in high-dimensional data. Understanding these patterns can guide adjustments in hyperparameter ranges more effectively, making B a viable choice if seen from this broader perspective. However, considering user feedback, option D focusing on the relationship between tree depth and performance metrics provides a more straightforward approach to optimizing a specific hyperparameter, though it's narrower in scope.

send

light_mode

delete

Question #27

A Machine Learning Specialist is creating a new natural language processing application that processes a dataset comprised of 1 million sentences. The aim is to then run Word2Vec to generate embeddings of the sentences and enable different types of predictions.

Here is an example from the dataset:

"The quck BROWN FOX jumps over the lazy dog.`

Which of the following are the operations the Specialist needs to perform to correctly sanitize and prepare the data in a repeatable manner? (Choose three.)

Here is an example from the dataset:

"The quck BROWN FOX jumps over the lazy dog.`

Which of the following are the operations the Specialist needs to perform to correctly sanitize and prepare the data in a repeatable manner? (Choose three.)

- APerform part-of-speech tagging and keep the action verb and the nouns only.

- BNormalize all words by making the sentence lowercase.Most Voted

- CRemove stop words using an English stopword dictionary.Most Voted

- DCorrect the typography on "quck" to "quick.ג€

- EOne-hot encode all words in the sentence.

- FTokenize the sentence into words.Most Voted

Correct Answer:

BCF

BCF

GPT-4o - Answer

GPT-4o - Answer

For the data preprocessing required in a Word2Vec model, it is crucial to begin with text normalization to manage various word forms and improve model accuracy. This typically involves converting all text to lowercase, removing common words—known as stop words—that might skew the analysis, and tokenizing sentences into distinct words. These steps significantly enhance the uniformity and quality of the input data, facilitating more effective word embedding computations by focusing on the meaningful content of the text.

send

light_mode

delete

Question #28

A company is using Amazon Polly to translate plaintext documents to speech for automated company announcements. However, company acronyms are being mispronounced in the current documents.

How should a Machine Learning Specialist address this issue for future documents?

How should a Machine Learning Specialist address this issue for future documents?

- AConvert current documents to SSML with pronunciation tags.

- BCreate an appropriate pronunciation lexicon.Most Voted

- COutput speech marks to guide in pronunciation.

- DUse Amazon Lex to preprocess the text files for pronunciation

Correct Answer:

A

Reference:

https://docs.aws.amazon.com/polly/latest/dg/ssml.html

A

Reference:

https://docs.aws.amazon.com/polly/latest/dg/ssml.html

send

light_mode

delete

Question #29

An insurance company is developing a new device for vehicles that uses a camera to observe drivers' behavior and alert them when they appear distracted. The company created approximately 10,000 training images in a controlled environment that a Machine Learning Specialist will use to train and evaluate machine learning models.

During the model evaluation, the Specialist notices that the training error rate diminishes faster as the number of epochs increases and the model is not accurately inferring on the unseen test images.

Which of the following should be used to resolve this issue? (Choose two.)

During the model evaluation, the Specialist notices that the training error rate diminishes faster as the number of epochs increases and the model is not accurately inferring on the unseen test images.

Which of the following should be used to resolve this issue? (Choose two.)

- AAdd vanishing gradient to the model.

- BPerform data augmentation on the training data.Most Voted

- CMake the neural network architecture complex.

- DUse gradient checking in the model.

- EAdd L2 regularization to the model.Most Voted

Correct Answer:

BE

BE

GPT-4o - Answer

GPT-4o - Answer

When training and evaluating machine learning models that demonstrate fast decrement in training error but poor performance on unseen test images, this typically indicates overfitting. To address this, incorporating L2 regularization is highly effective as it places constraints on the magnitude of the parameters, effectively penalizing large weights to reduce model complexity and improve generalization to new data. Additionally, implementing data augmentation can artificially expand the size of the training dataset by generating diverse scenarios, which enhances the model's ability to generalize rather than memorize the training data. Both techniques are practical for mitigating overfitting in neural network models.

send

light_mode

delete

Question #30

When submitting Amazon SageMaker training jobs using one of the built-in algorithms, which common parameters MUST be specified? (Choose three.)

- AThe training channel identifying the location of training data on an Amazon S3 bucket.

- BThe validation channel identifying the location of validation data on an Amazon S3 bucket.

- CThe IAM role that Amazon SageMaker can assume to perform tasks on behalf of the users.

- DHyperparameters in a JSON array as documented for the algorithm used.

- EThe Amazon EC2 instance class specifying whether training will be run using CPU or GPU.

- FThe output path specifying where on an Amazon S3 bucket the trained model will persist.

Correct Answer:

AEF

AEF

send

light_mode

delete

All Pages