Amazon AWS Certified Machine Learning - Specialty Exam Practice Questions (P. 1)

Question #1

A large mobile network operating company is building a machine learning model to predict customers who are likely to unsubscribe from the service. The company plans to offer an incentive for these customers as the cost of churn is far greater than the cost of the incentive.

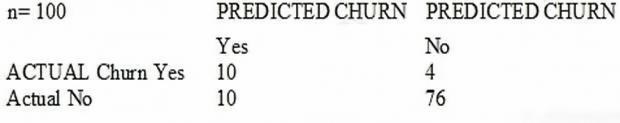

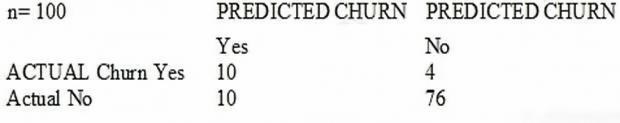

The model produces the following confusion matrix after evaluating on a test dataset of 100 customers:

Based on the model evaluation results, why is this a viable model for production?

- A. The model is 86% accurate and the cost incurred by the company as a result of false negatives is less than the false positives. (Most Voted)

- B. The precision of the model is 86%, which is less than the accuracy of the model.

- C. The model is 86% accurate and the cost incurred by the company as a result of false positives is less than the false negatives.

- D. The precision of the model is 86%, which is greater than the accuracy of the model.

Correct Answer:

A
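
The options above turn on the difference between accuracy and precision, and on how error counts translate into cost. A minimal sketch of those calculations follows. The confusion matrix image is not reproduced in the text, so the counts (`tp`, `fp`, `fn`, `tn`) and the per-customer costs are hypothetical placeholders, chosen only so that they sum to 100 customers.

```python
def accuracy(tp, fp, fn, tn):
    """Share of all predictions that were correct."""
    return (tp + tn) / (tp + fp + fn + tn)


def precision(tp, fp):
    """Share of predicted churners who actually churned."""
    return tp / (tp + fp)


def recall(tp, fn):
    """Share of actual churners the model caught."""
    return tp / (tp + fn)


def error_costs(fp, fn, incentive_cost, churn_cost):
    """Total cost per error type: a false positive wastes an incentive,
    a false negative loses a customer."""
    return {"false_positives": fp * incentive_cost,
            "false_negatives": fn * churn_cost}


# Hypothetical counts, NOT the values from the question's image.
tp, fp, fn, tn = 10, 10, 4, 76

print(f"accuracy  = {accuracy(tp, fp, fn, tn):.2f}")
print(f"precision = {precision(tp, fp):.2f}")
print(f"recall    = {recall(tp, fn):.2f}")
# Hypothetical unit costs, for illustration only.
print(error_costs(fp, fn, incentive_cost=10, churn_cost=100))
```

Substituting the real counts from the matrix shows which metric the 86% figure refers to and how the number of false negatives compares with the number of false positives.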

Question #2

A Machine Learning Specialist is designing a system for improving sales for a company. The objective is to use the large amount of information the company has on users' behavior and product preferences to predict which products users would like based on the users' similarity to other users.

What should the Specialist do to meet this objective?

- A. Build a content-based filtering recommendation engine with Apache Spark ML on Amazon EMR.

- B. Build a collaborative filtering recommendation engine with Apache Spark ML on Amazon EMR. (Most Voted)

- C. Build a model-based filtering recommendation engine with Apache Spark ML on Amazon EMR.

- D. Build a combinative filtering recommendation engine with Apache Spark ML on Amazon EMR.

Correct Answer:

B

Many developers want to implement the famous Amazon model that was used to power the "People who bought this also bought these items" feature on Amazon.com. This model is based on a method called Collaborative Filtering. It takes items such as movies, books, and products that were rated highly by a set of users and recommends them to other users with similar rating patterns. This method works well in domains where explicit ratings or implicit user actions can be gathered and analyzed.

Reference:

https://aws.amazon.com/blogs/big-data/building-a-recommendation-engine-with-spark-ml-on-amazon-emr-using-zeppelin/
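
To make the idea of recommending by user similarity concrete, here is a small user-based collaborative filtering sketch in plain Python. It is not the Spark ML approach from the referenced post (which, as far as I know, relies on ALS matrix factorization). The users, items, and ratings are all hypothetical.

```python
from math import sqrt


def cosine_similarity(a, b):
    """Cosine similarity between two users' rating dicts (item -> rating)."""
    shared = set(a) & set(b)
    if not shared:
        return 0.0
    dot = sum(a[i] * b[i] for i in shared)
    norm_a = sqrt(sum(v * v for v in a.values()))
    norm_b = sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


def recommend(target, ratings, top_n=2):
    """Rank items the target has not rated by similarity-weighted ratings
    from the other users."""
    scores = {}
    for user, items in ratings.items():
        if user == target:
            continue
        sim = cosine_similarity(ratings[target], items)
        for item, rating in items.items():
            if item not in ratings[target]:
                scores[item] = scores.get(item, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)[:top_n]


# Hypothetical ratings: bob's tastes overlap with alice's, carol's do not.
ratings = {
    "alice": {"book_a": 5, "book_b": 4},
    "bob": {"book_a": 5, "book_b": 5, "book_c": 4},
    "carol": {"book_d": 5},
}

print(recommend("alice", ratings))
```

Items liked by users whose ratings resemble the target's rise to the top, which is the behavior the question describes. Content-based filtering would instead compare item attributes.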

Question #3

A Mobile Network Operator is building an analytics platform to analyze and optimize a company's operations using Amazon Athena and Amazon S3.

The source systems send data in .CSV format in real time. The Data Engineering team wants to transform the data to the Apache Parquet format before storing it on Amazon S3.

Which solution takes the LEAST effort to implement?

- A. Ingest .CSV data using Apache Kafka Streams on Amazon EC2 instances and use Kafka Connect S3 to serialize data as Parquet.

- B. Ingest .CSV data from Amazon Kinesis Data Streams and use AWS Glue to convert data into Parquet.

- C. Ingest .CSV data using Apache Spark Structured Streaming in an Amazon EMR cluster and use Apache Spark to convert data into Parquet.

- D. Ingest .CSV data from Amazon Kinesis Data Streams and use Amazon Kinesis Data Firehose to convert data into Parquet. (Most Voted)

Correct Answer:

B

GPT-4o - Answer

Choosing option B, which utilizes Amazon Kinesis Data Streams for data ingestion and AWS Glue for the conversion to Parquet, provides a highly streamlined and low-effort solution. AWS Glue effectively manages the underlying resources and simplifies the data conversion process. This allows you to easily transform large volumes of data without managing complex code or server configurations. Hence, this approach not only reduces the setup and operational burden but also leverages serverless services to optimize cost and scalability.

Question #4

A city wants to monitor its air quality to address the consequences of air pollution. A Machine Learning Specialist needs to forecast the air quality in parts per million of contaminants for the next 2 days in the city. As this is a prototype, only daily data from the last year is available.

Which model is MOST likely to provide the best results in Amazon SageMaker?

- A. Use the Amazon SageMaker k-Nearest-Neighbors (kNN) algorithm on the single time series consisting of the full year of data with a predictor_type of regressor.

- B. Use Amazon SageMaker Random Cut Forest (RCF) on the single time series consisting of the full year of data.

- C. Use the Amazon SageMaker Linear Learner algorithm on the single time series consisting of the full year of data with a predictor_type of regressor. (Most Voted)

- D. Use the Amazon SageMaker Linear Learner algorithm on the single time series consisting of the full year of data with a predictor_type of classifier.

Correct Answer:

C

Reference:

https://aws.amazon.com/blogs/machine-learning/build-a-model-to-predict-the-impact-of-weather-on-urban-air-quality-using-amazon-sagemaker/?ref=Welcome.AI
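
The regressor setting matters because the target (parts per million) is a continuous value, not a class label. The sketch below shows the underlying idea with an ordinary least squares trend fit in plain Python. It is not the SageMaker Linear Learner algorithm itself, and the `daily_ppm` readings are hypothetical.

```python
def fit_line(values):
    """Ordinary least squares fit of value = intercept + slope * day_index."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def forecast(values, horizon=2):
    """Extrapolate the fitted line for the next `horizon` days."""
    intercept, slope = fit_line(values)
    n = len(values)
    return [intercept + slope * (n + h) for h in range(horizon)]


# Hypothetical daily readings; a real prototype would use the full year.
daily_ppm = [10.0, 11.0, 12.0, 13.0, 14.0]

print(forecast(daily_ppm, horizon=2))
```

A classifier would force these readings into discrete classes, which is why option D does not fit a numeric forecast.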

Question #5

A Data Engineer needs to build a model using a dataset containing customer credit card information.

How can the Data Engineer ensure the data remains encrypted and the credit card information is secure?

- A. Use a custom encryption algorithm to encrypt the data and store the data on an Amazon SageMaker instance in a VPC. Use the SageMaker DeepAR algorithm to randomize the credit card numbers.

- B. Use an IAM policy to encrypt the data on the Amazon S3 bucket and Amazon Kinesis to automatically discard credit card numbers and insert fake credit card numbers.

- C. Use an Amazon SageMaker launch configuration to encrypt the data once it is copied to the SageMaker instance in a VPC. Use the SageMaker principal component analysis (PCA) algorithm to reduce the length of the credit card numbers.

- D. Use AWS KMS to encrypt the data on Amazon S3 and Amazon SageMaker, and redact the credit card numbers from the customer data with AWS Glue. (Most Voted)

Correct Answer:

D

GPT-4o - Answer

AWS KMS provides a secure way to encrypt data at rest on both Amazon S3 and SageMaker, ensuring the safety of sensitive information like credit card numbers. Utilizing AWS Glue to redact credit card numbers further enhances data security by transforming the data before it's used in analytical processes, aligning with best practices for handling sensitive personal information. This comprehensive approach not only secures the data but also maintains its usability for analytical purposes without compromising customer privacy.
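
The two steps described above, redaction and KMS-backed encryption at rest, can be sketched roughly as follows. The redaction here is a plain Python regular expression standing in for the AWS Glue transformation, so it is an illustration of the idea, not a Glue job. The second function only builds the keyword arguments one would pass to boto3's S3 `put_object` to request SSE-KMS, so nothing is sent to AWS. The bucket, key, and KMS key ID are hypothetical.

```python
import re

# Matches 13 to 19 digits, optionally separated by single spaces or dashes.
# This is a simplistic pattern for illustration, not a full card validator.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def redact_cards(text, placeholder="[REDACTED]"):
    """Replace anything that looks like a card number with a placeholder."""
    return CARD_PATTERN.sub(placeholder, text)


def encrypted_put_params(bucket, key, body, kms_key_id):
    """Keyword arguments for an S3 put_object call using SSE-KMS."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "aws:kms",
        "SSEKMSKeyId": kms_key_id,
    }


record = "customer 42 paid with 4111 1111 1111 1111 on 2020-01-01"
clean = redact_cards(record)
print(clean)

# Hypothetical bucket, object key, and KMS key ID.
params = encrypted_put_params(
    "example-bucket", "clean/records.txt", clean.encode(), "example-kms-key-id"
)
print(params["ServerSideEncryption"])
```

Redacting before the data reaches the training environment means the model never sees raw card numbers, and the stored objects are still encrypted at rest.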

Question #6

A Machine Learning Specialist is using an Amazon SageMaker notebook instance in a private subnet of a corporate VPC. The ML Specialist has important data stored on the Amazon SageMaker notebook instance's Amazon EBS volume, and needs to take a snapshot of that EBS volume. However, the ML Specialist cannot find the Amazon SageMaker notebook instance's EBS volume or Amazon EC2 instance within the VPC.

Why is the ML Specialist not seeing the instance in the VPC?

- A. Amazon SageMaker notebook instances are based on the EC2 instances within the customer account, but they run outside of VPCs.

- B. Amazon SageMaker notebook instances are based on the Amazon ECS service within customer accounts.

- C. Amazon SageMaker notebook instances are based on EC2 instances running within AWS service accounts. (Most Voted)

- D. Amazon SageMaker notebook instances are based on AWS ECS instances running within AWS service accounts.

Correct Answer:

C

Reference:

https://docs.aws.amazon.com/sagemaker/latest/dg/gs-setup-working-env.html

Question #7

A Machine Learning Specialist is building a model that will perform time series forecasting using Amazon SageMaker. The Specialist has finished training the model and is now planning to perform load testing on the endpoint so they can configure Auto Scaling for the model variant.

Which approach will allow the Specialist to review the latency, memory utilization, and CPU utilization during the load test?

- A. Review SageMaker logs that have been written to Amazon S3 by leveraging Amazon Athena and Amazon QuickSight to visualize logs as they are being produced.

- B. Generate an Amazon CloudWatch dashboard to create a single view for the latency, memory utilization, and CPU utilization metrics that are outputted by Amazon SageMaker. (Most Voted)

- C. Build custom Amazon CloudWatch Logs and then leverage Amazon ES and Kibana to query and visualize the log data as it is generated by Amazon SageMaker.

- D. Send Amazon CloudWatch Logs that were generated by Amazon SageMaker to Amazon ES and use Kibana to query and visualize the log data.

Correct Answer:

B

Reference:

https://docs.aws.amazon.com/sagemaker/latest/dg/monitoring-cloudwatch.html
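
A single-view dashboard of the kind described in option B might be defined along these lines. The sketch only builds the dashboard body JSON, which one would then pass to CloudWatch's `put_dashboard` call; nothing is sent to AWS. The metric names and namespaces reflect my understanding of the SageMaker CloudWatch documentation referenced above and are worth verifying there. The endpoint, variant, and region values are hypothetical.

```python
import json


def endpoint_dashboard_body(endpoint_name, variant_name, region):
    """Build a CloudWatch dashboard body with latency, CPU, and memory widgets."""
    dims = ["EndpointName", endpoint_name, "VariantName", variant_name]

    def widget(title, namespace, metric, y):
        return {
            "type": "metric",
            "x": 0, "y": y, "width": 12, "height": 6,
            "properties": {
                "title": title,
                "metrics": [[namespace, metric, *dims]],
                "period": 60,
                "stat": "Average",
                "region": region,
            },
        }

    widgets = [
        # Invocation metrics are assumed to live in the AWS/SageMaker namespace.
        widget("Model latency", "AWS/SageMaker", "ModelLatency", 0),
        # Instance metrics are assumed to live in /aws/sagemaker/Endpoints.
        widget("CPU utilization", "/aws/sagemaker/Endpoints", "CPUUtilization", 6),
        widget("Memory utilization", "/aws/sagemaker/Endpoints", "MemoryUtilization", 12),
    ]
    return json.dumps({"widgets": widgets})


# Hypothetical endpoint, variant, and region.
body = endpoint_dashboard_body("forecast-endpoint", "AllTraffic", "us-east-1")
print(body)
```

Because these metrics are already published to CloudWatch, the dashboard needs no extra log shipping, unlike the Athena or Amazon ES options.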

Question #8

A manufacturing company has structured and unstructured data stored in an Amazon S3 bucket. A Machine Learning Specialist wants to use SQL to run queries on this data.

Which solution requires the LEAST effort to be able to query this data?

- A. Use AWS Data Pipeline to transform the data and Amazon RDS to run queries.

- B. Use AWS Glue to catalogue the data and Amazon Athena to run queries. (Most Voted)

- C. Use AWS Batch to run ETL on the data and Amazon Aurora to run the queries.

- D. Use AWS Lambda to transform the data and Amazon Kinesis Data Analytics to run queries.

Correct Answer:

B

GPT-4o - Answer

Option B, using AWS Glue to catalog the data coupled with Amazon Athena for SQL querying, presents a streamlined and low-effort solution for handling both structured and unstructured data in S3. AWS Glue automatically catalogs your data, making it ready for query without further data movement, while Athena allows you to run SQL queries directly on the data stored in S3. This combination not only reduces the complexity typically associated with data transformation and querying processes but also leverages fully managed services to minimize the operational load. This setup is highly efficient for ad-hoc queries and standard SQL usage.

Question #9

A Machine Learning Specialist is developing a custom video recommendation model for an application. The dataset used to train this model is very large with millions of data points and is hosted in an Amazon S3 bucket. The Specialist wants to avoid loading all of this data onto an Amazon SageMaker notebook instance because it would take hours to move and will exceed the attached 5 GB Amazon EBS volume on the notebook instance.

Which approach allows the Specialist to use all the data to train the model?

- A. Load a smaller subset of the data into the SageMaker notebook and train locally. Confirm that the training code is executing and the model parameters seem reasonable. Initiate a SageMaker training job using the full dataset from the S3 bucket using Pipe input mode. (Most Voted)

- B. Launch an Amazon EC2 instance with an AWS Deep Learning AMI and attach the S3 bucket to the instance. Train on a small amount of the data to verify the training code and hyperparameters. Go back to Amazon SageMaker and train using the full dataset.

- C. Use AWS Glue to train a model using a small subset of the data to confirm that the data will be compatible with Amazon SageMaker. Initiate a SageMaker training job using the full dataset from the S3 bucket using Pipe input mode.

- D. Load a smaller subset of the data into the SageMaker notebook and train locally. Confirm that the training code is executing and the model parameters seem reasonable. Launch an Amazon EC2 instance with an AWS Deep Learning AMI and attach the S3 bucket to train the full dataset.

Correct Answer:

A

GPT-4o - Answer

Using Pipe input mode in Amazon SageMaker is highly effective for handling large datasets, like the one in your scenario hosted on S3. This mode streams the data directly to the training instance, bypassing the need to download large volumes of data to your SageMaker notebook instance, which is constrained by disk space and bandwidth. In addition, it starts the training process more quickly and improves data throughput compared to traditional methods that require full dataset downloads. This approach not only satisfies the model's data needs but optimizes resource usage and training time.
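
For illustration, a training job that streams its input in Pipe mode could be requested roughly as shown here. The sketch builds the request dictionary for the SageMaker `CreateTrainingJob` API without calling AWS. The job name, image URI, role ARN, S3 locations, instance type, and runtime limit are all hypothetical.

```python
def pipe_mode_training_request(job_name, image_uri, role_arn,
                               train_s3_uri, output_s3_uri):
    """Build a CreateTrainingJob request that streams S3 data in Pipe mode."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            # "Pipe" streams data from S3 instead of downloading it first.
            "TrainingInputMode": "Pipe",
        },
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {
                "S3DataSource": {
                    "S3DataType": "S3Prefix",
                    "S3Uri": train_s3_uri,
                    "S3DataDistributionType": "FullyReplicated",
                }
            },
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        # Hypothetical sizing; Pipe mode keeps the local volume requirement small.
        "ResourceConfig": {
            "InstanceType": "ml.m5.xlarge",
            "InstanceCount": 1,
            "VolumeSizeInGB": 10,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},
    }


# All identifiers below are placeholders.
request = pipe_mode_training_request(
    "video-recs-full",
    "123456789012.dkr.ecr.us-east-1.amazonaws.com/example-image:latest",
    "arn:aws:iam::123456789012:role/ExampleSageMakerRole",
    "s3://example-bucket/train/",
    "s3://example-bucket/output/",
)
print(request["AlgorithmSpecification"]["TrainingInputMode"])
```

The training job runs on its own instances, so the notebook's 5 GB volume is never involved in handling the full dataset.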

Question #10

A Machine Learning Specialist has completed a proof of concept for a company using a small data sample, and now the Specialist is ready to implement an end-to-end solution in AWS using Amazon SageMaker. The historical training data is stored in Amazon RDS.

Which approach should the Specialist use for training a model using that data?

- A. Write a direct connection to the SQL database within the notebook and pull data in.

- B. Push the data from Microsoft SQL Server to Amazon S3 using an AWS Data Pipeline and provide the S3 location within the notebook. (Most Voted)

- C. Move the data to Amazon DynamoDB and set up a connection to DynamoDB within the notebook to pull data in.

- D. Move the data to Amazon ElastiCache using AWS DMS and set up a connection within the notebook to pull data in for fast access.

Correct Answer:

B

GPT-4o - Answer

Moving the data to Amazon S3 first is the best strategy. SageMaker integrates directly with S3 for training input, and AWS Data Pipeline simplifies the transfer from SQL Server to S3. Although the question mentions Amazon RDS while the answer mentions Microsoft SQL Server, the logic of using S3 as a staging area for large datasets in SageMaker still holds.
