Amazon AWS Certified Database - Specialty Exam Practice Questions (P. 5)

Question #41

A company has an Amazon RDS Multi-AZ DB instance that is 200 GB in size with an RPO of 6 hours. To meet the company's disaster recovery policies, the database backup needs to be copied into another Region. The company requires the solution to be cost-effective and operationally efficient.

What should a Database Specialist do to copy the database backup into a different Region?

- A. Use Amazon RDS automated snapshots and use AWS Lambda to copy the snapshot into another Region

- B. Use Amazon RDS automated snapshots every 6 hours and use Amazon S3 cross-Region replication to copy the snapshot into another Region

- C. Create an AWS Lambda function to take an Amazon RDS snapshot every 6 hours and use a second Lambda function to copy the snapshot into another Region (Most Voted)

- D. Create a cross-Region read replica for Amazon RDS in another Region and take an automated snapshot of the read replica

Correct Answer:

D

Reference:

https://aws.amazon.com/blogs/database/implementing-a-disaster-recovery-strategy-with-amazon-rds/

Question #42

An Amazon RDS EBS-optimized instance with Provisioned IOPS (PIOPS) storage is using less than half of its allocated IOPS over the course of several hours under constant load. The RDS instance exhibits multi-second read and write latency, and uses all of its maximum bandwidth for read throughput, yet the instance uses less than half of its CPU and RAM resources.

What should a Database Specialist do in this situation to increase performance and return latency to sub-second levels?

- A. Increase the size of the DB instance storage

- B. Change the underlying EBS storage type to General Purpose SSD (gp2)

- C. Disable EBS optimization on the DB instance

- D. Change the DB instance to an instance class with a higher maximum bandwidth (Most Voted)

Correct Answer:

B
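
A rough way to see how an instance can reach its throughput ceiling while staying well under its provisioned IOPS: throughput is approximately IOPS multiplied by I/O size. The sketch below uses hypothetical numbers (20,000 provisioned IOPS, 256 KiB I/Os, a 2,000 MiB/s instance bandwidth cap); none of them come from the question.

```python
def throughput_mib_per_s(iops: float, io_size_kib: float) -> float:
    """Approximate throughput for a given IOPS rate and I/O size."""
    return iops * io_size_kib / 1024


def iops_at_bandwidth_cap(cap_mib_per_s: float, io_size_kib: float) -> float:
    """IOPS rate at which the workload reaches the instance bandwidth cap."""
    return cap_mib_per_s * 1024 / io_size_kib


# Hypothetical values, for illustration only.
PROVISIONED_IOPS = 20_000
IO_SIZE_KIB = 256
INSTANCE_CAP_MIB_PER_S = 2_000

max_iops = iops_at_bandwidth_cap(INSTANCE_CAP_MIB_PER_S, IO_SIZE_KIB)
print(f"IOPS reachable before hitting the cap: {max_iops:.0f}")
print(f"Share of provisioned IOPS usable: {max_iops / PROVISIONED_IOPS:.0%}")
```

With these assumed figures the bandwidth cap is reached at under half of the provisioned IOPS, which matches the symptom pattern described in the question.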

Question #43

After restoring an Amazon RDS snapshot from 3 days ago, a company's Development team cannot connect to the restored RDS DB instance. What is the likely cause of this problem?

- A. The restored DB instance does not have Enhanced Monitoring enabled

- B. The production DB instance is using a custom parameter group

- C. The restored DB instance is using the default security group (Most Voted)

- D. The production DB instance is using a custom option group

Correct Answer:

B

Question #44

A gaming company has implemented a leaderboard in AWS using a Sorted Set data structure within Amazon ElastiCache for Redis. The ElastiCache cluster has been deployed with cluster mode disabled and has a replication group deployed with two additional replicas. The company is planning for a worldwide gaming event and is anticipating a higher write load than what the current cluster can handle.

Which method should a Database Specialist use to scale the ElastiCache cluster ahead of the upcoming event?

- A. Enable cluster mode on the existing ElastiCache cluster and configure separate shards for the Sorted Set across all nodes in the cluster.

- B. Increase the size of the ElastiCache cluster nodes to a larger instance size. (Most Voted)

- C. Create an additional ElastiCache cluster and load-balance traffic between the two clusters.

- D. Use the EXPIRE command and set a higher time to live (TTL) after each call to increment a given key.

Correct Answer:

B

Reference:

https://aws.amazon.com/blogs/database/work-with-cluster-mode-on-amazon-elasticache-for-redis/
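
In Redis Cluster, each key maps to one of 16,384 hash slots via CRC16(key) mod 16384, so a single Sorted Set key always lives on one shard. The sketch below illustrates that mapping. It assumes Python's `binascii.crc_hqx` (CRC-CCITT, XMODEM variant) as the CRC16 implementation, ignores the `{hash tag}` rule, and uses a hypothetical key name.

```python
from binascii import crc_hqx

HASH_SLOTS = 16384  # number of hash slots in Redis Cluster


def key_slot(key: str) -> int:
    """Hash slot for a key, ignoring the {hash tag} rule for simplicity."""
    return crc_hqx(key.encode("utf-8"), 0) % HASH_SLOTS


# Hypothetical key name for the leaderboard Sorted Set.
leaderboard_key = "leaderboard:global"

# Every write against this key resolves to the same slot, hence the same shard.
slots = {key_slot(leaderboard_key) for _ in range(1000)}
print(f"{leaderboard_key!r} -> slot(s) {sorted(slots)}")
```

Because all writes to one key land on one shard, adding shards does not by itself spread the write load of a single Sorted Set.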

Question #45

An ecommerce company has tasked a Database Specialist with creating a reporting dashboard that visualizes critical business metrics that will be pulled from the core production database running on Amazon Aurora. Data that is read by the dashboard should be available within 100 milliseconds of an update.

The Database Specialist needs to review the current configuration of the Aurora DB cluster and develop a cost-effective solution. The solution needs to accommodate the unpredictable read workload from the reporting dashboard without any impact on the write availability and performance of the DB cluster.

Which solution meets these requirements?

- A. Turn on the serverless option in the DB cluster so it can automatically scale based on demand.

- B. Provision a clone of the existing DB cluster for the new Application team.

- C. Create a separate DB cluster for the new workload, refresh from the source DB cluster, and set up ongoing replication using AWS DMS change data capture (CDC).

- D. Add an automatic scaling policy to the DB cluster to add Aurora Replicas to the cluster based on CPU consumption. (Most Voted)

Correct Answer:

A

Question #46

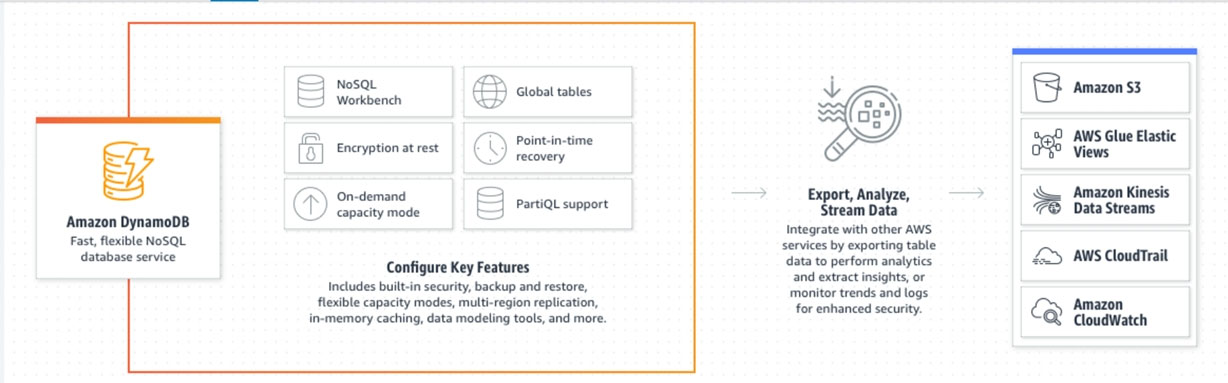

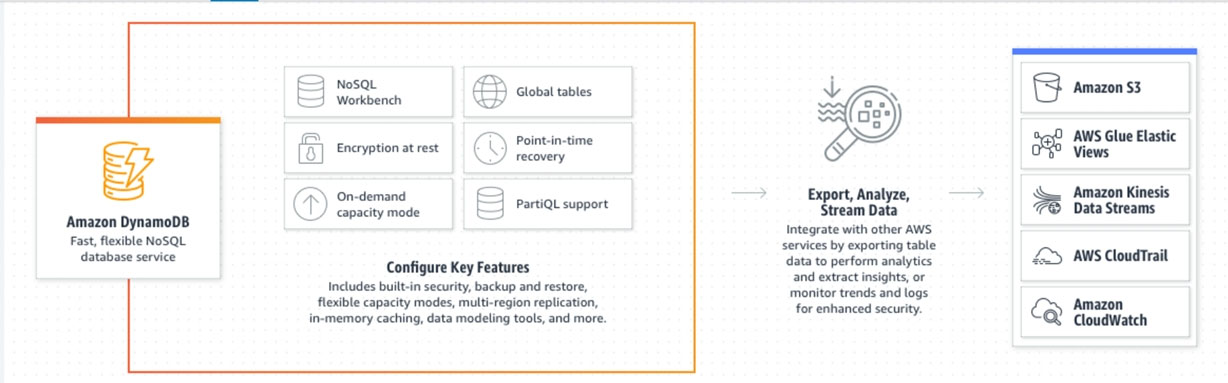

A retail company is about to migrate its online and mobile store to AWS. The company's CEO has strategic plans to grow the brand globally. A Database Specialist has been challenged to provide predictable read and write database performance with minimal operational overhead.

What should the Database Specialist do to meet these requirements?

- A. Use Amazon DynamoDB global tables to synchronize transactions (Most Voted)

- B. Use Amazon EMR to copy the orders table data across Regions

- C. Use Amazon Aurora Global Database to synchronize all transactions

- D. Use Amazon DynamoDB Streams to replicate all DynamoDB transactions and sync them

Correct Answer:

A

Reference:

https://aws.amazon.com/dynamodb/

Question #47

A company is closing one of its remote data centers. This site runs a 100 TB on-premises data warehouse solution. The company plans to use the AWS Schema Conversion Tool (AWS SCT) and AWS DMS for the migration to AWS. The site network bandwidth is 500 Mbps. A Database Specialist wants to migrate the on-premises data using Amazon S3 as the data lake and Amazon Redshift as the data warehouse. This move must take place during a 2-week period when source systems are shut down for maintenance. The data should stay encrypted at rest and in transit.

Which approach has the least risk and the highest likelihood of a successful data transfer?

- A. Set up a VPN tunnel for encrypting data over the network from the data center to AWS. Leverage AWS SCT and apply the converted schema to Amazon Redshift. Once complete, start an AWS DMS task to move the data from the source to Amazon S3. Use AWS Glue to load the data from Amazon S3 to Amazon Redshift.

- B. Leverage AWS SCT and apply the converted schema to Amazon Redshift. Start an AWS DMS task with two AWS Snowball Edge devices to copy data from on-premises to Amazon S3 with AWS KMS encryption. Use AWS DMS to finish copying data to Amazon Redshift. (Most Voted)

- C. Leverage AWS SCT and apply the converted schema to Amazon Redshift. Once complete, use a fleet of 10 TB dedicated encrypted drives using the AWS Import/Export feature to copy data from on-premises to Amazon S3 with AWS KMS encryption. Use AWS Glue to load the data to Amazon Redshift.

- D. Set up a VPN tunnel for encrypting data over the network from the data center to AWS. Leverage a native database export feature to export the data and compress the files. Use the aws s3 cp multi-port upload command to upload these files to Amazon S3 with AWS KMS encryption. Once complete, load the data to Amazon Redshift using AWS Glue.

Correct Answer:

C
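
The figures in the question (100 TB, 500 Mbps, a 2-week window) allow a quick feasibility check on the network-based options. The sketch below assumes decimal units (1 TB = 10^12 bytes), no protocol overhead, and no compression; the 80% utilization figure is an illustrative assumption, not a value from the question.

```python
def transfer_days(data_tb: float, link_mbps: float, utilization: float = 1.0) -> float:
    """Days needed to send data_tb terabytes over a link_mbps link."""
    bits = data_tb * 1e12 * 8                        # decimal terabytes to bits
    seconds = bits / (link_mbps * 1e6 * utilization)
    return seconds / 86_400


best_case = transfer_days(100, 500)                  # full line rate, no overhead
realistic = transfer_days(100, 500, 0.8)             # assumed 80% sustained utilization
print(f"best case: {best_case:.1f} days, at 80%: {realistic:.1f} days")
```

Even at full line rate the transfer takes longer than the 14-day maintenance window, so options that rely only on the network link are unlikely to finish in time.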

Question #48

A company is looking to migrate a 1 TB Oracle database from on-premises to an Amazon Aurora PostgreSQL DB cluster. The company's Database Specialist discovered that the Oracle database is storing 100 GB of large binary objects (LOBs) across multiple tables. The Oracle database has a maximum LOB size of 500 MB with an average LOB size of 350 MB. The Database Specialist has chosen AWS DMS to migrate the data with the largest replication instances.

How should the Database Specialist optimize the database migration using AWS DMS?

- A. Create a single task using full LOB mode with a LOB chunk size of 500 MB to migrate the data and LOBs together

- B. Create two tasks: task1 with LOB tables using full LOB mode with a LOB chunk size of 500 MB and task2 without LOBs

- C. Create two tasks: task1 with LOB tables using limited LOB mode with a maximum LOB size of 500 MB and task2 without LOBs (Most Voted)

- D. Create a single task using limited LOB mode with a maximum LOB size of 500 MB to migrate data and LOBs together

Correct Answer:

C
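
For reference, limited LOB mode is controlled in the `TargetMetadata` section of the AWS DMS task settings, where `LobMaxSize` is given in kilobytes. The sketch below builds that fragment for the 500 MB maximum from the question; the helper name is hypothetical, and it assumes 1 MB = 1,024 KB.

```python
import json


def limited_lob_settings(max_lob_mb: int) -> dict:
    """Build the LOB-related part of a DMS task-settings document."""
    return {
        "TargetMetadata": {
            "SupportLobs": True,
            "FullLobMode": False,
            "LimitedSizeLobMode": True,
            "LobMaxSize": max_lob_mb * 1024,  # DMS expects kilobytes
        }
    }


settings = limited_lob_settings(500)
print(json.dumps(settings, indent=2))
```

In limited LOB mode, LOBs larger than `LobMaxSize` are truncated, so the value should cover the largest LOB in the source tables.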

Question #49

A Database Specialist is designing a disaster recovery strategy for a production Amazon DynamoDB table. The table uses provisioned read/write capacity mode, global secondary indexes, and time to live (TTL). The Database Specialist has restored the latest backup to a new table.

To prepare the new table with identical settings, which steps should be performed? (Choose two.)

- A. Re-create global secondary indexes in the new table

- B. Define IAM policies for access to the new table (Most Voted)

- C. Define the TTL settings (Most Voted)

- D. Encrypt the table from the AWS Management Console or use the update-table command

- E. Set the provisioned read and write capacity

Correct Answer:

AE

Reference:

https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/HowItWorks.ReadWriteCapacityMode.html

Question #50

A Database Specialist is creating Amazon DynamoDB tables, Amazon CloudWatch alarms, and associated infrastructure for an Application team using a development AWS account. The team wants a deployment method that will standardize the core solution components while managing environment-specific settings separately, and wants to minimize rework due to configuration errors.

Which process should the Database Specialist recommend to meet these requirements?

- A. Organize common and environment-specific parameters hierarchically in the AWS Systems Manager Parameter Store, then reference the parameters dynamically from an AWS CloudFormation template. Deploy the CloudFormation stack using the environment name as a parameter. (Most Voted)

- B. Create a parameterized AWS CloudFormation template that builds the required objects. Keep separate environment parameter files in separate Amazon S3 buckets. Provide an AWS CLI command that deploys the CloudFormation stack directly referencing the appropriate parameter bucket.

- C. Create a parameterized AWS CloudFormation template that builds the required objects. Import the template into the CloudFormation interface in the AWS Management Console. Make the required changes to the parameters and deploy the CloudFormation stack.

- D. Create an AWS Lambda function that builds the required objects using an AWS SDK. Set the required parameter values in a test event in the Lambda console for each environment that the Application team can modify, as needed. Deploy the infrastructure by triggering the test event in the console.

Correct Answer:

C

Reference:

https://aws.amazon.com/blogs/mt/aws-cloudformation-signed-sealed-and-deployed/
