Amazon AWS Certified Database - Specialty Exam Practice Questions (P. 4)

Question #31

A global digital advertising company captures browsing metadata to contextually display relevant images, pages, and links to targeted users. A single page load can generate multiple events that need to be stored individually. The maximum size of an event is 200 KB and the average size is 10 KB. Each page load must query the user's browsing history to provide targeting recommendations. The advertising company expects over 1 billion page visits per day from users in the United States, Europe, Hong Kong, and India. The structure of the metadata varies depending on the event. Additionally, the browsing metadata must be written and read with very low latency to ensure a good viewing experience for the users.

Which database solution meets these requirements?

- A. Amazon DocumentDB

- B. Amazon RDS Multi-AZ deployment

- C. Amazon DynamoDB global table (Most Voted)

- D. Amazon Aurora Global Database

Correct Answer:

C

Reference:

https://docs.aws.amazon.com/amazondynamodb/latest/developerguide/GlobalTables.html
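
A quick sizing sketch for this scenario, assuming standard (non-transactional) DynamoDB writes: every event, including the 200 KB maximum, fits within DynamoDB's 400 KB item limit, and write capacity is consumed in 1 KB units per write. The question does not say how many events a page load produces, so `events_per_page` below is a hypothetical input.

```python
import math

# DynamoDB unit sizes for standard (non-transactional) operations.
MAX_ITEM_BYTES = 400 * 1024   # maximum DynamoDB item size: 400 KB
WRITE_UNIT_BYTES = 1024       # 1 WCU covers one write of up to 1 KB
SECONDS_PER_DAY = 86_400


def fits_in_item(event_bytes: int) -> bool:
    """True if a single event can be stored as one DynamoDB item."""
    return event_bytes <= MAX_ITEM_BYTES


def wcu_per_write(item_bytes: int) -> int:
    """Write capacity units consumed by one standard write of this size."""
    return math.ceil(item_bytes / WRITE_UNIT_BYTES)


def avg_events_per_second(page_visits_per_day: int, events_per_page: int) -> float:
    """Average event rate; events_per_page is an assumed value, not from the question."""
    return page_visits_per_day * events_per_page / SECONDS_PER_DAY
```

With one event per page load, 1 billion visits per day averages roughly 11,574 events per second, and a 10 KB average event consumes 10 WCUs per write; peak traffic and multiple events per page raise both figures.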

Question #32

A Database Specialist modified an existing parameter group currently associated with a production Amazon RDS for SQL Server Multi-AZ DB instance. The change is to a static parameter that controls the number of user connections allowed on the company's most critical RDS for SQL Server DB instance. This change has been approved for a specific maintenance window to help minimize the impact on users.

How should the Database Specialist apply the parameter group change for the DB instance?

- A. Select the option to apply the change immediately

- B. Allow the preconfigured RDS maintenance window for the given DB instance to control when the change is applied

- C. Apply the change manually by rebooting the DB instance during the approved maintenance window (Most Voted)

- D. Reboot the secondary Multi-AZ DB instance

Correct Answer:

D

Reference:

https://docs.aws.amazon.com/AmazonRDS/latest/UserGuide/CHAP_Troubleshooting.html
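
The question turns on how RDS applies static parameters: the change can only be staged with `ApplyMethod="pending-reboot"`, and it takes effect after the DB instance reboots. The sketch below assumes a boto3 RDS client is passed in as `rds`; the parameter name `user connections` is an assumption about how RDS for SQL Server exposes this setting, and the parameter group and instance names are hypothetical.

```python
def apply_static_parameter(rds, parameter_group: str, instance_id: str,
                           name: str, value: str) -> None:
    """Stage a static parameter change, then reboot so it takes effect.

    `rds` is expected to be a boto3 RDS client, e.g. boto3.client("rds").
    """
    # Static parameters cannot be applied immediately; they are staged as
    # "pending-reboot" and picked up on the next reboot of the instance.
    rds.modify_db_parameter_group(
        DBParameterGroupName=parameter_group,
        Parameters=[{
            "ParameterName": name,          # assumed name, e.g. "user connections"
            "ParameterValue": value,
            "ApplyMethod": "pending-reboot",
        }],
    )
    # Run this step inside the approved maintenance window.
    rds.reboot_db_instance(DBInstanceIdentifier=instance_id)
```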

Question #33

A Database Specialist is designing a new database infrastructure for a ride-hailing application. The application data includes a ride tracking system that stores GPS coordinates for all rides. Real-time statistics and metadata lookups must be performed with high throughput and microsecond latency. The database should be fault tolerant with minimal operational overhead and development effort.

Which solution meets these requirements in the MOST efficient way?

- A. Use Amazon RDS for MySQL as the database and use Amazon ElastiCache

- B. Use Amazon DynamoDB as the database and use DynamoDB Accelerator (Most Voted)

- C. Use Amazon Aurora MySQL as the database and use Aurora's buffer cache

- D. Use Amazon DynamoDB as the database and use Amazon API Gateway

Correct Answer:

D

Reference:

https://aws.amazon.com/solutions/case-studies/lyft/

Question #34

A company is using an Amazon Aurora PostgreSQL DB cluster with an xlarge primary instance and two large Aurora Replicas for high availability and read-only workload scaling. A failover event occurs, and application performance is poor for several minutes. During this time, application servers in all Availability Zones are healthy and responding normally.

What should the company do to eliminate this application performance issue?

- A. Configure both of the Aurora Replicas to the same instance class as the primary DB instance. Enable cache coherence on the DB cluster, set the primary DB instance failover priority to tier-0, and assign a failover priority of tier-1 to the replicas.

- B. Deploy an AWS Lambda function that calls the DescribeDBInstances action to establish which instance has failed, and then use the PromoteReadReplica operation to promote one Aurora Replica to be the primary DB instance. Configure an Amazon RDS event subscription to send a notification to an Amazon SNS topic to which the Lambda function is subscribed.

- C. Configure one Aurora Replica to have the same instance class as the primary DB instance. Implement Aurora PostgreSQL DB cluster cache management. Set the failover priority to tier-0 for the primary DB instance and one replica with the same instance class. Set the failover priority to tier-1 for the other replicas. (Most Voted)

- D. Configure both Aurora Replicas to have the same instance class as the primary DB instance. Implement Aurora PostgreSQL DB cluster cache management. Set the failover priority to tier-0 for the primary DB instance and to tier-1 for the replicas.

Correct Answer:

D
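
The failover behavior in this scenario follows Aurora's promotion rule: the replica with the highest priority (the lowest tier number) is promoted, and among replicas in the same tier the largest instance is chosen. The sketch below models only that rule; `size_rank` is a hypothetical stand-in for instance size. Cluster cache management (the `apg_ccm_enabled` cluster parameter in Aurora PostgreSQL) additionally keeps a tier-0 reader's buffer cache warm, which is why it is paired with a tier-0 replica of the writer's instance class.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Replica:
    name: str
    tier: int       # promotion tier 0-15; a lower number means higher priority
    size_rank: int  # hypothetical: a larger value means a larger instance class


def failover_target(replicas: list[Replica]) -> Replica:
    """Return the replica Aurora's promotion rule would pick.

    Lowest tier wins; among equal tiers the largest instance wins. Aurora breaks
    any remaining tie arbitrarily; min() here simply keeps the first one listed.
    """
    return min(replicas, key=lambda r: (r.tier, -r.size_rank))
```

In the original cluster both replicas are `large`, so whichever one is promoted has less capacity than the old `xlarge` writer; an `xlarge` replica at tier-0 becomes the deterministic failover target.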

Question #35

A company has a database monitoring solution that uses Amazon CloudWatch for its Amazon RDS for SQL Server environment. The cause of a recent spike in CPU utilization could not be determined from the standard metrics that were collected. The CPU spike caused the application to perform poorly, impacting users. A Database Specialist needs to determine what caused the CPU spike.

Which combination of steps should be taken to provide more visibility into the processes and queries running during an increase in CPU load? (Choose two.)

- A. Enable Amazon CloudWatch Events and view the incoming T-SQL statements causing the CPU to spike.

- B. Enable Enhanced Monitoring metrics to view CPU utilization at the RDS SQL Server DB instance level. (Most Voted)

- C. Implement a caching layer to help with repeated queries on the RDS SQL Server DB instance.

- D. Use Amazon QuickSight to view the SQL statement being run.

- E. Enable Amazon RDS Performance Insights to view the database load and filter the load by waits, SQL statements, hosts, or users. (Most Voted)

Correct Answer:

BE
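
Options B and E map to two instance-level settings that can be turned on together. A minimal sketch, assuming `rds` is a boto3 RDS client and `monitoring_role_arn` is an existing IAM role that allows RDS to publish Enhanced Monitoring metrics (both are placeholders, not values from the question):

```python
# Enhanced Monitoring granularities in seconds; 0 would disable it.
VALID_INTERVALS = (1, 5, 10, 15, 30, 60)


def enable_cpu_diagnostics(rds, instance_id: str, monitoring_role_arn: str,
                           interval_seconds: int = 60) -> None:
    """Turn on Enhanced Monitoring and Performance Insights for one DB instance."""
    if interval_seconds not in VALID_INTERVALS:
        raise ValueError(f"unsupported Enhanced Monitoring interval: {interval_seconds}")
    rds.modify_db_instance(
        DBInstanceIdentifier=instance_id,
        MonitoringInterval=interval_seconds,   # OS-level metrics (option B)
        MonitoringRoleArn=monitoring_role_arn,
        EnablePerformanceInsights=True,        # DB load by waits/SQL/hosts/users (option E)
        ApplyImmediately=True,
    )
```

Enhanced Monitoring then exposes OS-level metrics, including a per-process view of CPU usage, while Performance Insights shows database load that can be filtered by waits, SQL statements, hosts, or users.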

Question #36

A company is using Amazon Aurora with Aurora Replicas for read-only workload scaling. A Database Specialist needs to split up two read-only applications so that each application always connects to a dedicated replica. The Database Specialist wants to implement load balancing and high availability for the read-only applications.

Which solution meets these requirements?

- A. Use a specific instance endpoint for each replica and add the instance endpoint to each read-only application connection string.

- B. Use reader endpoints for both the read-only workload applications.

- C. Use a reader endpoint for one read-only application and use an instance endpoint for the other read-only application.

- D. Use custom endpoints for the two read-only applications. (Most Voted)

Correct Answer:

B

Reference:

https://rimzy.net/category/amazon-rds/page/4/
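
For reference, a custom endpoint (option D) is a reader-type endpoint restricted to the instances you list, and connections to it are distributed across those members. A sketch assuming `rds` is a boto3 RDS client; the cluster ID, endpoint names, and replica IDs are hypothetical.

```python
from __future__ import annotations


def create_dedicated_reader_endpoints(rds, cluster_id: str,
                                      assignments: dict[str, list[str]]) -> None:
    """Create one custom READER endpoint per application.

    `assignments` maps a (hypothetical) endpoint name to the replica
    instance IDs that should serve that application.
    """
    for endpoint_id, members in assignments.items():
        rds.create_db_cluster_endpoint(
            DBClusterIdentifier=cluster_id,
            DBClusterEndpointIdentifier=endpoint_id,
            EndpointType="READER",
            StaticMembers=members,
        )

# Hypothetical usage:
# create_dedicated_reader_endpoints(
#     boto3.client("rds"), "app-cluster",
#     {"reporting-ro": ["replica-1"], "analytics-ro": ["replica-2"]})
```

Listing a single replica per endpoint gives each application a dedicated replica; listing more than one spreads that application's connections across them and leaves a fallback if one replica is unavailable.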

Question #37

An online gaming company is planning to launch a new game with Amazon DynamoDB as its data store. The database should be designed to support the following use cases:

- Update scores in real time whenever a player is playing the game.

- Retrieve a player's score details for a specific game session.

A Database Specialist decides to implement a DynamoDB table. Each player has a unique user_id and each game has a unique game_id.

Which choice of keys is recommended for the DynamoDB table?

- A. Create a global secondary index with game_id as the partition key

- B. Create a global secondary index with user_id as the partition key

- C. Create a composite primary key with game_id as the partition key and user_id as the sort key

- D. Create a composite primary key with user_id as the partition key and game_id as the sort key (Most Voted)

Correct Answer:

B

Reference:

https://aws.amazon.com/blogs/database/amazon-dynamodb-gaming-use-cases-and-design-patterns/
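
To illustrate the access pattern for the composite key in option D (`user_id` as partition key, `game_id` as sort key), here is an in-memory stand-in rather than DynamoDB itself. `KEY_SCHEMA` shows the shape boto3's `create_table` accepts for such a key; the `ScoreTable` class and its methods are hypothetical.

```python
from __future__ import annotations

# Key schema in the shape boto3's create_table(KeySchema=...) accepts.
KEY_SCHEMA = [
    {"AttributeName": "user_id", "KeyType": "HASH"},   # partition key
    {"AttributeName": "game_id", "KeyType": "RANGE"},  # sort key
]


class ScoreTable:
    """Hypothetical in-memory stand-in for a table keyed by (user_id, game_id)."""

    def __init__(self) -> None:
        self._items: dict[str, dict[str, dict]] = {}

    def put_score(self, user_id: str, game_id: str, score: int) -> None:
        # Like PutItem: writing the same key pair again overwrites the item,
        # which is how real-time score updates behave.
        self._items.setdefault(user_id, {})[game_id] = {
            "user_id": user_id, "game_id": game_id, "score": score,
        }

    def get_score(self, user_id: str, game_id: str) -> dict | None:
        # Like GetItem with both key attributes: one player, one game session.
        return self._items.get(user_id, {}).get(game_id)

    def games_for_user(self, user_id: str) -> list[dict]:
        # Like Query on the partition key: all of a player's items in sort-key order.
        games = self._items.get(user_id, {})
        return [games[g] for g in sorted(games)]
```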

Question #38

A Database Specialist migrated an existing production MySQL database from on-premises to an Amazon RDS for MySQL DB instance. However, after the migration, the database needed to be encrypted at rest using AWS KMS. Because of the size of the database, reloading the data into an encrypted database would be too time-consuming, so it is not an option.

How should the Database Specialist satisfy this new requirement?

- A. Create a snapshot of the unencrypted RDS DB instance. Create an encrypted copy of the unencrypted snapshot. Restore the encrypted snapshot copy. (Most Voted)

- B. Modify the RDS DB instance. Enable the AWS KMS encryption option that leverages the AWS CLI.

- C. Restore an unencrypted snapshot into a MySQL RDS DB instance that is encrypted.

- D. Create an encrypted read replica of the RDS DB instance. Promote it to master.

Correct Answer:

A
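
Option A can be scripted end to end. A sketch assuming `rds` is a boto3 RDS client; the snapshot names are hypothetical, and the KMS key ID and new instance name are placeholders you supply. Once the new instance is available, applications would be repointed to it.

```python
def encrypt_rds_instance_via_snapshot(rds, source_instance: str, kms_key_id: str,
                                      new_instance: str) -> None:
    """Snapshot -> encrypted snapshot copy -> restore, as in option A."""
    snap = f"{source_instance}-unencrypted"      # hypothetical naming scheme
    enc_snap = f"{source_instance}-encrypted"

    # 1. Snapshot the unencrypted instance and wait until it is available.
    rds.create_db_snapshot(DBInstanceIdentifier=source_instance,
                           DBSnapshotIdentifier=snap)
    rds.get_waiter("db_snapshot_available").wait(DBSnapshotIdentifier=snap)

    # 2. Copy the snapshot; supplying KmsKeyId makes the copy encrypted.
    rds.copy_db_snapshot(SourceDBSnapshotIdentifier=snap,
                         TargetDBSnapshotIdentifier=enc_snap,
                         KmsKeyId=kms_key_id)
    rds.get_waiter("db_snapshot_available").wait(DBSnapshotIdentifier=enc_snap)

    # 3. Restore a new, encrypted DB instance from the encrypted copy.
    rds.restore_db_instance_from_db_snapshot(DBInstanceIdentifier=new_instance,
                                             DBSnapshotIdentifier=enc_snap)
    rds.get_waiter("db_instance_available").wait(DBInstanceIdentifier=new_instance)
```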

Question #39

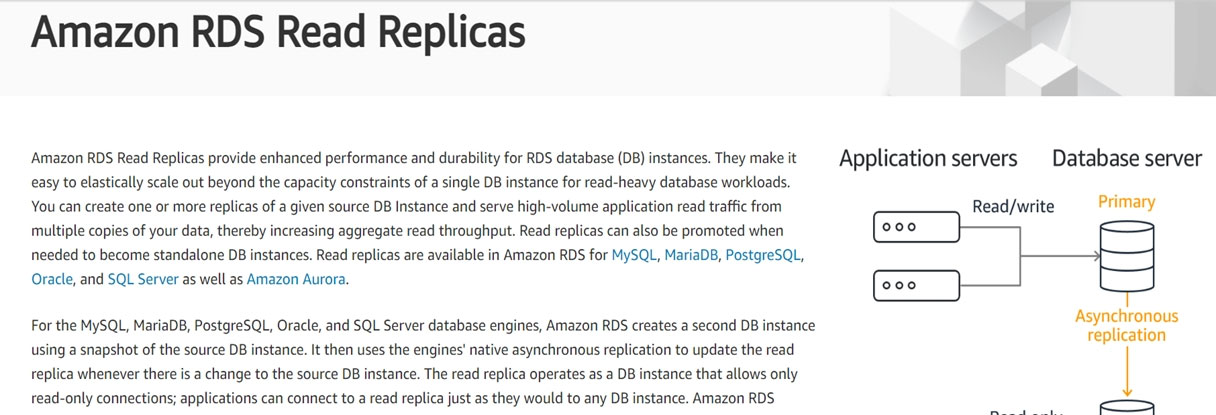

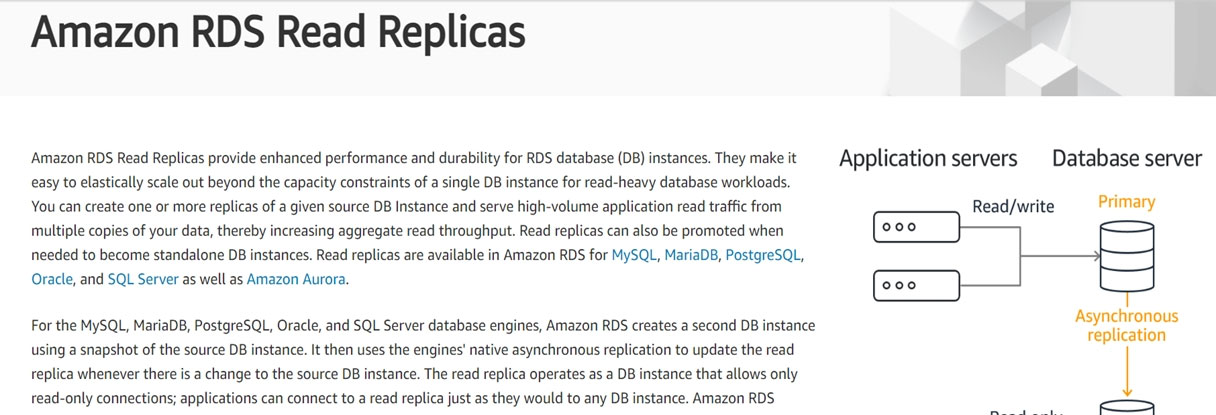

A Database Specialist is planning to create a read replica of an existing Amazon RDS for MySQL Multi-AZ DB instance. When using the AWS Management Console to conduct this task, the Database Specialist discovers that the source RDS DB instance does not appear in the read replica source selection box, so the read replica cannot be created.

What is the most likely reason for this?

- A. The source DB instance has to be converted to Single-AZ first to create a read replica from it.

- B. Enhanced Monitoring is not enabled on the source DB instance.

- C. The minor MySQL version in the source DB instance does not support read replicas.

- D. Automated backups are not enabled on the source DB instance. (Most Voted)

Correct Answer:

D

Reference:

https://aws.amazon.com/rds/features/read-replicas/
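
RDS only offers an instance as a read replica source when automated backups are enabled, that is, when its backup retention period is greater than zero. A sketch that checks this from a `describe_db_instances` response (passed in as a plain dict, so no AWS call is made here):

```python
from __future__ import annotations


def can_be_replica_source(db_instance: dict) -> bool:
    """Automated backups are enabled when BackupRetentionPeriod > 0."""
    return db_instance.get("BackupRetentionPeriod", 0) > 0


def eligible_replica_sources(describe_response: dict) -> list[str]:
    """Instance IDs from a describe_db_instances() response that can act as
    a read replica source (automated backups enabled)."""
    return [
        db["DBInstanceIdentifier"]
        for db in describe_response.get("DBInstances", [])
        if can_be_replica_source(db)
    ]
```

If an instance is missing from that list, setting a nonzero `BackupRetentionPeriod` through `modify_db_instance` turns on automated backups.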

Question #40

A Database Specialist has migrated an on-premises Oracle database to Amazon Aurora PostgreSQL. The schema and the data have been migrated successfully.

The on-premises database server was also being used to run database maintenance cron jobs written in Python to perform tasks including data purging and generating data exports. The logs for these jobs show that, most of the time, the jobs completed within 5 minutes, but a few jobs took up to 10 minutes to complete. These maintenance jobs need to be set up for Aurora PostgreSQL.

How can the Database Specialist schedule these jobs so the setup requires minimal maintenance and provides high availability?

- A. Create cron jobs on an Amazon EC2 instance to run the maintenance jobs following the required schedule.

- B. Connect to the Aurora host and create cron jobs to run the maintenance jobs following the required schedule.

- C. Create AWS Lambda functions to run the maintenance jobs and schedule them with Amazon CloudWatch Events. (Most Voted)

- D. Create the maintenance job using the Amazon CloudWatch job scheduling plugin.

Correct Answer:

D

Reference:

https://docs.aws.amazon.com/systems-manager/latest/userguide/mw-cli-task-options.html
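
Option C depends on the jobs fitting within AWS Lambda's 15-minute (900-second) maximum timeout. A small check, with the 1.5x safety margin as an arbitrary assumption, plus a helper that builds a daily EventBridge (CloudWatch Events) cron expression; the schedule time is hypothetical.

```python
LAMBDA_MAX_TIMEOUT_SECONDS = 15 * 60  # AWS Lambda hard limit: 900 seconds


def fits_in_lambda(longest_job_seconds: int, safety_margin: float = 1.5) -> bool:
    """True if the longest observed job, padded by a margin, fits Lambda's limit."""
    return longest_job_seconds * safety_margin <= LAMBDA_MAX_TIMEOUT_SECONDS


def daily_cron(hour_utc: int, minute: int = 0) -> str:
    """EventBridge cron fields: minutes hours day-of-month month day-of-week year.

    One of day-of-month / day-of-week must be '?', so day-of-week is '?' here.
    """
    if not (0 <= hour_utc <= 23 and 0 <= minute <= 59):
        raise ValueError("invalid time of day")
    return f"cron({minute} {hour_utc} * * ? *)"
```

The longest observed job (10 minutes) still fits with that margin: 600 s x 1.5 = 900 s. `daily_cron(2)` yields `cron(0 2 * * ? *)`, which runs every day at 02:00 UTC.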
