Oracle 1z0-062 Exam Practice Questions (P. 3)

Question #21

Which two statements are true about extents? (Choose two.)

- A. Blocks belonging to an extent can be spread across multiple data files.

- B. Data blocks in an extent are logically contiguous but can be non-contiguous on disk.

- C. The blocks of a newly allocated extent, although free, may have been used before.

- D. Data blocks in an extent are automatically reclaimed for use by other objects in a tablespace when all the rows in a table are deleted.

Correct Answer:

BC

Question #22

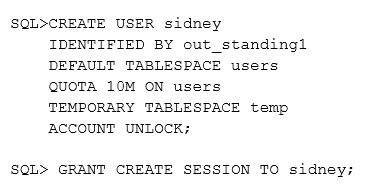

You execute the commands:

Which two statements are true? (Choose two.)

Which two statements are true? (Choose two.)

- A. The create user command fails if any role with the name Sidney exists in the database.

- B. The user Sidney can connect to the database instance but cannot perform sort operations because no space quota is specified for the temp tablespace.

- C. The user Sidney is created but cannot connect to the database instance because no profile is default.

- D. The user Sidney can connect to the database instance but requires relevant privileges to create objects in the users tablespace.

- E. The user Sidney is created and authenticated by the operating system.

Correct Answer:

AD

Question #23

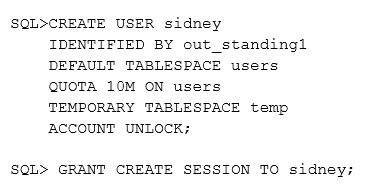

Examine the query and its output:

What might have caused three of the alerts to disappear?

What might have caused three of the alerts to disappear?

- A. The threshold alerts were cleared and transferred to DBA_ALERT_HISTORY.

- B. An Automatic Workload Repository (AWR) snapshot was taken before the execution of the second query.

- C. An Automatic Database Diagnostic Monitor (ADDM) report was generated before the execution of the second query.

- D. The database instance was restarted before the execution of the second query.

Correct Answer:

D

Question #24

Which two statements are true? (Choose two.)

- A. A role cannot be assigned external authentication.

- B. A role can be granted to other roles.

- C. A role can contain both system and object privileges.

- D. The predefined resource role includes the unlimited_tablespace privilege.

- E. All roles are owned by the sys user.

- F. The predefined connect role is always automatically granted to all new users at the time of their creation.

Correct Answer:

BC
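Options B and C can be checked with a short sketch. The role names `app_read` and `app_admin` are hypothetical, and the `hr.employees` table is assumed to exist (it ships with Oracle's sample schemas, which may not be installed in your database).

```sql
-- Hypothetical role names, for illustration only.
CREATE ROLE app_read;
CREATE ROLE app_admin;

-- (C) One role can hold a system privilege and an object privilege.
GRANT CREATE SESSION TO app_read;            -- system privilege
GRANT SELECT ON hr.employees TO app_read;    -- object privilege (assumes hr.employees exists)

-- (B) A role can be granted to another role.
GRANT app_read TO app_admin;

-- Inspect what the roles contain.
SELECT role, privilege              FROM role_sys_privs  WHERE role = 'APP_READ';
SELECT role, owner, table_name, privilege
                                    FROM role_tab_privs  WHERE role = 'APP_READ';
SELECT role, granted_role           FROM role_role_privs WHERE role = 'APP_ADMIN';
```

Running this requires a user with the CREATE ROLE privilege and the right to grant the listed privileges.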

Question #25

Identify three valid options for adding a pluggable database (PDB) to an existing multitenant container database (CDB).

- A. Use the CREATE PLUGGABLE DATABASE statement to create a PDB using the files from the SEED.

- B. Use the CREATE DATABASE . . . ENABLE PLUGGABLE DATABASE statement to provision a PDB by copying files from the SEED.

- C. Use the DBMS_PDB package to clone an existing PDB.

- D. Use the DBMS_PDB package to plug an Oracle 12c non-CDB database into an existing CDB.

- E. Use the DBMS_PDB package to plug an Oracle 11g Release 2 (11.2.0.3.0) non-CDB database into an existing CDB.

Correct Answer:

ACD

Use the CREATE PLUGGABLE DATABASE statement to create a pluggable database (PDB).

This statement enables you to perform the following tasks:

* (A) Create a PDB by using the seed as a template

Use the create_pdb_from_seed clause to create a PDB by using the seed in the multitenant container database (CDB) as a template. The files associated with the seed are copied to a new location and the copied files are then associated with the new PDB.

* (C) Create a PDB by cloning an existing PDB

Use the create_pdb_clone clause to create a PDB by copying an existing PDB (the source PDB) and then plugging the copy into the CDB. The files associated with the source PDB are copied to a new location and the copied files are associated with the new PDB. This operation is called cloning a PDB.

The source PDB can be plugged in or unplugged. If plugged in, then the source PDB can be in the same CDB or in a remote CDB. If the source PDB is in a remote CDB, then a database link is used to connect to the remote CDB and copy the files.

* Create a PDB by plugging an unplugged PDB or a non-CDB into a CDB

Use the create_pdb_from_xml clause to plug an unplugged PDB or a non-CDB into a CDB, using an XML metadata file.
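The three clauses described above might look roughly like this. It is a minimal sketch: every PDB name, user name, password, and file path is a hypothetical placeholder, and the statements are assumed to run in the CDB root as a user with the CREATE PLUGGABLE DATABASE privilege.

```sql
-- create_pdb_from_seed: copy the seed's files and create a new PDB.
CREATE PLUGGABLE DATABASE pdb_sales
  ADMIN USER pdb_admin IDENTIFIED BY "ChangeMe_1"
  FILE_NAME_CONVERT = ('/u01/oradata/cdb1/pdbseed/',
                       '/u01/oradata/cdb1/pdb_sales/');

-- create_pdb_clone: copy an existing PDB in the same CDB.
-- (In 12.1 the source PDB is expected to be open read-only.)
CREATE PLUGGABLE DATABASE pdb_sales_copy FROM pdb_sales
  FILE_NAME_CONVERT = ('/u01/oradata/cdb1/pdb_sales/',
                       '/u01/oradata/cdb1/pdb_sales_copy/');

-- create_pdb_from_xml: plug in an unplugged PDB or a non-CDB
-- described by an XML metadata file.
CREATE PLUGGABLE DATABASE pdb_legacy
  USING '/u01/stage/pdb_legacy.xml'
  NOCOPY
  TEMPFILE REUSE;

-- A newly created PDB is mounted; open it before use.
ALTER PLUGGABLE DATABASE pdb_sales OPEN;
```

For a 12c non-CDB, the XML file is typically generated first by running `DBMS_PDB.DESCRIBE` in the non-CDB while it is open read-only, which is where the DBMS_PDB package mentioned in the options comes in.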

Question #26

Your database supports a DSS workload that involves the execution of complex queries. Currently, the library cache contains the ideal workload for analysis. You want to analyze some of the queries for an application that are cached in the library cache.

What must you do to receive recommendations about the efficient use of indexes and materialized views to improve query performance?

- A. Create a SQL Tuning Set (STS) that contains the queries cached in the library cache and run the SQL Tuning Advisor (STA) on the workload captured in the STS.

- B. Run the Automatic Workload Repository Monitor (ADDM).

- C. Create an STS that contains the queries cached in the library cache and run the SQL Performance Analyzer (SPA) on the workload captured in the STS.

- D. Create an STS that contains the queries cached in the library cache and run the SQL Access Advisor on the workload captured in the STS.

Correct Answer:

D

* SQL Access Advisor is primarily responsible for making schema modification recommendations, such as adding or dropping indexes and materialized views.

SQL Tuning Advisor makes other types of recommendations, such as creating SQL profiles and restructuring SQL statements.

* The query optimizer can also help you tune SQL statements. By using SQL Tuning Advisor and SQL Access Advisor, you can invoke the query optimizer in advisory mode to examine a SQL statement or set of statements and determine how to improve their efficiency. SQL Tuning Advisor and SQL Access Advisor can make various recommendations, such as creating SQL profiles, restructuring SQL statements, creating additional indexes or materialized views, and refreshing optimizer statistics.

Note:

* Decision support system (DSS) workload

* The library cache is a shared pool memory structure that stores executable SQL and PL/SQL code. This cache contains the shared SQL and PL/SQL areas and control structures such as locks and library cache handles.
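The approach in answer D could be sketched as follows. The STS name `DSS_STS`, the task name `DSS_ACCESS_TASK`, and the filter on the `SH` schema are assumptions made for illustration. The caller is assumed to hold the ADVISOR and ADMINISTER SQL TUNING SET privileges.

```sql
DECLARE
  l_cursor    DBMS_SQLTUNE.SQLSET_CURSOR;
  l_task_id   NUMBER;
  l_task_name VARCHAR2(30) := 'DSS_ACCESS_TASK';   -- hypothetical task name
BEGIN
  -- 1. Create an empty SQL Tuning Set.
  DBMS_SQLTUNE.CREATE_SQLSET(
    sqlset_name => 'DSS_STS',                      -- hypothetical STS name
    description => 'Queries captured from the library cache');

  -- 2. Load statements from the cursor cache (filter is an assumption).
  OPEN l_cursor FOR
    SELECT VALUE(p)
    FROM   TABLE(DBMS_SQLTUNE.SELECT_CURSOR_CACHE(
                   basic_filter => 'parsing_schema_name = ''SH''')) p;

  DBMS_SQLTUNE.LOAD_SQLSET(
    sqlset_name     => 'DSS_STS',
    populate_cursor => l_cursor);

  -- 3. Create a SQL Access Advisor task, link the STS, and run it.
  DBMS_ADVISOR.CREATE_TASK(DBMS_ADVISOR.SQLACCESS_ADVISOR,
                           l_task_id, l_task_name);
  DBMS_ADVISOR.ADD_STS_REF(l_task_name, USER, 'DSS_STS');
  DBMS_ADVISOR.EXECUTE_TASK(l_task_name);
END;
/

-- 4. Retrieve the recommended index and materialized view DDL as a script.
SELECT DBMS_ADVISOR.GET_TASK_SCRIPT('DSS_ACCESS_TASK') FROM dual;
```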

Question #27

The following parameters are set for your Oracle 12c database instance:

OPTIMIZER_CAPTURE_SQL_PLAN_BASELINES=FALSE

OPTIMIZER_USE_SQL_PLAN_BASELINES=TRUE

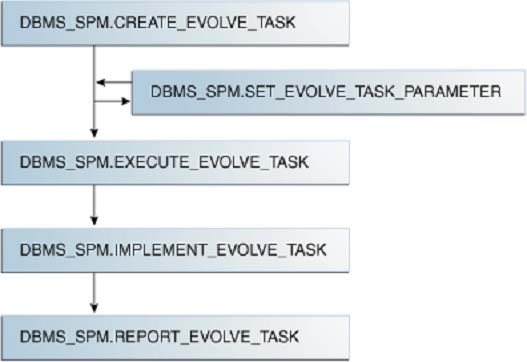

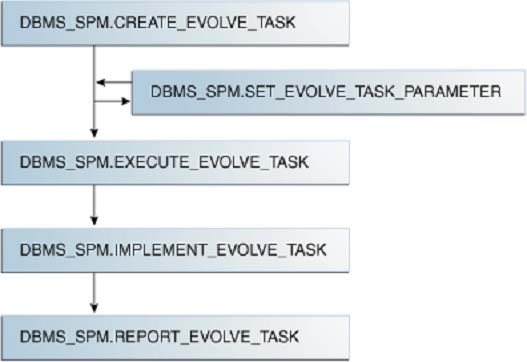

You want to manage the SQL plan evolution task manually. Examine the following steps:

1. Set the evolve task parameters.

2. Create the evolve task by using the DBMS_SPM.CREATE_EVOLVE_TASK function.

3. Implement the recommendations in the task by using the DBMS_SPM.IMPLEMENT_EVOLVE_TASK function.

4. Execute the evolve task by using the DBMS_SPM.EXECUTE_EVOLVE_TASK function.

5. Report the task outcome by using the DBMS_SPM.REPORT_EVOLVE_TASK function.

Identify the correct sequence of steps:

- A. 2, 4, 5

- B. 2, 1, 4, 3, 5

- C. 1, 2, 3, 4, 5

- D. 1, 2, 4, 5

Correct Answer:

B

* Evolving SQL Plan Baselines

The steps, in the order given by answer B:

2. Create the evolve task by using the DBMS_SPM.CREATE_EVOLVE_TASK function.

This function creates an advisor task to prepare the plan evolution of one or more plans for a specified SQL statement. The input parameters can be a SQL handle, plan name or a list of plan names, time limit, task name, and description.

1. Set the evolve task parameters by using DBMS_SPM.SET_EVOLVE_TASK_PARAMETER.

This function updates the value of an evolve task parameter. In this release, the only valid parameter is TIME_LIMIT.

4. Execute the evolve task by using the DBMS_SPM.EXECUTE_EVOLVE_TASK function.

This function executes an evolution task. The input parameters can be the task name, execution name, and execution description. If the execution name is not specified, the advisor generates one, which the function returns.

3. Implement the recommendations in the task by using the DBMS_SPM.IMPLEMENT_EVOLVE_TASK function.

This function implements all recommendations for an evolve task. Essentially, this function is equivalent to using ACCEPT_SQL_PLAN_BASELINE for all recommended plans. Input parameters include task name, plan name, owner name, and execution name.

5. Report the task outcome by using the DBMS_SPM.REPORT_EVOLVE_TASK function.

This function displays the results of an evolve task as a CLOB. Input parameters include the task name and section of the report to include.
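The sequence in answer B (2, 1, 4, 3, 5) might be scripted as below. It is a sketch under assumptions: the SQL handle is a made-up placeholder (a real one would come from DBA_SQL_PLAN_BASELINES), the 600-second time limit is arbitrary, and the caller is assumed to hold the ADMINISTER SQL MANAGEMENT OBJECT privilege.

```sql
SET SERVEROUTPUT ON

DECLARE
  l_task     VARCHAR2(128);
  l_exec     VARCHAR2(128);
  l_accepted NUMBER;
  l_report   CLOB;
BEGIN
  -- Step 2: create the evolve task (sql_handle below is a placeholder).
  l_task := DBMS_SPM.CREATE_EVOLVE_TASK(
              sql_handle => 'SQL_0123456789abcdef');

  -- Step 1: set task parameters; TIME_LIMIT is in seconds (value is arbitrary).
  DBMS_SPM.SET_EVOLVE_TASK_PARAMETER(
    task_name => l_task,
    parameter => 'TIME_LIMIT',
    value     => 600);

  -- Step 4: execute the task; the generated execution name is returned.
  l_exec := DBMS_SPM.EXECUTE_EVOLVE_TASK(task_name => l_task);

  -- Step 3: implement (accept) the recommended plans.
  l_accepted := DBMS_SPM.IMPLEMENT_EVOLVE_TASK(task_name => l_task);

  -- Step 5: fetch the report as a CLOB and print the first 4000 characters.
  l_report := DBMS_SPM.REPORT_EVOLVE_TASK(task_name => l_task);
  DBMS_OUTPUT.PUT_LINE('Plans accepted: ' || l_accepted);
  DBMS_OUTPUT.PUT_LINE(DBMS_LOB.SUBSTR(l_report, 4000, 1));
END;
/
```

In practice many DBAs read the report before implementing anything. The order here simply mirrors the answer key.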

Question #28

In a recent Automatic Workload Repository (AWR) report for your database, you notice a high number of buffer busy waits. The database consists of locally managed tablespaces with free list managed segments.

On further investigation, you find that the buffer busy waits are caused by contention on data blocks.

Which option would you consider first to decrease the wait event immediately?

- A. Decreasing PCTUSED

- B. Decreasing PCTFREE

- C. Increasing the number of DBWn processes

- D. Using Automatic Segment Space Management (ASSM)

- E. Increasing db_buffer_cache based on the V$DB_CACHE_ADVICE recommendation

Correct Answer:

D

* Automatic segment space management (ASSM) is a simpler and more efficient way of managing space within a segment. It completely eliminates any need to specify and tune the PCTUSED, FREELISTS, and FREELIST GROUPS storage parameters for schema objects created in the tablespace. If any of these attributes are specified, they are ignored.

* Oracle introduced Automatic Segment Space Management (ASSM) as a replacement for traditional freelist management, which used one-way linked lists to manage free blocks within tables and indexes. ASSM is commonly called "bitmap freelists" because that is how Oracle implements the internal data structures for free block management.

Note:

* Buffer busy waits are most commonly associated with segment header contention inside the data buffer pool (db_cache_size, etc.).

* The most common remedies for high buffer busy waits include database writer (DBWR) contention tuning, adding freelists (or ASSM), and adding missing indexes.
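Segment space management is fixed when a tablespace is created, so moving a hot segment to ASSM usually means creating a new tablespace and relocating the segment. A minimal sketch follows. The tablespace name, data file path, and the `app.orders` table with its `orders_pk` index are all hypothetical.

```sql
-- Create a locally managed tablespace that uses ASSM (bitmap-managed free space).
CREATE TABLESPACE app_data_assm
  DATAFILE '/u01/oradata/orcl/app_data_assm01.dbf' SIZE 500M
  EXTENT MANAGEMENT LOCAL
  SEGMENT SPACE MANAGEMENT AUTO;

-- Relocate the contended table into the ASSM tablespace.
ALTER TABLE app.orders MOVE TABLESPACE app_data_assm;

-- Moving a table invalidates its indexes; rebuild them afterwards.
ALTER INDEX app.orders_pk REBUILD TABLESPACE app_data_assm;

-- Confirm the setting: AUTO means ASSM, MANUAL means free lists.
SELECT tablespace_name, segment_space_management
FROM   dba_tablespaces
WHERE  tablespace_name = 'APP_DATA_ASSM';
```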

Question #29

Examine this command:

SQL> exec DBMS_STATS.SET_TABLE_PREFS ('SH', 'CUSTOMERS', 'PUBLISH', 'false');

Which three statements are true about the effect of this command? (Choose three.)

- A. Statistics collection is not done for the CUSTOMERS table when schema stats are gathered.

- B. Statistics collection is not done for the CUSTOMERS table when database stats are gathered.

- C. Any existing statistics for the CUSTOMERS table are still available to the optimizer at parse time.

- D. Statistics gathered on the CUSTOMERS table when schema stats are gathered are stored as pending statistics.

- E. Statistics gathered on the CUSTOMERS table when database stats are gathered are stored as pending statistics.

Correct Answer:

CDE

* SET_TABLE_PREFS Procedure

This procedure is used to set the statistics preferences of the specified table in the specified schema.

* Example: Using Pending Statistics

Assume many modifications have been made to the employees table since the last time statistics were gathered. To ensure that the cost-based optimizer is still picking the best plan, statistics should be gathered once again; however, the user is concerned that new statistics will cause the optimizer to choose bad plans when the current ones are acceptable. The user can do the following:

EXEC DBMS_STATS.SET_TABLE_PREFS('hr', 'employees', 'PUBLISH', 'false');

By setting the employees table's PUBLISH preference to FALSE, any statistics gathered from now on will not be automatically published. The newly gathered statistics will be marked as pending.
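For the SH.CUSTOMERS table from the question, the full pending-statistics workflow might look like this. It is a sketch in SQL*Plus syntax, assuming the SH sample schema is installed and the session can gather schema statistics and query DBA views.

```sql
-- Stop publishing newly gathered statistics for SH.CUSTOMERS.
EXEC DBMS_STATS.SET_TABLE_PREFS('SH', 'CUSTOMERS', 'PUBLISH', 'FALSE');

-- Gather schema statistics; CUSTOMERS is still analyzed,
-- but its new statistics are stored as pending (option D).
EXEC DBMS_STATS.GATHER_SCHEMA_STATS('SH');

-- The pending statistics are visible here, not in DBA_TAB_STATISTICS.
SELECT table_name, num_rows, last_analyzed
FROM   dba_tab_pending_stats
WHERE  owner = 'SH' AND table_name = 'CUSTOMERS';

-- Optionally test plans against the pending statistics in this session only.
ALTER SESSION SET optimizer_use_pending_statistics = TRUE;

-- Once satisfied, publish them (or discard with DELETE_PENDING_STATS).
EXEC DBMS_STATS.PUBLISH_PENDING_STATS('SH', 'CUSTOMERS');
```

Until the publish step runs, other sessions keep parsing with the previously published statistics, which is what option C describes.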

Question #30

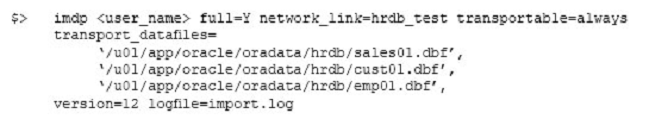

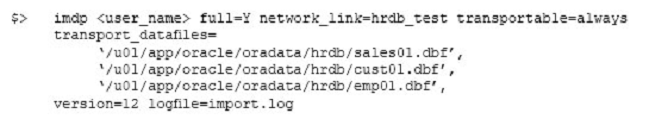

Examine the following impdp command to import a database over the network from a pre-12c Oracle database (source):

Which three are prerequisites for successful execution of the command? (Choose three.)

Which three are prerequisites for successful execution of the command? (Choose three.)

- A. The import operation must be performed on the target database by a user with the DATAPUMP_IMP_FULL_DATABASE role, and the database link must connect to a user with the DATAPUMP_EXP_FULL_DATABASE role on the source database.

- B. All the user-defined tablespaces must be in read-only mode on the source database.

- C. The export dump file must be created before starting the import on the target database.

- D. The source and target database must be running on the same operating system (OS) with the same endianness.

- E. The impdp operation must be performed by the same user that performed the expdp operation.

Correct Answer:

ABD

In this case, impdp is run without performing any conversion. If the endian formats of the source and target differ, a conversion must be performed first.
