Microsoft DP-600 Exam Practice Questions (P. 3)

Question #11

HOTSPOT -

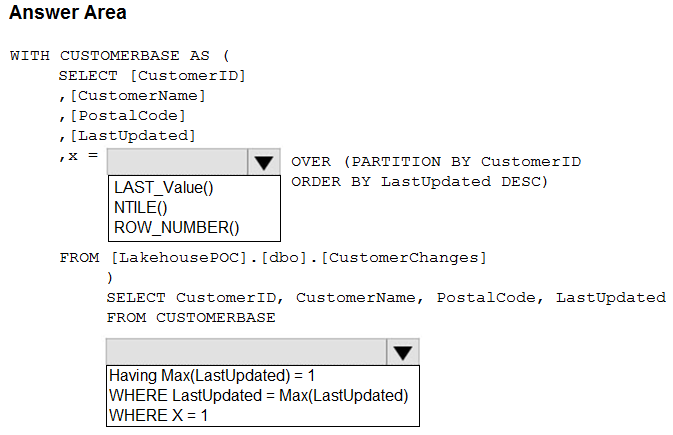

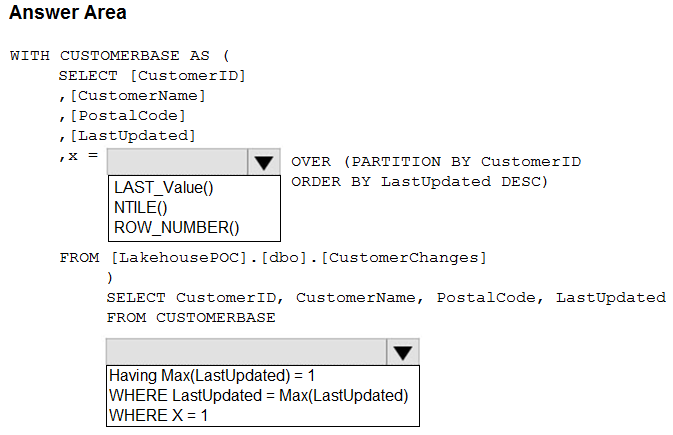

You have a data warehouse that contains a table named Stage.Customers. Stage.Customers contains all the customer record updates from a customer relationship management (CRM) system. There can be multiple updates per customer.

You need to write a T-SQL query that will return the customer ID, name, postal code, and the last updated time of the most recent row for each customer ID.

How should you complete the code? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
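The answer area is only available as an image, so its exact options can't be reproduced here. A common pattern for this requirement is to number each customer's rows with ROW_NUMBER(), partitioned by customer ID and ordered by update time descending, and then keep row 1. The sketch below demonstrates that pattern using Python's built-in sqlite3 module as a stand-in for a Fabric warehouse. It is an illustration under assumptions, not the exam's answer key:

- The column names CustomerID, Name, PostalCode, and LastUpdated are hypothetical.
- The sample rows and the helper latest_per_customer are also hypothetical.
- SQLite needs version 3.25 or later for window functions.

```python
import sqlite3

# Hypothetical sample rows standing in for Stage.Customers:
# (CustomerID, Name, PostalCode, LastUpdated). ISO-8601 timestamp strings
# sort chronologically, so ORDER BY on the text column is safe here.
ROWS = [
    (1, "Contoso", "98052", "2024-01-01 09:00:00"),
    (1, "Contoso Ltd", "98053", "2024-03-15 14:30:00"),
    (2, "Fabrikam", "10001", "2024-02-10 08:00:00"),
    (2, "Fabrikam Inc", "10002", "2024-02-09 17:45:00"),
    (3, "Northwind", "60601", "2024-01-20 12:00:00"),
]

# Number each customer's rows newest-first, then keep only row number 1.
LATEST_ROW_SQL = """
SELECT CustomerID, Name, PostalCode, LastUpdated
FROM (
    SELECT CustomerID, Name, PostalCode, LastUpdated,
           ROW_NUMBER() OVER (
               PARTITION BY CustomerID
               ORDER BY LastUpdated DESC
           ) AS rn
    FROM Stage.Customers
) AS ranked
WHERE rn = 1
ORDER BY CustomerID;
"""


def latest_per_customer(rows):
    """Return the most recent row per customer ID (hypothetical helper)."""
    conn = sqlite3.connect(":memory:")
    try:
        # Attach a second in-memory database named Stage so that the
        # schema-qualified name Stage.Customers resolves as in the question.
        conn.execute("ATTACH DATABASE ':memory:' AS Stage")
        conn.execute(
            "CREATE TABLE Stage.Customers ("
            "CustomerID INTEGER, Name TEXT, PostalCode TEXT, LastUpdated TEXT)"
        )
        conn.executemany("INSERT INTO Stage.Customers VALUES (?, ?, ?, ?)", rows)
        return conn.execute(LATEST_ROW_SQL).fetchall()
    finally:
        conn.close()


if __name__ == "__main__":
    for row in latest_per_customer(ROWS):
        print(row)
```

Ordering by LastUpdated DESC is what makes row number 1 the latest update. If two rows for the same customer share the same timestamp, ROW_NUMBER() keeps one of them arbitrarily. The query shape itself should also be valid T-SQL.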

Question #12

HOTSPOT -

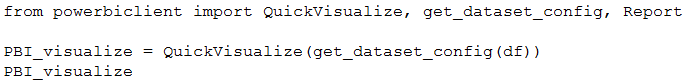

You have a Fabric tenant.

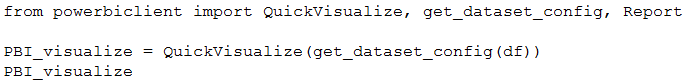

You plan to create a Fabric notebook that will use Spark DataFrames to generate Microsoft Power BI visuals.

You run the following code.

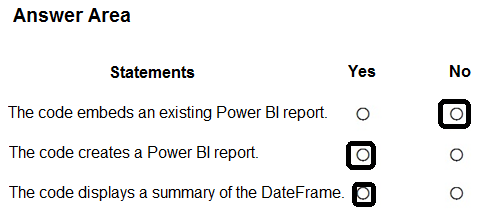

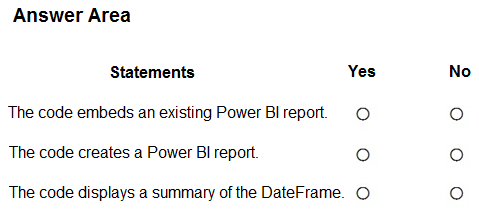

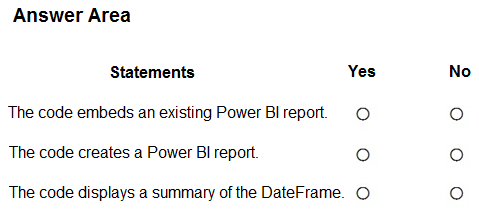

For each of the following statements, select Yes if the statement is true. Otherwise, select No.

NOTE: Each correct selection is worth one point.

Question #13

You are the administrator of a Fabric workspace that contains a lakehouse named Lakehouse1. Lakehouse1 contains the following tables:

Table1: A Delta table created by using a shortcut

Table2: An external table created by using Spark

Table3: A managed table

You plan to connect to Lakehouse1 by using its SQL endpoint.

What will you be able to do after connecting to Lakehouse1?

- A. Read Table3. (Most Voted)

- B. Update the data in Table3.

- C. Read Table2.

- D. Update the data in Table1.

Correct Answer:

A

GPT-4o - Answer

The correct answer is A. A lakehouse's SQL endpoint is read-only. You can query its Delta tables with T-SQL, but you cannot insert, update, or delete data through it, which rules out B and D. Table3, a managed table, can therefore be read. Table2 is an external table created by using Spark, and external tables created with Spark code are not exposed through the SQL endpoint, which rules out C.

Question #14

You have a Fabric tenant that contains a warehouse.

You use a dataflow to load a new dataset from OneLake to the warehouse.

You need to add a Power Query step to identify the maximum values for the numeric columns.

Which function should you include in the step?

- A. Table.MaxN

- B. Table.Max (Most Voted)

- C. Table.Range

- D. Table.Profile

Correct Answer:

B

GPT-4o - Answer

Table.Max is the selected answer for this scenario, in which a Power Query step must identify the maximum values of the numeric columns in a dataset loaded from OneLake into a warehouse. Note, however, that Table.Max(table, comparisonCriteria) does not return a separate maximum for each column. It returns the single row (record) with the largest value according to the comparison criteria. If the goal is the maximum of every numeric column in one step, Table.Profile may fit better. It returns one row per column with summary statistics, including Min and Max. Choose the function whose result shape matches what the step needs to produce.
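To make the difference concrete, here is a plain-Python analogy. It is not Power Query M. A table is modelled as a list of dicts, and two hypothetical helpers stand in for the M functions:

- table_max mimics Table.Max by returning the whole row with the largest value in one column.
- table_profile mimics only the Min and Max columns of Table.Profile, returning one summary per numeric column. The real Table.Profile output also includes Average, StandardDeviation, Count, NullCount, and DistinctCount.

The sample data is made up for illustration.

```python
from numbers import Number

# Hypothetical sample data: each dict is one row of the table.
ROWS = [
    {"Product": "A", "Price": 12.5, "Quantity": 40},
    {"Product": "B", "Price": 30.0, "Quantity": 5},
    {"Product": "C", "Price": 8.0, "Quantity": 120},
]


def table_max(rows, column):
    """Analogy for Table.Max: return the entire row with the largest value in `column`."""
    return max(rows, key=lambda row: row[column])


def table_profile(rows):
    """Analogy for part of Table.Profile: Min and Max for each numeric column."""
    profile = []
    for column in rows[0]:
        # Keep only numeric values; text columns such as Product are skipped here.
        values = [row[column] for row in rows if isinstance(row[column], Number)]
        if values:
            profile.append({"Column": column, "Min": min(values), "Max": max(values)})
    return profile


if __name__ == "__main__":
    print(table_max(ROWS, "Price"))
    print(table_profile(ROWS))
```

table_max(ROWS, "Price") returns product B's entire row. That row's Quantity (5) is actually the column minimum, which shows why a row-returning function alone does not give the maximum of every numeric column. table_profile reports a Max of 30.0 for Price and 120 for Quantity.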

Question #15

You have a Fabric tenant that contains a machine learning model registered in a Fabric workspace.

You need to use the model to generate predictions by using the PREDICT function in a Fabric notebook.

Which two languages can you use to perform model scoring? Each correct answer presents a complete solution.

NOTE: Each correct answer is worth one point.

- A. T-SQL

- B. DAX

- C. Spark SQL (Most Voted)

- D. PySpark (Most Voted)

Correct Answer:

CD

GPT-4o - Answer

In a Fabric notebook, Spark SQL and PySpark are the valid languages for scoring a registered machine learning model with the PREDICT function. Both run on the notebook's Spark runtime, which is designed for large-scale data processing and machine learning tasks within Microsoft Fabric. T-SQL and DAX do not provide PREDICT-based model scoring in a Fabric notebook in this scenario.
