Microsoft DP-500 Exam Practice Questions (P. 4)


Question #16

HOTSPOT -

This is a case study. Case studies are not timed separately. You can use as much exam time as you would like to complete each case. However, there may be additional case studies and sections on this exam. You must manage your time to ensure that you are able to complete all questions included on this exam in the time provided.

To answer the questions included in a case study, you will need to reference information that is provided in the case study. Case studies might contain exhibits and other resources that provide more information about the scenario that is described in the case study. Each question is independent of the other questions in this case study.

At the end of this case study, a review screen will appear. This screen allows you to review your answers and to make changes before you move to the next section of the exam. After you begin a new section, you cannot return to this section.

To start the case study -

To display the first question in this case study, click the Next button. Use the buttons in the left pane to explore the content of the case study before you answer the questions. Clicking these buttons displays information such as business requirements, existing environment, and problem statements. If the case study has an All Information tab, note that the information displayed is identical to the information displayed on the subsequent tabs. When you are ready to answer a question, click the Question button to return to the question.

Overview -

Existing environment -

Litware, Inc. is a retail company that sells outdoor recreational goods and accessories. The company sells goods both online and in its stores located in six countries.

Azure Resources -

Litware has the following Azure resources:

An Azure Synapse Analytics workspace named synapseworkspace1

An Azure Data Lake Storage Gen2 account named datalake1 that is associated with synapseworkspace1

A Synapse Analytics dedicated SQL pool named SQLDW

Dedicated SQL Pool -

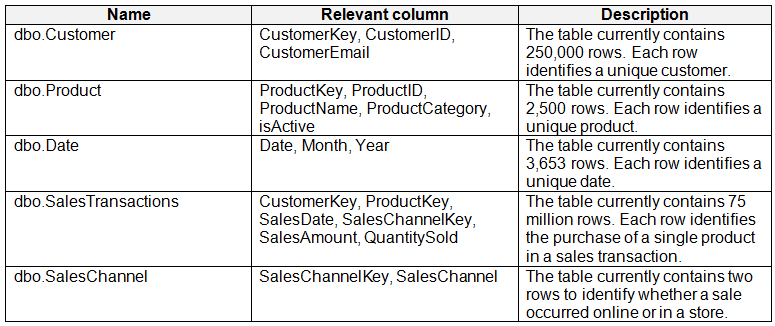

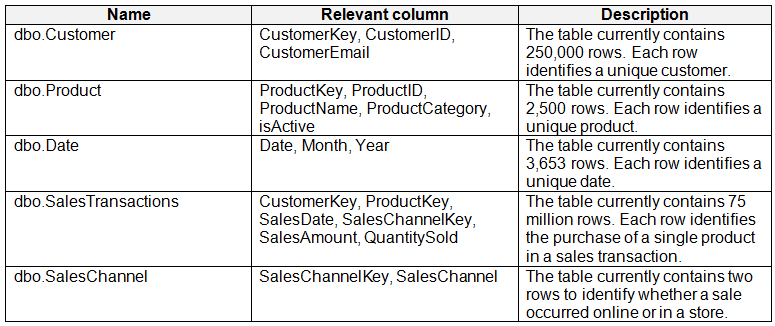

SQLDW contains a dimensional model that contains the following tables.

SQLDW contains the following additional tables.

SQLDW contains a view named dbo.CustomerPurchases that creates a distinct list of values from dbo.Customer [customerID], dbo.Customer [CustomerEmail], dbo.Product [ProductID] and dbo.Product [ProductName].

The sales data in SQLDW is updated every 30 minutes. Records in dbo.SalesTransactions are updated in SQLDW up to three days after being created. The records do NOT change after three days.

Power BI -

Litware has a new Power BI tenant that contains an empty workspace named Sales Analytics.

All users have Power BI Premium per user licenses.

IT data analysts are workspace administrators. The IT data analysts will create datasets and reports.

A single imported dataset will be created to support the company’s sales analytics goals. The dataset will be refreshed every 30 minutes.

Requirements -

Analytics Goals -

Litware identifies the following analytics goals:

Provide historical reporting of sales by product and channel over time.

Allow sales managers to perform ad hoc sales reporting with minimal effort.

Perform market basket analysis to understand which products are commonly purchased in the same transaction.

Identify which customers should receive promotional emails based on their likelihood of purchasing promoted products.

Litware plans to monitor the adoption of Power BI reports over time. The company wants custom Power BI usage reporting that includes the percent change of users that view reports in the Sales Analytics workspace each month.
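The usage metric above is a month-over-month percent change: (viewers this month - viewers last month) / viewers last month × 100. The following is a minimal Python sketch of that arithmetic. It assumes the view events have already been exported as hypothetical (user ID, month) pairs, for example from ViewReport events in the Power BI activity log, filtered to the Sales Analytics workspace; the function names and sample data are illustrative only.

```python
from collections import defaultdict


def monthly_viewers(events):
    """Return {month: number of distinct users who viewed a report that month}."""
    users_by_month = defaultdict(set)
    for user_id, month in events:
        users_by_month[month].add(user_id)  # a set counts each user once per month
    return {month: len(users) for month, users in users_by_month.items()}


def percent_change(counts):
    """Month-over-month percent change of distinct viewers.

    Months are "YYYY-MM" strings, so sorting them as text keeps them in calendar
    order. Each month is compared with the previous month present in `counts`;
    the first month (or a month after a zero count) gets None.
    """
    result = {}
    previous = None
    for month in sorted(counts):
        current = counts[month]
        if previous is None or previous == 0:
            result[month] = None
        else:
            result[month] = (current - previous) / previous * 100
        previous = current
    return result


# Hypothetical sample: u1 views twice in February but is counted once.
events = [
    ("u1", "2024-01"), ("u2", "2024-01"),
    ("u1", "2024-02"), ("u2", "2024-02"), ("u3", "2024-02"), ("u1", "2024-02"),
]
counts = monthly_viewers(events)
print(counts)
print(percent_change(counts))
```

Counting distinct users per month, rather than view events, matches the requirement's wording ("percent change of users"). A month with no activity is simply absent from the sketch's output, so the next month is compared with the last month that had data.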

Security Requirements -

Litware identifies the following security requirements for the analytics environment:

All the users in the sales department and the marketing department must be able to see Power BI reports that contain market basket analysis and data about which customers are likely to purchase a product.

Customer contact data in SQLDW and the Power BI dataset must be labeled as Sensitive. Records must be kept of any users that use the sensitive data.

Sales associates must be prevented from seeing the CustomerEmail column in Power BI reports.

Sales managers must be prevented from modifying reports created by other users.
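One way to meet the CustomerEmail requirement is object-level security (OLS), which hides a column from members of a dataset role. The following Python sketch only builds the TMSL role fragment (`tablePermissions` / `columnPermissions` with `metadataPermission` set to `"none"`). It assumes the dataset table is named Customer and uses a hypothetical role name, Sales Associates. Applying the definition, for example through the XMLA endpoint or a tool such as Tabular Editor, and assigning role members are separate steps that are not shown.

```python
import json


def build_ols_role(role_name, table_name, hidden_columns):
    """Build a TMSL role definition that hides the given columns (object-level security)."""
    return {
        "name": role_name,
        "modelPermission": "read",
        "tablePermissions": [
            {
                "name": table_name,
                "columnPermissions": [
                    # "none" removes the column's metadata for members of this role
                    {"name": column, "metadataPermission": "none"}
                    for column in hidden_columns
                ],
            }
        ],
    }


# Hypothetical role and table names; adjust to the actual dataset model.
role = build_ols_role("Sales Associates", "Customer", ["CustomerEmail"])
print(json.dumps({"roles": [role]}, indent=2))
```

As with row-level security, role-based restrictions apply to users who have Viewer permissions. Workspace Admins, Members, and Contributors are not restricted by them.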

Development Process Requirements -

Litware identifies the following development process requirements:

SQLDW and datalake1 will act as the development environment. Once feature development is complete, all entities in synapseworkspace1 will be promoted to a test workspace, and then to a production workspace.

Power BI content must be deployed to test and production by using deployment pipelines.

All SQL scripts must be stored in Azure Repos.

The IT data analysts prefer to build Power BI reports in Synapse Studio.

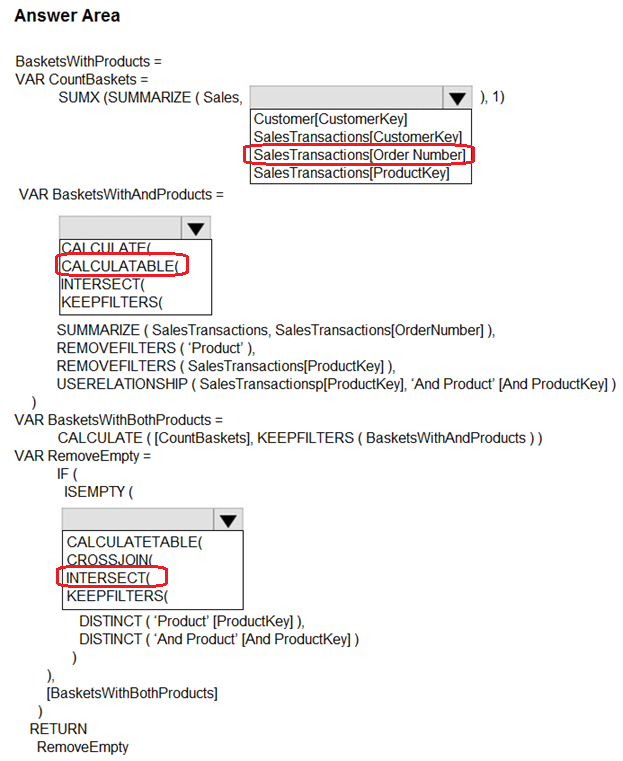

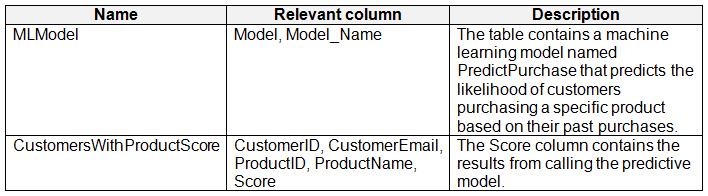

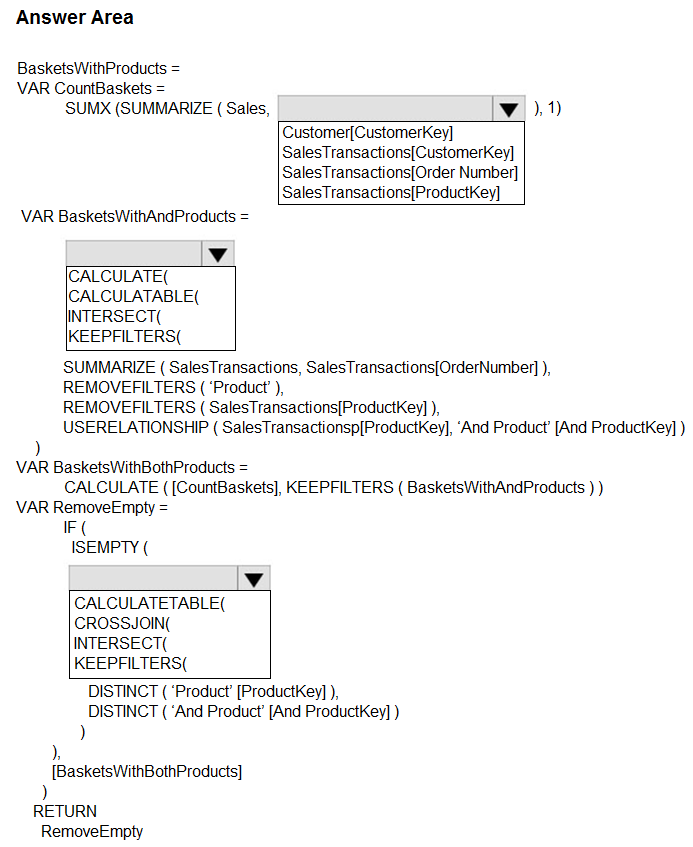

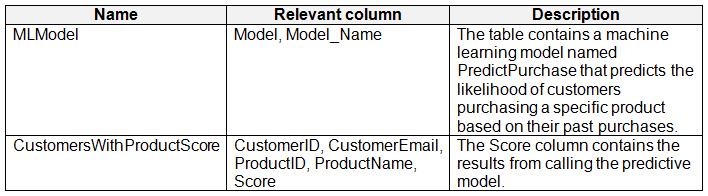

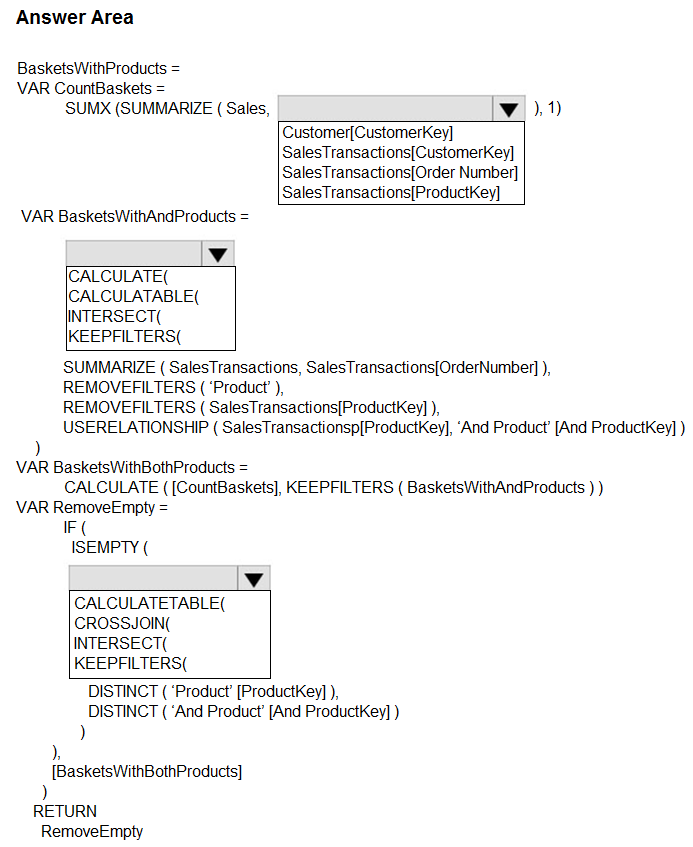

You need to create a measure to count orders for the market basket analysis.

How should you complete the DAX expression? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.
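The answer area for this question is not reproduced here. As background, an order count for market basket analysis usually counts distinct orders rather than sales lines, and product pairs are counted by how many distinct orders contain both products. The following Python sketch illustrates that logic on hypothetical (order ID, product) rows. It is not the DAX answer, and the row layout and product names are assumptions rather than the case study's schema.

```python
from collections import Counter
from itertools import combinations


def count_orders(lines):
    """Distinct order count: an order is counted once however many lines it has."""
    return len({order_id for order_id, _ in lines})


def pair_order_counts(lines):
    """For each unordered product pair, count the distinct orders that contain both."""
    products_by_order = {}
    for order_id, product in lines:
        products_by_order.setdefault(order_id, set()).add(product)
    pairs = Counter()
    for products in products_by_order.values():
        # Sorting gives each pair a single canonical key, e.g. ("Stove", "Tent").
        for pair in combinations(sorted(products), 2):
            pairs[pair] += 1
    return pairs


# Hypothetical sales lines; order 1 has two "Stove" lines but is one order.
lines = [
    (1, "Tent"), (1, "Stove"), (1, "Stove"),
    (2, "Tent"), (2, "Lantern"),
    (3, "Tent"), (3, "Stove"), (3, "Lantern"),
]
print(count_orders(lines))
print(pair_order_counts(lines))
```

Counting lines instead of distinct orders would overstate basket frequency whenever an order contains the same product more than once, as order 1 does here.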


Question #17

You have a Power BI tenant.

You plan to register the tenant in an Azure Purview account.

You need to ensure that you can scan the tenant by using Azure Purview.

Which two actions should you perform? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.


- A. From the Microsoft 365 admin center, create a Microsoft 365 group.

- B. From the Power BI Admin center, set Allow live connections to Enabled.

- C. From the Power BI Admin center, set Allow service principals to use read-only Power BI admin APIs to Enabled. (Most Voted)

- D. From the Azure Active Directory admin center, create a security group. (Most Voted)

- E. From the Power BI Admin center, set Share content with external users to Enabled.

Correct Answer:

CD

GPT-4o - Answer

To scan a Power BI tenant with Azure Purview, create a security group in the Azure Active Directory admin center and add the Azure Purview managed identity to it. Then, in the Power BI Admin center, set Allow service principals to use read-only Power BI admin APIs to Enabled and apply the setting to that security group. The Purview scanner authenticates as its managed identity, so it can call the read-only Power BI admin APIs only after both steps are in place.


Question #18

You have a Power BI workspace named Workspace1 that contains five dataflows.

You need to configure Workspace1 to store the dataflows in an Azure Data Lake Storage Gen2 account.

What should you do first?


- A. From the Power BI Admin portal, enable tenant-level storage. (Most Voted)

- B. Disable load for all dataflow queries.

- C. Delete the dataflow queries.

- D. Change the Data source settings in the dataflow queries.

Correct Answer:

A

GPT-4o - Answer

The first step is to connect an Azure Data Lake Storage Gen2 account for dataflow storage at the tenant level from the Power BI Admin portal. Workspace-level dataflow storage depends on this tenant-level configuration, so it must be in place before Workspace1 or its dataflows can be pointed at the Data Lake Storage account.


Question #19

You have an Azure Synapse Analytics dedicated SQL pool.

You need to ensure that the SQL pool is scanned by Azure Purview.

What should you do first?


- A. Create a data policy.

- B. Create a data share connection.

- C. Search the data catalog.

- D. Register a data source. (Most Voted)

Correct Answer:

D

GPT-4o - Answer

Before Azure Purview can scan the dedicated SQL pool, the pool must be registered as a data source in Azure Purview. Registration makes the source available for scan configuration. After that, you create and run a scan with credentials that give Purview read access to the pool. Data policies, data share connections, and catalog searches are not prerequisites for scanning.


Question #20

You have a deployment pipeline for a Power BI workspace. The workspace contains two datasets that use import storage mode.

A database administrator reports a drastic increase in the number of queries sent from the Power BI service to an Azure SQL database since the creation of the deployment pipeline.

An investigation into the issue identifies the following:

One of the datasets is larger than 1 GB and has a fact table that contains more than 500 million rows.

When publishing dataset changes to development, test, or production pipelines, a refresh is triggered against the entire dataset.

You need to recommend a solution to reduce the size of the queries sent to the database when the dataset changes are published to development, test, or production.

What should you recommend?


- A. Turn off auto refresh when publishing the dataset changes to the Power BI service.

- B. In the dataset, change the fact table from an import table to a hybrid table. (Most Voted)

- C. Enable the large dataset storage format for workspace.

- D. Create a dataset parameter to reduce the fact table row count in the development and test pipelines.

Correct Answer:

B

GPT-4o - Answer

Option B is the recommended solution. A hybrid table is built on an incremental refresh policy. Historical data is stored in import partitions that are not reloaded on every refresh, only the partitions inside the refresh window are re-queried, and a DirectQuery partition serves data newer than the last refresh. Once the initial refresh has created the partitions, later refreshes query only the recent slice of the 500-million-row fact table instead of the whole table. This reduces the load on the Azure SQL database.
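The effect of the refresh window can be sketched in a few lines of Python. This is a simplified model under stated assumptions: it treats every partition as one day, whereas Power BI merges older data into month, quarter, and year partitions, and it does not model the DirectQuery partition. The function name `plan_refresh` and the three-day window are hypothetical.

```python
from datetime import date, timedelta


def plan_refresh(partition_days, today, refresh_days):
    """Split daily partitions into those re-queried on refresh and those kept as-is.

    A partition is reloaded only if its date falls within the last
    `refresh_days` days, counting today; older partitions are not queried.
    """
    window_start = today - timedelta(days=refresh_days - 1)
    refreshed = [d for d in partition_days if d >= window_start]
    kept = [d for d in partition_days if d < window_start]
    return refreshed, kept


today = date(2024, 3, 31)
days = [today - timedelta(days=i) for i in range(365)]  # one year of daily partitions
refreshed, kept = plan_refresh(days, today, refresh_days=3)
print(f"re-queried: {len(refreshed)} partitions, untouched: {len(kept)} partitions")
```

With a full import refresh, all 365 days would be re-queried on every refresh. With the incremental policy, only the three days in the window are reloaded.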
