Microsoft DP-201 Exam Practice Questions (P. 5)

Question #21

You need to recommend a storage solution to store flat files and columnar optimized files. The solution must meet the following requirements:

✑ Store standardized data that data scientists will explore in a curated folder.

✑ Ensure that applications cannot access the curated folder.

✑ Store staged data for import to applications in a raw folder.

✑ Provide data scientists with access to specific folders in the raw folder and to all the content in the curated folder.

Which storage solution should you recommend?

- A. Azure Synapse Analytics

- B. Azure Blob storage

- C. Azure Data Lake Storage Gen2

- D. Azure SQL Database

Correct Answer:

B

Azure Blob Storage is a general-purpose object store for a wide variety of storage scenarios. Blobs are stored in containers, which are similar to folders.
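The access requirements in this question amount to folder-scoped read rules. The sketch below writes them down as data and checks paths against them. The principal names and the raw subfolders (`raw/sales`, `raw/marketing`) are hypothetical, and in a real deployment the storage service's own access controls, not application code, would enforce these rules.

```python
from pathlib import PurePosixPath

# Hypothetical grants modeling the question's requirements:
# data scientists read all of "curated" plus specific raw subfolders;
# applications read the staged data in "raw" but never "curated".
GRANTS = {
    "data-scientists": ["curated", "raw/sales", "raw/marketing"],
    "applications": ["raw"],
}


def can_read(principal: str, path: str) -> bool:
    """Return True if `path` is a granted folder or sits inside one."""
    target = PurePosixPath(path)
    for folder in GRANTS.get(principal, []):
        granted = PurePosixPath(folder)
        # Compare whole path components, so "raw/sales-archive" is not
        # mistaken for a child of "raw/sales".
        if target == granted or granted in target.parents:
            return True
    return False


print(can_read("data-scientists", "curated/customers/part-0001.parquet"))  # True
print(can_read("data-scientists", "raw/finance/ledger.csv"))               # False
print(can_read("applications", "curated/customers/part-0001.parquet"))     # False
```

Writing the rules this way makes it easy to test any candidate storage design against each requirement before choosing a service.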

Incorrect Answers:

C: Azure Data Lake Storage is storage optimized for big data analytics workloads.

Reference:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/technology-choices/data-storage

Question #22

Your company is an online retailer that can have more than 100 million orders during a 24-hour period, 95 percent of which are placed between 16:30 and 17:00.

All the orders are in US dollars. The current product line contains the following three item categories:

✑ Games with 15,123 items

✑ Books with 35,312 items

✑ Pens with 6,234 items

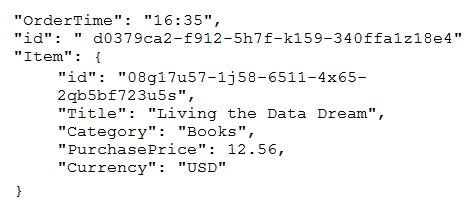

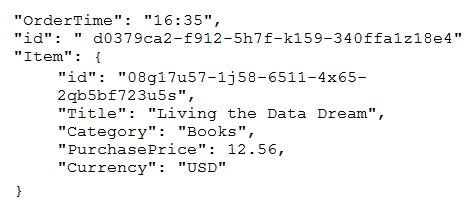

You are designing an Azure Cosmos DB data solution for a collection named Orders Collection. The following document is a typical order in Orders Collection.

Orders Collection is expected to have a balanced read/write-intensive workload.

Which partition key provides the most efficient throughput?

- A. Item/Category

- B. OrderTime

- C. Item/Currency

- D. Item/id

Correct Answer:

A

Choose a partition key that has a wide range of values and access patterns that are evenly spread across logical partitions. This helps spread the data and the activity in your container across the set of logical partitions, so that resources for data storage and throughput can be distributed across the logical partitions.
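One way to check "a wide range of values" against sample data is to count how many logical partitions a candidate key would create and how large the biggest one is. The document shape below (a nested `Item` object with `Category` and `Currency` properties) is a hypothetical reading of the option paths, and the four orders are made-up sample data.

```python
from collections import Counter


def key_spread(docs, key_path):
    """Return (logical partition count, share of docs in the largest one)
    for a '/'-separated key path such as 'Item/Category' or '/Item/Category'."""
    counts = Counter()
    for doc in docs:
        value = doc
        for part in key_path.strip("/").split("/"):
            value = value[part]  # walk into nested properties
        counts[value] += 1
    return len(counts), max(counts.values()) / len(docs)


# Hypothetical sample orders; all are priced in USD, as the question states.
orders = [
    {"id": "1", "Item": {"id": "g-101", "Category": "Games", "Currency": "USD"}},
    {"id": "2", "Item": {"id": "b-202", "Category": "Books", "Currency": "USD"}},
    {"id": "3", "Item": {"id": "p-303", "Category": "Pens", "Currency": "USD"}},
    {"id": "4", "Item": {"id": "b-204", "Category": "Books", "Currency": "USD"}},
]

print(key_spread(orders, "Item/Currency"))  # (1, 1.0)
print(key_spread(orders, "Item/Category"))  # (3, 0.5)
```

Because every order is in US dollars, `Item/Currency` would put every item into a single logical partition, the opposite of the spread described above.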

Choose a partition key that spreads the workload evenly across all partitions and evenly over time. Your choice of partition key should balance the need for efficient partition queries and transactions against the goal of distributing items across multiple partitions to achieve scalability.
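The question's own figures show how uneven this workload is over time. A quick calculation using only the numbers stated in the question (up to 100 million orders per 24 hours, 95 percent of them between 16:30 and 17:00):

```python
# Figures from the question.
ORDERS_PER_DAY = 100_000_000
PEAK_SHARE_PERCENT = 95
PEAK_WINDOW_SECONDS = 30 * 60      # 16:30 to 17:00
DAY_SECONDS = 24 * 60 * 60

peak_orders = ORDERS_PER_DAY * PEAK_SHARE_PERCENT // 100  # 95,000,000 orders
peak_rate = peak_orders / PEAK_WINDOW_SECONDS             # orders/s inside the window
average_rate = ORDERS_PER_DAY / DAY_SECONDS               # orders/s if spread evenly
burst_factor = peak_rate / average_rate

print(f"peak: {peak_rate:,.0f} orders/s, average: {average_rate:,.0f} orders/s, "
      f"burst factor: {burst_factor:.1f}x")
# peak: 52,778 orders/s, average: 1,157 orders/s, burst factor: 45.6x
```

Whatever key is chosen has to spread that half-hour burst, roughly 46 times the daily average rate, across many partitions.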

Candidates for partition keys might include properties that appear frequently as a filter in your queries. Queries can be efficiently routed by including the partition key in the filter predicate.
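The routing benefit can be illustrated with a deliberately simplified model: hash the partition key value to pick a physical partition, so a query that filters on the key touches one partition while any other query fans out to all of them. The fixed partition count and the SHA-256-modulo scheme are assumptions for illustration only; the real service manages its physical partitions itself.

```python
import hashlib
from typing import List, Optional

PHYSICAL_PARTITIONS = 4  # hypothetical fixed count for the illustration


def physical_partition(key_value: str) -> int:
    """Map a partition key value to a partition index via SHA-256 modulo N."""
    digest = hashlib.sha256(key_value.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % PHYSICAL_PARTITIONS


def partitions_to_query(key_filter: Optional[str] = None) -> List[int]:
    """Partitions a query must visit: one if the filter pins the key, else all."""
    if key_filter is not None:
        return [physical_partition(key_filter)]
    return list(range(PHYSICAL_PARTITIONS))  # cross-partition fan-out


print(len(partitions_to_query("Books")))  # 1 partition
print(len(partitions_to_query()))         # 4 partitions
```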

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/partitioning-overview#choose-partitionkey

Question #23

You have a MongoDB database that you plan to migrate to an Azure Cosmos DB account that uses the MongoDB API.

During testing, you discover that the migration takes longer than expected.

You need to recommend a solution that will reduce the amount of time it takes to migrate the data.

What are two possible recommendations to achieve this goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- A. Increase the Request Units (RUs).

- B. Turn off indexing.

- C. Add a write region.

- D. Create unique indexes.

- E. Create compound indexes.

Correct Answer:

AB

A: Increase the throughput during the migration by increasing the Request Units (RUs).

For customers that are migrating many collections within a database, it is strongly recommended to configure database-level throughput. You must make this choice when you create the database. The minimum database-level throughput capacity is 400 RU/sec. Each collection sharing database-level throughput requires at least 100 RU/sec.
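Read together, the two minimums quoted above imply that a database sharing throughput across N collections needs at least max(400, 100 × N) RU/sec. A small sketch of that arithmetic, using only the figures quoted above (current service limits may differ):

```python
# Minimums as quoted in the pre-migration guidance above; they may have changed.
MIN_DATABASE_RU_PER_SEC = 400
MIN_RU_PER_SEC_PER_COLLECTION = 100


def min_shared_throughput(collections: int) -> int:
    """Smallest database-level RU/sec meeting both quoted minimums."""
    if collections < 1:
        raise ValueError("a database needs at least one collection")
    return max(MIN_DATABASE_RU_PER_SEC, MIN_RU_PER_SEC_PER_COLLECTION * collections)


print(min_shared_throughput(3))   # 400: the database minimum dominates
print(min_shared_throughput(10))  # 1000: the per-collection minimum dominates
```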

B: By default, Azure Cosmos DB indexes all your data fields upon ingestion. You can modify the indexing policy in Azure Cosmos DB at any time. In fact, it is often recommended to turn off indexing while migrating data and to turn it back on once the data is in Cosmos DB.
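Both recommendations shorten a throughput-bound migration for the same reason: the time is roughly the total RU cost of the writes divided by the provisioned RU/sec. Increasing RUs raises the divisor, and turning off indexing lowers the RU charge per write. The sketch below assumes the migration tool keeps the provisioned throughput saturated; the document count and per-write RU charges are hypothetical, since real charges depend on document size and indexing policy.

```python
def migration_hours(documents: int, ru_per_write: float, provisioned_ru_per_sec: int) -> float:
    """Estimated hours if writes are throughput-bound: total RU cost / RU per second."""
    total_ru = documents * ru_per_write
    return total_ru / provisioned_ru_per_sec / 3600


# Hypothetical inputs: 10 million documents; 10 RU per write with indexing, 5 RU without.
baseline = migration_hours(10_000_000, ru_per_write=10, provisioned_ru_per_sec=10_000)
more_rus = migration_hours(10_000_000, ru_per_write=10, provisioned_ru_per_sec=40_000)  # option A
no_index = migration_hours(10_000_000, ru_per_write=5, provisioned_ru_per_sec=10_000)   # option B

print(f"{baseline:.2f} h, {more_rus:.2f} h, {no_index:.2f} h")  # 2.78 h, 0.69 h, 1.39 h
```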

Reference:

https://docs.microsoft.com/bs-latn-ba/Azure/cosmos-db/mongodb-pre-migration

Question #24

You need to recommend a storage solution for a sales system that will receive thousands of small files per minute. The files will be in JSON, text, and CSV formats. The files will be processed and transformed before they are loaded into a data warehouse in Azure Synapse Analytics. The files must be stored and secured in folders.

Which storage solution should you recommend?

- A. Azure Data Lake Storage Gen2

- B. Azure Cosmos DB

- C. Azure SQL Database

- D. Azure Blob storage

Correct Answer:

A

Azure provides several solutions for working with CSV and JSON files, depending on your needs. The primary landing place for these files is either Azure Storage or Azure Data Lake Store.

Azure Data Lake Storage is storage optimized for big data analytics workloads.
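Since the files must be stored and secured in folders, a predictable folder layout gives both permissions and the downstream processing into Azure Synapse Analytics a clear target. The layout below (`raw/sales/<format>/<yyyy>/<mm>/<dd>/<hh>/`) and the function name are hypothetical; the sketch only shows one way to route incoming JSON, text, and CSV files into folders by format and arrival hour.

```python
from datetime import datetime, timezone
from pathlib import PurePosixPath

ALLOWED_FORMATS = {"json", "txt", "csv"}  # the question's JSON, text, and CSV files


def landing_path(file_name: str, received_at: datetime, root: str = "raw/sales") -> str:
    """Return a hypothetical folder path for an incoming file, by format and arrival hour."""
    file_format = PurePosixPath(file_name).suffix.lstrip(".").lower()
    if file_format not in ALLOWED_FORMATS:
        raise ValueError(f"unexpected file type: {file_name}")
    hour_folder = received_at.strftime("%Y/%m/%d/%H")
    return str(PurePosixPath(root, file_format, hour_folder, file_name))


arrived = datetime(2024, 5, 1, 16, 45, tzinfo=timezone.utc)
print(landing_path("order-0001.csv", arrived))
# raw/sales/csv/2024/05/01/16/order-0001.csv
```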

Incorrect Answers:

D: Azure Blob Storage is a general-purpose object store for a wide variety of storage scenarios. Blobs are stored in containers, which are similar to folders.

Reference:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/scenarios/csv-and-json

Question #25

You are designing an Azure Cosmos DB database that will support vertices and edges.

Which Cosmos DB API should you include in the design?

- A. SQL

- B. Cassandra

- C. Gremlin

- D. Table

Correct Answer:

C

The Azure Cosmos DB Gremlin API can be used to store massive graphs with billions of vertices and edges.
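To make "vertices and edges" concrete, here is a tiny in-memory property graph with labeled vertices and directed, labeled edges, plus a traversal that mirrors the Gremlin step `g.V('alice').out('knows')`. It is a plain Python illustration of the data model, not the Gremlin API or a Cosmos DB client; the class name and sample data are hypothetical.

```python
from collections import defaultdict


class PropertyGraph:
    """Tiny in-memory property graph: labeled vertices joined by labeled, directed edges."""

    def __init__(self):
        self.vertices = {}                   # vertex id -> {"label": ..., **properties}
        self.out_edges = defaultdict(list)   # vertex id -> [(edge label, target id)]

    def add_vertex(self, vid, label, **props):
        self.vertices[vid] = {"label": label, **props}

    def add_edge(self, src, label, dst):
        if src not in self.vertices or dst not in self.vertices:
            raise KeyError("both endpoints must exist before adding an edge")
        self.out_edges[src].append((label, dst))

    def out(self, vid, label):
        """Rough analogue of the Gremlin traversal g.V(vid).out(label)."""
        return [dst for edge_label, dst in self.out_edges.get(vid, []) if edge_label == label]


g = PropertyGraph()
g.add_vertex("alice", "person")
g.add_vertex("bob", "person")
g.add_vertex("dp201", "exam", name="DP-201")
g.add_edge("alice", "knows", "bob")
g.add_edge("alice", "took", "dp201")

print(g.out("alice", "knows"))  # ['bob']
```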

Reference:

https://docs.microsoft.com/en-us/azure/cosmos-db/graph-introduction
