Microsoft DP-100 Exam Practice Questions (P. 5)

Question #41

This question is part of a series of questions that present the same scenario. Each question in the series has a distinct outcome. Determine whether the recommendation meets the requirements.

You plan to use Azure Machine Learning designer to train models.

You need to choose a suitable compute type.

Recommendation: You choose Compute cluster.

Will the requirements be satisfied?

- A. Yes (Most Voted)

- B. No

Correct Answer:

A

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/how-to-create-attach-compute-studio

Question #42

You are using the Azure Machine Learning designer to construct an experiment.

After dividing a dataset into training and testing sets, you configure the algorithm to be Two-Class Boosted Decision Tree.

You need to calculate the Area Under the Curve (AUC).

Which of the following is the sequence of modules required to achieve your goal?

- A. Train, Score, Evaluate. (Most Voted)

- B. Score, Evaluate, Train.

- C. Evaluate, Export Data, Train.

- D. Train, Score, Export Data.

Correct Answer:

A

GPT-4o - Answer

To obtain the Area Under the Curve (AUC) in Azure Machine Learning designer, first train the model on the training set ('Train'), then generate predictions for the test set ('Score'), and finally compute performance metrics from those predictions ('Evaluate'). This order ensures that the model is trained before it is assessed and that the evaluation reflects how well the Two-Class Boosted Decision Tree generalizes to new data.
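The Train, Score, Evaluate order can be sketched in plain Python. This is only an illustration under stated assumptions: the class-mean scorer below is a hypothetical stand-in for the Two-Class Boosted Decision Tree, the data values are made up, and the function names `train`, `score`, and `evaluate_auc` are chosen here to mirror the designer modules rather than taken from any Azure API.

```python
from typing import Callable, List, Sequence, Tuple

Model = Callable[[float], float]


def train(rows: Sequence[Tuple[float, int]]) -> Model:
    """Train: learn the mean feature value of each class (stand-in model)."""
    pos = [x for x, y in rows if y == 1]
    neg = [x for x, y in rows if y == 0]
    mu_pos = sum(pos) / len(pos)
    mu_neg = sum(neg) / len(neg)
    # Higher score means the sample sits closer to the positive-class mean.
    return lambda x: abs(x - mu_neg) - abs(x - mu_pos)


def score(model: Model, features: Sequence[float]) -> List[float]:
    """Score: apply the trained model to held-out features."""
    return [model(x) for x in features]


def evaluate_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Evaluate: AUC as the fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Made-up training and testing sets: (feature, label) pairs.
train_rows = [(0.1, 0), (0.4, 0), (0.2, 0), (0.35, 1), (0.8, 1), (0.9, 1)]
test_rows = [(0.3, 0), (0.5, 1), (0.55, 0), (0.6, 1)]

model = train(train_rows)                            # 1. Train
scores = score(model, [x for x, _ in test_rows])     # 2. Score
auc = evaluate_auc([y for _, y in test_rows], scores)  # 3. Evaluate
print(auc)
```

Evaluating before scoring is not possible here, because `evaluate_auc` needs the scores as input; that dependency is why options that place Evaluate or Score ahead of Train cannot produce an AUC.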

Question #43

You are developing a hands-on workshop to introduce Docker for Windows to attendees.

You need to ensure that workshop attendees can install Docker on their devices.

Which two prerequisite components should attendees install on the devices? Each correct answer presents part of the solution.

NOTE: Each correct selection is worth one point.

- A. Microsoft Hardware-Assisted Virtualization Detection Tool

- B. Kitematic

- C. BIOS-enabled virtualization (Most Voted)

- D. VirtualBox

- E. Windows 10 64-bit Professional (Most Voted)

Correct Answer:

CE

C: Make sure your Windows system supports hardware virtualization technology and that virtualization is enabled in the BIOS settings.

E: To run Docker, your machine must have a 64-bit operating system running Windows 7 or higher.

Reference:

https://docs.docker.com/toolbox/toolbox_install_windows/

https://blogs.technet.microsoft.com/canitpro/2015/09/08/step-by-step-enabling-hyper-v-for-use-on-windows-10/

Question #44

Your team is building a data engineering and data science development environment.

The environment must support the following requirements:

✑ support Python and Scala

✑ compose data storage, movement, and processing services into automated data pipelines

✑ the same tool should be used for the orchestration of both data engineering and data science

✑ support workload isolation and interactive workloads

✑ enable scaling across a cluster of machines

You need to create the environment.

What should you do?

- A. Build the environment in Apache Hive for HDInsight and use Azure Data Factory for orchestration.

- B. Build the environment in Azure Databricks and use Azure Data Factory for orchestration. (Most Voted)

- C. Build the environment in Apache Spark for HDInsight and use Azure Container Instances for orchestration.

- D. Build the environment in Azure Databricks and use Azure Container Instances for orchestration.

Correct Answer:

B

Azure Databricks offers two types of clusters:

✑ Standard: the default cluster type, which can be used with Python, R, Scala, and SQL

✑ High-concurrency

Azure Databricks is fully integrated with Azure Data Factory.

Incorrect Answers:

D: Azure Container Instances is suited to development and testing, not to production workloads.

Reference:

https://docs.microsoft.com/en-us/azure/architecture/data-guide/technology-choices/data-science-and-machine-learning

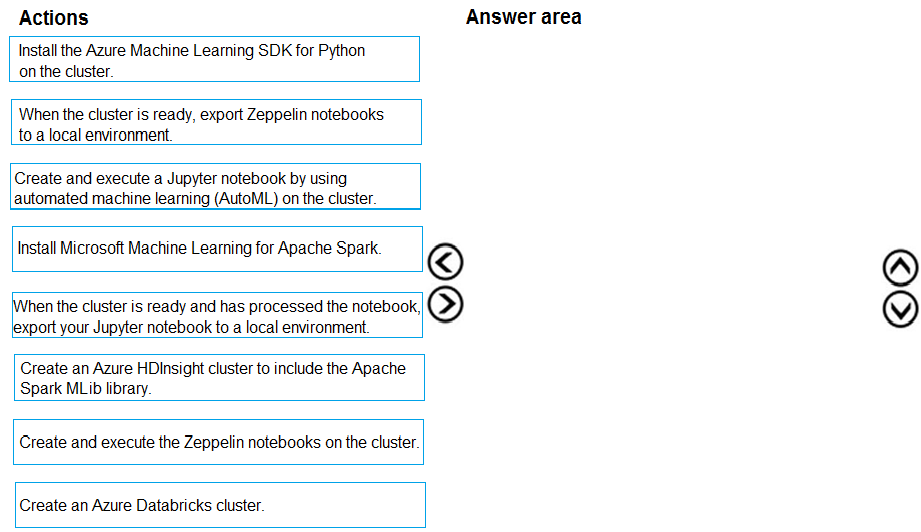

Question #45

DRAG DROP -

You are building an intelligent solution using machine learning models.

The environment must support the following requirements:

✑ Data scientists must build notebooks in a cloud environment

✑ Data scientists must use automatic feature engineering and model building in machine learning pipelines.

✑ Notebooks must be deployed to retrain using Spark instances with dynamic worker allocation.

✑ Notebooks must be exportable to be version controlled locally.

You need to create the environment.

Which four actions should you perform in sequence? To answer, move the appropriate actions from the list of actions to the answer area and arrange them in the correct order.

Select and Place:

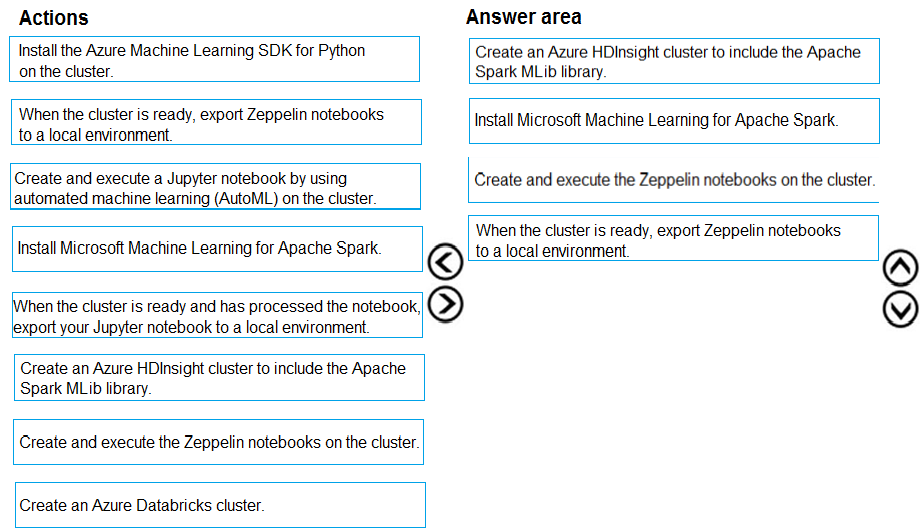

Correct Answer:

Step 1: Create an Azure HDInsight cluster to include the Apache Spark MLlib library

Step 2: Install Microsoft Machine Learning for Apache Spark

You install AzureML on your Azure HDInsight cluster.

Microsoft Machine Learning for Apache Spark (MMLSpark) provides a number of deep learning and data science tools for Apache Spark, including seamless integration of Spark Machine Learning pipelines with Microsoft Cognitive Toolkit (CNTK) and OpenCV, enabling you to quickly create powerful, highly-scalable predictive and analytical models for large image and text datasets.

Step 3: Create and execute the Zeppelin notebooks on the cluster

Step 4: When the cluster is ready, export Zeppelin notebooks to a local environment.

Notebooks must be exportable to be version controlled locally.

Reference:

https://docs.microsoft.com/en-us/azure/hdinsight/spark/apache-spark-zeppelin-notebook

https://azuremlbuild.blob.core.windows.net/pysparkapi/intro.html

Question #46

You plan to build a team data science environment. Data for training models in machine learning pipelines will be over 20 GB in size.

You have the following requirements:

✑ Models must be built using Caffe2 or Chainer frameworks.

✑ Data scientists must be able to use a data science environment to build the machine learning pipelines and train models on their personal devices in both connected and disconnected network environments.

✑ Personal devices must support updating machine learning pipelines when connected to a network.

You need to select a data science environment.

Which environment should you use?

- A. Azure Machine Learning Service (Most Voted)

- B. Azure Machine Learning Studio

- C. Azure Databricks

- D. Azure Kubernetes Service (AKS)

Correct Answer:

A

The Data Science Virtual Machine (DSVM) is a customized VM image on Microsoft's Azure cloud built specifically for doing data science. Caffe2 and Chainer are supported by DSVM.

DSVM integrates with Azure Machine Learning.

Incorrect Answers:

B: Use Machine Learning Studio when you want to experiment with machine learning models quickly and easily, and the built-in machine learning algorithms are sufficient for your solutions.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/data-science-virtual-machine/overview

Question #47

You are implementing a machine learning model to predict stock prices.

The model uses a PostgreSQL database and requires GPU processing.

You need to create a virtual machine that is pre-configured with the required tools.

What should you do?

- A. Create a Data Science Virtual Machine (DSVM) Windows edition.

- B. Create a Geo AI Data Science Virtual Machine (Geo-DSVM) Windows edition.

- C. Create a Deep Learning Virtual Machine (DLVM) Linux edition. (Most Voted)

- D. Create a Deep Learning Virtual Machine (DLVM) Windows edition.

Correct Answer:

A

In the DSVM, your training models can use deep learning algorithms on hardware that's based on graphics processing units (GPUs).

PostgreSQL is available for the following operating systems: Linux (all recent distributions), macOS (64-bit installers available for OS X version 10.6 and newer), and Windows (installers available for the 64-bit version; tested on the latest versions and back to Windows 2012 R2).

Incorrect Answers:

B: The Azure Geo AI Data Science VM (Geo-DSVM) delivers geospatial analytics capabilities from Microsoft's Data Science VM. Specifically, this VM extends the AI and data science toolkits in the Data Science VM by adding ESRI's market-leading ArcGIS Pro Geographic Information System.

C, D: DLVM is a template built on top of the DSVM image. The packages, GPU drivers, and so on are all already present in the DSVM image. The DLVM exists mostly for convenience during creation, because it can only be created on GPU VM instances on Azure.

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/data-science-virtual-machine/overview

Question #48

You are developing deep learning models to analyze semi-structured, unstructured, and structured data types.

You have the following data available for model building:

✑ Video recordings of sporting events

✑ Transcripts of radio commentary about events

✑ Logs from related social media feeds captured during sporting events

You need to select an environment for creating the model.

Which environment should you use?

- A. Azure Cognitive Services (Most Voted)

- B. Azure Data Lake Analytics

- C. Azure HDInsight with Spark MLlib

- D. Azure Machine Learning Studio

Correct Answer:

A

Azure Cognitive Services expand on Microsoft's evolving portfolio of machine learning APIs and enable developers to easily add cognitive features (such as emotion and video detection; facial, speech, and vision recognition; and speech and language understanding) into their applications. The goal of Azure Cognitive Services is to help developers create applications that can see, hear, speak, understand, and even begin to reason. The catalog of services within Azure Cognitive Services can be categorized into five main pillars: Vision, Speech, Language, Search, and Knowledge.

Reference:

https://docs.microsoft.com/en-us/azure/cognitive-services/welcome

Question #49

You must store data in Azure Blob Storage to support Azure Machine Learning.

You need to transfer the data into Azure Blob Storage.

What are three possible ways to achieve the goal? Each correct answer presents a complete solution.

NOTE: Each correct selection is worth one point.

- A. Bulk Insert SQL Query

- B. AzCopy (Most Voted)

- C. Python script (Most Voted)

- D. Azure Storage Explorer (Most Voted)

- E. Bulk Copy Program (BCP)

Correct Answer:

BCD

You can move data to and from Azure Blob storage using different technologies:

✑ Azure Storage Explorer

✑ AzCopy

✑ Python

✑ SSIS
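As a rough illustration of the Python option, the sketch below uploads a byte stream through any object that exposes an `upload_blob(name, data, overwrite)` method, which is the shape of `ContainerClient.upload_blob` in the `azure-storage-blob` package. The `InMemoryContainer` class, the helper name `upload_stream`, the blob name, and the container name in the comments are all hypothetical; the stand-in is there only so that the example runs without an Azure account.

```python
import io
from typing import BinaryIO, Dict, Protocol


class BlobContainer(Protocol):
    """The single method this sketch needs from a container client."""

    def upload_blob(self, name: str, data: BinaryIO, overwrite: bool = False): ...


def upload_stream(container: BlobContainer, blob_name: str, data: BinaryIO) -> None:
    """Upload a binary stream as a blob, replacing any blob with the same name."""
    container.upload_blob(name=blob_name, data=data, overwrite=True)


class InMemoryContainer:
    """Hypothetical stand-in for a real container client (local runs only)."""

    def __init__(self) -> None:
        self.blobs: Dict[str, bytes] = {}

    def upload_blob(self, name: str, data: BinaryIO, overwrite: bool = False) -> None:
        if name in self.blobs and not overwrite:
            raise ValueError(f"blob {name!r} already exists")
        self.blobs[name] = data.read()


container = InMemoryContainer()
upload_stream(container, "training/data.csv", io.BytesIO(b"x,y\n1,2\n"))
print(sorted(container.blobs))

# With the real SDK (assumes `pip install azure-storage-blob` and a valid
# connection string in `conn_str`), the same helper would receive:
#   from azure.storage.blob import ContainerClient
#   container = ContainerClient.from_connection_string(conn_str, "my-container")
```

Passing the client in as a parameter keeps the upload logic testable offline; only the two commented lines change when pointing it at a real storage account.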

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/team-data-science-process/move-azure-blob

Question #50

You are moving a large dataset from Azure Machine Learning Studio to a Weka environment.

You need to format the data for the Weka environment.

Which module should you use?

- A. Convert to CSV

- B. Convert to Dataset

- C. Convert to ARFF (Most Voted)

- D. Convert to SVMLight

Correct Answer:

C

Use the Convert to ARFF module in Azure Machine Learning Studio to convert datasets and results in Azure Machine Learning to the attribute-relation file format used by the Weka toolset. This format is known as ARFF.

The ARFF data specification for Weka supports multiple machine learning tasks, including data preprocessing, classification, and feature selection. In this format, data is organized by entities and their attributes, and is contained in a single text file.
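To make the format concrete, here is a minimal sketch of what an ARFF file looks like, written as a small Python helper. It is not the Studio module's implementation; the function name `to_arff`, the relation name, and the sample data are hypothetical, and the sketch handles only numeric and nominal attributes with no quoting or missing values.

```python
from typing import List, Sequence, Tuple, Union

# An attribute is (name, "NUMERIC") or (name, [nominal values]).
Attribute = Tuple[str, Union[str, List[str]]]


def to_arff(relation: str, attributes: Sequence[Attribute], rows: Sequence[Sequence]) -> str:
    """Render a header (relation and attributes) followed by comma-separated data rows."""
    lines = [f"@RELATION {relation}", ""]
    for name, kind in attributes:
        if isinstance(kind, str):
            # Simple type keyword such as NUMERIC.
            lines.append(f"@ATTRIBUTE {name} {kind}")
        else:
            # Nominal attribute: list the allowed values in braces.
            lines.append("@ATTRIBUTE " + name + " {" + ",".join(kind) + "}")
    lines += ["", "@DATA"]
    for row in rows:
        lines.append(",".join(str(value) for value in row))
    return "\n".join(lines) + "\n"


arff = to_arff(
    "weather",
    [("temperature", "NUMERIC"), ("play", ["yes", "no"])],
    [(21.5, "yes"), (30, "no")],
)
print(arff)
```

The header declares each attribute once, and every line after `@DATA` is one entity with its attribute values in the declared order, which is the single-text-file layout described above.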

Reference:

https://docs.microsoft.com/en-us/azure/machine-learning/studio-module-reference/convert-to-arff
