Google Associate Cloud Engineer Exam Practice Questions (P. 3)

Question #21

You have one GCP account running in your default region and zone and another account running in a non-default region and zone. You want to start a new Compute Engine instance in these two Google Cloud Platform accounts using the command line interface. What should you do?

- A. Create two configurations using gcloud config configurations create [NAME]. Run gcloud config configurations activate [NAME] to switch between accounts when running the commands to start the Compute Engine instances. (Most Voted)

- B. Create two configurations using gcloud config configurations create [NAME]. Run gcloud configurations list to start the Compute Engine instances.

- C. Activate two configurations using gcloud configurations activate [NAME]. Run gcloud config list to start the Compute Engine instances.

- D. Activate two configurations using gcloud configurations activate [NAME]. Run gcloud configurations list to start the Compute Engine instances.

Correct Answer:

A

GPT-4o - Answer

Option A is indeed correct for running Compute Engine instances across different GCP accounts. By creating distinct configurations for each account with `gcloud config configurations create [NAME]`, you can neatly separate settings per account. Activating these configurations using `gcloud config configurations activate [NAME]` allows you to switch context effortlessly between these accounts, making it practical to manage resources in them accordingly. Remember, the vital step is activating the right configuration before launching an instance to ensure you are operating within the intended account's context.
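
The workflow in option A can be sketched as a small Python helper that only builds the `gcloud` command lines and does not run them. The configuration names, account addresses, project IDs, zones, and instance names below are hypothetical placeholders. The sketch relies on `gcloud config configurations create` activating the new configuration by default, so the `gcloud config set` calls that follow apply to it.

```python
import shlex


def configuration_commands(name, account, project, region, zone):
    """Commands that create one named configuration and fill in its properties."""
    return [
        ["gcloud", "config", "configurations", "create", name],
        ["gcloud", "config", "set", "account", account],
        ["gcloud", "config", "set", "project", project],
        ["gcloud", "config", "set", "compute/region", region],
        ["gcloud", "config", "set", "compute/zone", zone],
    ]


def launch_commands(name, instance):
    """Commands that switch to a configuration and start an instance with it."""
    return [
        ["gcloud", "config", "configurations", "activate", name],
        ["gcloud", "compute", "instances", "create", instance],
    ]


# Hypothetical values for the two accounts described in the question.
setup = (
    configuration_commands("default-acct", "dev@example.com", "dev-project",
                           "us-central1", "us-central1-a")
    + configuration_commands("other-acct", "ops@example.com", "ops-project",
                             "europe-west1", "europe-west1-b")
)
launch = launch_commands("default-acct", "vm-1") + launch_commands("other-acct", "vm-2")

for command in setup + launch:
    print(shlex.join(command))
```

Printing the commands instead of executing them keeps the sketch runnable without the Cloud SDK installed; each printed line can be pasted into a shell.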

Question #22

You significantly changed a complex Deployment Manager template and want to confirm that the dependencies of all defined resources are properly met before committing it to the project. You want the most rapid feedback on your changes. What should you do?

- A. Use granular logging statements within a Deployment Manager template authored in Python.

- B. Monitor activity of the Deployment Manager execution on the Stackdriver Logging page of the GCP Console.

- C. Execute the Deployment Manager template against a separate project with the same configuration, and monitor for failures.

- D. Execute the Deployment Manager template using the --preview option in the same project, and observe the state of interdependent resources. (Most Voted)

Correct Answer:

D

Reference:

https://cloud.google.com/deployment-manager/docs/deployments/updating-deployments
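
As a rough illustration of option D, the following Python sketch assembles the preview command without executing it. The deployment name and configuration file are hypothetical; `--config` and `--preview` are flags of `gcloud deployment-manager deployments update`.

```python
import shlex


def preview_update_command(deployment, config_file):
    """Build a Deployment Manager update that is only previewed, not committed."""
    return [
        "gcloud", "deployment-manager", "deployments", "update", deployment,
        "--config", config_file,
        "--preview",
    ]


# Hypothetical deployment name and config file.
print(shlex.join(preview_update_command("my-deployment", "config.yaml")))
```

A preview expands the template and resolves resource references without creating or changing the underlying resources, which is why it gives faster feedback than deploying to a second project.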

Question #23

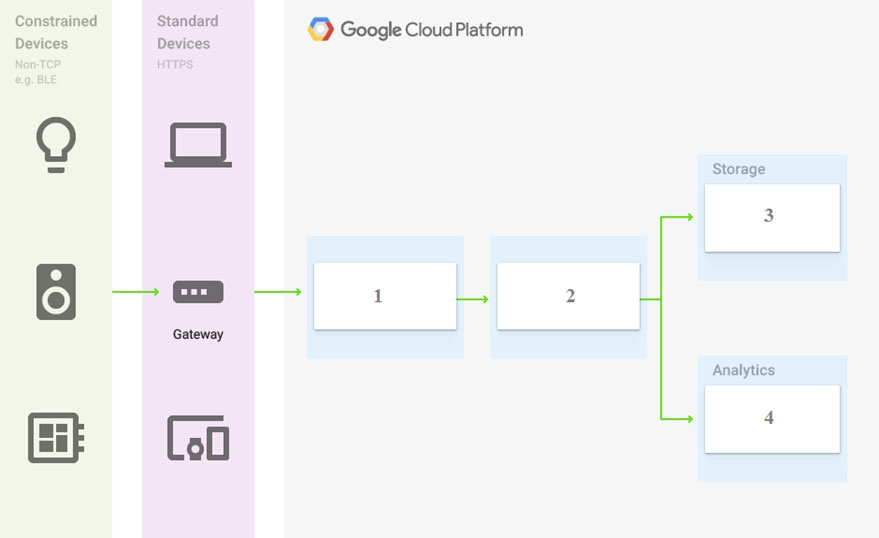

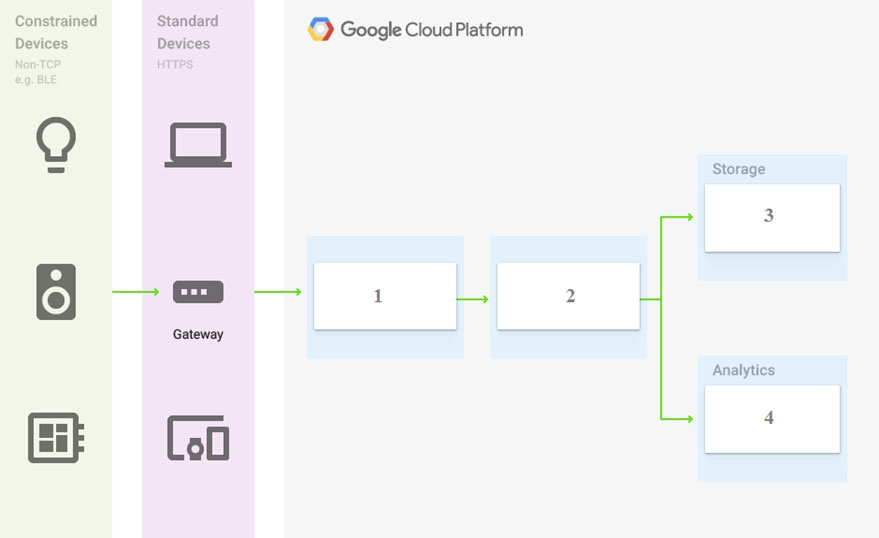

You are building a pipeline to process time-series data. Which Google Cloud Platform services should you put in boxes 1, 2, 3, and 4?

- A. Cloud Pub/Sub, Cloud Dataflow, Cloud Datastore, BigQuery

- B. Firebase Messages, Cloud Pub/Sub, Cloud Spanner, BigQuery

- C. Cloud Pub/Sub, Cloud Storage, BigQuery, Cloud Bigtable

- D. Cloud Pub/Sub, Cloud Dataflow, Cloud Bigtable, BigQuery (Most Voted)

Correct Answer:

D

Reference:

https://cloud.google.com/solutions/correlating-time-series-dataflow

Question #24

You have a project for your App Engine application that serves a development environment. The required testing has succeeded and you want to create a new project to serve as your production environment. What should you do?

- A. Use gcloud to create the new project, and then deploy your application to the new project. (Most Voted)

- B. Use gcloud to create the new project and to copy the deployed application to the new project.

- C. Create a Deployment Manager configuration file that copies the current App Engine deployment into a new project.

- D. Deploy your application again using gcloud and specify the project parameter with the new project name to create the new project.

Correct Answer:

A

GPT-4o - Answer

When you are ready to move from a development to a production environment for an App Engine application, the most effective approach is straightforward. First, use the `gcloud` command line tool to create the new project. Following the creation, deploy your application to this freshly established project. This ensures that your production environment is set up with a clean configuration, preventing any inconsistencies that could arise from trying to copy configurations or applications directly from the development environment.
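
The two steps described above might look like the following sketch, which only prints the commands. The project ID and App Engine region are hypothetical, and the `gcloud app create` step is an assumption beyond the answer text: a new project needs an App Engine application before the first deploy.

```python
import shlex


def production_rollout_commands(project_id, region):
    """Create a new project, initialise App Engine in it, then deploy the app."""
    return [
        ["gcloud", "projects", "create", project_id],
        ["gcloud", "app", "create", f"--project={project_id}", f"--region={region}"],
        # Run from the application directory that contains app.yaml.
        ["gcloud", "app", "deploy", f"--project={project_id}"],
    ]


# Hypothetical production project ID and region.
for command in production_rollout_commands("my-app-prod", "us-central"):
    print(shlex.join(command))
```

Passing `--project` explicitly keeps the deploy from landing in whichever project happens to be active in the current configuration.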

Question #25

You need to configure IAM access audit logging in BigQuery for external auditors. You want to follow Google-recommended practices. What should you do?

- A. Add the auditors group to the 'logging.viewer' and 'bigQuery.dataViewer' predefined IAM roles. (Most Voted)

- B. Add the auditors group to two new custom IAM roles.

- C. Add the auditor user accounts to the 'logging.viewer' and 'bigQuery.dataViewer' predefined IAM roles.

- D. Add the auditor user accounts to two new custom IAM roles.

Correct Answer:

A

Reference:

https://cloud.google.com/iam/docs/roles-audit-logging
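
To make option A concrete, here is a minimal sketch of the IAM policy bindings it implies, written as plain Python data. The group address is hypothetical. The role IDs use the spelling IAM expects, `roles/logging.viewer` and `roles/bigquery.dataViewer`, which differs in case from the option text.

```python
import json

AUDITOR_ROLES = ("roles/logging.viewer", "roles/bigquery.dataViewer")


def auditor_bindings(group_email):
    """One binding per predefined role, each granted to the group as a whole."""
    member = f"group:{group_email}"
    return [{"role": role, "members": [member]} for role in AUDITOR_ROLES]


# Hypothetical Google group that contains the external auditors.
policy_fragment = {"bindings": auditor_bindings("auditors@example.com")}
print(json.dumps(policy_fragment, indent=2))
```

Binding a group instead of individual accounts means auditors can be added or removed by changing group membership, with no further IAM policy edits.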

Question #26

You need to set up permissions for a set of Compute Engine instances to enable them to write data into a particular Cloud Storage bucket. You want to follow Google-recommended practices. What should you do?

- A. Create a service account with an access scope. Use the access scope 'https://www.googleapis.com/auth/devstorage.write_only'.

- B. Create a service account with an access scope. Use the access scope 'https://www.googleapis.com/auth/cloud-platform'.

- C. Create a service account and add it to the IAM role 'storage.objectCreator' for that bucket. (Most Voted)

- D. Create a service account and add it to the IAM role 'storage.objectAdmin' for that bucket.

Correct Answer:

C

GPT-4o - Answer

When setting up permissions for Compute Engine instances to write data into a Cloud Storage bucket, the IAM role approach in option C is the best fit. Granting 'storage.objectCreator' on that bucket follows the principle of least privilege: the role allows creating objects but not viewing, deleting, or overwriting them. The 'storage.objectAdmin' role in option D would also permit writes, but it adds full control over objects, including deletion, which the scenario does not require. Options A and B rely on access scopes rather than a bucket-level IAM role, and the 'cloud-platform' scope in B is far broader than writing to one bucket.
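
A minimal sketch of the least-privilege grant in option C follows. It builds the bucket-level binding command and does not run it. The service account name, project ID, and bucket name are hypothetical.

```python
import shlex


def service_account_email(name, project_id):
    """User-managed service accounts use this address format."""
    return f"{name}@{project_id}.iam.gserviceaccount.com"


def grant_object_creator_command(bucket, sa_email):
    """Bind roles/storage.objectCreator on a single bucket, not on the project."""
    return [
        "gcloud", "storage", "buckets", "add-iam-policy-binding", f"gs://{bucket}",
        f"--member=serviceAccount:{sa_email}",
        "--role=roles/storage.objectCreator",
    ]


# Hypothetical service account, project, and bucket.
email = service_account_email("vm-writer", "my-project")
print(shlex.join(grant_object_creator_command("ingest-bucket", email)))
```

Scoping the binding to the bucket keeps the instances from writing to any other bucket in the project.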

Question #27

You have sensitive data stored in three Cloud Storage buckets and have enabled data access logging. You want to verify activities for a particular user for these buckets, using the fewest possible steps. You need to verify the addition of metadata labels and which files have been viewed from those buckets. What should you do?

- A. Using the GCP Console, filter the Activity log to view the information. (Most Voted)

- B. Using the GCP Console, filter the Stackdriver log to view the information.

- C. View the bucket in the Storage section of the GCP Console.

- D. Create a trace in Stackdriver to view the information.

Correct Answer:

B

GPT-4o - Answer

The best approach to verify activities for a particular user across multiple Cloud Storage buckets is by filtering the Activity logs in the GCP Console. This allows you to efficiently review all user interactions, including metadata modifications and file access. The process involves navigating to the Activity log section, applying filters for specific buckets and the user in question. This method provides a comprehensive view of all relevant activities without the need for advanced configuration or additional tools, confirming the functionality of data access logging as outlined in Google Cloud's documentation on administrative and data access activities.

Question #28

You are the project owner of a GCP project and want to delegate control to colleagues to manage buckets and files in Cloud Storage. You want to follow Google-recommended practices. Which IAM roles should you grant your colleagues?

- A. Project Editor

- B. Storage Admin (Most Voted)

- C. Storage Object Admin

- D. Storage Object Creator

Correct Answer:

B

GPT-4o - Answer

Opting for the Storage Admin role in this case is spot on. This role provides comprehensive permissions, including full control over both buckets and objects within Google Cloud Storage. It's exactly what you need if you want your colleagues to manage everything within Cloud Storage without restrictions. Remember, granting broader access like this should always be done with a clear understanding of trust and responsibility due to the extensive capabilities it enables.

Question #29

You have an object in a Cloud Storage bucket that you want to share with an external company. The object contains sensitive data. You want access to the content to be removed after four hours. The external company does not have a Google account to which you can grant specific user-based access privileges. You want to use the most secure method that requires the fewest steps. What should you do?

- A. Create a signed URL with a four-hour expiration and share the URL with the company. (Most Voted)

- B. Set object access to 'public' and use object lifecycle management to remove the object after four hours.

- C. Configure the storage bucket as a static website and furnish the object's URL to the company. Delete the object from the storage bucket after four hours.

- D. Create a new Cloud Storage bucket specifically for the external company to access. Copy the object to that bucket. Delete the bucket after four hours have passed.

Correct Answer:

A

GPT-4o - Answer

Creating a signed URL for sharing an object from Cloud Storage is both efficient and secure. This method gives the external company time-limited access to the sensitive data, and the URL stops working automatically after four hours. Since the external company does not have a Google account, this approach removes the need for user-based access privileges and keeps the object private to everyone without the URL. It is a single step, without the overhead of managing public access or additional storage resources.
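
One way to produce such a URL is `gsutil signurl`, sketched below as a Python helper that builds the command and checks the duration first. The key file, bucket, and object names are hypothetical. The seven-day ceiling reflects the maximum lifetime that `gsutil signurl` accepts.

```python
import shlex

MAX_HOURS = 7 * 24  # signed URLs created this way cannot outlive seven days


def signurl_command(key_file, bucket, object_name, hours=4):
    """Build a gsutil signurl call for a URL that expires after `hours` hours."""
    if not 0 < hours <= MAX_HOURS:
        raise ValueError(f"hours must be between 1 and {MAX_HOURS}")
    return ["gsutil", "signurl", "-d", f"{hours}h", key_file,
            f"gs://{bucket}/{object_name}"]


# Hypothetical service account key, bucket, and object.
print(shlex.join(signurl_command("sa-key.json", "shared-reports", "q3-report.pdf")))
```

The object itself stays private; only holders of the generated URL can read it, and only until the expiration passes.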

Question #30

You are creating a Google Kubernetes Engine (GKE) cluster with a cluster autoscaler feature enabled. You need to make sure that each node of the cluster will run a monitoring pod that sends container metrics to a third-party monitoring solution. What should you do?

- A. Deploy the monitoring pod in a StatefulSet object.

- B. Deploy the monitoring pod in a DaemonSet object. (Most Voted)

- C. Reference the monitoring pod in a Deployment object.

- D. Reference the monitoring pod in a cluster initializer at the GKE cluster creation time.

Correct Answer:

B

GPT-4o - Answer

To ensure that each node in your GKE cluster has a monitoring pod operating, deploying these pods as a DaemonSet is optimal. DaemonSets are specifically tailored for such purposes, as they automatically deploy a copy of the pod to all current and future nodes within the cluster. This means consistent monitoring across all nodes, which is crucial for effective container metrics collection sent to your third-party monitoring solution. Other options like StatefulSets, Deployments, or cluster initializers do not specifically guarantee this node-wide pod distribution, making DaemonSet the most appropriate choice for this scenario.
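
For illustration, a minimal DaemonSet manifest can be generated as JSON, which `kubectl apply -f` accepts alongside YAML. The agent name and container image below are hypothetical stand-ins for the third-party monitoring agent.

```python
import json


def monitoring_daemonset(name, image):
    """Minimal apps/v1 DaemonSet: one pod per node, so there is no replica count."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "DaemonSet",
        "metadata": {"name": name},
        "spec": {
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }


# Hypothetical agent name and image.
manifest = monitoring_daemonset("monitoring-agent", "example.com/monitoring-agent:1.0")
print(json.dumps(manifest, indent=2))
```

Because the manifest has no `replicas` field, the pod count follows the node count: when the cluster autoscaler adds a node, the DaemonSet controller schedules an agent pod on it.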
