Cisco® 350-901 Exam Practice Questions (P. 1)

Question #1

A developer has created an application based on customer requirements. The customer needs to run the application with the minimum downtime.

Which design approach regarding high-availability applications, Recovery Time Objective, and Recovery Point Objective must be taken?

- A. Active/passive results in lower RTO and RPO. For RPO, data synchronization between the two data centers must be timely to allow seamless request flow.

- B. Active/passive results in lower RTO and RPO. For RPO, data synchronization between the two data centers does not need to be timely to allow seamless request flow.

- C. Active/active results in lower RTO and RPO. For RPO, data synchronization between the two data centers does not need to be timely to allow seamless request flow.

- D. Active/active results in lower RTO and RPO. For RPO, data synchronization between the two data centers must be timely to allow seamless request flow. (Most Voted)

Correct Answer:

A

GPT-4o - Answer

Answer A, which opts for an active/passive configuration with timely data synchronization between data centers, is indeed appropriate for minimizing downtime and achieving lower RTO and RPO. In an active/passive setup, one system is actively handling requests, while another stands by ready to take over seamlessly in case of system failure. This configuration, coupled with timely data synchronization, ensures that the passive system can become active with virtually no loss of data or operational delays, thereby significantly minimizing downtime and enhancing overall system resilience.

Question #2

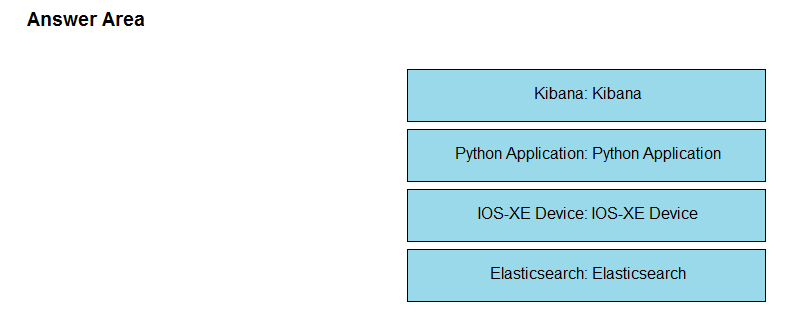

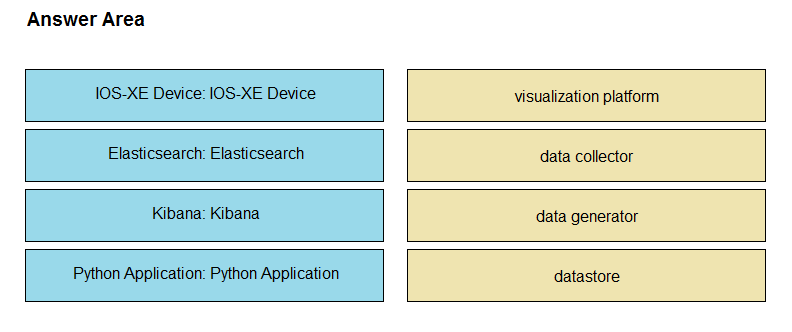

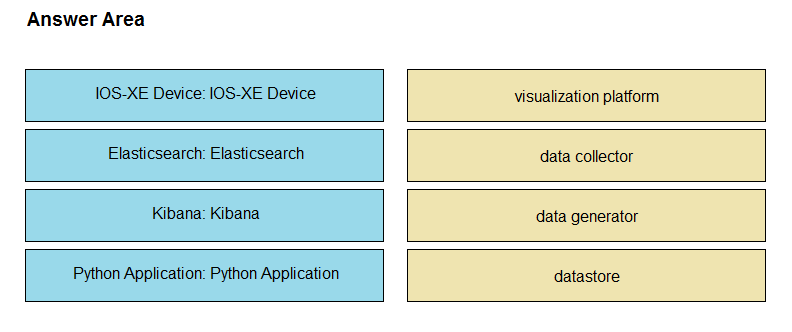

DRAG DROP -

An application is being built to collect and display telemetry streaming data. Drag and drop the elements of this stack from the left onto the correct element functions on the right.

Select and Place:

Question #3

A cloud-native project is being worked on in which all source code and dependencies are written in Python, Ruby, and/or JavaScript. A change in code triggers a notification to the CI/CD tool to run the CI/CD pipeline.

Which step should be omitted from the pipeline?

- A. Deploy the code to one or more environments, such as staging and/or production.

- B. Build one or more containers that package up code and all its dependencies.

- C. Compile code. (Most Voted)

- D. Run automated tests to validate the code.

Correct Answer:

A

GPT-4o - Answer

The correct choice is A, as deploying code to environments like staging or production should ideally be omitted when the code is still in the development phase and actively being worked on. Deploying partially completed code can lead to unexpected behaviors in production environments, undermining the stability and reliability of the application. Always ensure that deployments to these environments are made with thoroughly tested and completed code to maintain application integrity.

Question #4

Which two statements are considered best practices according to the 12-factor app methodology for application design? (Choose two.)

- A. Application code writes its event stream to stdout. (Most Voted)

- B. Application log streams are archived in multiple replicated databases.

- C. Application log streams are sent to log indexing and analysis systems. (Most Voted)

- D. Application code writes its event stream to specific log files.

- E. Log files are aggregated into a single file on individual nodes.

Correct Answer:

AD

GPT-4o - Answer

The 12-factor methodology treats logs as event streams, so the correct choices should favor writing to standard output (stdout) rather than managing specific log files or nodes. A is therefore clearly correct: each process writes its event stream directly to stdout and does not concern itself with how that stream is stored or processed locally. While user comments 3 and 5 align with the question's listed answer, the methodology advises against writing to specific log files (option D). This suggests reconsidering in favor of option C, which matches the practice of routing log streams to external indexing and analysis systems without the application managing them itself.
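Option A can be illustrated with a minimal Python sketch, assuming the standard `logging` module is used. The function name `build_logger` and the format string are hypothetical choices for illustration, not part of the exam material.

```python
import logging
import sys


def build_logger(name: str = "app") -> logging.Logger:
    """Return a logger that writes one event per line to stdout."""
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep events from being emitted twice via the root logger
    if not logger.handlers:  # avoid stacking handlers on repeated calls
        handler = logging.StreamHandler(sys.stdout)
        handler.setFormatter(
            logging.Formatter("%(asctime)s %(levelname)s %(name)s %(message)s")
        )
        logger.addHandler(handler)
    return logger


if __name__ == "__main__":
    log = build_logger()
    # The app only emits the event; routing and storage are left to the platform.
    log.info("order accepted id=%s", 42)
```

The application opens no log files here. Capturing the stream and forwarding it to an indexing system would be handled by the execution environment.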

Question #5

An organization manages a large cloud-deployed application that employs a microservices architecture. No notable issues occur with downtime because the services of this application are redundantly deployed over three or more data center regions. However, several times a week reports are received about application slowness. The container orchestration logs show faults in a variety of containers that cause them to fail and then spin up brand new.

Which action must be taken to improve the resiliency design of the application while maintaining current scale?

- A. Update the base image of the containers.

- B. Test the execution of the application with another cloud services platform.

- C. Increase the number of containers running per service.

- D. Add consistent "try/catch(exception)" clauses to the code. (Most Voted)

Correct Answer:

D

GPT-4o - Answer

Indeed, adding "try/catch(exception)" clauses within the application code allows handling unexpected or erroneous situations gracefully without causing the entire container to crash. This practice helps to maintain the application's performance by avoiding unnecessary restarts of containers, which consume resources and time, ultimately affecting the application's speed and reliability during peak loads. This targeted approach to error handling is crucial for improving application resiliency and stability, especially in a microservices architecture where independent services must operate reliably.
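As a rough illustration of option D, the Python equivalent of try/catch is `try`/`except`. The sketch below assumes a hypothetical `parse_quantity` helper inside a service; it handles an expected failure locally rather than letting the exception terminate the process and trigger a container restart.

```python
import logging

logger = logging.getLogger("orders")


def parse_quantity(raw, default: int = 0) -> int:
    """Parse a quantity field, falling back to a default on bad input."""
    try:
        return int(raw)
    except (TypeError, ValueError) as exc:
        # Handle the expected failure here so the worker keeps serving requests.
        logger.warning("invalid quantity %r: %s", raw, exc)
        return default
```

Catching only the specific exception types that are expected keeps genuine bugs visible instead of silently swallowing them.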

Question #6

How should a web application be designed to work on a platform where up to 1000 requests per second can be served?

- A. Use algorithms like random early detection to deny excessive requests.

- B. Set a per-user limit (for example, 5 requests/minute/user) and deny the requests from the users who have reached the limit. (Most Voted)

- C. Only 1000 user connections are allowed; further connections are denied so that all connected users can be served.

- D. All requests are saved and processed one by one so that all users can be served eventually.

Correct Answer:

D

GPT-4o - Answer

The correct answer, "D," pertaining to saving and queuing requests for sequential processing, suits scenarios where request volume exceeds processing capacity, thus ensuring all requests are eventually served without system overload. This approach is useful in managing spikes in demand by queuing excessive requests and processing them in an orderly fashion, promoting fairness and efficiency in resource utilization. It’s a practical solution for maintaining service availability and responsiveness without denying access outright, a critical attribute for applications experiencing variable load intensities.
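For comparison, the per-user limit described in option B can be sketched as a fixed-window counter. This is a minimal in-memory illustration only; the class name `PerUserRateLimiter` and its parameters are hypothetical, and a real deployment would likely need shared state across instances.

```python
import time
from typing import Callable, Dict, Tuple


class PerUserRateLimiter:
    """Fixed-window limiter: at most `limit` requests per user per window."""

    def __init__(self, limit: int = 5, window_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.limit = limit
        self.window = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        # user -> (window start time, requests counted in that window)
        self._state: Dict[str, Tuple[float, int]] = {}

    def allow(self, user: str) -> bool:
        now = self.clock()
        start, count = self._state.get(user, (now, 0))
        if now - start >= self.window:
            start, count = now, 0  # the previous window expired, start a new one
        if count >= self.limit:
            self._state[user] = (start, count)
            return False  # deny: this user reached the limit for the window
        self._state[user] = (start, count + 1)
        return True
```

A request handler would call `allow(user_id)` before doing any work and reject the request (for example with HTTP 429) when it returns `False`.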

Question #7

An organization manages a large cloud-deployed application that employs a microservices architecture across multiple data centers. Reports have been received about application slowness. The container orchestration logs show that faults have been raised in a variety of containers that caused them to fail and then spin up brand new instances.

Which two actions can improve the design of the application to identify the faults? (Choose two.)

- A. Automatically pull out the container that fails the most over a time period.

- B. Implement a tagging methodology that follows the application execution from service to service. (Most Voted)

- C. Add logging on exception and provide immediate notification. (Most Voted)

- D. Do a write to the datastore every time there is an application failure.

- E. Implement an SNMP logging system with alerts in case a network link is slow.

Correct Answer:

BC

GPT-4o - Answer

Absolutely, choices B and C enhance the observability of the application. Implementing a tagging methodology allows precise tracing of each component in the service flow, cutting down on troubleshooting and identification time when issues arise. Similarly, adding logging at the point of exception combined with immediate notification speeds up the response time to issues, ensuring they are addressed promptly. Both these strategies are crucial for maintaining the robustness and reliability of a microservices architecture.
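The two ideas can be combined in a small Python sketch: a correlation ID that travels with each request (option B) and a log entry written at the point of exception (option C). The header name `X-Correlation-ID` and the functions `with_correlation_id` and `handle` are assumptions made for illustration; the notification step is not shown.

```python
import logging
import uuid
from typing import Callable, Dict

logger = logging.getLogger("svc")

HEADER = "X-Correlation-ID"  # hypothetical header name used to tag a request


def with_correlation_id(headers: Dict[str, str]) -> Dict[str, str]:
    """Return a copy of the headers carrying a correlation ID.

    An incoming ID is reused so the same tag follows the request
    from service to service; otherwise a new one is generated.
    """
    tagged = dict(headers)
    tagged.setdefault(HEADER, str(uuid.uuid4()))
    return tagged


def handle(headers: Dict[str, str], work: Callable[[], object]) -> object:
    """Run `work`, logging any exception together with the request's tag."""
    tagged = with_correlation_id(headers)
    try:
        return work()
    except Exception:
        # Log with traceback at the point of failure, then re-raise.
        logger.exception("request failed correlation_id=%s", tagged[HEADER])
        raise
```

Passing the tagged headers on every outbound call lets log entries from different services be joined on the same ID when tracing a fault.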

Question #8

Which two situations are flagged by software tools designed for dependency checking in continuous integration environments, such as OWASP? (Choose two.)

- A. publicly disclosed vulnerabilities related to the included dependencies (Most Voted)

- B. mismatches in coding styles and conventions in the included dependencies

- C. incompatible licenses in the included dependencies

- D. test case failures introduced by bugs in the included dependencies

- E. buffer overflows to occur as the result of a combination of the included dependencies (Most Voted)

Correct Answer:

AE

GPT-4o - Answer

The correct selections "A" and "E" are well-supported by the functionality of OWASP Dependency Check and similar tools. These tools are specifically designed to identify publicly disclosed vulnerabilities in project dependencies (like those noted in option "A") and have capabilities related to finding certain high-confidence security flaws within dependencies, including buffer overflows (as referred to in "E"). It's key to recognize that while dependency checks are robust in spotting known vulnerabilities and high-risk security issues, they do not typically address issues like mismatched coding styles or incompatible licenses.

Question #9

A network operations team is using the cloud to automate some of their managed customer and branch locations. They require that all of their tooling be ephemeral by design and that the entire automation environment can be recreated without manual commands. Automation code and configuration state will be stored in git for change control and versioning. The engineering high-level plan is to use VMs in a cloud-provider environment, then configure open source tooling onto these VMs to poll, test, and configure the remote devices, as well as deploy the tooling itself.

Which configuration management and/or automation tooling is needed for this solution?

- A. Ansible

- B. Ansible and Terraform (Most Voted)

- C. NSO

- D. Terraform

- E. Ansible and NSO

Correct Answer:

B

GPT-4o - Answer

The correct choice, Ansible and Terraform, leverages the strengths of both tools in a complementary fashion. Terraform is ideal for setting up and managing the infrastructure on the cloud, like VMs, ensuring that the infrastructure itself is as transient as required by being able to stand it up and tear it down programmatically. On the other hand, Ansible excels in configuration management, making it suitable for setting up the software environment needed on those VMs to manage network operations efficiently. Together, they provide a robust solution for automated, ephemeral environments as needed by the network operations team.

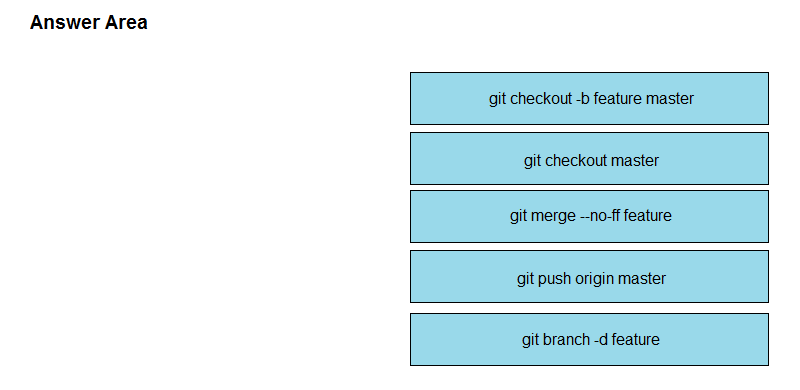

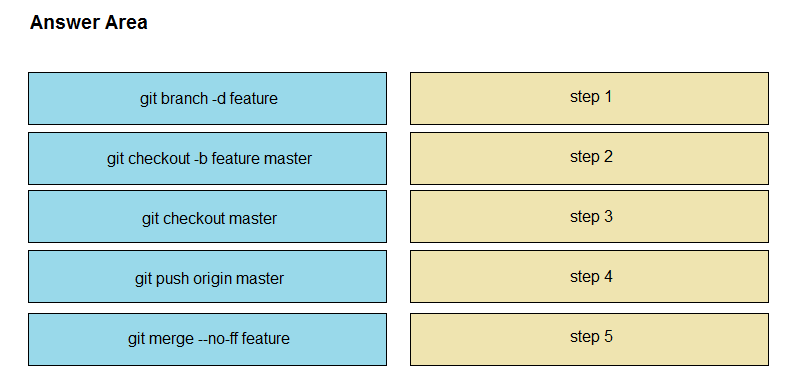

Question #10

DRAG DROP -

Drag and drop the git commands from the left into the correct order on the right to create a feature branch from the master and then incorporate that feature branch into the master.

Select and Place:
