Amazon AWS Certified Solutions Architect - Professional SAP-C02 Exam Practice Questions (P. 1)

Question #1

A company needs to architect a hybrid DNS solution. This solution will use an Amazon Route 53 private hosted zone for the domain cloud.example.com for the resources stored within VPCs.

The company has the following DNS resolution requirements:

On-premises systems should be able to resolve and connect to cloud.example.com.

All VPCs should be able to resolve cloud.example.com.

There is already an AWS Direct Connect connection between the on-premises corporate network and AWS Transit Gateway.

Which architecture should the company use to meet these requirements with the HIGHEST performance?

- A. Associate the private hosted zone to all the VPCs. Create a Route 53 inbound resolver in the shared services VPC. Attach all VPCs to the transit gateway and create forwarding rules in the on-premises DNS server for cloud.example.com that point to the inbound resolver. (Most Voted)

- B. Associate the private hosted zone to all the VPCs. Deploy an Amazon EC2 conditional forwarder in the shared services VPC. Attach all VPCs to the transit gateway and create forwarding rules in the on-premises DNS server for cloud.example.com that point to the conditional forwarder.

- C. Associate the private hosted zone to the shared services VPC. Create a Route 53 outbound resolver in the shared services VPC. Attach all VPCs to the transit gateway and create forwarding rules in the on-premises DNS server for cloud.example.com that point to the outbound resolver.

- D. Associate the private hosted zone to the shared services VPC. Create a Route 53 inbound resolver in the shared services VPC. Attach the shared services VPC to the transit gateway and create forwarding rules in the on-premises DNS server for cloud.example.com that point to the inbound resolver.

Correct Answer:

A

GPT-4o - Answer

The ideal architectural choice is A. Associating the Route 53 private hosted zone with all the VPCs lets each VPC resolve cloud.example.com through its own Route 53 Resolver, without sending queries through a central point. A Route 53 inbound resolver in the shared services VPC gives the on-premises DNS server a target for its cloud.example.com forwarding rules, and attaching all VPCs to the transit gateway lets on-premises systems connect to the resolved resources over the existing Direct Connect connection. Option D falls short because only the shared services VPC would be associated with the zone and attached to the transit gateway. Option C uses an outbound resolver, which forwards queries from VPCs to other networks rather than receiving them, and option B's EC2-based forwarder adds a component that the company has to manage and scale.
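The on-premises forwarding rule described above can be sketched as a toy model. This is not a DNS server or an AWS API call; it only illustrates how a conditional forwarder picks an upstream by domain suffix. The IP addresses and the names `pick_upstream`, `RULES`, and `DEFAULT_UPSTREAM` are hypothetical.

```python
# Toy model of conditional forwarding on an on-premises DNS server.
# All IP addresses below are made-up placeholders.

# Assumed IPs of the Route 53 inbound resolver endpoint in the shared services VPC.
INBOUND_ENDPOINT_IPS = ["10.0.1.10", "10.0.2.10"]

# Forwarding rules: domain suffix -> upstream resolver IPs.
RULES = {"cloud.example.com": INBOUND_ENDPOINT_IPS}

# Assumed default on-premises upstream for every other name.
DEFAULT_UPSTREAM = ["192.168.0.53"]


def pick_upstream(qname, forwarding_rules, default_upstream):
    """Return the upstream resolvers for qname using longest-suffix match."""
    name = qname.rstrip(".").lower()
    best_domain, best_targets = None, None
    for domain, targets in forwarding_rules.items():
        suffix = domain.rstrip(".").lower()
        # Match the domain itself or any name below it, on a label boundary.
        if name == suffix or name.endswith("." + suffix):
            if best_domain is None or len(suffix) > len(best_domain):
                best_domain, best_targets = suffix, targets
    return best_targets if best_targets is not None else default_upstream
```

With these rules, a query for `app.cloud.example.com` goes to the inbound endpoint IPs, while `www.example.com` stays on the default upstream.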

Question #2

A company is providing weather data over a REST-based API to several customers. The API is hosted by Amazon API Gateway and is integrated with different AWS Lambda functions for each API operation. The company uses Amazon Route 53 for DNS and has created a resource record of weather.example.com. The company stores data for the API in Amazon DynamoDB tables. The company needs a solution that will give the API the ability to fail over to a different AWS Region.

Which solution will meet these requirements?

- A. Deploy a new set of Lambda functions in a new Region. Update the API Gateway API to use an edge-optimized API endpoint with Lambda functions from both Regions as targets. Convert the DynamoDB tables to global tables.

- B. Deploy a new API Gateway API and Lambda functions in another Region. Change the Route 53 DNS record to a multivalue answer. Add both API Gateway APIs to the answer. Enable target health monitoring. Convert the DynamoDB tables to global tables.

- C. Deploy a new API Gateway API and Lambda functions in another Region. Change the Route 53 DNS record to a failover record. Enable target health monitoring. Convert the DynamoDB tables to global tables. (Most Voted)

- D. Deploy a new API Gateway API in a new Region. Change the Lambda functions to global functions. Change the Route 53 DNS record to a multivalue answer. Add both API Gateway APIs to the answer. Enable target health monitoring. Convert the DynamoDB tables to global tables.

Correct Answer:

C

GPT-4o - Answer

The correct solution is C: deploy a new API Gateway API and Lambda functions in a second Region and let Amazon Route 53 manage DNS failover. Changing the Route 53 record for weather.example.com to a failover record and enabling target health monitoring redirects traffic to the secondary Region automatically when the primary Region becomes unhealthy. Converting the DynamoDB tables to global tables replicates the data across Regions, so the API in either Region works against a local replica. A multivalue answer record (options B and D) returns several healthy endpoints at once rather than a primary with a standby, and Lambda has no "global functions" feature.
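As a rough illustration, the failover records could be expressed as a Route 53 change batch like the one below. The dictionary follows the shape that the Route 53 `ChangeResourceRecordSets` API accepts, but every DNS name, hosted zone ID, and Region here is a placeholder, and the helper `build_failover_change_batch` is a hypothetical name, not part of any SDK.

```python
import json


def build_failover_change_batch(record_name, primary, secondary):
    """Build a change batch with one PRIMARY and one SECONDARY alias record."""

    def record(role, target):
        return {
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": record_name,
                "Type": "A",
                "SetIdentifier": f"{role.lower()}-{target['region']}",
                "Failover": role,  # "PRIMARY" or "SECONDARY"
                "AliasTarget": {
                    "HostedZoneId": target["hosted_zone_id"],
                    "DNSName": target["dns_name"],
                    # Corresponds to "enable target health monitoring".
                    "EvaluateTargetHealth": True,
                },
            },
        }

    return {"Changes": [record("PRIMARY", primary), record("SECONDARY", secondary)]}


# Placeholder targets; real values come from each Region's API Gateway custom domain.
PRIMARY = {"region": "us-east-1", "hosted_zone_id": "ZPRIMARYEXAMPLE",
           "dns_name": "d-primary.execute-api.us-east-1.amazonaws.com"}
SECONDARY = {"region": "us-west-2", "hosted_zone_id": "ZSECONDARYEXAMPLE",
             "dns_name": "d-secondary.execute-api.us-west-2.amazonaws.com"}

batch = build_failover_change_batch("weather.example.com", PRIMARY, SECONDARY)
print(json.dumps(batch, indent=2))
```

Both records share the same name and type; the `Failover` value and `SetIdentifier` are what distinguish the primary from the standby.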

Question #3

A company uses AWS Organizations with a single OU named Production to manage multiple accounts. All accounts are members of the Production OU. Administrators use deny list SCPs in the root of the organization to manage access to restricted services.

The company recently acquired a new business unit and invited the new unit’s existing AWS account to the organization. Once onboarded, the administrators of the new business unit discovered that they are not able to update existing AWS Config rules to meet the company’s policies.

Which option will allow administrators to make changes and continue to enforce the current policies without introducing additional long-term maintenance?

- A. Remove the organization’s root SCPs that limit access to AWS Config. Create AWS Service Catalog products for the company’s standard AWS Config rules and deploy them throughout the organization, including the new account.

- B. Create a temporary OU named Onboarding for the new account. Apply an SCP to the Onboarding OU to allow AWS Config actions. Move the new account to the Production OU when adjustments to AWS Config are complete.

- C. Convert the organization’s root SCPs from deny list SCPs to allow list SCPs to allow the required services only. Temporarily apply an SCP to the organization’s root that allows AWS Config actions for principals only in the new account.

- D. Create a temporary OU named Onboarding for the new account. Apply an SCP to the Onboarding OU to allow AWS Config actions. Move the organization’s root SCP to the Production OU. Move the new account to the Production OU when adjustments to AWS Config are complete. (Most Voted)

Correct Answer:

B

GPT-4o - Answer

The listed answer, B, uses a transitional setup: a temporary Onboarding OU holds the new account while its administrators update the AWS Config rules, and the account moves to the Production OU once the rules meet company policy. This avoids converting the root SCPs to allow list SCPs (option C) and avoids the ongoing maintenance of Service Catalog products (option A). One caveat applies: an SCP attached to the organization’s root is inherited by every OU, and an explicit deny cannot be overridden by an allow SCP attached lower in the hierarchy. That is why the most-voted option, D, also moves the root SCP to the Production OU, so that the Onboarding OU can actually permit AWS Config actions.
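The interaction between a root-level deny and a temporary OU can be sketched with a simplified model of SCP evaluation. The model assumes only two documented rules: an explicit deny at any level wins, and an action must be allowed at every level from the root down to the account. The SCP contents (`DENY_CONFIG` blocking all `config:*` actions) and the function name `scp_permits` are illustrative assumptions; the question does not show the real policies.

```python
from fnmatch import fnmatchcase

# Simplified SCPs: one effect and a list of action patterns each (assumed shapes).
FULL_ACCESS = {"effect": "Allow", "actions": ["*"]}
DENY_CONFIG = {"effect": "Deny", "actions": ["config:*"]}
ALLOW_CONFIG = {"effect": "Allow", "actions": ["config:*"]}


def _matches(action, scp):
    """True if the action matches any pattern in the SCP (case-insensitive)."""
    return any(fnmatchcase(action.lower(), p.lower()) for p in scp["actions"])


def scp_permits(action, levels):
    """levels: SCP lists attached at each level, ordered root -> OU -> account."""
    for scps in levels:
        # An explicit deny at any level blocks the action outright.
        if any(s["effect"] == "Deny" and _matches(action, s) for s in scps):
            return False
        # Every level must also contain an allow that covers the action.
        if not any(s["effect"] == "Allow" and _matches(action, s) for s in scps):
            return False
    return True


# Deny list SCP at the root, allow SCP on the Onboarding OU.
root_with_deny = [FULL_ACCESS, DENY_CONFIG]
onboarding_ou = [FULL_ACCESS, ALLOW_CONFIG]

# Deny list SCP moved from the root to the Production OU.
root_plain = [FULL_ACCESS]
production_ou = [FULL_ACCESS, DENY_CONFIG]
```

In this model, `scp_permits("config:PutConfigRule", [root_with_deny, onboarding_ou])` is `False`, while `scp_permits("config:PutConfigRule", [root_plain, [FULL_ACCESS]])` is `True` and the Production OU path stays blocked.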

Question #4

A company is running a two-tier web-based application in an on-premises data center. The application layer consists of a single server running a stateful application. The application connects to a PostgreSQL database running on a separate server. The application’s user base is expected to grow significantly, so the company is migrating the application and database to AWS. The solution will use Amazon Aurora PostgreSQL, Amazon EC2 Auto Scaling, and Elastic Load Balancing.

Which solution will provide a consistent user experience that will allow the application and database tiers to scale?

- A. Enable Aurora Auto Scaling for Aurora Replicas. Use a Network Load Balancer with the least outstanding requests routing algorithm and sticky sessions enabled.

- B. Enable Aurora Auto Scaling for Aurora writers. Use an Application Load Balancer with the round robin routing algorithm and sticky sessions enabled.

- C. Enable Aurora Auto Scaling for Aurora Replicas. Use an Application Load Balancer with the round robin routing and sticky sessions enabled. (Most Voted)

- D. Enable Aurora Scaling for Aurora writers. Use a Network Load Balancer with the least outstanding requests routing algorithm and sticky sessions enabled.

Correct Answer:

C

GPT-4o - Answer

Option C scales both tiers. Aurora Auto Scaling adds and removes Aurora Replicas, which serve read traffic; it does not scale writers, which rules out options B and D. An Application Load Balancer (ALB) with round-robin routing and sticky sessions spreads new users across instances while keeping each user on the instance that holds their session state, which the stateful application needs for a consistent user experience. The Network Load Balancer (NLB) options do not fit because the least outstanding requests algorithm is an ALB feature; an NLB selects targets with a flow hash. Option C is therefore the most complete solution for this scenario.
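The combination of round-robin routing and sticky sessions can be sketched as a small in-memory model. This is an illustration of the routing idea only, not how an ALB is implemented; the class name `StickyRoundRobin` and the instance IDs are hypothetical.

```python
import itertools


class StickyRoundRobin:
    """Toy load balancer: round robin for new sessions, sticky for known ones."""

    def __init__(self, targets):
        self._cycle = itertools.cycle(targets)
        self._sessions = {}  # session ID -> target that holds the session state

    def route(self, session_id=None):
        # A known session always returns to the same target.
        if session_id is not None and session_id in self._sessions:
            return self._sessions[session_id]
        # New sessions (or requests without a session) take the next target.
        target = next(self._cycle)
        if session_id is not None:
            self._sessions[session_id] = target
        return target


lb = StickyRoundRobin(["i-aaa", "i-bbb"])
first = lb.route("user-1")   # takes the first target
second = lb.route("user-2")  # takes the second target
again = lb.route("user-1")   # returns to the target chosen for user-1
```

A stateful application depends on the last behaviour: without stickiness, `user-1` could land on an instance that has no record of the session.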

Question #5

A company uses a service to collect metadata from applications that the company hosts on premises. Consumer devices such as TVs and internet radios access the applications. Many older devices do not support certain HTTP headers and exhibit errors when these headers are present in responses. The company has configured an on-premises load balancer to remove the unsupported headers from responses sent to older devices, which the company identified by the User-Agent headers.

The company wants to migrate the service to AWS, adopt serverless technologies, and retain the ability to support the older devices. The company has already migrated the applications into a set of AWS Lambda functions.

Which solution will meet these requirements?

- A. Create an Amazon CloudFront distribution for the metadata service. Create an Application Load Balancer (ALB). Configure the CloudFront distribution to forward requests to the ALB. Configure the ALB to invoke the correct Lambda function for each type of request. Create a CloudFront function to remove the problematic headers based on the value of the User-Agent header. (Most Voted)

- B. Create an Amazon API Gateway REST API for the metadata service. Configure API Gateway to invoke the correct Lambda function for each type of request. Modify the default gateway responses to remove the problematic headers based on the value of the User-Agent header.

- C. Create an Amazon API Gateway HTTP API for the metadata service. Configure API Gateway to invoke the correct Lambda function for each type of request. Create a response mapping template to remove the problematic headers based on the value of the User-Agent. Associate the response data mapping with the HTTP API.

- D. Create an Amazon CloudFront distribution for the metadata service. Create an Application Load Balancer (ALB). Configure the CloudFront distribution to forward requests to the ALB. Configure the ALB to invoke the correct Lambda function for each type of request. Create a Lambda@Edge function that will remove the problematic headers in response to viewer requests based on the value of the User-Agent header.

Correct Answer:

B

GPT-4o - Answer

A commenter makes a valid point about choosing a serverless solution: an API Gateway REST API or HTTP API keeps the architecture fully serverless, whereas an Application Load Balancer (ALB) behind Amazon CloudFront could introduce non-serverless components. The core requirement, however, is removing headers for compatibility with older devices, which are identified by the User-Agent header. The listed answer, B, handles this in the REST API by modifying the default gateway responses to remove the problematic headers, with no additional components. The most-voted answer, A, instead removes the headers in a CloudFront function in front of the ALB.
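Whichever service applies it, the header-removal rule itself is simple. The sketch below shows the logic in plain Python, independent of API Gateway or CloudFront; the User-Agent markers, header names, and the function name `strip_headers_for_legacy` are all hypothetical, since the question does not name the affected devices or headers.

```python
# Assumed identifiers for older devices and the headers they cannot handle.
LEGACY_AGENT_MARKERS = ["OldTV/1.", "InternetRadio/2."]
PROBLEM_HEADERS = ["Strict-Transport-Security", "Content-Security-Policy"]


def strip_headers_for_legacy(user_agent, response_headers,
                             legacy_markers=LEGACY_AGENT_MARKERS,
                             problem_headers=PROBLEM_HEADERS):
    """Return a copy of the response headers, minus problem headers for old devices."""
    agent = (user_agent or "").lower()
    is_legacy = any(marker.lower() in agent for marker in legacy_markers)
    if not is_legacy:
        return dict(response_headers)
    # Header names are case-insensitive, so compare in lower case.
    blocked = {name.lower() for name in problem_headers}
    return {k: v for k, v in response_headers.items() if k.lower() not in blocked}


headers = {"Content-Type": "application/json",
           "strict-transport-security": "max-age=31536000"}
legacy_view = strip_headers_for_legacy("OldTV/1.2 (firmware 7)", headers)
modern_view = strip_headers_for_legacy("Mozilla/5.0", headers)
```

Older devices receive only `Content-Type`, while other clients receive the headers unchanged.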

Question #6

A retail company needs to provide a series of data files to another company, which is its business partner. These files are saved in an Amazon S3 bucket under Account A, which belongs to the retail company. The business partner company wants one of its IAM users, User_DataProcessor, to access the files from its own AWS account (Account B).

Which combination of steps must the companies take so that User_DataProcessor can access the S3 bucket successfully? (Choose two.)

- ATurn on the cross-origin resource sharing (CORS) feature for the S3 bucket in Account A.

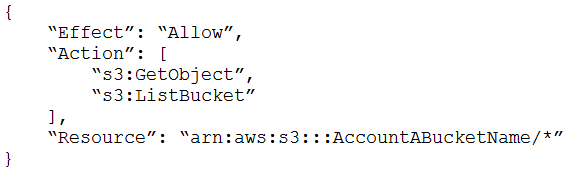

- BIn Account A, set the S3 bucket policy to the following:

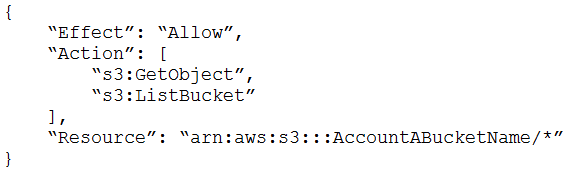

- CIn Account A, set the S3 bucket policy to the following:

Most Voted

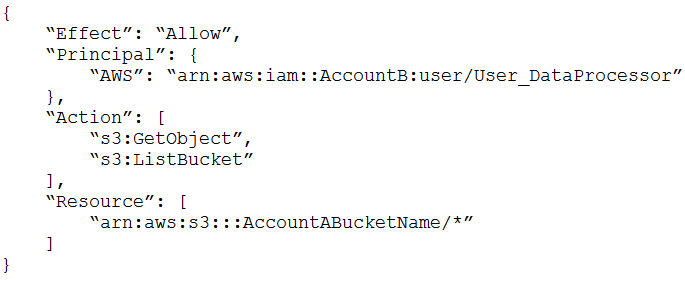

Most Voted - DIn Account B, set the permissions of User_DataProcessor to the following:

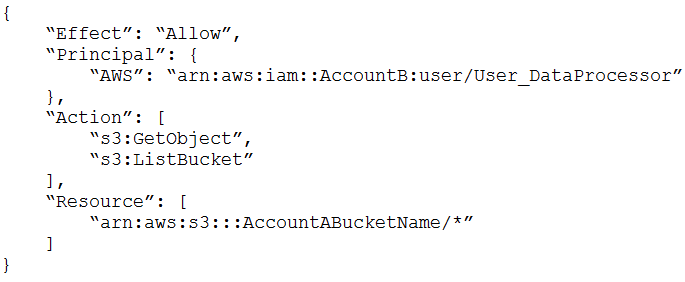

- EIn Account B, set the permissions of User_DataProcessor to the following:

Correct Answer:

D

GPT-4o - Answer

Option D is essential because it grants User_DataProcessor explicit permissions in Account B. This identity-based policy lets the user perform the necessary actions, such as GetObject and ListBucket, on the S3 bucket in Account A, which the policy references by its ARN. It grants S3 permissions at the user level without adding unnecessary ones. Cross-account access also needs a matching grant on the resource side: the bucket policy in Account A (as in option C) must allow User_DataProcessor as a principal. The request succeeds only when both policies allow it.
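Because the policy images are not reproduced here, the following is only a sketch of what such a pair of policies typically looks like, not the policies from the answer options. The bucket name, the account ID, and the helper `build_cross_account_policies` are placeholders chosen for illustration.

```python
import json


def build_cross_account_policies(bucket, user_arn):
    """Return (bucket_policy, user_policy) for read-only cross-account access."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    statements = [
        # Listing applies to the bucket itself.
        {"Effect": "Allow", "Action": "s3:ListBucket", "Resource": bucket_arn},
        # Reading applies to the objects inside the bucket.
        {"Effect": "Allow", "Action": "s3:GetObject", "Resource": f"{bucket_arn}/*"},
    ]
    # Resource-based policy in Account A: names the Account B user as principal.
    bucket_policy = {
        "Version": "2012-10-17",
        "Statement": [dict(s, Principal={"AWS": user_arn}) for s in statements],
    }
    # Identity-based policy in Account B: attached to the user, so no Principal.
    user_policy = {
        "Version": "2012-10-17",
        "Statement": [dict(s) for s in statements],
    }
    return bucket_policy, user_policy


# Placeholder account ID and bucket name.
USER_ARN = "arn:aws:iam::111122223333:user/User_DataProcessor"
bucket_policy, user_policy = build_cross_account_policies("example-bucket", USER_ARN)
print(json.dumps(bucket_policy, indent=2))
print(json.dumps(user_policy, indent=2))
```

The two documents differ only in the `Principal` element, which reflects the split of responsibility: Account A decides who may reach the bucket, and Account B decides what its own user may do.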

Question #7

A company is running a traditional web application on Amazon EC2 instances. The company needs to refactor the application as microservices that run on containers. Separate versions of the application exist in two distinct environments: production and testing. Load for the application is variable, but the minimum load and the maximum load are known. A solutions architect needs to design the updated application with a serverless architecture that minimizes operational complexity.

Which solution will meet these requirements MOST cost-effectively?

- A. Upload the container images to AWS Lambda as functions. Configure a concurrency limit for the associated Lambda functions to handle the expected peak load. Configure two separate Lambda integrations within Amazon API Gateway: one for production and one for testing.

- B. Upload the container images to Amazon Elastic Container Registry (Amazon ECR). Configure two auto scaled Amazon Elastic Container Service (Amazon ECS) clusters with the Fargate launch type to handle the expected load. Deploy tasks from the ECR images. Configure two separate Application Load Balancers to direct traffic to the ECS clusters. (Most Voted)

- C. Upload the container images to Amazon Elastic Container Registry (Amazon ECR). Configure two auto scaled Amazon Elastic Kubernetes Service (Amazon EKS) clusters with the Fargate launch type to handle the expected load. Deploy tasks from the ECR images. Configure two separate Application Load Balancers to direct traffic to the EKS clusters.

- D. Upload the container images to AWS Elastic Beanstalk. In Elastic Beanstalk, create separate environments and deployments for production and testing. Configure two separate Application Load Balancers to direct traffic to the Elastic Beanstalk deployments.

Correct Answer:

B

GPT-4o - Answer

The correct choice, Option B, leverages Amazon ECS with the Fargate launch type, ideally aligning with the company's requirement for a serverless architecture that efficiently supports microservices. Fargate's serverless nature facilitates scaling without needing to manage servers. This strategy reduces operational complexity inherent in managing server-level details while allowing separate environments for production and testing through different ECS clusters and load balancers, streamlining both deployment and management. Simultaneously, this design optimizes cost by scaling resources based on demand, thus avoiding unnecessary expenses associated with overprovisioning or underutilization.

Question #8

A company has a multi-tier web application that runs on a fleet of Amazon EC2 instances behind an Application Load Balancer (ALB). The instances are in an Auto Scaling group. The ALB and the Auto Scaling group are replicated in a backup AWS Region. The minimum value and the maximum value for the Auto Scaling group are set to zero. An Amazon RDS Multi-AZ DB instance stores the application’s data. The DB instance has a read replica in the backup Region. The application presents an endpoint to end users by using an Amazon Route 53 record.

The company needs to reduce its RTO to less than 15 minutes by giving the application the ability to automatically fail over to the backup Region. The company does not have a large enough budget for an active-active strategy.

What should a solutions architect recommend to meet these requirements?

- A. Reconfigure the application’s Route 53 record with a latency-based routing policy that load balances traffic between the two ALBs. Create an AWS Lambda function in the backup Region to promote the read replica and modify the Auto Scaling group values. Create an Amazon CloudWatch alarm that is based on the HTTPCode_Target_5XX_Count metric for the ALB in the primary Region. Configure the CloudWatch alarm to invoke the Lambda function.

- B. Create an AWS Lambda function in the backup Region to promote the read replica and modify the Auto Scaling group values. Configure Route 53 with a health check that monitors the web application and sends an Amazon Simple Notification Service (Amazon SNS) notification to the Lambda function when the health check status is unhealthy. Update the application’s Route 53 record with a failover policy that routes traffic to the ALB in the backup Region when a health check failure occurs. (Most Voted)

- C. Configure the Auto Scaling group in the backup Region to have the same values as the Auto Scaling group in the primary Region. Reconfigure the application’s Route 53 record with a latency-based routing policy that load balances traffic between the two ALBs. Remove the read replica. Replace the read replica with a standalone RDS DB instance. Configure Cross-Region Replication between the RDS DB instances by using snapshots and Amazon S3.

- D. Configure an endpoint in AWS Global Accelerator with the two ALBs as equal weighted targets. Create an AWS Lambda function in the backup Region to promote the read replica and modify the Auto Scaling group values. Create an Amazon CloudWatch alarm that is based on the HTTPCode_Target_5XX_Count metric for the ALB in the primary Region. Configure the CloudWatch alarm to invoke the Lambda function.

Correct Answer:

B

GPT-4o - Answer

Option B is indeed the optimal strategy for a company requiring a cost-effective method to maintain an RTO of less than 15 minutes. This solution's architecture uses Route 53's health checks to monitor the primary region's health, and upon detection of downtime, Route 53 initiates a failover to the backup region. The incorporated AWS Lambda function plays a crucial role by promoting the read replica to primary and adjusting the Auto Scaling parameters appropriately, ensuring the backup becomes fully operational rapidly. This method delivers swift recovery without the need for constant resource allocation characteristic of an active-active setup, aligning perfectly with the company’s budget restrictions.
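The two actions the Lambda function performs can be sketched as follows. The clients are passed in so the logic can be exercised without AWS access; in a real function they would be boto3 `rds` and `autoscaling` clients, whose `promote_read_replica` and `update_auto_scaling_group` operations take the keyword arguments used here. The resource names, sizes, and the `RecordingClient` stand-in are hypothetical.

```python
class RecordingClient:
    """Stand-in for a boto3 client that records calls instead of making them."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        # Any method name is accepted and recorded with its keyword arguments.
        def _record(**kwargs):
            self.calls.append((name, kwargs))
            return {}
        return _record


def handle_failover(rds_client, autoscaling_client, replica_id, asg_name,
                    min_size, max_size):
    """Promote the backup Region's read replica and scale out the backup fleet."""
    # Step 1: turn the cross-Region read replica into a standalone writable instance.
    rds_client.promote_read_replica(DBInstanceIdentifier=replica_id)
    # Step 2: raise the Auto Scaling group from zero so instances launch behind the ALB.
    autoscaling_client.update_auto_scaling_group(
        AutoScalingGroupName=asg_name,
        MinSize=min_size,
        MaxSize=max_size,
        DesiredCapacity=min_size,
    )


# Dry run with recording clients and placeholder names.
rds, asg = RecordingClient(), RecordingClient()
handle_failover(rds, asg, "app-db-replica", "app-asg-backup", min_size=2, max_size=6)
```

Route 53 handles the traffic switch through the failover record, so the function only has to make the backup Region ready to serve.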

Question #9

A company is hosting a critical application on a single Amazon EC2 instance. The application uses an Amazon ElastiCache for Redis single-node cluster for an in-memory data store. The application uses an Amazon RDS for MariaDB DB instance for a relational database. For the application to function, each piece of the infrastructure must be healthy and must be in an active state.

A solutions architect needs to improve the application's architecture so that the infrastructure can automatically recover from failure with the least possible downtime.

Which combination of steps will meet these requirements? (Choose three.)

- A. Use an Elastic Load Balancer to distribute traffic across multiple EC2 instances. Ensure that the EC2 instances are part of an Auto Scaling group that has a minimum capacity of two instances. (Most Voted)

- B. Use an Elastic Load Balancer to distribute traffic across multiple EC2 instances. Ensure that the EC2 instances are configured in unlimited mode.

- C. Modify the DB instance to create a read replica in the same Availability Zone. Promote the read replica to be the primary DB instance in failure scenarios.

- D. Modify the DB instance to create a Multi-AZ deployment that extends across two Availability Zones. (Most Voted)

- E. Create a replication group for the ElastiCache for Redis cluster. Configure the cluster to use an Auto Scaling group that has a minimum capacity of two instances.

- F. Create a replication group for the ElastiCache for Redis cluster. Enable Multi-AZ on the cluster. (Most Voted)

Correct Answer:

ADF

GPT-4o - Answer

To significantly enhance the reliability and availability of the application, implementing an Elastic Load Balancer to route traffic across multiple Amazon EC2 instances is essential. This approach should be complemented by configuring these instances to be part of an Auto Scaling group with at least two instances to handle potential failures seamlessly.

Further fortifying the architecture with a Multi-AZ deployment for the Amazon RDS for MariaDB ensures that database operations are uninterrupted during zone disturbances by providing a ready-to-use replica in another Availability Zone.

Lastly, provisioning a replication group with Multi-AZ enabled for the Amazon ElastiCache for Redis cluster facilitates continued accessibility and operation of the in-memory data store, even with node or zone failures. This setup is crucial for maintaining high availability and minimizing service interruptions.

Question #10

A retail company is operating its ecommerce application on AWS. The application runs on Amazon EC2 instances behind an Application Load Balancer (ALB). The company uses an Amazon RDS DB instance as the database backend. Amazon CloudFront is configured with one origin that points to the ALB. Static content is cached. Amazon Route 53 is used to host all public zones.

After an update of the application, the ALB occasionally returns a 502 status code (Bad Gateway) error. The root cause is malformed HTTP headers that are returned to the ALB. The webpage returns successfully when a solutions architect reloads the webpage immediately after the error occurs.

While the company is working on the problem, the solutions architect needs to provide a custom error page instead of the standard ALB error page to visitors.

Which combination of steps will meet this requirement with the LEAST amount of operational overhead? (Choose two.)

- A. Create an Amazon S3 bucket. Configure the S3 bucket to host a static webpage. Upload the custom error pages to Amazon S3. (Most Voted)

- B. Create an Amazon CloudWatch alarm to invoke an AWS Lambda function if the ALB health check response Target.FailedHealthChecks is greater than 0. Configure the Lambda function to modify the forwarding rule at the ALB to point to a publicly accessible web server.

- C. Modify the existing Amazon Route 53 records by adding health checks. Configure a fallback target if the health check fails. Modify DNS records to point to a publicly accessible webpage.

- D. Create an Amazon CloudWatch alarm to invoke an AWS Lambda function if the ALB health check response Elb.InternalError is greater than 0. Configure the Lambda function to modify the forwarding rule at the ALB to point to a publicly accessible web server.

- E. Add a custom error response by configuring a CloudFront custom error page. Modify DNS records to point to a publicly accessible web page. (Most Voted)

Correct Answer:

CE

GPT-4o - Answer

The correct choices, C and E, provide a strategic and low-overhead method for handling 502 errors by leveraging existing AWS services directly related to traffic and error management. Option E utilizes CloudFront's built-in capability to display custom error pages, which aligns seamlessly with its role as a content delivery network. Simultaneously, option C employs Route 53's health checks and DNS failover techniques to redirect users effectively during incident occurrences without altering the existing infrastructure drastically. These methods are preferred over others that might introduce unnecessary complexity and management overhead, such as setting up new Lambda functions or using additional S3 configurations for error handling.
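For option E, the CloudFront side of the configuration can be sketched as the `CustomErrorResponses` fragment of a distribution config. The field names follow the CloudFront API; the page path, the TTL value, and the helper name `custom_error_responses` are assumptions for illustration.

```python
import json


def custom_error_responses(error_code, page_path, ttl_seconds):
    """Build the CustomErrorResponses fragment for a CloudFront distribution config."""
    return {
        "Quantity": 1,
        "Items": [
            {
                "ErrorCode": error_code,            # status code returned by the origin
                "ResponsePagePath": page_path,      # custom page served to the viewer
                "ResponseCode": str(error_code),    # status code sent to the viewer
                "ErrorCachingMinTTL": ttl_seconds,  # how long CloudFront caches the error
            }
        ],
    }


# Placeholder path and a short TTL, since a reload usually succeeds right away.
fragment = custom_error_responses(502, "/errors/502.html", ttl_seconds=5)
print(json.dumps(fragment, indent=2))
```

A short caching TTL suits this scenario because the question notes that the page loads successfully when reloaded immediately after the error.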
